WebGL Terrain Rendering in Trigger Rally - Part 1

In this series of posts, I’ll talk about the terrain rendering techniques used in the WebGL game Trigger Rally.

An Interesting Problem

Rendering large, detailed terrains efficiently is an interesting problem in computer graphics and games.

Doing it with WebGL makes it even more interesting. WebGL is essentially OpenGL ES for the browser: it provides access to the power of the GPU, but with some constraints. Importantly, CPU-side data processing with JavaScript is slower than in a native app, and transferring data to the GPU involves more security checks than in a native app, to keep Web users safe. However, once the data is on the GPU, drawing with it is fast.

A great way to maximize GPU work and minimize CPU work is to load static data (vertices, indices and textures) onto the GPU at start-up, and render it at runtime with as few draw calls as possible.
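As a rough sketch of that idea, the snippet below builds a flat grid once and uploads it with `STATIC_DRAW`. The helper names `buildGrid` and `uploadStatic` are made up for illustration and are not taken from Trigger Rally; `gl` is assumed to be a `WebGLRenderingContext` that the caller already created.

```javascript
// Build a flat grid of cells x cells quads once, at start-up (hypothetical helper).
// With 16-bit indices the grid is limited to 255 cells per side (256 * 256 vertices).
function buildGrid(cells) {
  const side = cells + 1;                                // vertices per side
  const positions = new Float32Array(side * side * 2);   // x, z per vertex
  const indices = new Uint16Array(cells * cells * 6);    // two triangles per cell
  let p = 0;
  for (let z = 0; z < side; z++) {
    for (let x = 0; x < side; x++) {
      positions[p++] = x;
      positions[p++] = z;
    }
  }
  let i = 0;
  for (let z = 0; z < cells; z++) {
    for (let x = 0; x < cells; x++) {
      const a = z * side + x;   // corner at (x, z)
      const b = a + 1;          // corner at (x + 1, z)
      const c = a + side;       // corner at (x, z + 1)
      const d = c + 1;          // corner at (x + 1, z + 1)
      indices[i++] = a; indices[i++] = c; indices[i++] = b;
      indices[i++] = b; indices[i++] = c; indices[i++] = d;
    }
  }
  return { positions, indices };
}

// Upload the grid to the GPU once. `gl` is a WebGLRenderingContext (assumed).
function uploadStatic(gl, grid) {
  const vbo = gl.createBuffer();
  gl.bindBuffer(gl.ARRAY_BUFFER, vbo);
  gl.bufferData(gl.ARRAY_BUFFER, grid.positions, gl.STATIC_DRAW);
  const ibo = gl.createBuffer();
  gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, ibo);
  gl.bufferData(gl.ELEMENT_ARRAY_BUFFER, grid.indices, gl.STATIC_DRAW);
  return { vbo, ibo, count: grid.indices.length };
}
```

At runtime, the whole grid can then be drawn with a single `gl.drawElements(gl.TRIANGLES, count, gl.UNSIGNED_SHORT, 0)` call after binding the two buffers and setting up the vertex attribute, with no per-frame vertex uploads.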

But it’s hard to make terrain look good with purely static data. Because the viewpoint will often be close to the ground surface, the screen-space resolution of the closest and furthest parts of the terrain can differ by several orders of magnitude.

Furthermore, there are limits to the number of triangles that a given GPU can draw at interactive rates. Your triangle budget will depend on the range of systems you want your app or game to run on, but generally you cannot afford to render a large terrain at full detail across its entire surface.

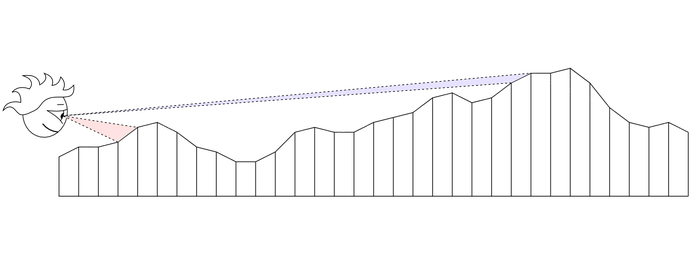

Since triangle budget is limited, we have to decide how we should distribute triangles for best effect. A uniform distribution results in low detail close to the camera, and excessive detail that the user can't appreciate further away:

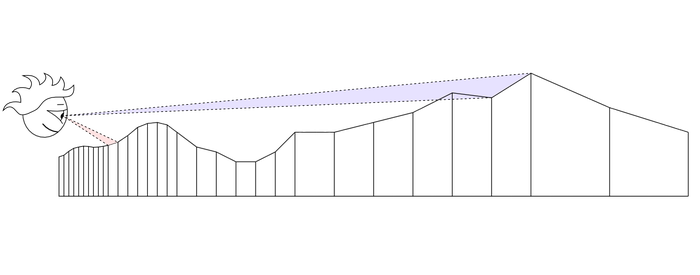

Notice that the red segment has a much larger apparent (on-screen) size than the blue segment. Ideally we would like to even this out by using more triangles close to the camera, and fewer at a distance:

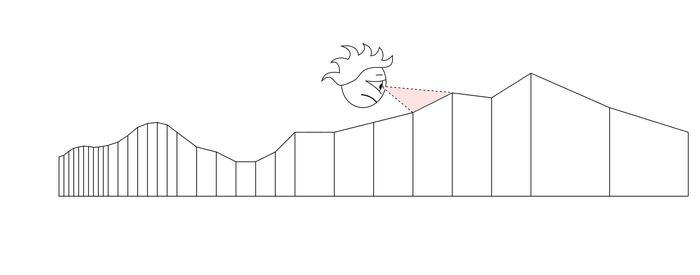
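To put a number on the difference in apparent size, here is a small sketch. It makes a simplifying assumption: the segment faces the camera directly, whereas segments lying on the ground are seen at a grazing angle and shrink even faster with distance. The function name and the sample values are mine, chosen only for illustration.

```javascript
// Approximate on-screen height, in pixels, of a camera-facing segment of
// world-space length `length` at `distance`, under a perspective projection.
function projectedSizePx(length, distance, fovYRadians, viewportHeightPx) {
  // Distance from the eye to the image plane, measured in pixels.
  const focal = viewportHeightPx / (2 * Math.tan(fovYRadians / 2));
  return (length / distance) * focal;
}

const fov = Math.PI / 2;                             // 90 degree vertical field of view
const nearSize = projectedSizePx(1, 2, fov, 720);    // roughly 180 pixels
const farSize = projectedSizePx(1, 200, fov, 720);   // roughly 1.8 pixels
```

The same one-unit segment covers about a hundred times more pixels at 2 units away than at 200 units away, so triangles of equal world-space size are badly matched to what the user can actually see.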

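But this doesn’t work so well with a moving viewpoint and purely static vertex data: once the camera moves away, the densely triangulated region is no longer where the detail is needed.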

If you’re willing to do some work on the CPU, you can use one of many algorithms to adapt the level of detail (LOD) of terrain to the current viewpoint. Each algorithm balances the work between CPU and GPU in its own way.
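To give a feel for the kind of per-frame CPU work involved, here is a deliberately simple, generic distance-based LOD pick for terrain chunks. It is not any specific published algorithm and not what Trigger Rally does; `lodForDistance`, `baseDistance` and `maxLod` are names I made up for the sketch.

```javascript
// Pick a level of detail for a terrain chunk. Level 0 is full detail and each
// further level is assumed to halve the resolution. The level goes up by one
// every time the distance to the camera doubles.
function lodForDistance(distance, baseDistance, maxLod) {
  if (distance <= baseDistance) return 0;
  const lod = Math.floor(Math.log2(distance / baseDistance));
  return Math.min(lod, maxLod);
}

// Per-frame CPU work: re-evaluate every chunk against the current camera.
function selectLods(chunkCenters, camera, baseDistance, maxLod) {
  return chunkCenters.map((c) => {
    const distance = Math.hypot(c.x - camera.x, c.z - camera.z);
    return lodForDistance(distance, baseDistance, maxLod);
  });
}
```

Even in this toy form, the CPU has to touch every chunk each frame and then issue draw calls (or rebuild index data) per chunk, which is exactly the kind of work we would like to avoid in JavaScript.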

Solution: Geoclipmapping

How can we achieve adaptive LOD with purely static vertex data? Geoclipmapping and vertex texturing to the rescue!

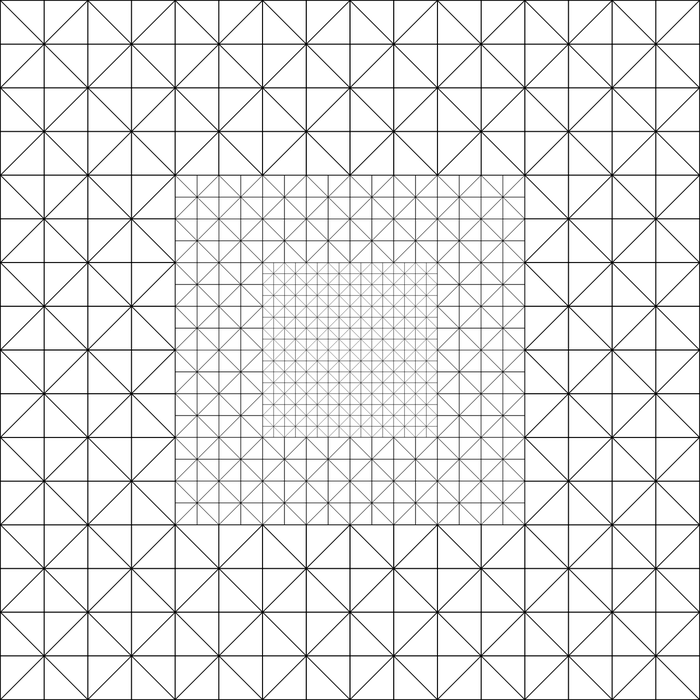

Instead of encoding height information in the vertex data, we keep it in a separate texture. Our vertex data can then encode a simple mesh with a higher resolution in the center, and rings of decreasing resolution as you move away from the origin.
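To see why this kind of mesh is so economical, here is a back-of-the-envelope sketch. It assumes a simplified layout of my own choosing: a central square of `cellsPerSide` x `cellsPerSide` cells, surrounded by rings whose cell size doubles at each level and whose inner half is left empty for the previous level. Real geoclipmap layouts differ in the details.

```javascript
// Describe a simplified ring layout (an assumption for illustration only).
// `cellsPerSide` must be even so that each ring's hole is a whole number of cells.
function ringLayout(cellsPerSide, levels) {
  const rings = [];
  for (let k = 0; k < levels; k++) {
    const cellSize = 2 ** k;                    // world units per cell at this level
    const extent = cellsPerSide * cellSize;     // world units covered per side
    const full = cellsPerSide ** 2;
    const hole = k === 0 ? 0 : (cellsPerSide / 2) ** 2;  // area covered by finer levels
    rings.push({ level: k, cellSize, extent, triangles: (full - hole) * 2 });
  }
  return rings;
}

const rings = ringLayout(64, 6);
const ringTriangles = rings.reduce((sum, r) => sum + r.triangles, 0);
const extent = rings[rings.length - 1].extent;   // 2048 world units per side
const uniformTriangles = extent * extent * 2;    // same area at the finest cell size
```

With these example numbers the ring mesh needs 38,912 triangles to cover a 2048 x 2048 area, where a uniform grid at the finest resolution would need 8,388,608.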

At runtime, we position this mesh underneath the current viewpoint, and take samples from a height texture map in the vertex shader.
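A minimal sketch of those two steps might look like the following. Several things here are my assumptions rather than details from the game: the uniform and attribute names, a height map with one texel per world unit stored in the red channel, and snapping the mesh origin to the coarsest cell size so that vertices keep landing on the same height samples as the camera moves.

```javascript
// Snap the mesh origin to a multiple of the coarsest cell size (an assumed
// simplification) so the grid does not slide across the height samples.
function snapMeshOrigin(cameraX, cameraZ, coarsestCellSize) {
  return {
    x: Math.floor(cameraX / coarsestCellSize) * coarsestCellSize,
    z: Math.floor(cameraZ / coarsestCellSize) * coarsestCellSize,
  };
}

// GLSL ES 1.00 vertex shader (WebGL 1). All names are hypothetical.
const terrainVertexShader = `
  attribute vec2 aPosition;       // static grid position, relative to the mesh origin
  uniform vec2 uMeshOrigin;       // snapped camera position, in world units
  uniform sampler2D uHeightMap;   // height stored in the red channel (assumed)
  uniform float uHeightMapSize;   // width and height of the height map, in texels
  uniform float uHeightScale;     // world-space height of a texel value of 1.0
  uniform mat4 uViewProjection;

  void main() {
    vec2 world = aPosition + uMeshOrigin;
    vec2 uv = (world + 0.5) / uHeightMapSize;   // sample at texel centers
    float height = texture2D(uHeightMap, uv).r * uHeightScale;
    gl_Position = uViewProjection * vec4(world.x, height, world.y, 1.0);
  }
`;
```

Each frame, the only CPU work is computing `snapMeshOrigin(camera.x, camera.z, cellSize)` and setting a few uniforms. Note that sampling a texture in the vertex shader is optional in WebGL 1, so it is worth checking that `gl.getParameter(gl.MAX_VERTEX_TEXTURE_IMAGE_UNITS)` is greater than zero before relying on it.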

There's actually a bit more to it than this, so in the next article I’ll go into the specifics of how it works, and how to implement geoclipmapping with morphing efficiently in WebGL. After that, I'll discuss multiresolution heightmapping and surface shading.
