GPU Path Tracing in Unity – Part 3

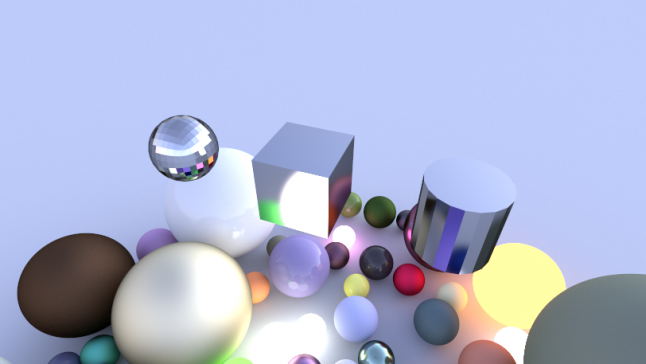

Our Unity Compute-Shader-based ray tracer can now display spheres with fuzzy effects like soft shadows, glossy reflections and diffuse GI, but we're missing an important piece of the puzzle: triangles. This is our job for today.

“Arithmetic! Algebra! Geometry! Grandiose trinity! Luminous triangle! Whoever has not known you is without sense!” – Comte de Lautréamont

Today, we’re going to make a big leap. We’re going beyond the purely spherical structures and the infinite plane we have been tracing so far, and introduce triangles – the essence of modern computer graphics, the element that entire virtual worlds are made of. If you want to pick up where we left off last time, use the code of part 2. The finished code of what we’re going to do today can be found here. Let’s go!

Triangles

The definition of a triangle is simple: It’s merely a list of three connected vertices, each of which stores its position and, later on, normal. The winding order of the vertices from your point of view determines whether you’re looking at the front or the back. Traditionally, counter-clockwise winding order is considered ‘front’.
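To make the effect of winding order concrete, here is a minimal CPU-side sketch. It is not part of the tutorial's code: it uses System.Numerics instead of Unity's types so it can run as a plain console program, and the names WindingDemo and FaceNormal are made up for illustration. It shows that swapping two vertices reverses the winding and flips the face normal obtained from the cross product of two edges.

```csharp
using System;
using System.Numerics;

public static class WindingDemo
{
    // Face normal from the cross product of two edges sharing v0.
    // FaceNormal is a hypothetical helper name, not from the tutorial.
    public static Vector3 FaceNormal(Vector3 v0, Vector3 v1, Vector3 v2)
    {
        return Vector3.Normalize(Vector3.Cross(v1 - v0, v2 - v0));
    }

    public static void Main()
    {
        var a = new Vector3(0, 0, 0);
        var b = new Vector3(1, 0, 0);
        var c = new Vector3(0, 1, 0);

        // Order a, b, c: the normal is (0, 0, 1).
        Console.WriteLine(FaceNormal(a, b, c));

        // Order a, c, b (two vertices swapped): the normal is (0, 0, -1).
        Console.WriteLine(FaceNormal(a, c, b));
    }
}
```

Which of the two sides counts as the front depends on the convention and handedness of the coordinate system in use. The point here is only that the vertex order decides it.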

First, we need to be able to tell whether a ray hits a triangle, and where. A very popular (but certainly not the only) algorithm for determining ray-triangle intersections was introduced by gentlemen Tomas Akenine-Möller and Ben Trumbore in 1997. You can read all the details in the paper ‘Fast, Minimum Storage Ray-Triangle Intersection’ here.

The code from the paper can easily be ported to HLSL shader code:

    static const float EPSILON = 1e-8;

    bool IntersectTriangle_MT97(Ray ray, float3 vert0, float3 vert1, float3 vert2,
        inout float t, inout float u, inout float v)
    {
        // find vectors for two edges sharing vert0
        float3 edge1 = vert1 - vert0;
        float3 edge2 = vert2 - vert0;

        // begin calculating determinant - also used to calculate U parameter
        float3 pvec = cross(ray.direction, edge2);

        // if determinant is near zero, ray lies in plane of triangle
        float det = dot(edge1, pvec);

        // use backface culling
        if (det < EPSILON)
            return false;
        float inv_det = 1.0f / det;

        // calculate distance from vert0 to ray origin
        float3 tvec = ray.origin - vert0;

        // calculate U parameter and test bounds
        u = dot(tvec, pvec) * inv_det;
        if (u < 0.0 || u > 1.0f)
            return false;

        // prepare to test V parameter
        float3 qvec = cross(tvec, edge1);

        // calculate V parameter and test bounds
        v = dot(ray.direction, qvec) * inv_det;
        if (v < 0.0 || u + v > 1.0f)
            return false;

        // calculate t, ray intersects triangle
        t = dot(edge2, qvec) * inv_det;

        return true;
    }

To use this function, you need a ray and the three vertices of a triangle. The return value tells you whether the triangle is hit or not. In case of a hit, three additional values are calculated: t describes the distance along the ray to the hit point, and u / v are two of the three barycentric coordinates that specify the location of the hit point on the triangle (where the last one can be calculated as w = 1 - u - v). If you don’t know about barycentric coordinates yet, read an excellent explanation on Scratchapixel.
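If you want to sanity-check the values of t, u and v outside the shader, a CPU port can help. The following is a sketch under assumptions: it mirrors the HLSL function above line by line, but uses System.Numerics rather than Unity types, and the class and method names (MollerTrumbore, Intersect) are invented for this example.

```csharp
using System;
using System.Numerics;

public static class MollerTrumbore
{
    const float Epsilon = 1e-8f;

    // CPU port of the shader function, intended for sanity checks only.
    public static bool Intersect(Vector3 origin, Vector3 direction,
        Vector3 vert0, Vector3 vert1, Vector3 vert2,
        out float t, out float u, out float v)
    {
        t = u = v = 0f;

        // Two edges sharing vert0
        Vector3 edge1 = vert1 - vert0;
        Vector3 edge2 = vert2 - vert0;

        // Determinant; below Epsilon means back-facing or (nearly) parallel
        Vector3 pvec = Vector3.Cross(direction, edge2);
        float det = Vector3.Dot(edge1, pvec);
        if (det < Epsilon) return false;
        float invDet = 1f / det;

        // First barycentric coordinate
        Vector3 tvec = origin - vert0;
        u = Vector3.Dot(tvec, pvec) * invDet;
        if (u < 0f || u > 1f) return false;

        // Second barycentric coordinate
        Vector3 qvec = Vector3.Cross(tvec, edge1);
        v = Vector3.Dot(direction, qvec) * invDet;
        if (v < 0f || u + v > 1f) return false;

        // Distance along the ray
        t = Vector3.Dot(edge2, qvec) * invDet;
        return true;
    }

    public static void Main()
    {
        // Unit right triangle in the z = 0 plane, ray coming from z = 1.
        bool hit = Intersect(
            new Vector3(0.25f, 0.25f, 1f), new Vector3(0f, 0f, -1f),
            new Vector3(0, 0, 0), new Vector3(1, 0, 0), new Vector3(0, 1, 0),
            out float t, out float u, out float v);
        Console.WriteLine($"hit={hit} t={t} u={u} v={v}");
    }
}
```

For this ray the function reports a hit with t = 1 and u = v = 0.25. Firing the same ray from the other side of the triangle returns false, because the determinant is negative and the backface culling test rejects it.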

Without further ado, let us trace a single, hard-coded triangle! Find your shader’s Trace function and add the following snippet:

    // Trace single triangle
    float3 v0 = float3(-150, 0, -150);
    float3 v1 = float3(150, 0, -150);
    float3 v2 = float3(0, 150 * sqrt(2), -150);
    float t, u, v;
    if (IntersectTriangle_MT97(ray, v0, v1, v2, t, u, v))
    {
        if (t > 0 && t < bestHit.distance)
        {
            bestHit.distance = t;
            bestHit.position = ray.origin + t * ray.direction;
            bestHit.normal = normalize(cross(v1 - v0, v2 - v0));
            bestHit.albedo = 0.00f;
            bestHit.specular = 0.65f * float3(1, 0.4f, 0.2f);
            bestHit.smoothness = 0.9f;
            bestHit.emission = 0.0f;
        }
    }

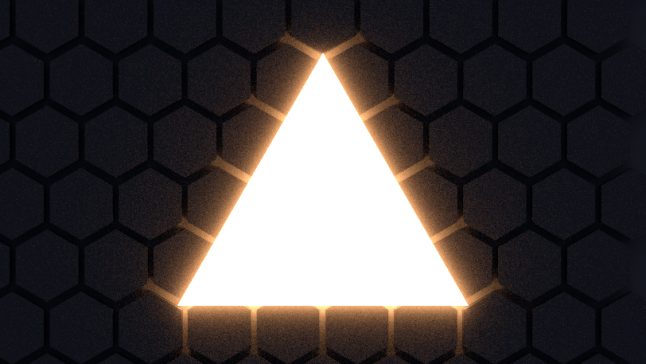

As mentioned, t stores the distance along the ray, and we can directly use it to calculate the hit point. The normal, which is important for correct reflection, can be obtained using the cross product of any two triangle edges. Enter play mode and enjoy your first self-traced triangle:

Exercise: Try to calculate the position using barycentric coordinates instead of distance. If you’re doing it right, the glossy triangle looks exactly the same as before.

Triangle Meshes

We’ve overcome the first hurdle, but tracing full-blown triangle meshes is another story. We need to learn some basic things about meshes first. If you are familiar with this, feel free to skim over this next paragraph.

In computer graphics, a mesh is defined by a number of buffers, the most important ones being the vertex and index buffer. The vertex buffer is a list of 3D vectors, describing each vertex’s position in object space (meaning that those values won’t need to be changed when you translate, rotate or scale the object – they are transformed from object space to world space on the fly using matrix multiplication instead). The index buffer is a list of integers that are indices pointing into the vertex buffer. Every three indices make up a triangle. For example, if the index buffer is [0, 1, 2, 0, 2, 3], there are two triangles: The first triangle consists of the first, second and third vertex in the vertex buffer, while the second triangle consists of the first, third and fourth vertex. The index buffer thus also defines the aforementioned winding order. In addition to the vertex and index buffer, additional buffers can add information to each vertex. The most common additional buffers store normals, texture coordinates (known as texcoords or simply UVs) and vertex colors.
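As a small illustration of the index buffer example above, the sketch below walks the buffer [0, 1, 2, 0, 2, 3] and looks up the corners of each triangle. The quad positions are invented for this example, the helper name GetTriangle is hypothetical, and System.Numerics stands in for Unity's vector type so the snippet runs on its own.

```csharp
using System;
using System.Numerics;

public static class IndexBufferDemo
{
    // Looks up the three corner positions of triangle number triangleIndex.
    public static (Vector3 v0, Vector3 v1, Vector3 v2) GetTriangle(
        Vector3[] vertices, int[] indices, int triangleIndex)
    {
        int i = triangleIndex * 3; // every three indices form one triangle
        return (vertices[indices[i]], vertices[indices[i + 1]], vertices[indices[i + 2]]);
    }

    public static void Main()
    {
        // Hypothetical quad in the XY plane: four shared vertices, two triangles.
        Vector3[] vertices =
        {
            new Vector3(0, 0, 0), new Vector3(1, 0, 0),
            new Vector3(1, 1, 0), new Vector3(0, 1, 0)
        };
        int[] indices = { 0, 1, 2, 0, 2, 3 };

        for (int tri = 0; tri < indices.Length / 3; tri++)
        {
            var (v0, v1, v2) = GetTriangle(vertices, indices, tri);
            Console.WriteLine($"Triangle {tri}: {v0} {v1} {v2}");
        }
    }
}
```

Note that the quad needs only four vertices even though its two triangles have six corners in total. Sharing vertices through indices is the main reason the index buffer exists.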

Using GameObjects

The first thing we need to do is to actually find out about the GameObjects that should be part of the ray tracing process. The naive solution would be to just do a FindObjectOfType(), but we’ll go for something a little more flexible and fast. Let’s add a new component RayTracingObject:

    using UnityEngine;

    [RequireComponent(typeof(MeshRenderer))]
    [RequireComponent(typeof(MeshFilter))]
    public class RayTracingObject : MonoBehaviour
    {
        private void OnEnable()
        {
            RayTracingMaster.RegisterObject(this);
        }

        private void OnDisable()
        {
            RayTracingMaster.UnregisterObject(this);
        }
    }

This component is added to each object that we want to use in our ray tracing and takes care of registering them with the RayTracingMaster. Add these functions in the master:

    private static bool _meshObjectsNeedRebuilding = false;
    private static List<RayTracingObject> _rayTracingObjects = new List<RayTracingObject>();

    public static void RegisterObject(RayTracingObject obj)
    {
        _rayTracingObjects.Add(obj);
        _meshObjectsNeedRebuilding = true;
    }

    public static void UnregisterObject(RayTracingObject obj)
    {
        _rayTracingObjects.Remove(obj);
        _meshObjectsNeedRebuilding = true;
    }

So far, so good – we know which objects to trace. Now comes the gritty part: We are about to gather all the data from Unity’s Meshes (matrix, vertex and index buffer, remember?), put them into our own data structures and upload them to the GPU so the shader can use them. Let’s start with our data structures and buffer definitions on the C# side, in the master:

    struct MeshObject
    {
        public Matrix4x4 localToWorldMatrix;
        public int indices_offset;
        public int indices_count;
    }

    private static List<MeshObject> _meshObjects = new List<MeshObject>();
    private static List<Vector3> _vertices = new List<Vector3>();
    private static List<int> _indices = new List<int>();
    private ComputeBuffer _meshObjectBuffer;
    private ComputeBuffer _vertexBuffer;
    private ComputeBuffer _indexBuffer;

…and let’s do the same thing in the shader. You’re used to this by now, aren’t you?

    struct MeshObject
    {
        float4x4 localToWorldMatrix;
        int indices_offset;
        int indices_count;
    };

    StructuredBuffer<MeshObject> _MeshObjects;
    StructuredBuffer<float3> _Vertices;
    StructuredBuffer<int> _Indices;

Our data structures are in place, so we can fill them with actual data now. We’re gathering all vertices from all meshes into one big List<Vector3>, and all indices into a big List<int>. While this is no problem for the vertices, we need to adjust the indices so that they still point to the proper vertex in our big buffer. As an example, imagine we have already added objects worth 1000 vertices so far, and now we’re adding a simple cube mesh. The first triangle might consist of the indices [0, 1, 2], but since we already have 1000 vertices in our buffer before we even start adding the cube’s vertices, we need to shift the indices, so they become [1000, 1001, 1002]. Here’s how it looks in code:

    // Note: Select() below requires "using System.Linq;" at the top of the file.
    private void RebuildMeshObjectBuffers()
    {
        if (!_meshObjectsNeedRebuilding)
        {
            return;
        }

        _meshObjectsNeedRebuilding = false;
        _currentSample = 0;

        // Clear all lists
        _meshObjects.Clear();
        _vertices.Clear();
        _indices.Clear();

        // Loop over all objects and gather their data
        foreach (RayTracingObject obj in _rayTracingObjects)
        {
            Mesh mesh = obj.GetComponent<MeshFilter>().sharedMesh;

            // Add vertex data
            int firstVertex = _vertices.Count;
            _vertices.AddRange(mesh.vertices);

            // Add index data - if the vertex buffer wasn't empty before, the
            // indices need to be offset
            int firstIndex = _indices.Count;
            var indices = mesh.GetIndices(0);
            _indices.AddRange(indices.Select(index => index + firstVertex));

            // Add the object itself
            _meshObjects.Add(new MeshObject()
            {
                localToWorldMatrix = obj.transform.localToWorldMatrix,
                indices_offset = firstIndex,
                indices_count = indices.Length
            });
        }

        CreateComputeBuffer(ref _meshObjectBuffer, _meshObjects, 72);
        CreateComputeBuffer(ref _vertexBuffer, _vertices, 12);
        CreateComputeBuffer(ref _indexBuffer, _indices, 4);
    }
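The stride values 72, 12 and 4 passed to CreateComputeBuffer are the sizes in bytes of one element of each list. The following sketch shows where they come from. It is a stand-alone approximation, not the tutorial's code: System.Numerics.Matrix4x4 and Vector3 stand in for Unity's types of the same names (assumed here to have the same size of 16 and 3 floats respectively), and StrideDemo is a made-up class name.

```csharp
using System;
using System.Numerics;
using System.Runtime.InteropServices;

public static class StrideDemo
{
    // Stand-in for the tutorial's MeshObject struct.
    [StructLayout(LayoutKind.Sequential)]
    public struct MeshObject
    {
        public Matrix4x4 localToWorldMatrix; // 16 floats = 64 bytes
        public int indices_offset;           // 4 bytes
        public int indices_count;            // 4 bytes
    }

    public static void Main()
    {
        // 64 + 4 + 4 = 72 bytes per mesh object
        Console.WriteLine(Marshal.SizeOf<MeshObject>());

        // 3 floats = 12 bytes per vertex position
        Console.WriteLine(Marshal.SizeOf<Vector3>());

        // 4 bytes per index
        Console.WriteLine(sizeof(int));
    }
}
```

If you later add fields to MeshObject, the stride has to change with it, on both the C# and the shader side. Computing it with Marshal.SizeOf instead of hard-coding the number is one way to keep the two in sync.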

Call RebuildMeshObjectBuffers in the OnRenderImage function, and don’t forget to release the new buffers in OnDisable. Here are the two helper functions I used in the above code to make buffer handling a little easier:

    private static void CreateComputeBuffer<T>(ref ComputeBuffer buffer, List<T> data, int stride)
        where T : struct
    {
        // Do we already have a compute buffer?
        if (buffer != null)
        {
            // If no data or buffer doesn't match the given criteria, release it
            if (data.Count == 0 || buffer.count != data.Count || buffer.stride != stride)
            {
                buffer.Release();
                buffer = null;
            }
        }

        if (data.Count != 0)
        {
            // If the buffer has been released or wasn't there to
            // begin with, create it
            if (buffer == null)
            {
                buffer = new ComputeBuffer(data.Count, stride);
            }

            // Set data on the buffer
            buffer.SetData(data);
        }
    }

    private void SetComputeBuffer(string name, ComputeBuffer buffer)
    {
        if (buffer != null)
        {
            RayTracingShader.SetBuffer(0, name, buffer);
        }
    }

Great, we have our buffers, and they are filled with the required data! Now we just need to tell the shader about it. In SetShaderParameters, add the following code (and, thanks to our new helper function, you can shorten the code for the sphere buffer too while you’re at it):

    SetComputeBuffer("_Spheres", _sphereBuffer);
    SetComputeBuffer("_MeshObjects", _meshObjectBuffer);
    SetComputeBuffer("_Vertices", _vertexBuffer);
    SetComputeBuffer("_Indices", _indexBuffer);

Phew. That was tedious, but take a look at what we just did: We gathered all the internal data of our meshes (matrix, vertices and indices), put them in a nice and simple structure and sent it to the GPU, which is now eagerly waiting to make use of it.

Tracing Meshes

Let’s not keep the GPU waiting. We already have code to trace a single triangle in the shader, and a mesh really is just a bunch of them. The only new thing here is that we use our matrix to transform the vertices from object to world space using the intrinsic function mul (for multiply). The matrix contains translation, rotation and scale of the object. It is 4×4, so we need a 4D vector for the multiplication. The first three components (x, y, z) are taken from our vertex buffer. We set the fourth component (w) to 1, because we’re dealing with a point. If it were a direction, we’d put a 0 there to ignore the translation in the matrix (rotation and scale would still apply). Confused? Read this tutorial at least eight times. Here’s the shader code:

    void IntersectMeshObject(Ray ray, inout RayHit bestHit, MeshObject meshObject)
    {
        uint offset = meshObject.indices_offset;
        uint count = offset + meshObject.indices_count;
        for (uint i = offset; i < count; i += 3)
        {
            float3 v0 = (mul(meshObject.localToWorldMatrix, float4(_Vertices[_Indices[i]], 1))).xyz;
            float3 v1 = (mul(meshObject.localToWorldMatrix, float4(_Vertices[_Indices[i + 1]], 1))).xyz;
            float3 v2 = (mul(meshObject.localToWorldMatrix, float4(_Vertices[_Indices[i + 2]], 1))).xyz;

            float t, u, v;
            if (IntersectTriangle_MT97(ray, v0, v1, v2, t, u, v))
            {
                if (t > 0 && t < bestHit.distance)
                {
                    bestHit.distance = t;
                    bestHit.position = ray.origin + t * ray.direction;
                    bestHit.normal = normalize(cross(v1 - v0, v2 - v0));
                    bestHit.albedo = 0.0f;
                    bestHit.specular = 0.65f;
                    bestHit.smoothness = 0.99f;
                    bestHit.emission = 0.0f;
                }
            }
        }
    }
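The role of the fourth component can be checked with a few lines of CPU code. This is only a sketch with assumptions: it uses System.Numerics, which multiplies row vectors from the left (v * M), whereas the shader's mul(matrix, vector) treats the vector as a column. The result for this example is the same, and the helper names are invented.

```csharp
using System;
using System.Numerics;

public static class HomogeneousDemo
{
    // w = 1: the vector is a position, so translation applies.
    public static Vector4 TransformPoint(Vector3 p, Matrix4x4 m)
    {
        return Vector4.Transform(new Vector4(p, 1f), m);
    }

    // w = 0: the vector is a direction, so translation is ignored.
    public static Vector4 TransformDirection(Vector3 d, Matrix4x4 m)
    {
        return Vector4.Transform(new Vector4(d, 0f), m);
    }

    public static void Main()
    {
        // Scale by 2 first, then translate by 10 along x.
        Matrix4x4 m = Matrix4x4.CreateScale(2f) * Matrix4x4.CreateTranslation(10f, 0f, 0f);

        // Point (1, 0, 0) becomes (12, 0, 0): scaled to 2, then moved by 10.
        Console.WriteLine(TransformPoint(new Vector3(1, 0, 0), m));

        // Direction (1, 0, 0) becomes (2, 0, 0): scaled, but not moved.
        Console.WriteLine(TransformDirection(new Vector3(1, 0, 0), m));
    }
}
```

The direction is still scaled, which is why a transformed direction generally needs to be normalized again before it is used for shading.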

We’re just one step away from actually seeing all this in action. Let’s restructure our Trace function a little and add the tracing of mesh objects:

    RayHit Trace(Ray ray)
    {
        RayHit bestHit = CreateRayHit();
        uint count, stride, i;

        // Trace ground plane
        IntersectGroundPlane(ray, bestHit);

        // Trace spheres
        _Spheres.GetDimensions(count, stride);
        for (i = 0; i < count; i++)
        {
            IntersectSphere(ray, bestHit, _Spheres[i]);
        }

        // Trace mesh objects
        _MeshObjects.GetDimensions(count, stride);
        for (i = 0; i < count; i++)
        {
            IntersectMeshObject(ray, bestHit, _MeshObjects[i]);
        }

        return bestHit;
    }

Results

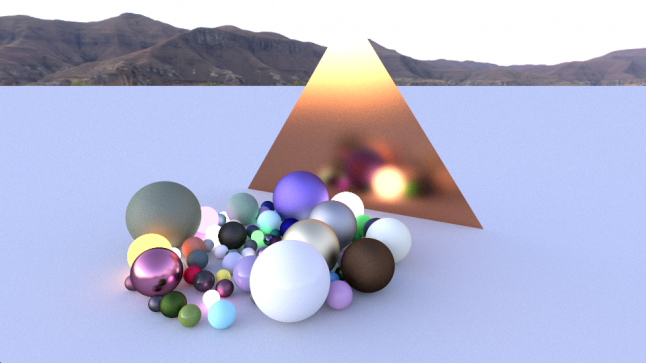

That’s it! Let’s add some simple meshes (Unity’s primitives work just fine), give them a RayTracingObject component and watch the magic happen. Don’t use any detailed meshes (more than a few hundred triangles) yet! Our shader is missing the proper optimization and it might take seconds or even minutes to trace one sample per pixel if you go overboard. The result can be that your GPU driver is killed by the system, Unity potentially crashes and your machine needs rebooting.

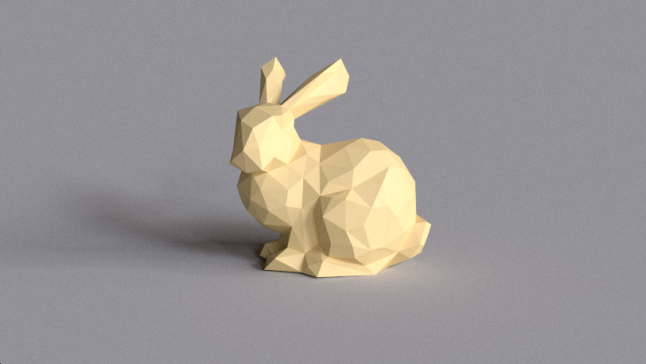

Notice that our meshes are not smooth, but flat-shaded. Since we didn’t upload the vertices’ normals to a buffer yet, we need to use the cross product to get each triangle’s normal individually and can’t interpolate across the triangle area. We will take care of this issue in the next part of this tutorial series.

For the fun of it, I’ve downloaded the Stanford Bunny from Morgan McGuire’s archive and reduced it to 431 triangles using Blender’s decimate modifier. You can play around with the light settings and the hard-coded material in the shader’s IntersectMeshObject function. Here’s a dielectric bunny with nice soft shadows and subtle diffuse GI in the Graffiti Shelter:

…and a metallic bunny under the strongly directional light of Cape Hill that casts some disco-like spots on the floor plane:

...and two little bunnies hiding under a big rocky Suzanne under the blue sky of Kiara 9 Dusk (I hard-coded an alternative material for the first object by checking whether the index offset is 0):

What’s next?

It’s pretty cool to see a real mesh in your own ray tracer for the first time, isn’t it? We have juggled quite some data today, learned about the Möller-Trumbore intersection and integrated everything so that Unity’s GameObjects can be used right away. We have also seen one of the beautiful sides of ray tracing: As soon as you integrate a new intersection, all the cool effects (soft shadows, specular and diffuse GI etc.) just work.

Rendering the glossy bunny took forever, and I still had to use some slight filtering on the result to remove the most jarring noise. To overcome this, the scene is usually structured into a spatial structure like a grid, kD tree or a bounding volume hierarchy that considerably speeds up the rendering of larger scenes.

But first things first: What we should do next is to fix the normals, so our meshes (even if low-poly) look smoother than they do now. Automatic update of the matrices when objects are moved and an actual coupling to Unity’s materials instead of just a single hard-coded one also sound like a good idea. We will take care of this in the next part of this series.

You’ve made it this far. Thank you, and see you in part 4!
