Featured Blog | This community-written post highlights the best of what the game industry has to offer.

Quit Playing Games with My Heart: Biofeedback in Gaming

Biofeedback may seem like a technology of the distant future, but we can start using it today! The creator of the biofeedback-enhanced horror game "Nevermind" discusses her experiences with integrating biofeedback into gaming and the future of this tech.

“The only way I can tell if everything is OK is to play eXistenZ with somebody friendly. Are you friendly, or are you not?” (eXistenZ, 1999)

When I tell people that I have made a biofeedback-based game, the response is often one of the three “C’s” – “cool!” “creepy!” or “crazy!” (as in, you’re crazy). I think people often can’t help but invoke media depictions of biofeedback-esque technology such as the game pods from Cronenberg’s eXistenZ (a personal favorite) or perhaps even the SQUID device from Bigelow’s Strange Days. However, all these characterizations take place in worlds that still feel many years away. I’m here to tell you that this technology is already here – and it’s waiting to be put to good use by us.

eXistenZ game pod.

The Mayo Clinic defines biofeedback as follows:

… a technique you can use to learn to control your body's functions, such as your heart rate. With biofeedback, you're connected to electrical sensors that help you receive information (feedback) about your body (bio). This feedback helps you focus on making subtle changes in your body, such as relaxing certain muscles, to achieve the results you want, such as reducing pain. [http://www.mayoclinic.com/health/biofeedback/MY01072]

The term biometrics is often used as well – although that typically refers to the specific measurement of a physiological process. I like to think of biofeedback (as it pertains to gaming at least) as an input that allows the game to respond to your physiological state. Ultimately, it’s a way for the game to bypass your conscious decisions (e.g. move the joystick in that direction, press this button…now, etc.) and tap directly into your subliminal responses. To me, this new input-method feels like what’s next in the evolution of controllers.

Controlling the Future

Back in the early days of gaming, most players controlled their games through clunky knobs or joysticks*. Maybe a button or two. Then the “controller” was introduced to home consoles, granting the player a few buttons and a handy-dandy directional pad. As games grew more complex, so did the level of engagement players wanted to have with them, and controllers evolved to feature more and more buttons and inputs. Dual joysticks. Shoulder bumpers. Touch screens. Then full-body motion-based controllers such as the Wiimote, Kinect, and PlayStation Move became part of the mainstream experience. To me, this evolution reflects an innate desire that players have for a more intimate experience with their games. I can’t help but feel that players ultimately long to be less responsible for making discrete or conscious decisions and, instead, to have something more akin to “mind-melded” interactions with their games. It is only logical that biofeedback is the next, inevitable step for player-to-game interfacing.

*There are, of course, always exceptions. However, I wanted to focus on describing the mainstream trend. There is a great illustration of this history below.

![Game controller timeline. Image borrowed from http://mbcgrob.nl/portfolio_img/designstudies/game_controller_timeline_large.png](http://mbcgrob.nl/portfolio_img/designstudies/game_controller_timeline_large.png)

Sounds far-fetched? There is already evidence of this next evolutionary step taking place before our very eyes. You don’t need to look much further than the number of health apps available on the App Store, many of which use the iPhone’s accelerometer as a pedometer. Is there anyone here who doesn’t know someone using the Nike+ FuelBand or a FitBit? People want to leverage technology’s ability to passively gather and analyze data about themselves to create a more fully integrated, personalized, and impactful experience than has ever been available (at a mainstream level) before.

I could geek out about all that for hours (and will do so for a bit a few paragraphs later). However, first, a little background on my journey as a game developer with biofeedback technology.

Learning to Listen

I had always been intrigued by biofeedback in games as a “neat idea,” but never seriously considered it until I was in charge of developing a game called Trainer during the first year of my MFA program at the University of Southern California. Trainer was a browser-based fitness/health game intended to motivate children to cultivate healthy exercise and eating habits by rewarding them for completing fitness activities.

While developing it, the team and I knew that we had to hold the player accountable for actually completing the fitness activities, and this is when the big “what if” around biofeedback came up. We spent several months trying to integrate various sensors (such as a pedometer or even a mobile phone) with the Flash-based game, but we were never able to get it working as intended. We ultimately turned to motion detection via webcams to achieve our goal. That solution worked fine for Trainer (and avoiding the extra peripheral made it, in some ways, a superior option to full biofeedback). However, once I’d dipped my toe into the curious waters of biofeedback technology, I couldn’t help but feel there was a lot more exploration to be done. When it came time to declare my MFA thesis project, I knew I had to incorporate it somehow.

Carry On, Nevermind

This brings me to Nevermind, the game I’m perhaps best known for these days. Inspired and encouraged by a talk given at GDC 2011 by Valve’s Mike Ambinder, I set out to give biofeedback another shot, this time as a central pillar of the experience.

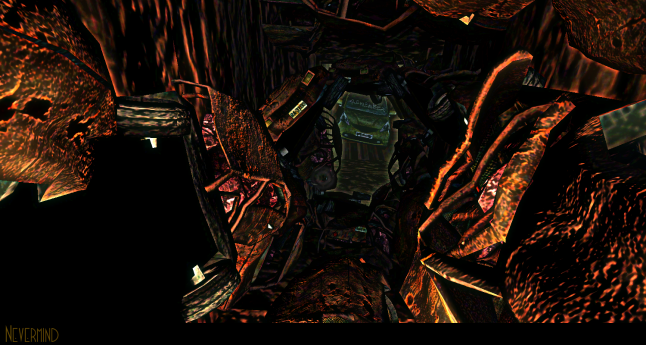

Nevermind is an adventure horror game that takes place inside the minds of patients suffering from severe psychological trauma. The player takes on the role of a Neuroprober who must dive into each patient’s subconscious world to uncover repressed memories of the trauma that patient suffered. The biofeedback twist? Since this is a horror game, the player must subject him/herself to a myriad of awful, uncomfortable, terrible things. If the player becomes scared or stressed while doing so, the game becomes more difficult: the environment responds to the player’s physiological state and impedes his/her progress until the player calms down. As soon as the player relaxes, the game returns to its easier, default state.
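The post doesn't spell out how Nevermind decides when to switch between its harder and default states, so here is only a minimal Python sketch of the general idea. It assumes the sensor pipeline already produces a normalized arousal value between 0 and 1; the class name `StressResponsiveDifficulty` and both thresholds are hypothetical. Using a higher threshold to enter the stressed state than to leave it (hysteresis) is a design assumption meant to keep the game from flickering between states when a reading hovers near a single cutoff.

```python
from dataclasses import dataclass


@dataclass
class StressResponsiveDifficulty:
    """Hypothetical sketch: harder while the player reads as stressed,
    back to the easier default once they calm down."""
    enter_stress: float = 0.7  # assumed cutoff on a normalized 0..1 arousal value
    exit_stress: float = 0.5   # lower exit cutoff (hysteresis) to avoid flicker
    stressed: bool = False

    def update(self, arousal: float) -> str:
        if not self.stressed and arousal >= self.enter_stress:
            self.stressed = True
        elif self.stressed and arousal <= self.exit_stress:
            self.stressed = False
        return "hard" if self.stressed else "default"


game = StressResponsiveDifficulty()
# Calm, spike of fear, still tense, calming down, calm again.
states = [game.update(a) for a in [0.3, 0.75, 0.6, 0.45, 0.2]]
print(states)
```

Note that the 0.6 reading keeps the game in its hard state even though it is below the entry cutoff: the player has to actually calm down, not just dip slightly, before the game eases off.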

The big-picture idea with Nevermind is that, by becoming more aware of their stress signals and learning and practicing techniques to return to a state of calm in the midst of stressful scenarios, players can carry those in-game skills over to stressful situations in everyday life. Nevermind currently uses a Garmin cardio chest strap along with an ANT+ USB stick to gather heart-rate data from the player. Specifically, Nevermind looks at a metric called “Heart Rate Variability” (HRV), which is, essentially, how consistent or inconsistent the time between successive heartbeats is, to determine when the player becomes scared or stressed. In my experience, HRV is one of the easier and more effective biometrics to use with existing technology, but there are (and will be) many other options for integrating biofeedback into games. More on that soon…
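HRV can be quantified in several ways, and the post doesn't say which measure Nevermind uses. As one illustration, here is a sketch of RMSSD (the root mean square of successive differences), a common time-domain HRV measure, assuming the chest strap delivers beat-to-beat (RR) intervals in milliseconds. The function names are hypothetical. Lower RMSSD values generally accompany heightened arousal, though what counts as "low" varies a lot from person to person.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """RMSSD: root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate_bpm(rr_intervals_ms: Sequence[float]) -> float:
    """Average heart rate implied by the same intervals (60,000 ms per minute)."""
    return 60_000.0 / (sum(rr_intervals_ms) / len(rr_intervals_ms))


# A very steady rhythm versus a more uneven one at a similar average heart rate.
steady = [800, 802, 799, 801, 800]
uneven = [800, 760, 840, 780, 830]
print(round(rmssd(steady), 2), round(rmssd(uneven), 2))
print(round(mean_heart_rate_bpm(steady), 1), round(mean_heart_rate_bpm(uneven), 1))
```

Because RMSSD only looks at differences between neighboring intervals, it is largely insensitive to a player's overall heart rate, which fits the post's point that HRV measures consistency rather than speed.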

How Does that Make you Feel?

Based on everything I’ve seen so far, the biofeedback technology available for games is essentially used as a high-tech mood ring*. I’m certainly not saying this to diminish its potential and value, but whether you’re using HRV or one of the brainwave headsets currently on the market, you’re only really getting basic physiological signals that may indicate a certain feeling.

*For all you whippersnappers reading this, here: http://en.wikipedia.org/wiki/Mood_ring

To be more specific, the sensors available to us today are good at measuring “arousal” but not the other key dimension of the emotional spectrum, “valence.”

Arousal can be thought of as the intensity of a feeling – it’s the scale of “meh” to “OMG”. If you go back to high-school bio or health class, you may recall that whole “fight-or-flight system” deal. When sensors measure arousal, they’re essentially measuring symptoms of your fight-or-flight system being activated. These symptoms include an elevated heart rate, an increase in skin moisture (i.e. sweat), changes in the consistency of your heartbeat’s rhythm (i.e. HRV), a flushed skin tone, etc. That’s valuable data to have because, when matched to the context of an experience, it lets you infer what the player is feeling. This is what we do in Nevermind.

As such, when I say Nevermind reacts to a player’s fear or stress levels, what I really mean is that it reacts to the player’s arousal levels. (However, explaining it that way usually gets me a few raised eyebrows, so I tend to stick to just saying “fear and stress levels”). We don’t actually know 100% if a player is scared or stressed at any given moment in the game. However, what we do know is that the player is feeling an intense emotion. Given the context of Nevermind as a horror game, it is safe to assume that that emotion is very likely one of anxiety or fear and not one of, say, extreme joy (which would register as the same thing as far as the game is concerned).

In fact, there is one section of Nevermind in which the limitation of only measuring arousal is evident. Near the end of the first level, we put the player through an especially challenging maze. At the end of the maze, there isn’t anything particularly scary; however, in playtests, we kept seeing the game react as if the player had suddenly become very scared or stressed. It didn’t make sense until we realized that what the player was feeling wasn’t fear or anxiety but excitement and intense relief at having completed the maze. Again, it’s all about context when leveraging arousal-sensing devices, so in the case of the maze in Nevermind, we plan to resolve that issue by selectively “numbing” the game’s response to stress input in the area around the end of the maze.
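One way to picture the “numbing” fix is as a zone check that damps the stress input while the player is inside a marked region. This is a hypothetical Python sketch, not Nevermind’s code: the `NumbZone` type, the rectangular zone shape, and pulling the reading toward a per-player baseline are all assumptions.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class NumbZone:
    """Hypothetical rectangular region where the game damps its response to stress input."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    damping: float = 0.0  # 0.0 ignores stress input entirely; 1.0 passes it through unchanged

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def effective_arousal(raw: float, baseline: float, position: Tuple[float, float],
                      zones: Iterable[NumbZone]) -> float:
    """Pull the reading toward the player's baseline while they are inside a numbing zone."""
    x, y = position
    for zone in zones:
        if zone.contains(x, y):
            return baseline + (raw - baseline) * zone.damping
    return raw


maze_exit = NumbZone(90, 90, 110, 110)  # hypothetical area around the maze exit
print(effective_arousal(0.9, 0.4, (100, 100), [maze_exit]))  # relief spike ignored
print(effective_arousal(0.9, 0.4, (10, 10), [maze_exit]))    # elsewhere: passed through
```

A partial `damping` value (say 0.5) would soften rather than silence the response, which may feel less abrupt than switching the biofeedback off entirely.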

So far, I’ve had less experience working with brainwave-based devices such as NeuroSky’s and, as such, am less familiar with the specific science behind how they take their readings. However, from my understanding, their readings are, like arousal, relatively binary, essentially ranging from focused to non-focused.

If arousal is the intensity of a feeling, then valence is the quality of that feeling – positive or negative. Think Al Green – “good or bad, happy or sad.” So, with a device that could detect valence, a game could tell if the player is, essentially, in a good mood or a bad mood. A valence sensor could, for example, differentiate between whether the player is feeling something negative (dread, fear, anxiety) or positive (joy, relief, excitement) at the end of the maze in Nevermind. However, to my knowledge (and, please, someone correct me if I’m wrong), we currently have no easy way of detecting valence – especially not in a way that could be easily available and usable to the consumer.

Ultimately, the holy grail of biofeedback sensors would be one that could measure both arousal and valence. With that information, a game could respond to the entire spectrum of human feelings and emotions. Biofeedback would no longer be a binary “mood ring,” a technology demanding contextual cues from the design of the experience in order to “guess” the nature of the player’s feelings, but rather a direct input that could help the game understand exactly what the player is feeling at all times.
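To make the arousal/valence distinction concrete, here is a toy Python sketch that places a reading on the two axes. The value ranges, the 0.6 cutoff, and the quadrant labels are illustrative assumptions rather than a validated emotion model. The point is that with `valence=None` (an arousal-only sensor) the function can report intensity but not quality, which is exactly the ambiguity at the end of the Nevermind maze.

```python
from typing import Optional


def describe_feeling(arousal: float, valence: Optional[float],
                     intense_at: float = 0.6) -> str:
    """Rough label for a reading on the arousal (0..1) and valence (-1..1) axes.

    valence=None models an arousal-only sensor: intensity is known, quality is not.
    """
    intense = arousal >= intense_at
    if valence is None:
        return "intense, quality unknown" if intense else "mild, quality unknown"
    if intense:
        return "excited/relieved" if valence >= 0 else "afraid/anxious"
    return "calm/content" if valence >= 0 else "sad/bored"


# The end of the maze: a strong reading that only valence can disambiguate.
print(describe_feeling(0.9, None))  # what an arousal-only game sees
print(describe_feeling(0.9, 0.8))   # what a valence-aware game could see
print(describe_feeling(0.9, -0.8))  # the reading a horror game usually assumes
```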

This future is a bright and exciting one indeed. However, until valence detection is refined and paired with arousal sensors, we’ll just have to do the best we can with the arousal detecting sensors that are currently out there.

You See the Tools I Have to Work With…

The good news is that there are still many great options for sensors that can be used in games. Here are just a few:

Heart Rate Sensors – These sensors basically detect when the player’s heart beats. With this information, games can use heart rate or heart rate variability as a metric to gauge arousal. The unreleased Wii Vitality Sensor was a pulse oximeter (which, basically, shines light through your skin and reads your pulse from how the blood’s absorption of that light changes with each heartbeat). Nevermind originally tried to use a pulse oximeter as its biofeedback sensor, but we had more success with an over-the-counter cardio strap.

One of the easiest and most innovative ways I could see developers integrating biofeedback into their games is by using the LED light and camera on the iPhone. An app developer called Azumio has developed a few apps that do this very thing (I recommend checking out Stress Doctor). Essentially, this technique turns your iPhone’s camera into a pulse oximeter. Lit up by the LED light, the camera can “see” subtle color fluctuations in your fingertip as blood is pumped through it with each heartbeat. Using that data, your game is all set up to use heart rate or heart rate variability! Additionally, from what I understand, the Kinect 2 for Xbox One will be able to read heart rate (as well as sweat responses, which brings us to the next biometric…).
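The camera technique boils down to finding one repeating peak per pulse in a brightness signal. Below is a deliberately simple Python sketch, assuming you have already extracted one brightness value per camera frame (for example the mean red-channel value; how you grab frames depends on your platform’s camera API and isn't shown). The function names and the 0.33-second minimum beat spacing are assumptions, and real camera data is noisy enough that production apps typically filter the signal before looking for peaks.

```python
import math
from typing import List, Sequence


def detect_beats(brightness: Sequence[float], fps: float,
                 min_interval_s: float = 0.33) -> List[int]:
    """Frame indices of local maxima above the signal mean, at least min_interval_s apart.

    Brightness peaks (or troughs) recur once per pulse; only their spacing matters
    for heart rate. min_interval_s = 0.33 caps detection at roughly 180 bpm.
    """
    mean = sum(brightness) / len(brightness)
    min_gap = int(min_interval_s * fps)
    beats: List[int] = []
    for i in range(1, len(brightness) - 1):
        is_peak = brightness[i] > brightness[i - 1] and brightness[i] >= brightness[i + 1]
        if is_peak and brightness[i] > mean and (not beats or i - beats[-1] >= min_gap):
            beats.append(i)
    return beats


def heart_rate_from_beats(beats: Sequence[int], fps: float) -> float:
    """Average beats per minute from the spacing between detected beats."""
    if len(beats) < 2:
        raise ValueError("need at least two beats")
    intervals_s = [(b - a) / fps for a, b in zip(beats, beats[1:])]
    return 60.0 / (sum(intervals_s) / len(intervals_s))


# Synthetic stand-in for 10 s of 30 fps camera data with a 1.2 Hz (72 bpm) pulse.
fps = 30.0
signal = [100 + 2 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(300)]
bpm = heart_rate_from_beats(detect_beats(signal, fps), fps)
print(round(bpm, 1))
```

The beat-to-beat spacing computed here is also exactly what an HRV measure needs, so the same detector can feed either metric.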

GSR – Galvanic Skin Response (also commonly known as Electrodermal Response or Skin Conductance). This type of sensor gauges arousal from subtle fluctuations in your skin moisture. It usually works by running a very mild current across an area of your skin. Depending on how well your skin conducts that current, the sensor can tell how moist (i.e. sweaty, and likely stressed) you are, which ultimately indicates arousal levels. In addition to heart rate, the Wild Divine system uses GSR. Sony even investigated integrating GSR into the new PS4 controllers. I still hope that they do at some point.
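In code, the core of a GSR reading is Ohm’s law (conductance G = I / V), plus some way of spotting the short-lived rises that accompany arousal. The Python sketch below is one simple, assumed approach: it tracks a slow exponential-moving-average baseline and flags the moment conductance jumps above it. The function names, the smoothing factor, and the 0.05 µS rise threshold are hypothetical choices, not values from any particular device; reading the raw voltage and current depends entirely on your hardware.

```python
from typing import List, Sequence


def conductance_microsiemens(voltage_v: float, current_a: float) -> float:
    """Ohm's law: conductance G = I / V, converted from siemens to microsiemens."""
    return current_a / voltage_v * 1e6


def skin_responses(conductance_us: Sequence[float], alpha: float = 0.05,
                   rise_us: float = 0.05) -> List[int]:
    """Indices where conductance first rises rise_us above a slow moving baseline."""
    if not conductance_us:
        return []
    baseline = conductance_us[0]
    onsets: List[int] = []
    above = False
    for i, g in enumerate(conductance_us):
        if g - baseline >= rise_us:
            if not above:
                onsets.append(i)  # record only the start of each response
            above = True
        else:
            above = False
        # Exponential moving average: the baseline drifts slowly toward the tonic level.
        baseline += alpha * (g - baseline)
    return onsets


# Synthetic trace: a flat 2.0 uS level with one brief 0.3 uS response.
trace = [2.0] * 20 + [2.3] * 5 + [2.0] * 20
print(conductance_microsiemens(0.5, 2.5e-6), skin_responses(trace))
```

Because the baseline adapts, a player whose skin is simply a bit moister all session doesn't register as permanently aroused; only relatively sudden rises count.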

Brain Waves – “Brain wave” headsets, such as those developed by NeuroSky, offer developers the opportunity to create games that can react to a player’s brain waves using a consumer-grade form of EEG (electroencephalography). What’s great about the NeuroSky devices specifically is that they already come with Unity integration resources - and many of the systems are already designed with game developers in mind.

Other Biometrics – There are other ways to leverage common technology to gain insight into the player’s biometrics. Some are as simple as using an accelerometer to assess a player’s movements and steps. Others use webcams to read things like eye movement. In Nevermind, we even used mouse movement and the rate of keyboard presses (i.e. button mashing) to approximate a player’s stress levels in scenarios where a user chose not to wear the cardio sensor. Perhaps some of these options are not “biofeedback” tech per se, but they illustrate that there are many other technologies and techniques available to us that offer some of the same opportunities as the more sophisticated sensors (albeit perhaps less powerfully).
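The post doesn't describe how Nevermind turns mouse and keyboard activity into a stress estimate. As a rough illustration of the keyboard half, here is a hypothetical Python sketch that counts key presses in a sliding time window and flags mashing when the rate clearly exceeds the player's usual pace; the class name, the two-second window, and the factor of two are all assumptions.

```python
from collections import deque
from typing import Deque


class KeyPressRateMonitor:
    """Hypothetical stress proxy: key presses per second over a sliding time window."""

    def __init__(self, window_s: float = 2.0) -> None:
        self.window_s = window_s
        self._presses: Deque[float] = deque()  # timestamps in seconds, oldest first

    def press(self, t: float) -> None:
        self._presses.append(t)

    def rate(self, now: float) -> float:
        # Discard presses older than the window, then count what remains.
        while self._presses and self._presses[0] < now - self.window_s:
            self._presses.popleft()
        return len(self._presses) / self.window_s

    def is_mashing(self, now: float, baseline_rate: float, factor: float = 2.0) -> bool:
        """True when the current rate exceeds `factor` times the player's usual rate."""
        return self.rate(now) > baseline_rate * factor


monitor = KeyPressRateMonitor(window_s=2.0)
for i in range(12):  # a burst of presses every 0.25 s from t = 0.0 to t = 2.75
    monitor.press(i * 0.25)
print(monitor.rate(now=3.0), monitor.is_mashing(now=3.0, baseline_rate=1.5))
```

Comparing against the player's own baseline rate, rather than a fixed number, keeps naturally fast typists from reading as permanently stressed.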

With all these options already available to us as developers, the questions we should be asking ourselves aren’t about the hardware but about the content. What opportunities does a biofeedback-based game really offer that can’t currently be fulfilled?

The Good Side of Biofeedback

Smarter Games

The ability to use biofeedback in games creates a whole new avenue of gameplay experiences that can be monitored and manipulated in real time. Many games already use complex metrics to track player behavior, which is then aggregated and analyzed to inform gameplay tuning decisions. This process creates a loop that can often take weeks, if not months, to generate meaningful results. With the capabilities of biofeedback, games will have the power to monitor players and adjust various elements of the experience to “automatically” maintain a player’s flow state. For example, a game can sense when a player is becoming over- or under-challenged and dynamically adjust the difficulty to keep the player from becoming frustrated or bored (essentially the opposite of what we do in Nevermind). With the game knowing, on the fly, more about the player than the player even knows about him/herself, it can challenge and enrich the player on a personal, individualized level in ways that have never truly been possible before.
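A minimal version of that flow-keeping loop might nudge a difficulty value whenever a normalized arousal reading leaves a target band. This Python sketch is hypothetical: the band limits, the step size, and especially the assumption that low arousal means boredom and high arousal means frustration are illustrative choices that would need playtesting. Nevermind does roughly the reverse, making the game harder as arousal rises.

```python
def adjust_difficulty(difficulty: float, arousal: float,
                      target_low: float = 0.4, target_high: float = 0.7,
                      step: float = 0.05) -> float:
    """Nudge difficulty (0..1) so a normalized arousal reading (0..1) stays in a target band.

    Assumption: arousal below the band suggests boredom (raise difficulty) and arousal
    above it suggests frustration (lower difficulty).
    """
    if arousal < target_low:
        difficulty += step
    elif arousal > target_high:
        difficulty -= step
    return min(1.0, max(0.0, difficulty))


difficulty = 0.5
for reading in [0.2, 0.3, 0.55, 0.9]:  # bored, still bored, in the band, overwhelmed
    difficulty = adjust_difficulty(difficulty, reading)
print(round(difficulty, 2))
```

Small fixed steps keep each adjustment subtle, which matters because, as discussed below, a game that visibly lurches in response to every reading can quickly become frustrating.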

Health Games

Along those lines, biofeedback in games can be the key in truly connecting healthcare with entertainment technology. As just one example, accessible biofeedback can help fitness games be more effective, responsive, and engaging.

On a more serious front, biofeedback-based gaming could be used to help diagnose medical conditions and provide engaging tools (for home or hospital use) to help treat or monitor those conditions. Additionally, especially once valence sensors come into use, a wide variety of potentially highly effective therapeutic games and digital experiences could emerge in a big way. These games would become a revolutionary tool in a variety of therapy treatments, opening the door to more accessible and, in many ways, more approachable treatments for a diverse range of people.

The Dark Side of Biofeedback

A Balancing Act

As exciting as biofeedback technology can be, we need to make sure we use it in meaningful and impactful ways so it isn’t cast aside as a gimmick. First and foremost, we need to make sure that the technology is married well to the content. If it feels like the biofeedback takes an obvious back seat to the content, the biofeedback component could feel like a cheap magic trick. On the other hand, if it feels like the content takes an obvious back seat to the biofeedback, the product may feel too much like a health app instead of a game. There is also the risk of developers simply not realizing how to use the technology wisely. Poor implementation of biofeedback-based predictive game behavior could lead to exceedingly frustrating experiences.

TMI (Privacy Issues)

Rarely can players consciously control their physiological responses (which serve as a primary input when it comes to biofeedback gaming). This lack of intentional control is part of what makes biofeedback so powerful. However, it also limits the player’s ability to consciously decide what information is provided to the game. Biofeedback input grants us access to data that hasn’t been curated by the player, so establishing trust is key. If players don’t feel safe providing their biofeedback data, then the tech could be abandoned before it has a chance to flourish.

External Sensor Dependencies

With the exception of the Kinect 2.0 and the iPhone “hack” mentioned earlier, most biofeedback sensors exist as an external, wearable peripheral – increasing the barrier to entry. This need for extra hardware is, of course, never ideal as it requires the consumer to make an extra purchase (or buy a potentially costly bundle). It also increases the likelihood of technical/compatibility/user errors.

As sensors such as the FuelBand and FitBit become more and more ubiquitous (and means of interpreting biofeedback data via plugins and the like become more standardized) this challenge will become less and less of an issue. Nonetheless, for now, it’s a key hurdle to be overcome.

Sensor Reliability

Some devices and metrics are simply more reliable than others, and few, if any, are foolproof for all users. For example, the NeuroSky headset doesn’t work well if you have a lot of hair (I’ve never been able to get it to work all that well on me). HRV is based on the variation in the timing of the user’s heartbeats (not the heart rate itself). As such, games that use HRV (like Nevermind) inherently accommodate most individuals regardless of their natural heart rate, but we occasionally encountered players whose HR and HRV fell at either end of the extremes. One player was so inherently nervous that the game registered him as always being stressed. Another player was so cool and collected that the game never read any stress signals from him at all (we had to check the sensor to make sure it was even working!). Proper calibration of your tools helps address some of the inevitable player inconsistencies, but there is always a risk that some players will be inherently better suited to biofeedback technology than others.
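The post doesn't detail Nevermind’s calibration, but one common way to handle players like the two described above is to score each reading against that player’s own resting baseline instead of a fixed threshold. This Python sketch assumes the game already computes an HRV value such as RMSSD (in milliseconds) and that lower values indicate higher arousal; the `HrvCalibration` name and the short resting-period step are hypothetical.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class HrvCalibration:
    """Hypothetical per-player baseline built from a short resting period."""
    mean_ms: float
    stdev_ms: float

    @classmethod
    def from_resting(cls, resting_rmssd_ms: Sequence[float]) -> "HrvCalibration":
        # statistics.stdev needs at least two samples; the floor avoids dividing by ~0.
        return cls(statistics.mean(resting_rmssd_ms),
                   max(statistics.stdev(resting_rmssd_ms), 1e-6))

    def stress_score(self, rmssd_ms: float) -> float:
        """Standard deviations below this player's resting HRV (positive = more aroused than usual)."""
        return (self.mean_ms - rmssd_ms) / self.stdev_ms


nervous = HrvCalibration.from_resting([18, 20, 22])  # naturally low HRV at rest
relaxed = HrvCalibration.from_resting([58, 60, 62])  # naturally high HRV at rest
# Neither player looks stressed at their own baseline, and the same
# relative drop scores the same for both.
print(nervous.stress_score(20), relaxed.stress_score(60))
print(nervous.stress_score(16), relaxed.stress_score(56))
```

Calibration like this can't rescue a sensor that isn't picking up a signal at all, but it does stop a player's resting physiology from being mistaken for a reaction to the game.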

Putting the I/O in Biofeedback (and into your games):

As mentioned earlier, there are several relatively easy ways that you can implement biofeedback in your games using currently available tech. These include:

The iPhone (with the LED and Camera)

Kinect 2.0 (ok, not currently available, but soon!)

Devices such as the NeuroSky headset

Webcams

Homemade sensors (many people have built effective HRV and GSR sensors using an Arduino).

And many more are becoming available each day. Even while I was writing this, I received a newsletter from Indiegogo featuring a new sensor.

Once you have the sensor hooked up to the game, you will need to determine how to tell the software what to do with it. The Nevermind team was fortunate enough to be able to meet with a number of experts and advisors, both at USC and within the industry, who helped guide us in translating the biometric data into meaningful information about a player’s psychological state. However, there is basic information online on how biometrics can be read; you just need to be willing to do a little research. Personally, I see the increasing availability of this information as a promising sign that this technology is truly on the brink of going mainstream.

With that, this is all a very long-winded way of saying that biofeedback is about to make its debut into the mainstream market in a very big way. And we, as game developers, have the opportunity to start having fun with this technology today! Woot! There aren’t as many barriers to entry as you may think there are and, really, the biggest challenges we as developers face lie not in figuring out the hardware but in figuring out how to make the most compelling game experiences to delight, enrich, and move our players in brand new ways.

We’re all smart, curious people here and I can't wait to see what we all can come up with!

About Erin: Erin Reynolds is a 10-year veteran of the games industry who has worn many hats, including those of designer, artist, producer, and more. She is currently the creative director of Nevermind, the first of many projects that reflect her vision of making edgy games for good by leveraging new, existing, and emergent technology.
