
Design Lessons from ALT.CTRL's Emotional Fugitive Detector

Have you ever wondered how a unique alternative controller game gets designed? In this mini postmortem I go through how we designed Emotional Fugitive Detector, and hopefully provide some insights you can use on your own installation game!

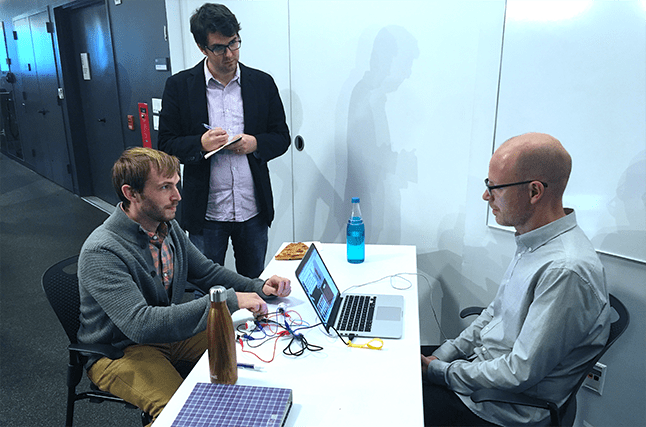

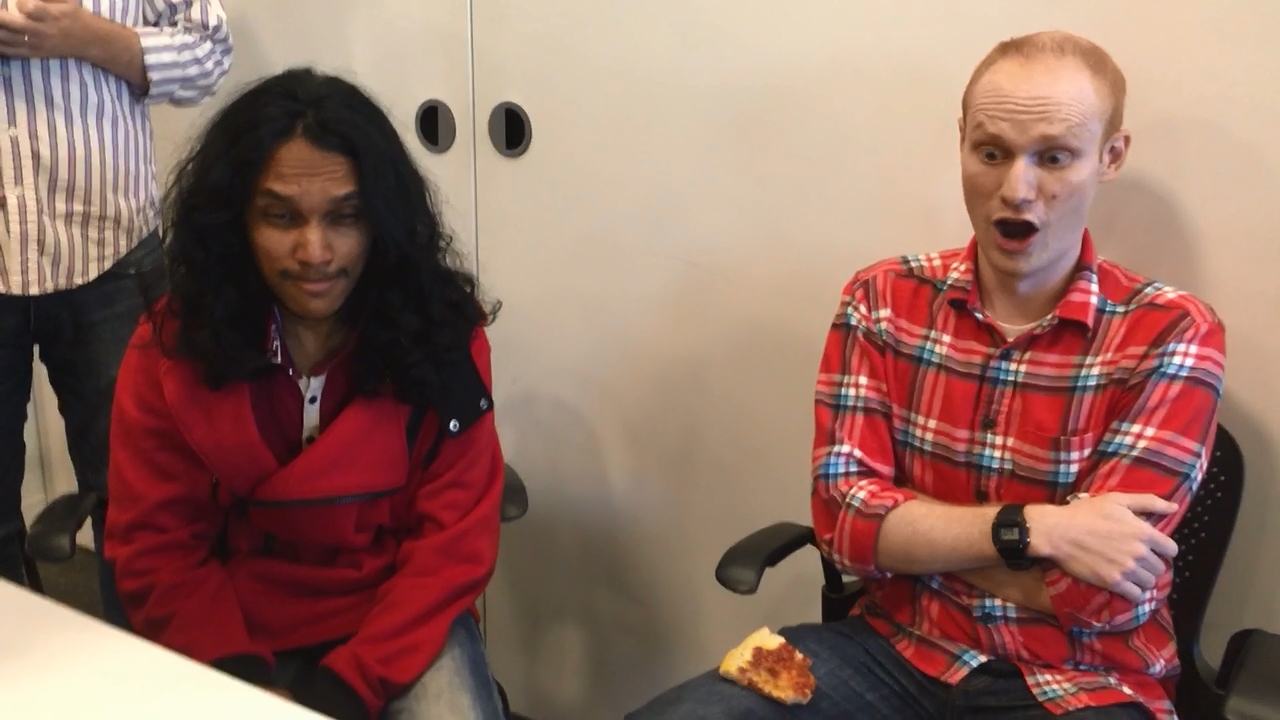

Earlier this month, we showed an alternative controller game that I designed with Sam Von Ehren and Noca Wu at GDC's ALT.CTRL exhibition. ALT.CTRL is a showcase of games with unique controllers. Throughout the exhibition, attendees were curious about our design and development process for Emotional Fugitive Detector. I wanted to share some info on that process, as a sort of mini postmortem, in case it’s useful to any future designers of games using novel input methods. So here are the steps to designing a dystopic face-scanning game!

About the Finished Game

Emotional Fugitive Detector is a two-player cooperative game where players work together to outwit a malevolent face-tracking robot. One player is scanned, and tries to get their partner to pick the emotion they’re expressing with their face. If they’re too expressive, though, the face-tracking API will detect them, and if they’re too subtle, their partner might pick the wrong emotion. It's been called "unfriendly tech", "surprisingly affecting", and, uh... "the tech itself was a little janky... but the concept is so good"! It’s a game about emotional nuance, where a human face is both the controller and the screen. But it didn’t necessarily start off that way…

Step 1: Have A Weird Idea & Be Inspired

Sometime early last year, we read about some open source face-tracking libraries somewhere or other. And, as game designers often do, we thought it might make for a pretty cool game. We originally wanted to make a face fighting game, where players would make different faces at each other to attack or block. But it never progressed past the preliminary idea phase until we attended last year’s ALT.CTRL show. Seeing so many amazing games that created unique in-person experiences inspired us to move beyond the idea phase and actually make something.

Step 2: See If Anyone Else Had That Idea Already

So we knew we wanted to make a game using face-tracking. The first thing we did, though, was something I often do when exploring a new mechanic: see if someone else has done it before! I think this is a neglected part of the process, because games as an art form have such a short memory of their own history. But if you take the time to do some research, you're not just saving yourself the potential embarrassment of rehashing what you thought was a new idea, you're also able to learn from the successes or failures of your predecessors. (Also, I just love games history.)

In our case we didn't turn up much. While body tracking had been explored in many Kinect games, we found very few games using facial input (to wiser people, the dearth of examples might have been a red flag). The only ones we could find were various tech demos, or simple games where the face was just a substitution for a button. Games like Eye Jumper or Face Glider have players using their face to make inputs, but in a very direct manner to steer or jump.

Seeing those other games helped clarify for us that we wanted to use the affordances of both face-tracking and the human face as integral parts of the design. Using facial movement as an input is something you could explore better in VR, for instance. So we wanted to detect expressions, and have that be the primary method of play. Our brains are wired to read faces, but it's not a skill we're often asked to use in games.

Step 3: Feasibility Test Your Tech

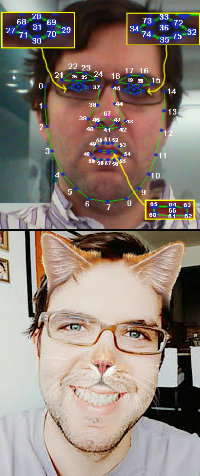

Now it was time to start putting our ideas into practice. Initially we were using OpenCV, by far the best documented open source library for face-tracking. Using a variety of techniques, it makes it easy to detect facial points in photos or videos, similar to what you see in the face filters on Snapchat. It’s a great library and has an easy-to-implement plugin for Unity as well. However, it’s primarily a facial detection method only; it provides you with the points on a face and nothing more. While this is all you need if you're superimposing cat ears onto someone (a noble goal), we wanted to detect expression changes like smiling or frowning. We tried building our own methods to determine these. While they sort of worked… it turns out facial gesture recognition is a non-trivial problem! But thankfully other people had trodden this path before us. We ended up finding a JavaScript library called clmtrackr, which uses a methodology similar to OpenCV's, but had already been trained against a library of faces so it could output confidence scores for four emotions (sad, happy, angry, and surprised).
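
As an illustration only (this is not the game's actual code, and it does not use clmtrackr's API), here is a minimal Python sketch of how per-emotion confidence scores might be turned into a single game input. The function name `detect_emotion`, the 0-to-1 score range, and the `threshold` value are all assumptions made for the example.

```python
from typing import Dict, Optional

# The four emotions named in the post.
EMOTIONS = ("sad", "happy", "angry", "surprised")


def detect_emotion(scores: Dict[str, float], threshold: float = 0.5) -> Optional[str]:
    """Return the highest-scoring emotion, or None if none clears the threshold.

    `scores` maps emotion names to confidence values, assumed to lie in [0, 1].
    Missing emotions are treated as a score of 0.
    """
    best = max(EMOTIONS, key=lambda emotion: scores.get(emotion, 0.0))
    if scores.get(best, 0.0) >= threshold:
        return best
    return None


# Hypothetical readings for one video frame.
frame_scores = {"sad": 0.05, "happy": 0.82, "angry": 0.03, "surprised": 0.10}
reading = detect_emotion(frame_scores)
```

A threshold like this is one simple way to separate "the tracker is fairly sure" from "the tracker is guessing", which matters a lot once real faces and real lighting get involved.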


I want to emphasize that we are game designers, not researchers, computer scientists, visual recognition experts, or anything like that. Anyone with genuine interest in this area would likely be horrified at our shortcuts and hacks. Rather, we were consciously repurposing a tool to turn it into a game experience.

So it took some doing, but we had our initial technology up and running within a month or two. We could read four emotions from human faces, and use those as inputs into a game system. While initially we weren’t sure the technology would even be feasible, it hadn't taken long to get something working relatively well. Though 'relatively' is the operative word there.

Step 4: Playtest Forever

Sam, Noca and I met at the NYU Game Center, where the three of us are finishing our MFAs. A core principle in the Game Center's approach to game design is playtesting. Playtesting all the time. Interactive systems are almost impossible to judge a priori, so something that seems great in your head can fall apart when someone who's unfamiliar with it plays. The program hosts a weekly playtest night called Playtest Thursday where students, faculty and local developers test games and get feedback from the public (said public being predominantly undergrads there for the free pizza).

We went almost every week for several months, testing different gameplay mechanics. This was invaluable for learning the affordances of the technology. It was very persnickety. While it worked great in ideal conditions (i.e., when we tested it ourselves), testing with real people revealed several limitations. It was far from 100% accurate, extremely sensitive to how the subject was lit, and if someone moved their head even slightly then it was all over.

As our initial prototypes grew from feasibility tests into game prototypes, we also struggled to make a game that was in any way fun. These early prototypes centered on being read by the computer. It would tell you what it was looking for, and the first player to be successfully detected would win. We kept trying to use the design of the game to compensate for or hide the limitations of the technology. We'd test narrative framing to explain why the "AI" was so capricious, or use turn-based mechanics to hide the slow recognition speed. It’s very frustrating testing ideas and finding they don’t work! But we kept at it, iterating constantly.

Step 5: Keep Going Till You Find a Great Idea

The watershed moment for us was realizing the detection margin of error could be an asset to the design, rather than a liability. Matt Parker, a game designer and artist with experience in physical installation games, told us about a game he had worked on where players tried to avoid being detected by a Microsoft Kinect. Players had to contort their bodies into weird non-human shapes to win. The genius of that was immediately obvious: if players were trying to avoid being detected by our emotion scanning, rather than attempting to conform to the faulty algorithm, we could build a game around that. Turning a bug into a feature is a great way to stumble on great game design.

The rest fell into place very quickly. A charades-like format, with one player attempting to communicate with another, worked very well in testing. This, coupled with a hidden information mechanic, provided an excellent framework to the game.
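
To make the resulting rules concrete, here is a rough Python sketch of how a single round might be resolved. It is a hedged reconstruction from the description in this post, not the game's real implementation: `resolve_round`, the `Outcome` names, the per-frame score dictionaries, and the `threshold` value are hypothetical, and the sketch assumes the robot only "catches" a player when the secret target emotion itself scores too high.

```python
from enum import Enum
from typing import Dict, List


class Outcome(Enum):
    CAUGHT_BY_ROBOT = "caught by robot"  # the face was too expressive
    WRONG_GUESS = "wrong guess"          # the face was too subtle
    SUCCESS = "success"                  # partner read it, robot did not


def resolve_round(
    target: str,
    frames: List[Dict[str, float]],
    partner_guess: str,
    threshold: float = 0.6,
) -> Outcome:
    """Resolve one round of the charades-style game.

    `target` is the hidden emotion known only to the scanned player.
    `frames` holds one score dictionary per camera frame during the scan.
    """
    # Hidden information: the robot wins if it ever spots the target emotion.
    for scores in frames:
        if scores.get(target, 0.0) >= threshold:
            return Outcome.CAUGHT_BY_ROBOT
    # Otherwise the round depends on whether the partner read the face correctly.
    if partner_guess != target:
        return Outcome.WRONG_GUESS
    return Outcome.SUCCESS


# Hypothetical scan: a subtle smile that stays under the robot's threshold.
subtle_scan = [{"happy": 0.35}, {"happy": 0.50}, {"happy": 0.42}]
result = resolve_round("happy", subtle_scan, partner_guess="happy")
```

Notice that the tracker's unreliability now works in the players' favor: every reading the algorithm misses is a little more room for the two humans to communicate.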

A well-designed system is critical to a good game experience. Occasionally players at GDC would remark on how interesting the underlying technology is, and would speculate about how fun it would be even without the surrounding game. I can tell you empirically that this is totally false!

Step 6: Bottom-up Design

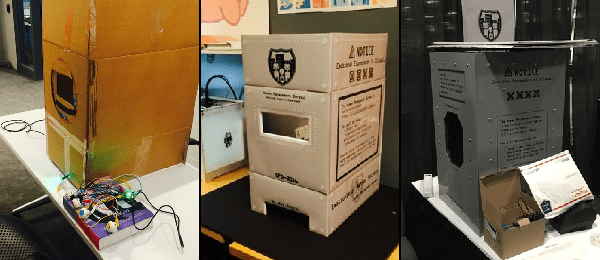

The finished game seems like it was designed in a top-down manner: A dystopic future, robots versus emotions, a scanning aperture and so on. But in fact, all of the physical design was driven purely by the needs of the game design. Why would you try to convey emotions subtly? Because they’re illegal! How can we get players to stop moving their heads and screwing up the tracking? By having them stick their faces into a hole to constrain their movement! How can we ensure consistent lighting? By enclosing the camera in a box!

Every part of the physical and narrative design is serving the game, in a way that feels organic. That’s actually the part of the project I’m most proud of! Although the physical design of the box improved dramatically over different builds, the core essentials were present when it was just a discarded cardboard box!

Step 7: Polish & Keep Playtesting Forever

Everything after that was just polish. We put in audio, improved the box design, and experimented with different timing in the gameplay. We even recorded voiceover instructions with a proper voice actor (at a very friendly rate on account of being married to me). While this process lasted a long time, it consisted primarily of incremental improvements to the core ideas. The game was essentially done after only a few months, and the remaining time was spent improving the implementation and ensuring a good player experience.

We also never stopped playtesting during this time, whether at Playtest Thursday or by taking it to local events like BQEs and Betas at the Brooklyn Brewery. Constant feedback from real people is critical at every step of a game's development. Even after showing it at GDC, none of us think of the game as 'done', and there are many improvements we identified while showing it there.

So I hope you find that useful! This is only an overview, but I wanted to give an idea of how we went from a vague idea about face-tracking to a complete game experience. Designing for physical spaces using unproven technology is incredibly difficult, but also very rewarding. Some players might only play your game once in their life, but the experience they have can be unique and interesting, and it can be something impossible to replicate with conventional controllers. So why not start designing your own?
