Deep Learning for Pattern Recognition in Movements of Game Entities

A look at how deep learning can be used to recognize patterns in the movements of players, with the goal of creating more dynamic and adaptive AI agents.

Introduction

With the increase in resources being dedicated towards AI, developers have started experimenting with different ways of creating more dynamic agents and AI systems. In this article, we'll be presenting a deep learning technique that attempts to recognize different playstyles in player movements. Exposing this information to a game's developers could allow AI designers to create agents that adapt as the player tries new strategies, while retaining full control of their behavior. We'll be taking a look at the implementation of the technique, as well as possible improvements and applications. This article is based on my thesis for the Master in Game Technology at BUas, which can be found here.

Deep learning for games

Lately, we've been seeing deep learning take over in several different fields, including the games industry. Neural networks offer solutions to various problems that used to be difficult to tackle. This raises the question of whether they could be used to make game AI more interesting and dynamic. Plenty of research has shown that neural networks can be trained to control agents in complex games, but the majority of this research is aimed at simulating intelligence rather than at actual game development. Although these trained agents show complex decision-making capabilities, they suffer from two major problems. First of all, when it comes to games, the goal of AI agents is not to be as effective as possible at defeating the player. Their goal is rather to pose a challenge that can be overcome by the player. Secondly, neural networks are hard to debug. Therefore, it's hard to predict or alter their behavior once they're trained.

Different solutions to these problems have been attempted, yet none of them have convinced developers to step away from more traditional AI architectures like finite-state machines and behavior trees. Although these techniques cannot adapt to the player, and tree-like structures can get very complex, they do give the developer full control over the AI's behavior. This allows them to craft fine-tuned experiences for the player. However, the inability of the AI to adapt to the player's playstyle can often ruin the challenge or player experience. If we want to adapt our agents to player behavior, while retaining full control, we need a way to combine the strengths of deep learning with the control of traditional techniques.

Methodology

Our approach to this problem was to train a neural network to analyze how the player plays the game against a normal, scripted AI. To assess the capabilities of a neural network for this task, we conducted an experiment using a slightly modified version of Unity's open-source "Tanks!" game. Two game modes were added to the game, each enforcing a specific playstyle. The timer mode was designed to force the player to play aggressively, while the second game mode made the player start with only 1 health remaining, which pressured them into a more defensive playstyle. This game was used to collect input data to train our neural network.

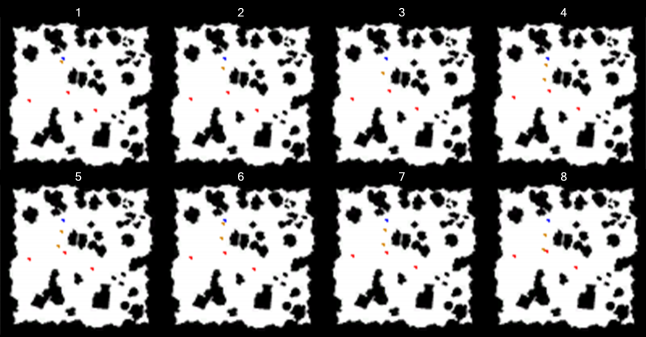

The method we applied was based on successful research in sports analysis, where neural networks were trained to classify different strategies in basketball, using player movements as input. In our case, the movements of all relevant game entities were saved and converted into sequences of images. These sequences were in turn used as input for our neural network, which tried to classify them in three different ways:

Per player

Per action

Per game mode

Below is a snippet of 8 frames of one of these sequences used as input for our neural network.
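To make the conversion from recorded movements to image sequences more concrete, here is a minimal sketch of how such frames might be rendered. It is not the code used in the experiment: the colour per entity type, the `render_frame` and `render_sequence` helpers and the world size are all assumptions made for illustration. Only the 100x100x3 frame size is taken from the network's input shape.

```python
import numpy as np

FRAME_SIZE = 100  # matches the 100x100x3 input shape of the network

# Hypothetical colour per entity type; the actual encoding may differ.
ENTITY_COLOURS = {
    "player": (1.0, 0.0, 0.0),
    "enemy": (0.0, 1.0, 0.0),
    "pickup": (0.0, 0.0, 1.0),
}


def render_frame(entities, world_size):
    """Draw one game tick as a FRAME_SIZE x FRAME_SIZE RGB image.

    entities: list of (kind, x, y) tuples with 0 <= x, y < world_size.
    """
    frame = np.zeros((FRAME_SIZE, FRAME_SIZE, 3), dtype=np.float32)
    scale = FRAME_SIZE / world_size
    for kind, x, y in entities:
        col = min(int(x * scale), FRAME_SIZE - 1)
        row = min(int(y * scale), FRAME_SIZE - 1)
        frame[row, col] = ENTITY_COLOURS[kind]
    return frame


def render_sequence(ticks, world_size):
    """Stack the frames of consecutive ticks into (time, height, width, 3)."""
    return np.stack([render_frame(tick, world_size) for tick in ticks])


# Two ticks of a made-up match in a 50x50 world.
ticks = [
    [("player", 10.0, 10.0), ("enemy", 40.0, 25.0)],
    [("player", 11.0, 10.5), ("enemy", 39.0, 25.0)],
]
sequence = render_sequence(ticks, world_size=50.0)
print(sequence.shape)  # (2, 100, 100, 3)
```

Each entity is drawn as a single pixel here to keep the sketch short; drawing larger shapes or trails would be an equally valid choice.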

The neural network

We used a recurrent convolutional neural network (RCNN) for the classification task. This combination of deep learning techniques was necessary due to the nature of our input. Recurrent neural networks are capable of dealing with sequences as input, while convolutional neural networks are designed to extract features from images. As our input came in the form of sequences of images, using an RCNN allowed us to extract spatio-temporal features from a sequence. In simpler words, the network tried to find patterns in the movements of the game entities.

The network was implemented in Keras, running on top of TensorFlow. Keras is a high-level Python API which allowed fast iterations of the training configuration and layer setup of the network. It provides different solutions to combine recurrent with convolutional neural networks. We used the ConvLSTM2D layer, which is a built-in combination of a convolutional layer and an LSTM layer. LSTM refers to long short-term memory, which is an improved version of the traditional recurrent neural network architecture. The network used a categorical cross-entropy loss function to learn to determine which class a sequence belonged to.
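As a small worked example of the loss function, the sketch below computes categorical cross-entropy by hand for a single sample with three made-up classes. The `categorical_cross_entropy` helper and the probability values are purely illustrative; Keras computes the same quantity internally.

```python
import math


def categorical_cross_entropy(one_hot_target, predicted_probs, eps=1e-7):
    """Return -sum(t_i * log(p_i)) for a single sample."""
    return -sum(
        t * math.log(max(p, eps))
        for t, p in zip(one_hot_target, predicted_probs)
    )


target = [0.0, 1.0, 0.0]  # one-hot: the true class is index 1
confident_right = [0.05, 0.90, 0.05]
confident_wrong = [0.90, 0.05, 0.05]

loss_right = categorical_cross_entropy(target, confident_right)
loss_wrong = categorical_cross_entropy(target, confident_wrong)
print(round(loss_right, 3), round(loss_wrong, 3))  # 0.105 2.996
```

The loss is small when the network assigns a high probability to the correct class and grows quickly when it is confidently wrong, which is what pushes the softmax output towards the right label during training.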

```python
from keras import optimizers
from keras.layers import (BatchNormalization, ConvLSTM2D, Dense, Flatten,
                          GaussianNoise)
from keras.models import Sequential

# nClasses (number of target classes) and sequence_generator (yields batches
# of image sequences with their labels) are not shown in this snippet.

# Create neural network
seq = Sequential()
# Input: variable-length sequences of 100x100 RGB frames
seq.add(GaussianNoise(0.1, input_shape=(None, 100, 100, 3)))
seq.add(ConvLSTM2D(filters=50, kernel_size=(3, 3),
                   padding='same', return_sequences=True))
seq.add(BatchNormalization())
seq.add(ConvLSTM2D(filters=50, kernel_size=(3, 3),
                   padding='same', return_sequences=True))
seq.add(BatchNormalization())
# The last recurrent layer only returns its final output
seq.add(ConvLSTM2D(filters=50, kernel_size=(3, 3),
                   padding='same', return_sequences=False))
seq.add(BatchNormalization())
seq.add(Flatten())
seq.add(Dense(nClasses, activation='softmax'))

adadelta = optimizers.Adadelta(lr=0.0001)
seq.compile(loss='categorical_crossentropy', optimizer=adadelta,
            metrics=['accuracy'])

# Train the network
seq.fit_generator(sequence_generator(),
                  steps_per_epoch=200,
                  epochs=80,
                  validation_data=sequence_generator(shouldAugment=False),
                  validation_steps=20)
```
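The training call relies on a `sequence_generator` function that is not shown. As a rough idea of what such a generator might look like, here is a hypothetical stand-in that serves random data. Only the function name, the `shouldAugment` parameter and the frame size come from the code above; the dataset, batch size, sequence length and the noise-only augmentation are assumptions.

```python
import numpy as np

N_CLASSES = 2            # e.g. the two game modes
FRAMES_PER_SEQUENCE = 8  # assumed sequence length
BATCH_SIZE = 4           # assumed batch size

# Stand-in dataset: random "recordings" with integer class labels.
rng = np.random.default_rng(seed=0)
SEQUENCES = rng.random((12, FRAMES_PER_SEQUENCE, 100, 100, 3), dtype=np.float32)
LABELS = rng.integers(0, N_CLASSES, size=12)


def sequence_generator(shouldAugment=True):
    """Yield (inputs, one_hot_labels) batches forever."""
    while True:
        idx = rng.choice(len(SEQUENCES), size=BATCH_SIZE, replace=False)
        batch_x = SEQUENCES[idx].copy()
        if shouldAugment:
            # Placeholder augmentation: add a little Gaussian noise.
            noise = rng.normal(0.0, 0.05, batch_x.shape).astype(np.float32)
            batch_x = batch_x + noise
        # One-hot labels, as categorical cross-entropy expects.
        batch_y = np.eye(N_CLASSES, dtype=np.float32)[LABELS[idx]]
        yield batch_x, batch_y


batch_x, batch_y = next(sequence_generator(shouldAugment=False))
print(batch_x.shape, batch_y.shape)  # (4, 8, 100, 100, 3) (4, 2)
```

The generator never terminates on its own, which is why the training call has to specify how many batches make up one epoch.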

Gathering training data

We decided to train the network using supervised learning. Therefore, labeled data was required. A group of players was invited to play the 'Tanks!' game, and their movements in-game were saved and labeled. The labeling process was automated. Games were saved and converted to sequences along with the information of which participant was playing and which game mode was being played. Furthermore, it was also recorded whenever one of the following actions happened:

The game started

The player killed an opponent

The player died

Unlimited ammo pickup consumed

Health pickup consumed

As the dataset was relatively small, augmentations such as noise, rotations and shifting were added to enlarge the dataset and prevent the model from overfitting.
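The sketch below illustrates what these augmentations could look like for a single image sequence. It is an assumption-laden example rather than the original implementation: the `augment_sequence` helper, the restriction to 90-degree rotations, the maximum shift and the noise level are all chosen for illustration.

```python
import numpy as np


def augment_sequence(sequence, rng, noise_std=0.05, max_shift=5):
    """Return a randomly rotated, shifted and noised copy of one sequence.

    sequence: array of shape (time, height, width, channels), square frames.
    The same rotation and shift are applied to every frame so that the
    movement pattern stays consistent over time.
    """
    quarter_turns = int(rng.integers(0, 4))
    out = np.rot90(sequence, k=quarter_turns, axes=(1, 2))

    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    # np.roll wraps pixels around the border; padding would be an alternative.
    out = np.roll(out, shift=(int(dy), int(dx)), axis=(1, 2))

    noise = rng.normal(0.0, noise_std, size=out.shape)
    return (out + noise).astype(sequence.dtype)


rng = np.random.default_rng(seed=42)
original = np.zeros((8, 100, 100, 3), dtype=np.float32)
original[:, 50, 20, 0] = 1.0  # one entity standing still
augmented = augment_sequence(original, rng)
print(augmented.shape)  # (8, 100, 100, 3)
```

Applying one transform to the whole sequence, instead of a different one per frame, keeps the trajectory intact, which matters when the network is supposed to learn from movement over time.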

Results

After training, the results varied across the three classification tasks:

The network classifying players peaked at an accuracy of 53%, with a chance performance of 1/9 ≈ 11%.

Classifying actions performed worse than originally anticipated, but still reached an accuracy of 52% with a chance performance of 1/6 ≈ 17%.

The network showed some ability to recognize which game mode the player was playing, but only managed to reach 72% accuracy with a chance performance of 1/2 = 50%.
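To put these accuracies in perspective, each can be compared with its chance level. The short sketch below does this arithmetic, assuming the classes are equally frequent (which is what the 1/N chance levels imply). The `lift_over_chance` helper is an illustrative name, not part of the original experiment.

```python
# Accuracy and number of classes per task, as reported above.
results = {
    "player": {"accuracy": 0.53, "classes": 9},
    "action": {"accuracy": 0.52, "classes": 6},
    "game mode": {"accuracy": 0.72, "classes": 2},
}


def lift_over_chance(accuracy, n_classes):
    """How many times better than uniform random guessing the model is."""
    return accuracy * n_classes


lifts = {}
for task, r in results.items():
    chance = 1 / r["classes"]
    lifts[task] = lift_over_chance(r["accuracy"], r["classes"])
    print(f"{task}: chance {chance:.0%}, accuracy {r['accuracy']:.0%}, "
          f"{lifts[task]:.1f}x chance")
```

Seen this way, the player classifier is furthest above its baseline (roughly 4.8 times chance), while the game mode classifier has the highest raw accuracy but the smallest margin over guessing (roughly 1.4 times chance).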

Limitations

Although results weren't up to par with expectations, the network still showed potential for each classification task. The poor results are likely caused by three factors:

The hardware limitations. The network was trained on a GeForce GTX 1070 with 8 GB of VRAM. Due to the limited amount of VRAM, suboptimal training parameters had to be used and our sequences had to be sliced, possibly losing important information. The depth of the network was also restricted due to these limitations. It's plausible that a deeper neural network would achieve better results, considering neural networks can learn a more complex, non-linear function as they become deeper, therefore allowing them to find more complex patterns.

The quality of the data. The participants were new to the game. Therefore, during a portion of their games, they were still getting used to controls and gameplay. This may have meant that they neglected the game mode in those games.

The size of the dataset. A total of 297 games were played, by 9 different players. Considering neural networks are usually trained on immense datasets, 297 games is a rather small dataset.
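The slicing of sequences mentioned under the hardware limitations could be done with a simple sliding window. The following is a minimal sketch under assumed parameters: the `slice_sequence` helper, the window length and the stride are hypothetical and not the values used in the experiment.

```python
def slice_sequence(frames, window, stride):
    """Split a list of frames into windows of `window` frames.

    Consecutive windows start `stride` frames apart; a trailing remainder
    shorter than `window` is dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [
        frames[start:start + window]
        for start in range(0, len(frames) - window + 1, stride)
    ]


frames = list(range(20))  # stand-in for 20 recorded frames
windows = slice_sequence(frames, window=8, stride=4)
print(len(windows))  # 4
print(windows[-1])   # [12, 13, 14, 15, 16, 17, 18, 19]
```

Shorter windows reduce memory use per sample, but any pattern that spans more frames than one window can no longer be seen by the network, which is one way information may have been lost.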

Discussion

The most successful of the three experiments was the player classification process. From the results, it can be concluded that the network can identify spatio-temporal patterns that are unique to each player. However, it cannot be assumed that these patterns indicate a specific playstyle. They may be related to factors such as the player’s experience, stress level, etc. As most of these factors were not measured for this experiment, it cannot be established which kinds of patterns the network uses to classify its input, only that they are, to some extent, unique to the player.

When classifying actions, the network seemed to find patterns unique to certain actions, but often misinterpreted other actions. It is possible that not all the classes were distinct actions, but rather events that happened, not necessarily related to the player's movements. For example, the player hitting themselves was often misclassified, but was probably never intended by the player, and therefore not reflected in their movements.

Lastly, the network showed some capability to identify which game mode a sequence came from. The only difference between these sequences is how the players approached the level. Therefore, while the network’s accuracy was not particularly high, it can still be concluded that the network was able to identify patterns that indicate a certain playstyle.

Further research

Given the results show potential in all three categories, this opens up avenues for further research in this area. The technique presented in our research could be improved in multiple ways:

The most obvious improvement of our network is related to the hardware issues. A deeper network may achieve better results, especially combined with a larger dataset.

It could be interesting to see how a similar network would perform on other games. Preferably games that have existed over longer periods of time, with established strategies. This would improve the quality of the input data and make the labeling process easier.

A different representation of the data could be used. Using images restricts the input data to only movements of game entities. Not all gameplay choices necessarily correlate to movements, and therefore cannot be added to our network's input. Changing the way we represent our input could solve this problem. For example, we could use the player's inputs, alongside their current game-state and relative position as input for the network. Due to the novelty of this classification task, the optimal way to represent input is not yet determined.

The effects of stress, gaming experience, focus, etc. could also be measured in future experiments, to see how they relate to player movements. This could also give us more insight into the origin of the patterns the network uses to classify sequences.

Finally, the labeling process is currently a limitation of the technique. If we could use unsupervised learning to identify patterns that indicate the usage of certain strategies, this process could be eliminated.

Creating the adaptive AI

The original intent of the research was to find patterns that indicate the usage of a particular playstyle, and use this information to create an adaptive AI. To avoid this new information further increasing the complexity of behavior trees or finite-state machines, we suggest an approach using utility AI. Utility AI is a system that chooses which action an agent will take by comparing their expected utility values. These values are calculated depending on the game-state. By increasing or decreasing the expected utility value of specific actions depending on the player’s current playstyle, an agent can be steered towards a more fitting playstyle against that player. For example, when playing versus a defensive playstyle, the agent's offensive actions can be given an increase in expected utility value, and vice versa.
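The idea can be sketched in a few lines. The example below is a simplified illustration, not an implementation from the research: the `Action` class, the modifier values and the action names are all hypothetical, and in a real system the base utilities would be computed from the game-state rather than hard-coded.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    category: str        # "offensive" or "defensive"
    base_utility: float  # would be computed from the game-state


# Hypothetical utility modifiers per detected player playstyle.
PLAYSTYLE_MODIFIERS = {
    "defensive": {"offensive": 0.2, "defensive": -0.1},
    "aggressive": {"offensive": -0.1, "defensive": 0.2},
}


def choose_action(actions, player_playstyle):
    """Pick the action with the highest playstyle-adjusted utility."""
    modifiers = PLAYSTYLE_MODIFIERS.get(player_playstyle, {})

    def adjusted_utility(action):
        return action.base_utility + modifiers.get(action.category, 0.0)

    return max(actions, key=adjusted_utility)


actions = [
    Action("rush player", "offensive", 0.5),
    Action("take cover", "defensive", 0.6),
]
print(choose_action(actions, "defensive").name)   # rush player
print(choose_action(actions, "aggressive").name)  # take cover
print(choose_action(actions, "unknown").name)     # take cover
```

Because the classifier only nudges the utility values, the designer still decides which actions exist and how strongly each playstyle may influence them. When no playstyle is recognized, the agent simply falls back to its unmodified behavior.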

Other possible applications

Patterns unique to individual players may also prove useful. If the exact origins of these patterns could be determined, this information could be used by the game developer to adjust gameplay at runtime. For example, they could be an indication of stress or boredom. Access to the current stress level of a player could allow the developer to enhance stressful moments and avoid boring experiences. Alternatively, they may also indicate whether or not a player is struggling with controls or the difficulty of the game. Such information could allow for a dynamic difficulty system, or prompt UI pop-ups to help the player.

Furthermore, they could be used to prevent people from account sharing. These practices are against the rules of many games. For example, competitive games frequently struggle with skilled players boosting other players (increasing their in-game rank by playing on their account, often in exchange for money). Such abuses could be detected using spatio-temporal pattern recognition.

Recurrent neural networks can also learn to predict what comes next in a sequence. This would allow us to predict player movements, which can be useful when implementing AI behavior, or could aid in making game content more dynamic and compelling.

Lastly, this technique could be used for bot detection in games. Although this concept was not tested in our research, the proposed methodology could be used to assess if a neural network can differentiate a real player from a bot, based on their movements. If successful, this could be used to detect gold farmers, usage of third-party scripts, etc.

Conclusion

The goal of our research was to find a way to use deep learning to create an adaptive, yet controllable and predictable game AI. To achieve this, a neural network was created that identified spatio-temporal patterns in movements of game entities, in particular patterns that indicate the usage of a certain playstyle. Three classification experiments were conducted, each with varying success. From the results, we deduced that players exhibit unique movements that differentiate them from other players. The network also demonstrated some ability to recognize game-specific actions based on player trajectories. Lastly, the model showed potential when classifying movement sequences per game mode, which implies that it can distinguish different playstyles. Following these results, we suggest an approach using utility AI in combination with the proposed technique to adapt agents to player behavior. Finally, we speculated about some of the other potential applications of our neural network.
