Featured Blog | This community-written post highlights the best of what the game industry has to offer.

Gathering Sky: Audio Journal #3

Another journal entry about the audio development of Gathering Sky, covering audio implementation within Fmod and Java.

(This is the third of the journal entries covering the audio development of the game Gathering Sky. If you missed Audio Journals Pt 1 and Pt 2, you can find them here and here.)

My intention was to run this journal alongside the development of the game (which I did in the first two journals), but development is now complete, so this last journal is more of a look back at some takeaways that might benefit other indie studios or audio folks working in indie games.

IMPLEMENTATION FIXATION

In the last couple of years, audio middleware has fallen so easily into the hands of indie game developers that it’s become a ‘no brainer’ to take advantage of these tools. All of the major audio middleware packages offer free or cheap licenses for indies, non-profits and educational institutions, and they simply make our jobs easier and give us more control. While it does take some code to trigger sound events, once that is set up, the audio team can handle a lot of the other nitty-gritty work and the engineers can focus on other things.

I mentioned to a friend recently that Gathering Sky is probably the game I’m proudest of so far, and when prodded as to why, one of the clearest reasons was my ability to iterate quickly between the game build and Fmod Studio (along with a true emotional connection to the game and a fantastic team).

THE SETUP

We had a unique situation in that Gathering Sky was built with Java. As I mentioned in a previous journal, team member John Austin coded bindings so that Fmod would work within Java. Fmod doesn’t provide an API for using it from Java, so this had to be built from scratch! My only previous experience with Java had been installing mods for Minecraft with my kids, and some of that knowledge turned out to be pretty useful.

When I created new sound events in Fmod, I had to pass the new event names on to John so he could hook up the sounds. Then I’d get a new .jar build of the game from him. Once I had the .jar, I could open up its contents, find my Fmod banks, and simply replace them. A couple of Minecraft-mod-esque steps after that turned the build back into a .jar so I could run the game.
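
The bank-swapping step can also be scripted instead of done by hand. Below is a minimal Java sketch, assuming the banks inside the .jar have a `.bank` extension and that freshly exported banks sit in a local folder under the same file names (the post doesn’t describe the exact layout, so both are assumptions). `BankSwapper` and `swapBanks` are hypothetical names; the code uses only the standard `java.util.zip` classes and copies every other entry through untouched.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

/** Hypothetical helper: copies a .jar, swapping in any .bank entry that has a newer export on disk. */
final class BankSwapper {
    private BankSwapper() {}

    /** Returns how many bank entries were replaced while writing outputJar. */
    static int swapBanks(Path inputJar, Path bankDir, Path outputJar) throws IOException {
        int replaced = 0;
        try (ZipInputStream in = new ZipInputStream(Files.newInputStream(inputJar));
             ZipOutputStream out = new ZipOutputStream(Files.newOutputStream(outputJar))) {
            ZipEntry entry;
            while ((entry = in.getNextEntry()) != null) {
                String name = entry.getName();
                // Compare by file name only, so banks can live in any folder inside the jar.
                String fileName = name.substring(name.lastIndexOf('/') + 1);
                Path candidate = bankDir.resolve(fileName);
                // A fresh entry is created so sizes and CRCs are recomputed for the new data.
                out.putNextEntry(new ZipEntry(name));
                if (!entry.isDirectory() && fileName.endsWith(".bank") && Files.isRegularFile(candidate)) {
                    Files.copy(candidate, out); // new bank bytes replace the old ones
                    replaced++;
                } else {
                    in.transferTo(out); // everything else is copied unchanged
                }
                out.closeEntry();
            }
        }
        return replaced;
    }
}
```

Because the entries are copied in their original order, the manifest stays where it was; note that rewriting a signed .jar this way would invalidate its signature.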

Once the code was calling the sounds, I could easily tweak away at the Fmod session, replace the banks, and play again...over and over and over. Without middleware, that’s far too much work to ask of your programmer (let alone describing every tweak that needs to be made). Ultimately the results became far more polished because I had this easy way to test the Fmod session in game. With more commonly used engines it’s quite easy to connect your middleware to the game while it’s running and mix it live. We didn’t quite have that luxury, but our workflow was certainly workable.

We were also able to run the Fmod profiler easily, which helped us troubleshoot sound events: we could see if and when they were triggering, and measure CPU cost, audio streams, etc. It was an incredibly valuable tool.

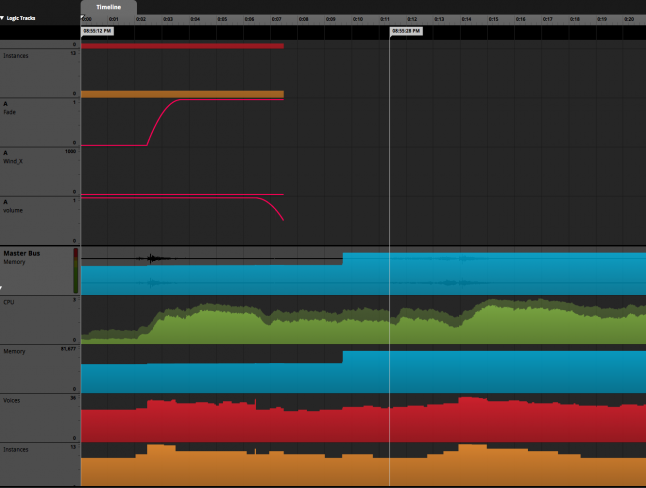

A screenshot of the Fmod Profiler showing the Master Bus in the lower half (the upper half shows one of the wind sound events).

DYNAMIC MUSIC...IN REVERSE

The typical example given when discussing a dynamic music system in games goes something like: mellow exploration music > tension rises in the music as danger or an encounter draws closer > big stinger > battle music > more intense battle music > really intense battle music > stinger > battle music backs off > mellow exploration music.

The music is communicating the danger, impending action, and building tension by way of layering musical stems (usually). But in Gathering Sky we were kind of trying to do the opposite.

The music in Gathering Sky communicates emotion and supports gameplay, much like any soundtrack, but as in every game, each player plays differently and spends more or less time in each area. In some areas we knew roughly how long a player would take to explore, so we had to figure out 1) how long the initial ‘this is a new area’ music should last and 2) how to change it to let the player know that they could move on soon.

I’m not embarrassed to say that I’ve written a fair amount of casual game music loops in my day, and when a game is looping a short piece of music it’s vital to keep the melody to a minimum. In fact, it’s almost a formula: the shorter the loop the weaker the melody should be. Otherwise ear fatigue sets in quickly and no one wants to play the game.

But I wanted Gathering Sky to have a strong melodic approach, so I used several little motifs throughout the score. During the composition and testing phase of the music (before recording), we identified many of those areas within the game and set up the recording session so that the melodies were recorded as overdubs. That way I could layer them over the foundation laid down by (usually) cello, bass clarinet and viola.

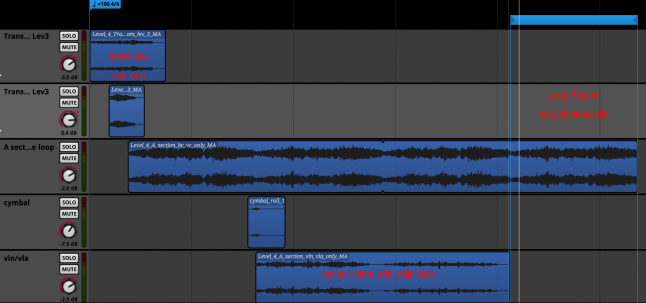

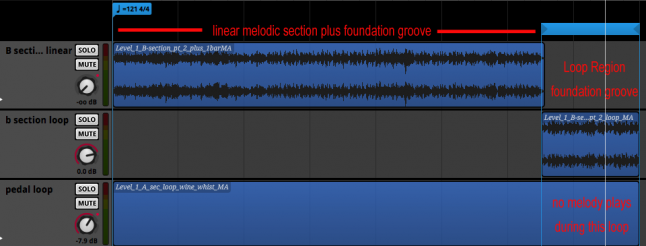

In context, the player would wander into a new area of the game, a new music cue would begin and the melodic layer would play over the foundation. If we had identified this area as needing about 90 seconds of exploration, then the melodic layer of the cue was about 90 seconds. But the cue was designed with a loop point in mind, so that the melodic parts could subside and the foundation ‘groove’ could continue to play after the initial 90 seconds of melody.

In some cases we recorded all of the parts together (melody and foundation) and then recorded just the foundation. In these cases I could simply drop these regions next to each other and set up a loop point on the foundation groove.
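
For readers newer to loop points, here is a small Java model of how such a cue plays back: the full-ensemble region (melody plus foundation) plays once, then the playhead keeps wrapping inside the foundation-only region. It is a sketch of the idea, not Fmod’s implementation; `CueTimeline` is a hypothetical name, and while the 90-second melody region comes from the example above, the 30-second groove length is made up.

```java
/** Hypothetical model of a cue: a one-shot melody region followed by a looping foundation groove. */
final class CueTimeline {
    private final double loopStart; // end of the melody region = start of the foundation-only groove (s)
    private final double loopEnd;   // end of the groove; playback jumps back to loopStart here (s)

    CueTimeline(double loopStart, double loopEnd) {
        if (loopStart < 0 || loopEnd <= loopStart) throw new IllegalArgumentException("invalid loop region");
        this.loopStart = loopStart;
        this.loopEnd = loopEnd;
    }

    /** Timeline position after `elapsed` seconds of playback. */
    double positionAt(double elapsed) {
        if (elapsed < loopStart) return elapsed; // still in the one-shot melody + foundation region
        double loopLength = loopEnd - loopStart;
        return loopStart + (elapsed - loopStart) % loopLength; // wrap inside the groove
    }

    /** The melodic layer only sounds before the playhead first reaches the loop region. */
    boolean melodyAudible(double elapsed) {
        return elapsed < loopStart;
    }
}
```

With `new CueTimeline(90, 120)`, `positionAt(125)` returns 95: the melody has ended and the groove is five seconds into its second pass.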

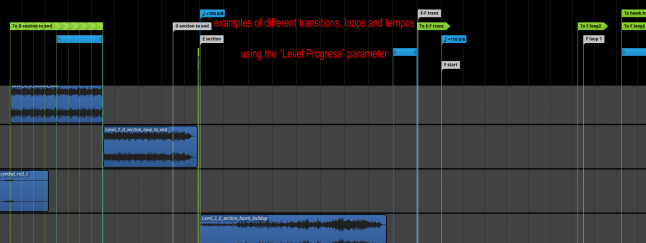

Without hearing the melody, the player would still feel the tempo/groove of the cue, but instead of ear fatigue, the player might sense that things were getting a bit stale and feel encouraged to move on to the next section. The music would move from “holy cow, something new to explore, woohoo, hey check this out, whoa!” to “so that was cool, I wonder what else there is” to “okay, I guess I’ve been here a while, let’s see what’s next.” This was all controlled by one parameter in each music event called “Level Progress”, with transitions placed throughout the timeline and set up to move playback forward based on where the player was, geographically, on the map.
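
In Fmod this logic lives in the authoring tool (transitions on the timeline with parameter conditions), and the game only has to update the parameter value. The Java sketch below just models that behavior to make it concrete: the mapping from the player’s map position to a 0..1 “Level Progress” value, the section names, and the thresholds are all hypothetical, not taken from the game.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of one music event whose sections advance when "Level Progress" allows it. */
final class LevelProgressMusic {
    /** A timeline section; the playhead may leave it once Level Progress reaches exitThreshold. */
    static final class Section {
        final String name;
        final double exitThreshold;
        Section(String name, double exitThreshold) { this.name = name; this.exitThreshold = exitThreshold; }
    }

    private final List<Section> sections;
    private int current = 0;

    LevelProgressMusic(List<Section> sections) {
        if (sections.isEmpty()) throw new IllegalArgumentException("need at least one section");
        this.sections = new ArrayList<>(sections);
    }

    /** Hypothetical mapping: fraction of the area the player has crossed, clamped to 0..1. */
    static double progressFromPosition(double playerX, double areaStartX, double areaEndX) {
        double t = (playerX - areaStartX) / (areaEndX - areaStartX);
        return Math.max(0.0, Math.min(1.0, t));
    }

    /** Called each time the playhead reaches a transition point; returns the section now playing. */
    String onTransitionMarker(double levelProgress) {
        boolean hasNext = current < sections.size() - 1;
        if (hasNext && levelProgress >= sections.get(current).exitThreshold) {
            current++; // only ever moves forward, like the cue's arc from "whoa!" to "what's next"
        }
        return sections.get(current).name;
    }
}
```

Gating the jump on a transition point, rather than jumping the instant the value changes, is what keeps the music moving on a musically sensible boundary.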

It’s certainly a backwards example of a typical dynamic music system, but I think it’s important to note that if we hadn’t had time to explore our options and experiment during the composition phase (while concurrently dropping the mockup cues into Fmod and the game build), we wouldn’t have been able to identify this solution before the recording. And if we had only recorded the melody and the foundation together, we would have been stuck with that version of the music cues. I believe I discussed the importance of building flexibility into the music cues when planning the recording session in a previous journal, and this example further illustrates that point.

SIDECHAINING BETWEEN SOUND DESIGN AND MUSIC

A wordless game such as Gathering Sky really relies on the music to help tell the story, so it needs to be audible and present. But there are certain events within the experience of Gathering Sky which demand that mother nature be heard!

When your game takes place in the sky, you’ll obviously need some wind sounds! I collected six or seven different classes of wind recordings: some recorded by me, some recorded by others (special thanks to Ann Kroeber here!), and some generated by synths, etc. Every world required a different emotion or attitude from the wind, and balancing the wind against the music was probably where I spent most of my implementation time.

When the wind had to be forceful, I used Fmod’s sidechaining feature between the wind group and the music group in the mixer. If you aren’t familiar with sidechaining, it’s similar to the ‘ducking’ you find in GarageBand for podcasts: background music plays normally until a voice starts to talk, and then the ducking (sidechaining) lowers the level of the music based on how loud and how often the voice is speaking.

So when the music got louder, the wind would back off a little, and when the music subsided, the wind would get louder. You might ask why we didn’t sidechain the other way around and let the wind become the prominent element. We tried this, and it turns out that we got really tired of listening to strong winds. It’s very fatiguing on the ears, and as previously mentioned, we needed the music to keep telling the story.
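
To show what the sidechain is doing under the hood, here is a generic compressor-style ducker in Java: an envelope follower tracks the level of the key signal (the music), and once that level passes a threshold, gain reduction is applied to the target signal (the wind). This is a textbook sketch, not Fmod’s actual effect; the class name and the threshold, ratio, and attack/release values are hypothetical.

```java
/** Hypothetical ducker: the key (music) level drives gain reduction on the target (wind). */
final class SidechainDucker {
    private final double thresholdDb;
    private final double ratio;
    private final double attackCoef;
    private final double releaseCoef;
    private double envelope = 0.0; // smoothed absolute level of the key signal

    SidechainDucker(double thresholdDb, double ratio, double attackMs, double releaseMs, double sampleRate) {
        this.thresholdDb = thresholdDb;
        this.ratio = ratio;
        // One-pole smoothing: the envelope covers ~63% of a level change in the given time.
        this.attackCoef = Math.exp(-1.0 / (attackMs * 0.001 * sampleRate));
        this.releaseCoef = Math.exp(-1.0 / (releaseMs * 0.001 * sampleRate));
    }

    /** Processes one sample and returns the ducked target sample. */
    double process(double target, double key) {
        double level = Math.abs(key);
        double coef = level > envelope ? attackCoef : releaseCoef; // fast rise, slower recovery
        envelope = coef * envelope + (1.0 - coef) * level;
        return target * gainFor(envelope);
    }

    /** Linear gain: unity below the threshold, compressed by `ratio` above it. */
    double gainFor(double env) {
        double levelDb = 20.0 * Math.log10(Math.max(env, 1e-9));
        double overDb = levelDb - thresholdDb;
        if (overDb <= 0) return 1.0;
        double reductionDb = overDb - overDb / ratio;
        return Math.pow(10.0, -reductionDb / 20.0);
    }
}
```

For example, with a -20 dB threshold and a 4:1 ratio, music held at full scale (0 dB) pulls the wind down by 15 dB; as soon as the music drops below the threshold, the wind recovers at the release rate.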

AUDIO STREAMS AND BUGS!

At one point during development we ran into a bug that took some time to solve. A particular streaming sound played whenever a recurring object appeared in the game, and sometimes there were several of these objects on screen (I’m being a bit vague here to stay spoiler-free!).

When there was only one of these objects, the looping, streaming sound always played correctly. Within this sound event (the looping one, which was set to ‘stream’), there were a few other short sounds that would eventually trigger depending on the player’s progress interacting with the object. Those short sounds were not set to stream.

In our testing, the streaming sound would drop out whenever more than one of these objects was on screen, but we would always eventually hear the non-streamed short sounds if enough progress was made. (Within Fmod, you can choose whether each audio track within a sound event is streamed or loaded into memory.)

John explained that he had several sound events set up to loop constantly throughout the game, since it was easier to bring some of these sounds in and out that way. As it turned out, we were already using a lot of the audio channels. Eventually we discovered that Fmod had a default limit of 32 simultaneous virtual channels. We changed that setting from 32 to 256, and all of our streaming audio loops were audible again. That was a big moment, because up to then we knew the streams were playing and nothing appeared to be wrong, yet the audio kept dropping out. If we had been running the profiler at that point, we would have seen the problem pretty quickly, but we hadn’t set it up yet.
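
Why can a sound be “playing” yet silent? The toy Java model below illustrates the general idea of voice virtualization: only the most audible sounds up to the voice limit get a real channel, and the rest keep running silently. Fmod’s real system weighs priority and audibility in ways this post doesn’t detail, so treat this as an illustration only. `VoicePool` is a hypothetical name, the 32 and 256 limits come from the story above, and the loop count and audibility values are made up. (In Fmod’s C/C++ API the limit is the `maxchannels` argument passed when the system is initialized; how the custom Java bindings exposed it isn’t covered here.)

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical toy voice manager: sounds beyond the limit are virtual (tracked but silent). */
final class VoicePool {
    /** One playing sound instance; higher audibility means more important to keep audible. */
    static final class Voice {
        final String name;
        final double audibility;
        Voice(String name, double audibility) { this.name = name; this.audibility = audibility; }
    }

    private final int maxVoices;
    private final List<Voice> playing = new ArrayList<>();

    VoicePool(int maxVoices) { this.maxVoices = maxVoices; }

    /** Starts a sound; it counts as "playing" whether or not it ends up audible. */
    void play(String name, double audibility) { playing.add(new Voice(name, audibility)); }

    /** Names of the voices that get a real channel, most audible first. */
    List<String> audible() {
        List<Voice> ranked = new ArrayList<>(playing);
        // List.sort is stable, so ties keep the order the sounds were started in.
        ranked.sort((a, b) -> Double.compare(b.audibility, a.audibility));
        List<String> names = new ArrayList<>();
        for (int i = 0; i < Math.min(maxVoices, ranked.size()); i++) {
            names.add(ranked.get(i).name);
        }
        return names;
    }

    /** True if the sound is playing but was pushed out of the audible set. */
    boolean isVirtual(String name) {
        return playing.stream().anyMatch(v -> v.name.equals(name)) && !audible().contains(name);
    }
}
```

With 40 always-on loops plus two object streams, a 32-voice pool leaves the streams virtual (playing, but inaudible), while a 256-voice pool makes all 42 audible, which mirrors the symptom described above.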

TAKING FLIGHT

I feel like there’s a lot more to discuss about this project, given its unique nature and the small-team, DIY approach we took to developing Gathering Sky. The game releases on 8/13/15, and I’m happy to answer more questions here once you get a chance to play through it (the entire game takes about 25-30 minutes). And if there are enough questions about a particular part of the game, I might write another journal about it.

Gathering Sky will be available on Steam, Humble, iOS and Android and I’d love to hear what you think about it.
