License an engine or create your own?

This question is often asked at the beginning of a new game project, and the decision has profound effects on its whole life cycle and possibly even on its follow up projects. Here’s how and why Sproing has chosen to answer this question over the last decade.

Picking an engine is a complicated topic and, curiously enough, tends to get emotional too. I have worked on, and with, many engines in the past, but the decision of which one to choose was never mine to make. In recent years, however, it has become my responsibility to make these decisions and to defend them. So, I took some time to write down my thoughts on what needs to be considered, and what the repercussions are. I hope to present non-obvious and somewhat universal aspects of the topic, because, obviously, your mileage will vary greatly depending on your company setup and plans.

What is everyone else doing?

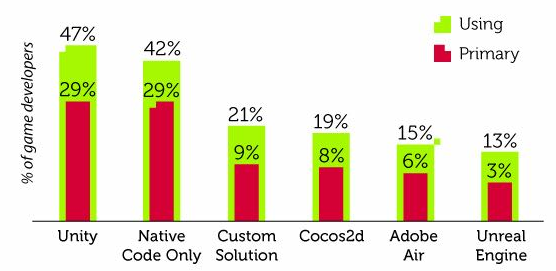

When faced with any decision, especially a big one, it is usually a good idea to do some research and find out what others in that situation have chosen to do. A study titled “Developer Economics | State of the Developer Nation Q3 2014”[1] contains a fairly comprehensive graph (Figure 1) showing the distribution of engine use amongst mobile games developers.

Figure 1: Engines used by mobile games developers

It is noteworthy that primary use (red column) is roughly equally distributed between licensed engines and custom solutions (Columns “Native Code Only” and “Custom Solutions” combined). While Unity3D is clearly leading in this particular field, it is in no way as clear-cut as engine vendors and their fans try to tell you. In addition, were one to make a similar graph for console or PC game development, the individual bars would be different (Unity3D much smaller, Unreal Engine much bigger, …), but overall there would still be a rough 50:50 split between licensed engines and homemade ones. There is no optimal solution to be found when looking at what others are doing.

Part 1: Strategy

The first thing to do is to get clarity about where you want to spend your money. In general, money can be spent in two ways: as investment or as cost. Investment is money spent in order to gain something long term, ideally more than initially invested, but at least more than nothing. Costs are money spent to get something back immediately, but they are “sunk” by default. You can think of costs as investments with a zero return, which, hopefully, sounds less than ideal to you.

Let’s look at the example of people working on your product. On the one hand, you can have employees, who will get a substantial salary. In fact, for almost all game companies that is what they spend most of their money on. But the money spent is not necessarily lost. If your employees actually do their jobs, which should be a priority, a product will be made that will generate revenue in the future. If you can keep employees for a period of time, they’ll get better at what they do and how they work with each other. This proficiency increase can amount to quite a bit of additional value and is, in my opinion, one of the main things that separates great companies from mediocre ones. On the other hand, you can choose to work with freelancers. They might be perfect fits for the particular job, but, once it’s over, they are gone, taking all the time and money spent on them with them.

We face a similar situation when thinking about licensing an engine vs. developing your own. License fees, by definition, are pure cost. You get to use something for a limited amount of time. If you want to get it again, more license fees need to be paid. Developing your own tools ensures that they are available at any time at no additional cost.

Now, you could say that by sticking to the same engine, it is also available at any time, and those license fees are well worth it. In principle, this is a very good idea, because I think switching an engine, especially during development, is a tremendously bad thing that will destroy any investments made into that engine. Not only will any custom tools be worthless, but a lot of the specialized experience gained will be gone, setting back your company’s overall proficiency for months or even years. For a chilling example of this, review the development history of Duke Nukem Forever[2].

However, bear in mind that a license is a very weak form of ownership, which guarantees you very little. Here is a cautionary tale from Sproing’s past. In the early 2000s an engine called RenderWare[3] was becoming tremendously popular. Sproing decided to build its game engine around it, as did other developers, most famously maybe Rockstar North for the Grand Theft Auto 3 trilogy. This went very well, until, in 2004, RenderWare’s owner Canon, of camera and printer fame, decided to sell it to Electronic Arts. Soon after that, EA stopped license sales to 3rd parties, and support and maintenance of the “public” version of RenderWare started to decay. Most notably, it became clear that no versions for the then new consoles PlayStation 3 and Xbox 360 would be forthcoming. Rockstar started working on RAGE[4], and Sproing, left with no rendering engine and considerably less monetary power, was forced to start over as well.

The RenderWare incident was pretty bad, but what about the currently available engines? Let’s look at two very popular examples.

Unreal Engine

Its owner, Epic Games, is and has always been a highly respected developer. However, in 2012 an aggressively expanding Chinese company called Tencent - today the biggest games publisher worldwide - bought roughly 48.4% of Epic Games, including the right to appoint members of the board, for hundreds of millions of dollars. In the wake of this acquisition the business model for Unreal Engine changed from large one-time payments (with or without additional royalties) to a subscription-based model with mandatory royalties. Custom contracts are still available, but Epic will be in a very strong position in such negotiations. In addition, some key people left Epic Games, a further indicator of a substantial transition.

Their standard licensing terms[5] entitle you to very little (you may download and use it), but require royalty payments for almost everything that would generate revenue for you, once it has been touched by Unreal Engine. Epic has no obligations to provide support or updates, or even keep the current versions available. In addition, the license terms prohibit you from legal actions against Epic, which is probably the result of their 2007 legal encounter with Silicon Knights[6].

Also worth considering is the fact that, if you develop a game with the Unreal Engine, Tencent, a competitor on the games market, will know about it the minute you register for publishing, negotiate a custom license, or even need help from support. Finally, if Tencent wanted, it could probably gobble up Epic completely and make Unreal Engine unavailable to the rest of the world, although I think this is a rather unlikely scenario.

Unity3D

This is another company that has changed a lot in the market. They dominate the mobile 3D market, probably thanks to their fantastic marketing and community support programs. However, they are, like RenderWare was, entirely investment funded, and investors like to get their money back with a substantial profit to boot.

Browser plug-ins are dying, putting pressure on one of their main platforms. While their licensing model is great for users (buy once, use forever), it’s probably not great for their cash flow, as they quickly approach market saturation. So they have started to push their subscription license model, and aggressively started to offer cloud services with it, which make you as a developer even more dependent.

So, add a subscription model, which can be terminated at any time, dependence on their services, and the fact that there are constant buy-out rumors, and you have a potent mix of possible futures, many of them very undesirable. You can negotiate custom contracts with Unity as well, but it will require deep pockets.

If Unity3D gets sold off, it does not necessarily mean that its operations will change dramatically, but no one should act surprised if they do.

Part 2: Maintenance

So, you’ve picked or made your toolset and completed a project with it, or are otherwise ready to start the next project. We have already established that proficiency comes from consistency, and that changing engines is one of the worst things you can do. Therefore it is a good idea to look in depth into the previous project to find out what went right and what went wrong. If it should turn out that the current approach is broken beyond repair, you’ll have to go back and revisit your strategy again. But usually it is not quite as bad and your current tools worked well enough, but obviously have shortcomings, which you could and should fix for the next project.

Revolution

We probably all know the feeling. You’ve finished cobbling together a wooden shack and now you feel pumped and ready to construct the Sistine Chapel, and it will be great! At Sproing in the late 2000s we were working on a similar, but slightly more modest, undertaking: the complete rewrite of our Athena engine.

The idea was to start from a clean slate and build the engine for the future: dropping old platforms, adding new ones, and adding a plethora of nifty features, all in one quite ambitious project. Unfortunately the project was developed in isolation most of the time, and the focus was on breadth and not depth. As a result, we had a lot of stuff implemented nicely, but many things were left only half-finished. This caused a quite substantial net loss of functionality, which caused months of frustration and, to some extent, continues to haunt us to this day.

You can hear a similar story, but on a much larger scale, in Chris Butcher’s GDC 2015 talk about the rewrite of Bungie’s internal engine titled “Destiny: Six Years in the Making”[7], which goes into a lot more detail.

Evolution

The great thing about making mistakes is that you can learn from them. Nowadays, we wouldn’t attempt a complete rewrite anymore, and instead focus on incremental improvements, which can get integration tested in ongoing projects quickly. Larger changes are isolated in branches, so that they can easily be rolled back should the integration fail.

As appealing as refactoring seems, it can easily result in solutions that are less functional than the original version. This can and should be referred to as “refucktoring”, and naturally, avoided at all costs.

Your choice, or not?

As far as the engine you are using is concerned, you won’t have to make the choice of how to improve it yourself. The engine vendor will make it for you and you’ll have to live with the consequences. The difference is, if you can make improvements yourself, you can make sure that they are in line with your requirements, whereas vendor-provided changes are geared towards what the vendor thinks its customers would like to have, or what might look best in press releases. Basically, whatever is good for the vendor’s long-term success, which, in turn, isn’t necessarily the best for your long-term success.

Usually vendors like to package their changes in version updates, which can be more or less disruptive to users of the engine. What’s pretty universally true is that updates, though not technically mandatory, have to be done by all engine users sooner or later, because support for older versions will decay quickly.

These version updates come with their own set of little troubles. Backwards compatibility often breaks subtly, new features are introduced, making other ones obsolete, tools usage and interfaces change, and so forth. Migration paths are often not offered, and certainly never for going back again. Things get worse for so-called major version changes. For instance, moving from Unreal Engine 3 to 4 requires at least re-exporting all assets, rewriting all gameplay code, and recreating all your tool customizations.

All of this can hurt your proficiency just as badly as unwise decisions in your internal development can. So, if you can, choose wisely which path you take: the revolutionary path, which is fast but dangerous, or the evolutionary path, which is slow but controlled. If you can’t, try your best to foresee and mitigate changes forced onto you.

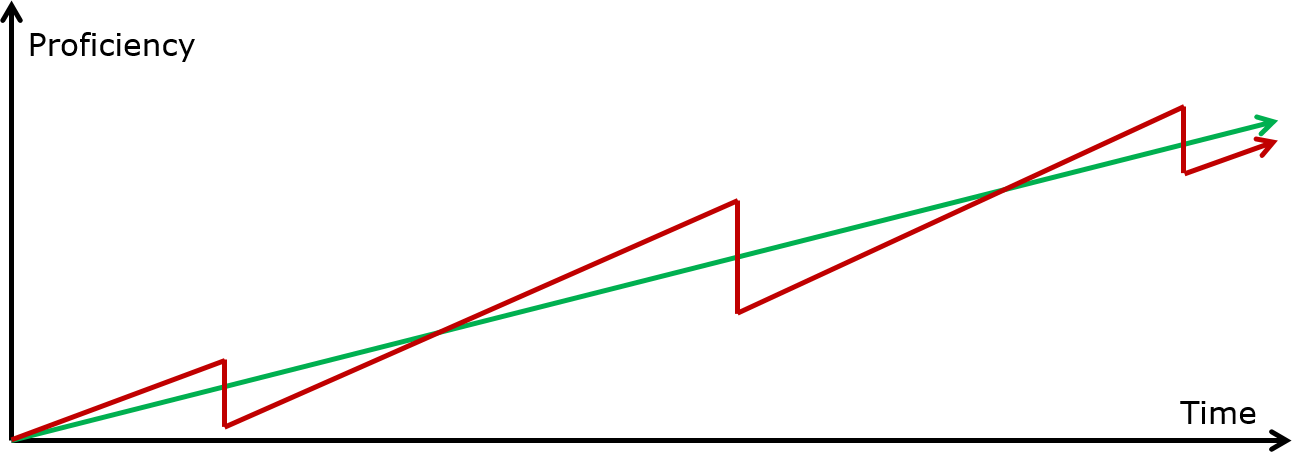

Figure 2: Proficiency growth graph (idealized)

A look at the proficiency growth (Figure 2) makes this thought visible. It is an idealized graph; reality is much less linear and much, much more complicated. The point is that with your own engine there can be continuous growth of proficiency (green line), whereas with an external solution your proficiency drops with every version change (red line).
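To make the shape of those two lines concrete, here is a toy model in Python. It is only a sketch of the idea, not the data behind Figure 2: the growth rate, the update interval and the share of proficiency lost per update are all made-up numbers, and the function name is hypothetical.

```python
# Toy model of the idealized proficiency graph.
# Every number below is an illustrative assumption, not a measurement.

def proficiency_curve(months, gain_per_month, update_every=None, loss_fraction=0.0):
    """Return team proficiency (arbitrary units) at the end of each month.

    update_every:  months between forced engine version updates (None = never).
    loss_fraction: share of accumulated proficiency lost at each update.
    """
    level = 0.0
    curve = []
    for month in range(1, months + 1):
        level += gain_per_month                      # steady learning
        if update_every and month % update_every == 0:
            level *= 1.0 - loss_fraction             # setback from a version change
        curve.append(level)
    return curve


# "Green line": own engine, no forced version changes.
own_engine = proficiency_curve(months=36, gain_per_month=1.0)

# "Red line": external engine with a disruptive update once a year
# that (by assumption) costs half of the accumulated proficiency.
licensed_engine = proficiency_curve(
    months=36, gain_per_month=1.0, update_every=12, loss_fraction=0.5
)

print(own_engine[-1], licensed_engine[-1])
```

With these particular assumptions both teams learn at the same rate, yet the gap widens with every update. Different assumptions, such as a licensed engine that gives you a head start, would shift the curves, but the sawtooth shape of the red line stays.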

Part 3: Tools

So far, we have been talking about engines, toolsets, frameworks, and so on, but what are these actually? Everything that’s used for game development (and in fact, to do anything) is a tool. So, if you license Unreal Engine, for instance, all you do is get limited access to a large set of tools. Whenever you make a script to make your life (and others’ lives) easier, you have created a tool. When you find a new way to do a certain thing better, you have created a tool, too.

Tools are so ubiquitous that it becomes quite hard to distinguish them from what you create when using them. But, they are not only incredibly useful, but also incredibly expensive. If you are smart, a large part of your development budget will be spent on tools development, regardless of what technological base you build on.

However, there is a big problem with tools provided by others!

Every tool sucks!

Whenever you get exposed to a new tool, you’ll find it awkward to use. Think back to the first time you used a hammer, a rather simple tool, to affix a picture to a wall. It is very likely that this was a rather unpleasant experience. But, ultimately, it can get the job done, the second time is less weird, and after a while you can hammer in nails like a boss.

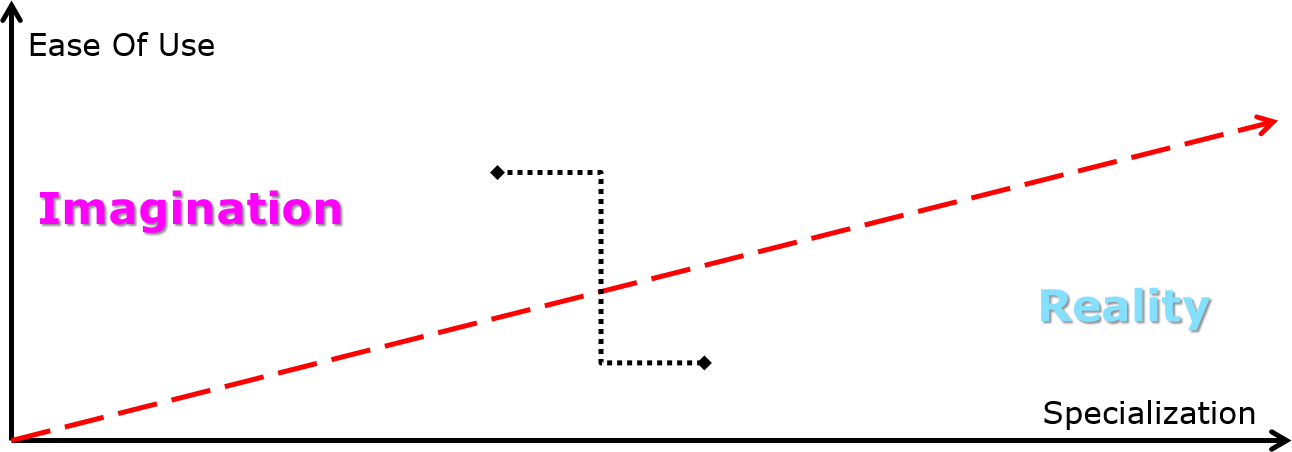

So, every tool comes with the big obstacle of “getting used to it” that needs to be overcome, before it can be used to its full potential. I have created a model to help you visualize this, called “Possible tools space” (Figure 3).

Figure 3: Possible tools space

Let me explain. On the vertical axis we have “ease of use”. It measures how “simple” it is to get used to a tool and use it effectively. “Specialization”, on the horizontal axis, reflects the number of different things a tool can do. The most specialized tools can do exactly one thing, while the least specialized tools can (in theory) do everything. In other words, the more specialized a tool is, the smaller its domain.

For example, a C compiler and a computer on which you have root access make it possible for you to unlock that machine’s full potential. But that will be very hard to do. Nobody can pick up and use a C compiler (or any compiler, really) without any prior knowledge, and mastering its use is, according to common lore, unattainable for mere mortals. So it would be somewhere in the bottom-left corner of the graph: hard to use but very generic.

Another example would be the “red eye correction” tool in many image editing programs. Load a photo, hit a button, and the red eyes are gone. Very easy to use, can be used without prior knowledge, but does only exactly one thing. It would be somewhere in the upper-right corner of the graph.

These examples already indicate a bit of a trend. Tools can either be generic but hard to use or specialized and easy to use. And the dashed red line is an indicator of exactly that. It represents the upper limit for ease of use, given a particular level of specialization. So, specialized tools can be hard to use, but generic ones have to be by necessity. This also means that the best tool for anything would try to get as close to that line as possible. It also explains the fact that tools tend to get harder to use, when their domain is expanded.

All the space above the red line is unattainable for real tools. This is where the tools of our imagination dwell. My hypothesis is that the initial frustration when getting used to a particular tool is proportional to the Manhattan distance[8] between the expected tool (which can and often will live in the realm of imagination) and the actually encountered tool (which is constrained to reality). An example of this is illustrated in Figure 3 by two black diamond shapes (the imagined and real versions of a tool) connected by a black dotted line (the distance).
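For readers who prefer code to graphs, the hypothesis can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions: both axes are normalized to the range 0 to 1, the `ToolPoint` type is hypothetical, and the coordinates of the two example tools are invented rather than read off Figure 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPoint:
    """A tool's position in the "possible tools space" (axes assumed 0..1)."""
    specialization: float  # 0.0 = can do everything, 1.0 = does exactly one thing
    ease_of_use: float     # 0.0 = very hard to use, 1.0 = effortless


def manhattan_distance(a: ToolPoint, b: ToolPoint) -> float:
    """Sum of the absolute differences along both axes (taxicab distance)."""
    return abs(a.specialization - b.specialization) + abs(a.ease_of_use - b.ease_of_use)


# Invented example: the user expects a fairly generic tool that is effortless
# to use, but the real tool is more specialized and noticeably harder.
expected_tool = ToolPoint(specialization=0.25, ease_of_use=1.0)
actual_tool = ToolPoint(specialization=0.5, ease_of_use=0.5)

# Under the hypothesis, initial frustration is proportional to this value.
frustration_proxy = manhattan_distance(expected_tool, actual_tool)
print(frustration_proxy)  # 0.75
```

The proportionality constant is left out on purpose, since the hypothesis only claims that a larger gap between expectation and reality means more initial frustration, not how much.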

Armed with this knowledge we can try to analyse why some tools are successful and loved, while others doing the same thing, or even better things, aren’t. The trick is not only to get designated tool users excited about what the tool can do for them, but, at the same time, to get the expectation as close as possible to reality. This way the initial hurdle can be minimized and users won’t get turned off the tool before reaching its full potential.

Some tools vendors are excellent at doing exactly that. In my opinion, this is where the Unity3D marketing efforts excel, leaving all their competitors behind. And this is also where internally developed tools regularly fail most spectacularly.

Conclusions

I’ve covered a lot of stuff, but believe me, there is much more. To wrap this up, let me give you a TL;DR version of what you should get out of this article:

Start by picking a good strategy to make your game and possible follow-up games. Pick the tools that have the greatest chance of getting you there and stick with them! Only consistency will unlock the potential of the tools and the people using them!

Gradually improve what you have and add things that you need, making sure that you keep an eye on the expectations of everyone involved. Remember, big changes bear big risks and are often not worth it. Be patient and soldier on, and eventually all the hard work will pay off.

In case things go catastrophically awry, lick your wounds and start over with your newly gained wisdom.

[1] http://www.visionmobile.com/product/developer-economics-q3-2014/

[2] https://en.wikipedia.org/wiki/Development_of_Duke_Nukem_Forever

[3] https://en.wikipedia.org/wiki/RenderWare

[4] https://en.wikipedia.org/wiki/Rockstar_Advanced_Game_Engine

[5] https://www.unrealengine.com/eula

[6] https://en.wikipedia.org/wiki/Silicon_Knights#Silicon_Knights_vs._Epic_Games

[7] http://destiny.bungie.org/n/1425

[8] https://en.wikipedia.org/wiki/Taxicab_geometry
