Graphics Deep Dive: Cascaded voxel cone tracing in The Tomorrow Children

"Rather than a traditional forward or deferred lighting system, we created a system that lit everything by tracing cones through voxels," explains James McLaren, director of engine technology at Q-Games.

Deep Dive is an ongoing Gamasutra series with the goal of shedding light on specific design, art, or technical features within a video game, in order to show how seemingly simple, fundamental design decisions aren't really that simple at all.

Check out earlier installments, including the action-based RPG battles in Undertale, using a real human skull for the audio of Inside, and the challenge of creating a VR FPS in Space Pirate Trainer.

Who: James McLaren, director of engine technology at Q-Games

I've been programming from the age of 10 when I got a good old ZX Spectrum for my birthday, and since then I've never looked back. I worked my way through an 8086 PC and a Commodore Amiga while I was a teenager, and then went on to do a degree in computer science at Manchester University.

After Uni, I went to work for Virtek/GSI on some PC flight simulators (F16 Aggressor and Wings of Destiny) for a few years, before leaving to work on some racing games (F1 World Grand Prix 1 & 2 on Dreamcast), at the Manchester office of a Japanese company called Video System. Through them I got the chance to come out to Kyoto a few times, loved the place, and eventually ended up coming to work out here at Q-Games in early 2002.

At Q-Games I worked on Star Fox Command on the DS, the core engine used in the PixelJunk game series, as well as being lucky enough to work directly with Sony on the OS graphics and music visualizers for the PS3. I popped over to Canada to work at Slant Six games on Resident Evil Raccoon City for three years or so in 2008, before returning to Q-Games to work on The Tomorrow Children in 2012.

What: Cascaded voxel cone tracing

For The Tomorrow Children, we implemented an innovative lighting system based on voxel cone tracing. Rather than using a traditional forward or deferred lighting system, we created a system that lit everything in the world by tracing cones through voxels.

Both our direct and indirect lighting is handled in this way, and it allows us to have three bounces of global illumination on semi-dynamic scenes on the PlayStation 4. We trace cones in 16 fixed directions through six cascades of 3D textures, and occlude that lighting with Screen Space Directional Occlusion and, for our dynamic objects, spherical occluders to get our final lighting result. We also support a scaled-down spherical harmonic-based lighting model to allow us to light our particles, as well as allowing us to achieve special effects such as approximating subsurface scattering and refractive materials.

Why: A totally dynamic world

Early on in the concept phase for The Tomorrow Children we already knew that we wanted a fully dynamic world that players could alter and change. Our artists had also begun to render concept imagery using Octane, a GPU renderer, and were lighting things with very soft graduated skies, and fawning over all the nice color bouncing they were getting. So we started to ask ourselves how we were going to achieve this real-time GI look that they wanted without any offline baking.

Here's an early concept shot rendered with Octane, that shows the kind of look they were hoping for

We tried a number of different approaches initially, with VPLs (virtual point lights) and crazy attempts at real-time ray tracing, but early on, the most interesting direction seemed to be the one proposed by Cyril Crassin in his 2011 paper about voxel cone tracing using sparse voxel octrees. I was personally drawn to the technique, as I really liked the fact that it allowed you to filter your scene geometry. We were also encouraged by the fact that other developers, such as Epic, were investigating the technique, and used it in the Unreal Elemental demo (though they sadly later dropped the technique).

So what is Cone Tracing?

Cone tracing is a technique that shares some similarity with ray tracing. For both techniques we’re trying to obtain a number of samples of the incident radiance at a point by shooting out primitives, and intersecting them with the scene.

And if we take enough well-distributed samples, then we can combine them together to form an estimate for the incident lighting at our point, which we could then feed through a BRDF that represented the material properties at our point, and calculate the exit radiance in our view direction. Obviously, there are a lot more subtleties that I’m skipping over here, especially in the case of ray tracing, but they aren’t really important to the comparison.

With a ray, when we intersect, it's at a point, whereas with a cone it ends up being an area or perhaps a volume, depending on how you are thinking about it. The important thing is that it's no longer an infinitesimally small point, and because of that the properties of our estimate change. Firstly, because of the need to evaluate the scene over an area, our scene has to be filterable. Also, because we are filtering, we are no longer getting an exact value, we are getting an average, and so the accuracy of our estimate goes down. But on the upside, because we are evaluating an average, the noise that we would typically get from ray tracing is largely absent.

It was this property about cone tracing that really grabbed my attention when I saw Cyril Crassin’s presentation at Siggraph. Suddenly we had a technique where we could get a reasonable estimate of the irradiance at a point, with a small number of samples, and because the scene geometry was filtered, we wouldn’t have any noise, and it would be fast.

So obviously the challenge is: how do we sample from our cone? The purple surface area in the picture above that defines where we intersect is not a very easy thing to evaluate. So instead, we take a number of volume samples along the cone, with each sample returning an estimate of the light reflected towards the apex of the cone, as well as an estimate of the occlusion in that direction. It turns out that we can combine these samples with the same basic rules we would use for ray marching through a volume.
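The ray marching rule mentioned above can be sketched as front-to-back compositing. This is a minimal sketch rather than the game's shader: `sample_volume` is a hypothetical callback standing in for a filtered voxel lookup, its radiance is assumed not to be pre-multiplied by occlusion, and stepping by the sample diameter is also an assumption.

```python
import math

def trace_cone(sample_volume, half_angle, max_distance, start_distance=0.1):
    """Accumulate volume samples front to back along a cone.

    sample_volume(distance, radius) -> (radiance, occlusion in [0, 1]) is a
    hypothetical stand-in for a filtered lookup into the voxel data.
    Returns the accumulated (radiance, occlusion) seen from the cone apex.
    """
    radiance = 0.0
    occlusion = 0.0
    distance = start_distance
    # Stop early once the cone is (almost) fully blocked.
    while distance < max_distance and occlusion < 0.99:
        radius = distance * math.tan(half_angle)
        sample_radiance, sample_occlusion = sample_volume(distance, radius)
        # Only the still-unoccluded fraction of the cone can see this sample.
        visible = 1.0 - occlusion
        radiance += visible * sample_occlusion * sample_radiance
        occlusion += visible * sample_occlusion
        # Assumption: advance by the sample diameter so samples tile the cone.
        distance += max(2.0 * radius, 1e-3)
    return radiance, occlusion
```

Because the footprint grows with distance, a wide cone needs only a handful of samples to cover a long range, which is where the low sample count comes from.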

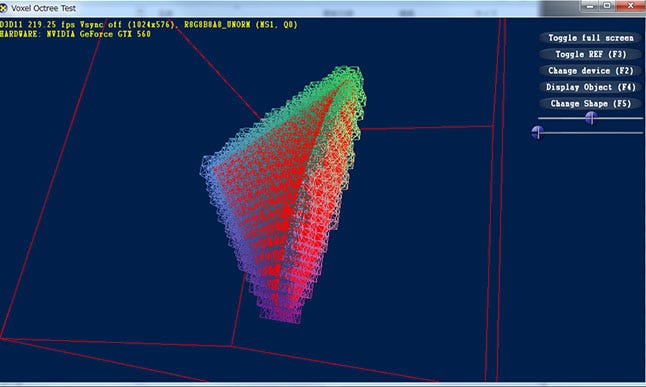

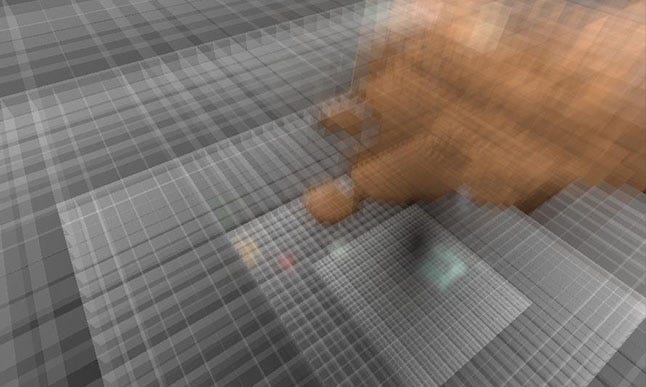

Cyril's original paper called for voxelizing to a sparse voxel octree, and so in early 2012 upon returning to Q, I started to experiment with just that on the PC in DX11. Here are some very early (and very basic) screen shots from that initial version (the first showing a simple object being voxelized, the second showing illumination being injected into the voxels from a shadow mapped light):

There were various twists and turns in the project, and I ended up having to put my R&D aside for a while to help get the initial prototype up and running. I then went on to get this running on PS4 development hardware, but there were some serious frame rate issues to contend with. My initial tests hadn't run that well anyway, so I decided that I needed to try a new approach. I had always been a little unsure if voxel octrees made sense on the GPU, so I decided to try something much simpler.

Enter the voxel cascade:

With a voxel cascade, we simply store our voxels in a 3D texture, instead of an octree, and have various levels of voxels stored (six in our case), each at the same resolution, but with the dimensions of the volume it covers doubling each time. This means that close by we have our volumetric data at fine resolution, but we still have a coarse representation for things that are far away. This kind of data layout should be familiar to anyone who's implemented clip maps or light propagation volumes.
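To make the layout concrete, here is a minimal sketch of how a world position might be mapped to a cascade level. It assumes cubic cascades that share a common center, and the names `base_half_extent` and `resolution` are hypothetical parameters, not values from the game.

```python
def cascade_level(position, center, base_half_extent, num_levels=6):
    """Return the finest cascade containing `position`, or None if outside all.

    Assumes cubic, axis-aligned cascades that share a common center.
    """
    # The largest per-axis offset decides which cube the point falls in.
    distance = max(abs(p - c) for p, c in zip(position, center))
    half_extent = base_half_extent
    for level in range(num_levels):
        if distance <= half_extent:
            return level
        half_extent *= 2.0  # each cascade covers twice the dimensions
    return None

def voxel_size(level, base_half_extent, resolution):
    """World-space size of one voxel: same resolution, doubled extent per level."""
    return (2.0 * base_half_extent * 2 ** level) / resolution
```

With a base half extent of 8 units and a resolution of 32, for example, voxels would be 0.5 units wide in the finest level and 16 units wide in the sixth.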

For each voxel we have to store some basic information about its material properties like albedo, normal, emission etc., which we do for each of the six face directions of our box (+x,-x,+y,-y,+z,-z). We can then inject light into the volume, on any voxels that are on the surface, and trace cones a number of times to get our bounce lighting, again storing this information for each of the six directions in another voxel cascade texture.

The six directions I mentioned are important as our voxels are then anisotropic, and without that, light that bounces on one side of a thin wall for example, would leak through to the other side, which is something that we really don't want.

Once we have calculated the lighting in our voxel texture, we then still need to get that on screen. We do this by tracing cones from the positions of our pixels in world space, and then combining that result with material properties that we also render out to a more traditional 2D G-Buffer.

One other subtlety about how we render compared to Cyril's original method is that all lighting, even our direct lighting, comes from the cone tracing. This means we don't have to do anything fancy for light injection: we simply trace our cones to acquire our direct lighting, and if they go outside of our cascade bounds, we accumulate lighting from the sky, modulating this by any partial occlusion that's been built up. Dynamic point lights are handled at the same time by having another voxel cascade that we use a geometry shader on to fill with radiance values. We can then sample from this cascade and accumulate as we trace our cones.

Here are some screenshots of the technique running in an early prototype.

First, here's our scene without any bounce from the cascades, just lighting from the sky:

And now with the bounce lighting:

Even using cascades instead of octrees, cone tracing is pretty slow. Our initial tests looked great, but we were nowhere near where we needed to be in terms of budget, as just cone tracing the scene took on the order of 30ms or more. So we needed to make some further alterations to the technique to get ourselves close to being able to run in real-time.

The first big change we made was to choose a fixed number of cone tracing directions. Cyril Crassin's original SVO-based technique would sample a number of cones per pixel, based around the normal of the surface. This meant that for every pixel we would potentially walk through our texture cascades in an incoherent way with respect to close by pixels. It also had a hidden cost in that each time we sampled from our lit voxel cascade, we would have to sample from three of the six faces of our anisotropic voxels and take a weighted average based on our traversal direction in order to determine the radiance in the direction of our viewer pixel/voxel. As we cone traced we touched multiple voxels along the cone's path, and would pay this extra sampling, bandwidth and ALU cost each time.
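The three-face weighted average can be sketched as follows. The text only says the weights are based on the traversal direction, so weighting by the squared direction components (which sum to one for a unit vector) is an assumption here, as are the function name and the dictionary layout.

```python
def sample_anisotropic(face_radiance, direction):
    """Blend the three voxel faces that point toward the receiver.

    face_radiance: dict keyed '+x', '-x', '+y', '-y', '+z', '-z'.
    direction: unit vector from the voxel toward the receiving pixel/voxel.
    Assumption: each face is weighted by its squared direction component.
    """
    total = 0.0
    for axis, component in zip("xyz", direction):
        # Pick the face whose outward normal faces the receiver on this axis.
        face = ("+" if component >= 0.0 else "-") + axis
        total += component * component * face_radiance[face]
    return total
```

Every sample along every cone pays for three lookups plus the blend, which is the per-sample cost that fixing the trace directions removes.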

If instead we fixed the directions that we trace (which we did to 16 well-distributed directions around the sphere), then we could do a highly-coherent pass through our voxel cascade, and very quickly produce radiance voxel cascades that represented how our anisotropic voxels would look from each of the 16 directions. We'd then use those to accelerate our cone tracing, so that we only needed a single texture sample instead of three.

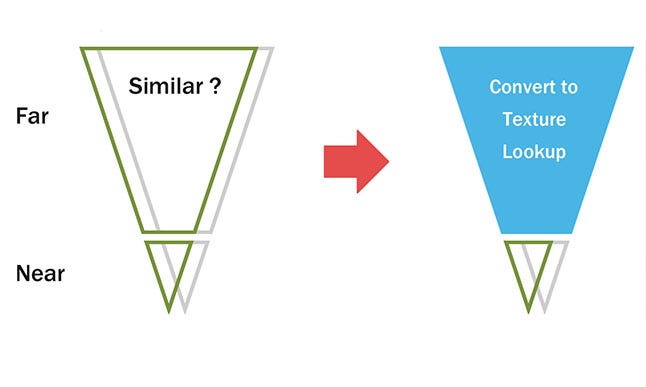

The second major observation we made was that as we were doing our cone trace and stepping up through the levels of our cascade, the voxels that we accessed for adjacent pixels/voxels became increasingly similar the further away that we got from the apex of the cone. This observation is of course just the simple effect that we all know very well: "parallax." If you move your head, things close to you move within your vision, but things further away move much more slowly. With this in mind, I decided to try calculating another set of texture cascades, one for each of our 16 directions, that we could periodically fill with pre-calculated results for cone tracing the back half of a cone from the center of each voxel.

This data essentially represents the far away lighting that hardly alters with changes in the local viewer position. It was then possible to combine this data, which we could just sample from our texture, with a full cone trace of the "near" part of the cone. Once we correctly tuned the distance at which we transitioned to the "far" cone data (1-2 meters proved to be sufficient for our purposes), we got a large speed-up with very little impact on quality.
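One plausible way to join the two halves is the same front-to-back rule used while marching. This is a sketch under that assumption: the text does not spell out the exact combination, and the function and parameter names are hypothetical.

```python
def combine_near_far(near_radiance, near_occlusion, far_radiance, far_occlusion):
    """Composite a pre-calculated far cone result behind a traced near result.

    Assumption: the far data is attenuated by whatever the near part of the
    cone has already occluded, as in front-to-back volume compositing.
    """
    visible = 1.0 - near_occlusion
    radiance = near_radiance + visible * far_radiance
    occlusion = near_occlusion + visible * far_occlusion
    return radiance, occlusion
```

If the near part of the cone is fully blocked, the far lookup contributes nothing. If it is completely open, the far data passes through unchanged.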

Even these optimizations didn't get us where we needed to be so we also made some tweaks to how much data we tried to compute, both spatially and temporally.

It turns out that the human visual system is quite tolerant of latency in the update of indirect lighting. Up to around 0.5-1 second of lag in the update of indirect light still produces images that people find very visually pleasing, and the lack of correctness is hard to detect. So, with this in mind, I realized that I could get away without actually updating every cascade level every frame. Rather than update all six cascade levels, we simply pick one to update each frame, with finer detail levels being updated more frequently than coarser, wider levels. So the first cascade would be updated every second frame, the next one every fourth, the next one every eighth, and so on. In this way, indirect lighting results close to the player, where the action was, would still be updated at a reasonable speed, but coarse results far away would update at a much lower rate, saving us a whole bunch of time.
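A schedule with this shape falls out of counting the trailing zero bits of the frame number. The sketch below is one way to do it, not necessarily how the game does. Giving the coarsest level the leftover frames, so that some cascade is updated every frame, is an assumption.

```python
def cascade_to_update(frame, num_levels=6):
    """Pick the single cascade level to update on this frame (frames start at 1).

    Level 0 is chosen on every 2nd frame, level 1 on every 4th, level 2 on
    every 8th, and so on. Assumption: leftover frames go to the coarsest level.
    """
    if frame < 1:
        raise ValueError("frame must be >= 1")
    # frame & -frame isolates the lowest set bit; its position is the
    # number of trailing zero bits in the frame counter.
    trailing_zeros = (frame & -frame).bit_length() - 1
    return min(trailing_zeros, num_levels - 1)
```

Over the first eight frames this picks levels 0, 1, 0, 2, 0, 1, 0, 3, so no frame ever pays for more than one cascade update.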

For computing our final screen space lighting result as well, it became obvious that we could get a huge speed up with very little drop in quality by calculating our screen space cone traced results at a lower resolution, and then using a geometry aware up-sample, plus some extra fix up shading for fail cases to get our final screen space irradiance results. For The Tomorrow Children, we found that we were able to get good results by tracing at just 1/16 screen size (1/4 dimensions).

This works well because we are only doing this to the irradiance information, not to the material properties or normals of the scene, which are still calculated at full resolution in our 2D G-Buffers and combined with the irradiance data at the per-pixel level. It's easy to convince yourself of why this works by imagining the irradiance data that we have in our 16 directions around the sphere as a tiny environment map. If we move small distances (from pixel to pixel), then unless we have sharp discontinuities in depth, this environment map changes very little, and so can be safely up-sampled from the lower resolution textures.
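A geometry-aware up-sample can be sketched for a single full-resolution pixel as below. The hard depth threshold, the `depth_tolerance` value and the use of `None` to flag a fail case are all assumptions made for illustration. The text only says that the up-sample is geometry aware and that fail cases get extra fix-up shading.

```python
def upsample_irradiance(low_res_samples, pixel_depth, depth_tolerance=0.1):
    """Depth-aware blend of nearby low-resolution irradiance samples.

    low_res_samples: list of (irradiance, depth, bilinear_weight) tuples for
    the low-resolution texels surrounding the full-resolution pixel.
    Returns None when no texel lies on matching geometry, so the caller can
    fall back to fix-up shading for that pixel.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for irradiance, depth, bilinear_weight in low_res_samples:
        # Reject texels across a depth discontinuity from this pixel.
        if abs(depth - pixel_depth) > depth_tolerance:
            continue
        weighted_sum += bilinear_weight * irradiance
        weight_total += bilinear_weight
    if weight_total == 0.0:
        return None
    return weighted_sum / weight_total
```

Renormalizing by the surviving weights keeps the brightness stable when some neighbors are rejected at a silhouette edge.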

With all of this combined, we managed to get the update of our voxel cascades each frame down to around 3ms, and our per-pixel diffuse lighting also down to ~3ms, which put us within the realms of usability for a 30Hz title.

Using this system as a basis, we were also able to build on it further to add other effects. We developed a form of Screen Space Directional Occlusion that calculates a visibility cone for each pixel, and uses that to modulate the irradiance we receive from our 16 cone trace directions. We also did something similar for our character shadows (as the characters move too quickly and are too detailed to work with the voxelization) and used sphere trees derived from the characters' collision volumes to provide further directional occlusion.

For particles we produced a simplified four-component SH version of our light cascade textures, which is significantly lower quality, but still looks good enough to allow us to shade our particles. We were also able to use this texture to produce sharp(ish) specular reflections using a distance field accelerated ray march, and to produce simple subsurface-scattering-like effects. Describing all of this in detail would take pages and pages, so I encourage anyone who wants to know more to read my GDC 2015 presentation, where a lot of this is covered in greater depth.

Result:

As you can see, we ended up making a system that is radically different from a traditional engine. It was a lot of work, but I personally think the results speak for themselves. By heading off in our own direction we have been able to give our title a really unique look, and produce something that we never could have if we'd stayed with standard techniques or used someone else's tech.
