Tinybuild is using AI tools to monitor staff and identify "problematic" workers

Update: Tinybuild CEO Alex Nichiporchik says a recent talk that indicated the publisher uses AI to monitor staff was "hypothetical."

Tinybuild boss Alex Nichiporchik says the company is using AI to identify toxic workers, which according to the CEO also includes those suffering from burnout.

Outlining how the company utilizes AI in the workplace during a Develop: Brighton talk called "AI in Gamedev: Is My Job Safe," Nichiporchik introduced a section called "AI for HR" and discussed how the tech can be used to comb through employee communications and identify "problematic" workers.

In excerpts from the talk published by Why Now Gaming, Nichiporchik explains how the Hello Neighbor publisher feeds text from Slack messages, automatic transcriptions from Google Meet and Zoom, and task managers used by the company, into ChatGPT to conduct an "I, Me Analysis" that allows Tinybuild to gauge the "probability of the person going to a burnout."

Nichiporchik said the technique, which he claims to have invented, is "bizarrely Black Mirror-y" and involves using ChatGPT to monitor the number of times workers have said "I" or "Me" in meetings and other correspondence.

Why? Because he claims there is a "direct correlation" between how often somebody uses those words, relative to the total number of words they use, and the likelihood of them experiencing burnout at some point in the future.
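For illustration only, the ratio described in the talk can be sketched in a few lines of Python. This is not Tinybuild's tooling. The excerpts do not say how words are tokenized or what threshold, if any, would be applied, so the function name `i_me_ratio` and the tokenizing rule are assumptions made here.

```python
import re

# Assumption: only the bare words "I" and "me" count, matched case-insensitively.
FIRST_PERSON = {"i", "me"}


def i_me_ratio(text: str) -> float:
    """Return the share of words in `text` that are "I" or "me"."""
    # Assumption: a "word" is a run of letters and apostrophes, so a
    # contraction such as "I'm" is one token and is not counted as "I".
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in FIRST_PERSON)
    return hits / len(words)


sample = "I think this one is on me"
print(f"{i_me_ratio(sample):.2f}")
```

A number like this only describes word frequency in a piece of text. Nothing in the sketch supports the claimed link to burnout.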

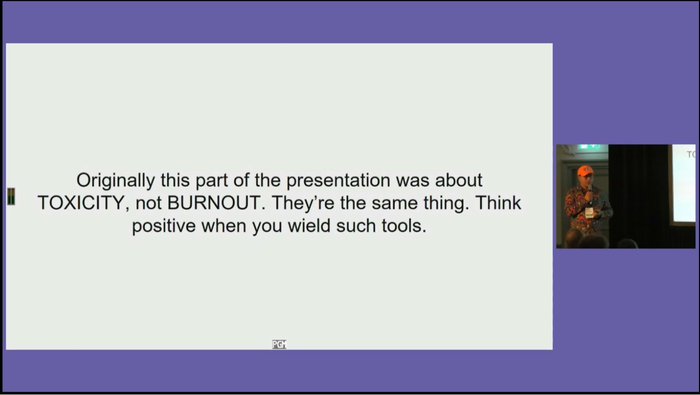

Toxicity and burnout? Tinybuild boss claims they're the "same thing"

Notably, Nichiporchik suggests the AI tool can also be used to identify "problematic players on the team" and compare them with the "A-players" by searching for those who talk too much in meetings or who type too much. He calls these employees "time vampires," because in his view they essentially waste time, and notes "once that person is no longer with the company or with the team, the meeting takes 20 minutes and we get five times more done."

At one point, Nichiporchik suggests AI was used to identify a studio lead who wasn't in a "good place" and help them avoid burnout. On the surface, that might seem like a win, but the CEO goes on to suggest that "toxic people are usually the ones about to burn out," adding "they're the same thing."

Image via Why Now Gaming / Develop

Equating toxicity to burnout is misguided at best, but what's more concerning is the fact that, earlier on in the talk, Nichiporchik spoke about removing problematic players from the company to increase productivity. In this instance, that might seemingly include those suffering from burnout.

Towards the end of his talk, Nichiporchik said companies should look to wield AI tools in a positive manner and avoid making workers "feel like they are being spied on." It's a carefully worded piece of advice that suggests it might be possible to spy on your employees, but only if you don't allow them to "feel" as if they are being spied on.

Game Developer has reached out to Tinybuild for more information on how it uses AI in the workplace.

Update (07/14/23): Responding to Why Now Gaming's original story on Twitter, Nichiporchik claims that some of the slides featured in his presentation were taken out of context, and suggests that using AI in the workplace is "not about identifying problematic employees," but rather "about giving HR tools to identify and prevent burnout of people."

Nichiporchik then references another slide that featured in the presentation, which states, "99 percent of the time when you identify burnout, it's already too late." He also notes that the title slide for the "AI for HR" section has been switched out for a new slide that reads "AI for preventing Burnout," which he describes as "more accurate."

"The ethics of such processes are most definitely questionable, and this question was asked during Q&A after the presentation," continues Nichiporchik, indicating an understanding that funneling employee chatter into an AI tool in a bid to assess performance and the mental state of workers is morally dubious. "This is why I say 'Very Black Mirror Territory.' This part was HYPOTHETICAL. What you could do to prevent burnout," he adds.

The CEO closed his Twitter thread by asking for feedback from those in attendance at Develop: Brighton who saw the presentation with "full context."

"Post-presentation I've had insightful discussions about the subject matter, especially in terms of coding and using AI tools to accelerate creativity," he said, before stating that Why Now Gaming's coverage comes from a "place of hate."

Update (07/14/23): In a separate response sent directly to Why Now Gaming, Nichiporchik said the HR portion of his presentation was purely a "hypothetical" and that Tinybuild doesn't use AI tools to monitor staff.

"The HR part of my presentation was a hypothetical, hence the Black Mirror reference. I could’ve made it more clear for when viewing out of context," reads the statement. "We do not monitor employees or use AI to identify problematic ones. The presentation explored how AI tools can be used, and some get into creepy territory. I wanted to explore how they can be used for good."
