Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs or learn how to Submit Your Own Blog Post

Writing a Game Engine from Scratch - Part 2: Memory

Writing an Engine from scratch can be a daunting task. With a good architectural design laid out, we face the first step of actually coding anything meaningful.

Part 1 - Messaging

Part 2 - Memory

Part 3 - Data & Cache

Part 4 - Graphics Libraries

In the previous Article on our Journey to write a Game Engine from Scratch, we looked at designing the Architecture of our Engine and explored a simple way to tie all the individual Systems together. We also took a look at a very basic road map we could take to actually fill this Architecture with life.

I will try to keep this Article as self-contained as possible, so you don't need to go back and read the first one.

This time we will cover a very important topic, that is often concidered to be something esoteric you shouldn't mess with too much. Memory Management. spooky.

You don't need a strong Programming Background to understand the Concepts here. It will however be helpful. When there is Code, it is mainly here to illustrate a Point better and you can probably skip it.

Let's get dirty.

Memory Management. If you think "this is just some C, C++ or ASM thing, my Garbage collected language does that for me" you are in for a ride. The Memory Management in Garbage collected Languages only works well up to a certain point. Garbage Collection in Java for example, has to work in such a wide spectrum of cases, of course it won't be as perfect for a Game Engine as it could be.

But even if you don't care about Memory Management in your Game, perhaps there are some interesting points to take from what follows.

Good Memory Access Patterns & Good Memory Management are what make a Game run fast!

If you want good-quality Graphics paired with 60 FPS, you will need to adhere to this. Why? This is why!

I will List Jonas Bonér's table here, in case you don't want to click that.

Latency Comparison Numbers

--------------------------

L1 cache reference 0.5 ns

Branch mispredict 5 ns

L2 cache reference 7 ns 14x L1 cache

Mutex lock/unlock 25 ns

Main memory reference 100 ns 20x L2 cache, 200x L1 cache

Compress 1K bytes with Zippy 3,000 ns

Send 1K bytes over 1 Gbps network 10,000 ns 0.01 ms

Read 4K randomly from SSD* 150,000 ns 0.15 ms

Read 1 MB sequentially from memory 250,000 ns 0.25 ms

Round trip within same datacenter 500,000 ns 0.5 ms

Read 1 MB sequentially from SSD* 1,000,000 ns 1 ms 4X memory

Disk seek 10,000,000 ns 10 ms 20x datacenter roundtrip

Read 1 MB sequentially from disk 20,000,000 ns 20 ms 80x memory, 20X SSD

Send packet CA->Netherlands->CA 150,000,000 ns 150 msIf a lot of those words don't mean much at this point, don't worry, we will talk about some of them in a bit. Those statistics are of course not up to date, but they convey a simple message: Memory Access is slow. However, by organizing our Memory well enough, we can attempt to reduce this latency by alot.

Now, keep in mind what 60 FPS means. 60 FPS means, we have 16.666 ms (=16,666,666 ns) to render a single Frame. Now take a look at the Table. If you aren't weeping like I am at this point, spend more time with this table and just see how much you can do in just 16 ms. And as a Tip, keep using milliseconds as a measurement unit instead of FPS.

Of course I am exaggerating. It isn't all as linear as that. Not everything must be done in Sync. But I do hope you see how important this subject is. Executing a lot of Instructions on your CPU / GPU is conciderably cheaper than fetching a piece of information from the RAM or even HDD.

The good news is, that basic Memory Management can be done in most Languages.

But what actually is Memory Management?

The Name says it all, it's simply the way you organize your Memory. Nothing fancy. If you've been programming for a while, you probably have already done this in some form, without even knowing.

But before we can talk about Management, we must first get to know the Memory better.

Every Program / Application has the following 4 Memory Regions. 2 of those are of less interest to us:

Data Segment

This is the Place where Globals and Statics live.

Code Segment

This is the part of your Memory that holds your Program.

And now for the interesting 2 Regions:

The Stack is a Stack!

Our next Region is the Stack. Well, it's a Memory Region that works like a Data Structure that is called a "Stack". "Stack" simply refers to the way Memory is added and removed. It works like a Pile, or "Stack", of Plates. You add a Plate by putting it on the Stack (called a push) and remove it by taking from the top of the Stack (called a pop). Last in, first out, LIFO.

The Operating System allows the Program to reserve a sizable chunk of Memory to be our Stack. It's a few MB in size, depending on what OS and Linker you run. This whole area is reserved just for your Program.

When does your Program use the stack? This depends on the Language you use. In C++ it would be each time you create a scoped variable, which is also the case for most other Languages, at least if you aren't creating Objects. To get a quick understanding of Scope, take the following code example:

// Some Code here

{ // <--- We begin a new Scope

int i = 0; // <--- We push a new variable on the Stack called i

i = i + 5; // <--- We can use this variable as we please

} // <--- The Scope ends and we pop all variables that

// were created in this scope. Dead.Gone.NeverExisted.Sounds reasonable. This ensures we don't create an infinite amount of variables over time in our program. Actually we can't, the Stack is way to small. So small, you can even leave it's bounds by accident, in what is called a Stack Overflow.

We need more Memory, Heaps more!

You might have guessed, a few MB isn't all that much to do a big Game in. And you are right, we need more! And we can have more, almost as much as we want. This Memory Region is called the Heap!

The Heap is just a big pool of Memory managed by your Operating System. By calling new, malloc and similar operations, we receive some free Memory on the Heap. This Memory is not scoped! Which means, it never dies, unless we kill the Program or call some delete or free operation.

Sounds clumsy. And you are right! Not calling those deleting operations actually leads to Memory leaks. Your Program gets more and more Memory if you forget that. This time it's not just until some tiny Stack fills up and crashes your Program, this time you get so much Memory, that you fill the entire RAM and portions of your HDD. We will need to manage this!

We now have a rough understanding of our Memory, let's move on to what we can do with it.

"Never call new!"

I've often heard this Statement in C++. Even back in University our professor told us to avoid new and malloc like the plague. But saying these things out of Context is really hurting the Heap's reputation. It almost makes you feel like a bad Programmer if you have to create Memory on the Heap. The sentiment seems to be, that you should do pretty much everything on the Stack. There is of course truth to this, and for the most part, you should. But we are here to make a Game...

... So don't worry! We are here to learn how to use the Heap in a sensible way! And that is what we will call Memory Management (As the Stack is already managed for us).

malloc vs new

Like I mentioned earlier, calling a function like malloc we will get a Pointer or Address to a Region in the Heap of some specified size. We can do with this area as we please, the Operating System flagged it as ours! This is called Allocation (hence m<strong>alloc</strong>). It's a very C-thing to do.

Now, what happens when we call something like new, as in C++? Now, we don't just allocate Memory, we will also call the Constructor on that thing we just created, which will fill that space with some interesting things.

There is a slight, yet noticeable difference here. Obviously, malloc will be faster, but we might not have control over what is in that space we just allocated. And new is pretty much just a malloc call followed by the constructor.

So, let's focus on the Allocation part and worry about what is in the Memory later.

The Allocator

What we want to do now, is write a simple Allocator. You might start saying:

Hey! You! Java doesn't even have a malloc, how should I even write an Allocator?!

And you would be right! Java does however let you make Objects on the Heap. And that is all we want, creating data on the Heap.

I'll let you in on another secret: We aren't even going to write our own malloc function. As a matter of fact, we are going to use the existing one.

You Imbecile! You just told me we are going to write an Allocater ourselves, and now you're saying, we are just going to use malloc? Is this one of your weird Wrapper ideas again?

Well, no. Stick with me, you're Question will be answered soon.

So, what does an Allocator actually need to do?

An Allocator needs to be able to give you parts of the Memory that are not in use. That is all. How it does that, doesn't matter to the caller, it just needs to know that no one else can get it via the Allocator.

But who says that we need to ask the Operating System for some more Memory like malloc occasionaly does? What if we just ask it once in the beginning for a large chunk and redistribute parts of that?

And that is exactly what we are going to do!

First, let's just use what we have. Like malloc and new! Or in case of Java, we just need to create an Object, those are automatically stored on the Heap.

Second, we create a big Array of those Objects on the Heap by calling malloc or new.

Third, we write some code that let's the user claim certain Objects of that Array as their own and simply document in an index, which Objects have been claimed (i.e. "allocated") and which haven't.

Boom. Memory Management.

(The above would be what we call a Pool)

Let's take an extreemly simplistic look at what an Allocator might look like in code using some butchered version of common Languages. We will use an even easier example compared to a Pool-based Allocator. The easiest example possible is probably a Stack based Memory Allocator:

class MyStackAllocator

{

public:

void initialize()

{

data = malloc( sizeof(Object) * 64 );

stackPointer = 0;

}

void destroy()

{

free( data );

}

objectPointer allocate()

{

if ( stackPointer < 64 )

{

stackPointer++;

return &data;[stackPointer - 1];

}

else

{

return NULL;

}

}

void free()

{

if ( stackPointer > 0 )

{

stackPointer--;

}

}

private:

objectPointer data;

int stackPointer;

}What does it do? Well, we start of by allocating 64 Objects using a malloc call, once! (By using the initialize function and only using it that one time) After that, we can assign our memory to other parts of code for up to 64 Objects by simply calling allocate. We can also give back the memory of the last created Object, by calling free. These are pretty much our push and pop from before.

And why 64 Objects? Well, why not. I wanted to use a concrete value as an example, use as many as you need.

So yeah, we end up pretty much with a Stack full of Objects.

So what?

You might be wondering what we did all this for? Why burden ourselves with this, if we can just call the Language specific Allocator and simply not forget to free the memory afterwards?

Well, there are many reasons to do this. Let's take a look at some of them.

Speed.

malloc is slow. Our Allocator is fast! Just a few Operations and no actual need to do any Operating System calls, once it has been set up. Awesome!

Memory Fragmentation.

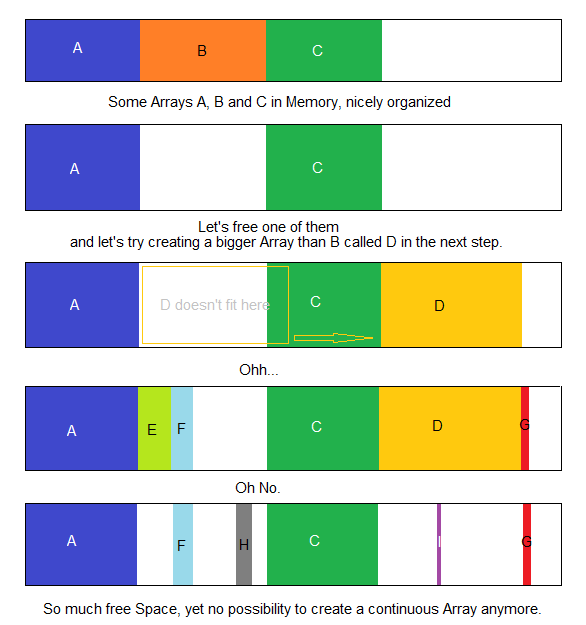

You have no control over where malloc, new or any other of those build in Memory Allocators actually create your piece of Data. They could all be in sequence, the could also be completely scattered. Now imagine calling some form of delete or free and then calling malloc again. Can you be certain the new Memory will be at the same spot? Most definitely not. I'll try illustrating this using my godly painting skills once again.

You've probably seen this picture when you defragment your HDD. This almost random distribution of data is aptly named Fragmentation. If we were to rely purely on malloc, the above would be a disaster and would eventually happen, if we run the code long enough. We would eventually reach the point where the Memory would be so fragmented, we couldn't create large Arrays anymore. This is one of the reasons, why restarting a Program sometimes helps.

There is a solution to this problem. You can defragment the Memory, like you would a HDD. However, since we used malloc directly to create all this, we must use malloc and free to fix it. This is insane.

But if we were to use our Custom Allocator, we can either avoid this scenario altogether (for example using a Stack-based Allocator), or implement a Defragmentation Routine without the constant need to call malloc and free!

More Speed.

There are alot of Factors to Speed. Some of them we covered here. Some of them will be covered later.

Let's recall Jonas Bonér's List of Memory Access times. Remember the L1 and L2 Cache Access times? Those were fast, right? By writing our Custom Allocator, we can actually influence what access times are going to be relevant! What we are trying to avoid here are so called Cache Misses.

But what is this L1 Cache stuff? Basically, every CPU has a tiny little bit of Memory close to it's heart. These are called L1, L2 and sometimes L3 (where L means Level), corresponding to the distance. And by distance I mean actual distance you measure with a tape.

Whenever the CPU requests Memory from the RAM, this Memory is stored inside these Caches. The CPU does this, in case it needs to Access some Memory that is nearby the last Memory it accessed. This means, the CPU doesn't just read one variable of 4-Byte size (an integer for example), it might read the entire "Cache-Line" that is maybe 64-Bytes in size.

If it were to read something that isn't part of the Cache at this moment, it would need a totally different Cache-Line in first. This is what we call a Cache-Miss.

This is due to the fact that the CPU doesn't have infinite space on it's die, so these Caches are tiny. Really tiny, only a few KB.

We can however still use this to our advantage! By making sure that our Allocator packs all data in, for example, 64-Byte blocks, we can be certain that the entire block get's loaded into the Cache at the same time. Were we to shift the Block by accident and have 60-Bytes in one line and 4-Bytes in the other, all this would have been for nothing, as the CPU needs to load 2 Cache-Lines now. Furthermore, we can assure a certain locality in our custom allocated space.

There is so much more to writing Cache-friendly code, but you should keep the above in mind when deciding on how to pack your Data.

We will cover more on this in a future Article on Optimization. But I'll leave you with this for now.

Debugging

Custom Allocators are an awesome debugging Tool. Remember our little MyStackAllocator? Well, we could just add simple print statements everywhere and keep track of what Memory is being used and what not. Or we could add an int somewhere that counts the actual Memory we consumed. Cool Stuff! Try doing that with malloc...

Memory Hogging.

Our Application won't be the only one running. Aren't you scared, that while playing the Game, you suddenly run out of Memory and it crashes?

Or even worse, what if the RAM starts getting so clogged that we have to resort to... dare I say it... Pagefiles on the HDD? The Horror.

By Allocating all the Memory beforehand, we can do checks and be certain that all is well. We would ensure that our Game had enough Memory or give the Player a Pop-up to close some other Applications.

... etc. etc... More and more reasons to use Memory Managers. Let's look at one we could actually need in our Engine

Memory Manager Types in Games

We now know what an Allocator does. We know a few pitfalls we have to take into account. We know why we are doing it. We know roughly how to do it. But we don't know, which type of Allocator to choose?

Well, each Allocator is there for specific reasons. There is no Golden Bullet that will solve all your Memory Management needs. So far we've discussed 2 Types of Memory Managers. Stack-Based and Pool-Based, of which you can choose as you please.

Those are a great start. You can do alot with these two. But they aren't all that special to be entirely honest. We are programming a Game Engine, shouldn't there be Game-specific Memory Managers?

Introducing, the (Multi-)Frame-Based Memory Manager

The Frame-Based Memory Manager, is something very specific to Rendering Engine Design. Basically it is a Memory Allocator, either Pool- or Stack-Based, that get's reset each (or every n) Frame(s). It's just suddenly empty. Well not actually "empty", as that would entail you filling the entire thing with 0's, but we treat it as such by resetting the Stack Pointer or clearing the Index. We can of course still clear the entire thing if needed.

Why do we want such an absurd thing? An Allocator that dumps all our Memory each Frame?

Well, as you will see when writing the Rendering part of your Engine, there is alot of Memory we need to use in order to create a Scene. This Memory is sometimes completely useless on the next Frame. Things like Culling or Sorting Data that differs from Frame to Frame when the Camera moves. Having to create dynamic Arrays each frame, just to do Culling could stall our Engine. So, let's dump it!

Cool, we know a few cool things about Memory Management and Allocators...

Now what?

The Question remains: Should I use these Techniques?

To be honest: Probably not.

For most Games, what we have discussed here is overkill. Furthermore, messing up your Memory Management actually slows things down. As Robert Basler points out in the comments, speedwise it is a good idea to benchmark any custom Memory Allocation against malloc and new, just to make sure that we are actually doing anything sensible.

So, unless you intend to write some insane Rendering Engine, most of this is not worth the trouble and will introduce the possibility of more bugs in your code. However, this Series is about the nasty parts of writing Engines. And some Engines really do need good Memory Managers. Now you know how they work. Roughly. Or at least read some new words.

If you have any questions, feel free to contact me via Twitter @Spellwrath. I'm currently finishing up another Engine using the methods I have described in this Article.

Read more about:

Featured BlogsAbout the Author(s)

You May Also Like

.jpeg?width=700&auto=webp&quality=80&disable=upscale)