Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs or learn how to Submit Your Own Blog Post

Using Map/Reduce to Run Bulk Simulations

This article describes an approach using existing Map/Reduce tools to test games that have a high degree of variability in players’ starting scenarios to verify and improve on the initial engagement.

Abstract

As the players of a game experience only a small subset of a game’s possibilities before becoming invested, it is crucial to verify that all starting scenarios provide an engaging flow from curiosity to thirst. Designers need to use a testing tool to exercise and evaluate more scenarios than is possible using manual testing. Expanding the testing sample from anecdotes to a substantial subset of the population will yield statistically significant, more predictable assessment of player experiences. This article describes an approach using existing Map/Reduce tools to test games that have a high degree of variability in players’ starting scenarios to verify and improve on the initial engagement. Using this technique allowed our team to identify and eliminate opportunities for churn in our Action RPG at an affordable cost before launch.

Introduction

In free-to-play games, the designer can assume only that the player is curious about game at the outset. In order to retain the player, the game must provide an experience that satisfies the player’s curiosity and expands upon it until the player desires to consume the content in a greedy fashion, thirsts for it. Designers craft, test, and tune the steps from starting scenario to investment. Players recognize their relative lack of agency in the early stages of a game, rely upon the designers’ crafted experiences, and correctly blame the designers when the game presents an unreadable, unpreventable, and thus unfair outcome. If a player feels that the game hard-failed them after presenting no valid choices, then that is a significant opportunity for resentful abandonment. It is the responsibility of the designers to test and eliminate these churn opportunities prior to investment.

Some games have highly variable starting scenarios from player to player. For example, “Monster Strike” gives the player some initial random characters while introducing the gacha mechanics. Heavy use of random numbers or a variety of effects giving rise to emergent behavior contributes to highly variable outcomes at each step from curiosity to thirst. However, evaluating player experience during development is often a function of manual testing, for example via designer playthroughs or focus group testing. Based on such limited activity, the number of outcomes that can be tested is too small compared to the size of the population to accurately extrapolate. Predictably unfair outcomes will be missed in testing and discovered by players, some of whom will churn before becoming sufficiently invested in the game to accept the setback and try again. While the gaming market continues to expand, competition for players is already tough; developers cannot afford to write off a subset of their population to bad luck, especially small developers with small user acquisition budgets.

Approach

Instead of relying on small numbers of anecdotal playthroughs, how do you exercise your game so that you can reliably anticipate player experiences? One solution is to use a tool to run a representative subset of your scenarios with AIs acting for all players, and then capture and aggregate the outcomes. If the product of the number of starting combinations and the duration required to test each scenario exceeds your testing window, then the tool will need to be able to scale in parallel across multiple processors. Map/Reduce tools, like Hadoop, can provide the framework within which you can run parallel tests and aggregate the results.

Utilizing an existing MR tool has challenges, as they are more built for parsing, transforming, and aggregating large chunks of data, generally for analytics reporting. Also, most MR jobs for Hadoop are written in Java, and very few games are built in Java. The remainder is a walkthrough of an example of how to use Amazon’s Elastic MapReduce (EMR) tool to exercise the rules engine and AIs for a simple game written in C#.

The basic components of the solution are a rules engine (C#), AIs (C#), a test harness (C#), Mono, a reducer script (Python), and an EMR Cluster. The test harness is a standalone executable that can exercise the rules engine and AIs. It will be bundled into an executable compatible with the EMR servers. The cluster will be configured to use the test harness as the mapper, essentially converting scenario inputs into win/loss outcomes. Finally, the cluster will use the Python reducer to aggregate the results.

Ideally, your rules engine should be abstract and devoid of any dependencies on Unity or other game engine. It should just be pure game logic. The rules engine can inform the graphics engine using loose coupling designs, like callbacks, delegates, or event queues. However, for the sake of simplicity, these techniques are not illustrated in this example. Also, for the sake of brevity some of the code is omitted, but the full source can be found at https://github.com/seanpaustin/emr-for-bulk-simulation.

public class Engine { public enum Players { NONE, RED, BLUE }; public enum Actions { ROCK, PAPER, SCISSORS }; public Players Throw(Actions redAction, Actions blueAction) { … } }

Similarly, the AIs should also be abstract. For this exercise, I have built two different AIs that share a common interface.

public interface RPSAI { Engine.Actions GetNextThrow(Engine.Players player); void AddOutcome(Engine.Actions redAction, Engine.Actions blueAction, Engine.Players winner); }

The RandomAI implementation just picks its next move at random.

public class RandomAI : RPSAI { … }

The LetItRideAI will stick with the last throw, if it won, or attempt to beat the last throw, if it lost. It falls back to a random selection, if it draws too often and on the first throw.

public class LetItRideAI : RandomAI { … }

The TestHarness takes as arguments which AIs it should exercise and how many iterations it should play for that scenario. For each iteration, it writes the winner out to standard out. For Rock, Paper, Scissors winner is the only useful information, but a more rich game could also add more intelligence that gives insight to the player experience. For examples: How close did the winner get to failing before victory? What was the win condition: damage, poison, decking? How many times did the characters get to use their ultimate attack? How many turns did the contest last?

If possible, detect and report on whether a loss by the player representative AI was “unfair” and thus likely to induce churn. Was the player’s team effectively eliminated before the player was able to take a turn? Did the player ever have a path to victory?

public class TestHarness { public static void Main (string[] args) { if (args.Length == 3) { string redAI = args [0]; string blueAI = args [1]; int count = int.Parse (args [2]); Random stream = new Random (); Engine engine = new Engine (); LetItRideAI letItRideAI = new LetItRideAI (stream.Next()); RandomAI randomAI = new RandomAI (stream.Next()); RPSAI red = (redAI == "LetItRide" ? letItRideAI : randomAI); RPSAI blue = (blueAI == "LetItRide" ? letItRideAI : randomAI); for (int index = 0; index < count; ++index) { Engine.Actions redAction = red.GetNextThrow (Engine.Players.RED); Engine.Actions blueAction = blue.GetNextThrow (Engine.Players.BLUE); Engine.Players winner = engine.Throw (redAction, blueAction); … string out = (winner == Engine.Players.NONE ? "Draw" : (winner == Engine.Players.RED ? "Red" : "Blue")); // here is where you can report on "fairness" Console.WriteLine (redAI + " " + blueAI + " " + count + " " + out); } } else { Console.WriteLine ("Usage: Engine.exe {LetItRide|Random} {LetItRide|Random} {count}"); } } }

Install Mono, and build your project solution using xbuild yielding an executable file, in our case Engine.exe. The parameters are the test scenario that I want to run, and the output is the raw results. Running it should yield something like:

$ mono Engine.exe Random Random 3 Random Random 3 Red Random Random 3 Red Random Random 3 Draw

The file will require a Mono environment to run, but the EMR servers will not have Mono installed on them. You can get around this two ways. The first is to use EMR’s bootstrapper to install Mono on the server before the Hadoop job runs, but the bootstrapper was not sufficiently documented for us to succeed with this approach. The bootstrapper would fail with little to no logging to explain why. Ultimately, we opted to bundle the executable into a standalone executable using mkbundle:

$ mkbundle -o testharness Engine.exe --static --deps $ ./testharness Random Random 3 Random Random 3 Red Random Random 3 Red Random Random 3 Draw

If you choose the bundle option, make sure you bundle it for the target Linux environment, in EMR’s case amd64 Debian. Since our build server also runs at Amazon, this was an easy solution for us.

In the EMR tool, the mapper script is going to be called and passed a stream of data to process. However, the test harness only handles single executions. So, we need a simple looping script to run the test harness for each scenario allocated to this mapper.

$ cat mapper.sh while read line do ./testharness $line done < "${1:-/dev/stdin}"

The EMR tool is going to stream the output of the mapper into a reducer. Hadoop offers several off-the-shelf as Java classes, but we found it easier to write and test our own in Python.

$ cat reducer.py #!/usr/bin/python import sys counts={} for line in sys.stdin: key = line.rstrip('\n') counts[key] = counts.get(key, 0) + 1 for k,v in counts.iteritems(): print k, v

One challenge in working with an MR tool is the amount of startup time, and EMR is no exception. So, before you submit a job, it’s a good idea to run a local test by piping together the data, mapper, and reducer:

$ echo "Random Random 3" | ./mapper.sh | ./reducer.py Random Random 3 Red 2 Random Random 3 Draw 1

At this point, we have a test harness as an amd64 Debian compatible executable, a mapper.sh, and a reducer.py. Upload all of those to an S3 bucket. In that same bucket, create a subdirectory called “input”, and in that subdirectory upload a test data file that contains a listing of all the different scenarios you are interested in testing.

$ cat test.data Random Random 10 LetItRide Random 10 LetItRide LetItRide 10

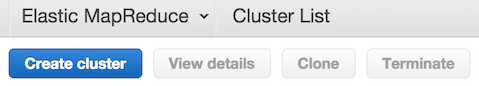

Now, navigate to the Elastic MapReduce web console:

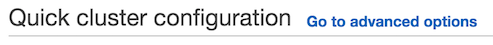

Click the “Create cluster” button and then click the “Go to advanced options” link.

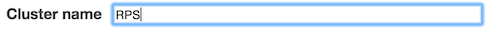

Give the cluster a name:

Under Hardware Configuration, setup whether you want to launch it in a VPC.

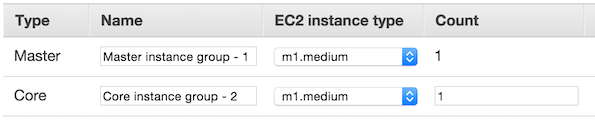

Setup how many of each kind of Hadoop node you need for the cluster. Refer to the Hadoop documentation for what each kind of node does and how many you should have.

For your initial proof of concept testing, you can set the EC2 instance types to m1.medium and use only 1 Master and 1 Core instance to keep costs down.

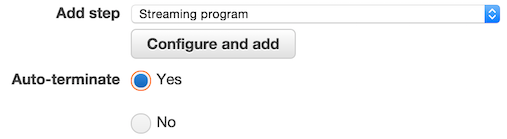

Under Steps, set Auto-terminate to “Yes” and add a “Streaming program” step.

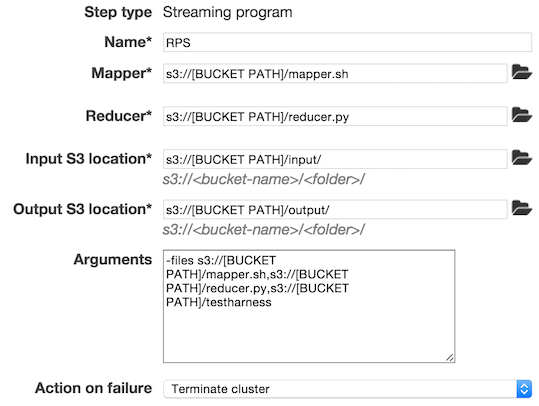

Add the path to your S3 bucket. Note the testharness in the -files part of the Argument. Without this, the mapper will fail.

Save the step, and then click on the “Create cluster” button. If the run will be “short”, then go grab a cup of coffee and wait for 15 minutes or so to see whether it succeeded. If it did succeed, the output will be in the output directory you specified when you created the cluster. Copy those files from S3, concatenate them, sort them, and import them into whatever presentation tool you use, for example: convert to CSV and distribute via email or import into a data warehouse for use with Tableau. The output below is from an expanded run that executed each scenario 100,000,000 times.

$ cat output.csv LetItRide,LetItRide,100000000,Blue,50000000 LetItRide,LetItRide,100000000,Red,50000000 LetItRide,Random,100000000,Blue,33339198 LetItRide,Random,100000000,Draw,33336277 LetItRide,Random,100000000,Red,33324525 Random,Random,100000000,Blue,33327297 Random,Random,100000000,Draw,33339355 Random,Random,100000000,Red,33333348

The results of the run demonstrates that there is a distinct difference in outcomes when LetItRide plays itself versus any game where the Random is involved. This is not only validation that Random is in fact random but also that LetItRide may be too predictable. Analyzing these results is also where you would attempt to identify which scenarios had problems with unfair player losses.

Results

In the below example from our ARPG game, you can see a scenario where a particular team composition was unable to pass the third wave of enemies in the first battle. Without a comprehensive test, this issue might have been made it through to production, where players who happened to have that team composition would not have been able to make progress in the game.

team,stage,wave,errors,pass_count ... team_0000001,stage_01,wave_01,0,100 team_0000001,stage_01,wave_02,0,100 team_0000001,stage_01,wave_03,0,0 team_0000002,stage_01,wave_01,0,100 team_0000002,stage_01,wave_02,0,100 team_0000002,stage_01,wave_03,0,99 team_0000003,stage_01,wave_01,0,100 team_0000003,stage_01,wave_02,0,100 team_0000003,stage_01,wave_03,0,94 ...

This procedure is only useful if it can be used to test a significant portion of possible starting scenarios, ideally all possibilities. If a team already has an existing Hadoop cluster that is ever idle, then any free intelligence gained from consuming the idle time is more useful than none. However, using EMR incurs a charge per execution as does expanding an existing Hadoop cluster specifically to accommodate this testing. In that case the solution must also be cost effective and timely to be useful.

A baseline run of RPS, that ran each scenario 10 times, consumed 4 normalized instance hours and took 15 minutes to complete:

4 instance hours * $0.055 per instance hour = $0.22

$0.22 / 30 iterations = $0.0073 per iteration

A more expansive run of RPS, that ran each scenario 100,000,000 times, consumed 12 normalized instance hours and took 132 minutes to complete:

12 instance hours * $0.055 per instance hour = $0.66

$0.66 / 300,000,000 iterations = $2.2 * 10-9 per iteration

The number of runs to create statistically significant results for all the possible starting scenarios for RPS was trivial compared to a game this tool was meant to solve for. The Action RPG we were building when we first tested this tool had all of the normal complexity in a mobile ARPG and stood as a reasonable real-world case. Comparing the runtimes of the test harnesses for the two showed that our ARPG required about 1,000x longer to run a single scenario than one run of RPS. Starting scenarios in our game consisted of teams of three to five unique characters out of a roster of 50. A separate analysis demonstrated that we needed to run on the order of 100 iterations of a single team in a single level to get statistically significant results to predict percent chance of success. Also, we wanted to test through the first ten levels.

$2.2 * 10-9 per iteration of RPS * 1,000 = $2.2 * 10-6 per iteration of ARPG

3 of 50 + 4 of 50 + 5 of 50 = 19,600 + 230,300 + 2,118,760 = 2,368,660 possible teams

2,368,660 teams * 10 levels * 100 iterations = 2.37 * 109 iterations

2.37 * 109 iterations * $2.2 * 10-6 per iteration = $5,200

While it was comprehensive, it was not cost effective. However, this represented the worst case estimate. We used several approaches to cut down that price. The first was to switch from On Demand to Spot instances, which saved at least 50% but did run the risk of not completing within the testing window, if the spot price never came down to our bid.

We also applied heuristics to reduce the number of possible teams. Specifically, we eliminated those characters that were in the roster but not available to new players; they were advanced characters that had to be unlocked later. We also eliminated characters that were strictly superior to other characters in the roster; for example, if one character was mechanically the same as a different character but had strictly better stats, then the better one was eliminated. Characters that were mechanically the same but varied in attributes that only mattered later in the game were eliminated. This reduced our effective roster to 35. Finally, we eliminated the three and four character teams; players always had five characters at their disposal. The purpose of this elimination was only to filter out invalid starting scenarios from our initial naive combinations. It is important to not filter out valid scenarios.

5 of 35 = 324,632 possible teams

324,632 teams * 10 levels * 100 iterations = 3.2 * 108 iterations

3.2 * 108 iterations * $1.1 * 10-6 per iteration = $350

While that was more than we could spend on a nightly process, it was low enough that we could run it a few times a month to check on progress and identify rough spots. Also, after running the comprehensive run once, much smaller, targeted runs were able to narrow down specific issues. If the run was small enough, engineers could use the same local test procedure to execute it locally rather than submitting it to EMR.

$ echo "team_0000001 stage_01 100" | ./mapper.sh | ./reducer.py

Refinements and Other Considerations

Once you have built out and tested this process, consider automating it using the AWS CLI tools or boto, if you prefer Python. In our case, we scripted it all and ran it via Jenkins, giving our designers direct execution and scheduling access without requiring them to have access to the Amazon web console. Using Amazon’s EMR tool also allowed us to spin these tests up on an ad hoc basis without having to directly manage the infrastructure. It helped to know Hadoop’s capabilities, but deep Hadoop experience was not required to set up a wide variety of very effective tests.

In addition to being a good tool for designers to better understand the full impact of their tuning decisions, this is also a good way to exercise your rules engine from a QA perspective. This tool can find edge conditions that would be challenging to uncover through manual testing. The errors column in the ARPG summary was used for this purpose and allowed us to identify scenarios that could be used to narrow the reproduction cases for intermittent errors.

For AI developers, they can use this tool to verify that their AI responds appropriately to a wider variety of circumstances than can be achieved through manual QA testing. For example, in a TCGO you could run all the AI variants (Easy, Medium, and Hard) against all other variants using all of the preconstructed decks available to the AIs to verify that Hard will beat Medium and Medium will beat Easy for all decks. This will allow you to detect scenarios where an AI variant is too effective with certain cards or certain decks.

Conclusion

While Map/Reduce tools are most often considered data analysis tools, they can also be a time and cost effective tool to give developers insight before launch about how a broad population of players is likely to experience their game. That insight allows developers to preserve more of the acquired players and convert them from curious to invested, passionate fans.

Read more about:

Featured BlogsAbout the Author(s)

You May Also Like

.jpeg?width=700&auto=webp&quality=80&disable=upscale)