Most Inspiring Game Animation Tech Talks of 2016

A quick summary of my favorite talks related to game animation programming/technology of 2016.

Over the course of this last year there have been tons of great talks, papers and articles that are relevant to game animation technology. With the year having just ended, I wanted to provide an overview and discussion of my 2 favorite talks of the last year. The first talk is by animation programmer Simon Clavet regarding the Motion Matching technique used in Ubisoft Montreal’s For Honor. The second talk, by senior gameplay/animation programmer Laurent Delayen, covers the techniques that were used in UE4 for animating characters in Epic Games’ Paragon title. If you haven’t been paying attention to game animation tech for the last year and want to catch up, this is the right place to start!

Motion Matching for Realistic Motion in “For Honor” (Simon Clavet, Ubisoft Montreal)

We first heard about Motion Matching being implemented in a AAA game from Michael Buttner (Ubisoft Toronto) at the nucl.ai 2015 conference. Although I was not there in attendance, articles about the talk made their way online where you could find a decent amount of information about their implementation and references to some past papers that introduced the concepts. Motion Matching is such an exciting topic in animation programming that it’s the subject of one of my favorite talks from 2016 as well: Simon Clavet’s (Ubisoft Montreal) talk from nucl.ai 2016 entitled “Motion Matching For Realistic Animation in For Honor”. A video of the presentation and its slides can both be found online.

Simon starts by giving a quick retrospective of the history of game animation. This includes how we started out with simple state machines to control animation and eventually moved on to blend trees/arrays which allowed us to blend continuously between assets. Somewhere along the way the animation state machines have become so large and complex, with so many relationships between the various states, that they become very difficult to manage and modify. He mentions the problem of figuring out where to insert new animations in the graph; his specific example is an animation called “start-strafe90-turnOnSpot45-stop”. This could be difficult for someone who is already familiar with the tree and excruciating for a new developer who hasn’t worked with the state machine yet. I can definitely echo this sentiment, as I can see how new developers on my team get overwhelmed by it as well.

The growing complexity of animation state machines (from left to right).

He carries on by asking why we need so many specific animations in the game if we can do parametric blends. So let’s just get the various extent animations required and use blend arrays to recombine animations and create the specific one we need in that context. This approach has 2 major problems. The first one is that for the various animations to blend properly, they need to be somewhat similar-looking. Therefore you end up with a ton of animations in the game, but they all look a little similar and you have no real variety. The second problem is that if the animations do not look similar enough, both animations can look good on their own but look terrible when combined (the “Brangelina” problem). Another problem with state machines mentioned by Simon is that you constantly have to be cutting up animations so that they match your various states. Not only is this process time-consuming and tedious, but it can also lead to having to make somewhat arbitrary decisions on where one move ends and the other begins. I love this sentence from the talk which totally nails it: “It’s sad to ask an animator where the start animation ends and where the loop begins”.

The talk then shifts over to introducing two of the more famous papers related to motion graphs and motion fields. In motion graphs, each animation contains specific predetermined points for branching in and out, and an algorithm decides when a branch should be taken and what is the destination frame and animation. The problem here is that for responsive controls we need to add more and more transition points, making the graph more and more dense and complex. Extrapolate that process a bit and you get to motion fields, where the key difference is that there are no transition points, and we can jump from any point of any animation to any point of any animation. Or as the paper puts it: “our run-time motion synthesis mechanism freely flows through the motion field in response to user commands.”

An example is presented of a character playing a very long animation to slow down, stop and settle. The speaker asks: When the character wants to stop, at which frame in this animation should we branch? The answer is that this point should be determined by a number of factors: the player’s current velocity, his current pose and the precise position where we wish the character to come to a stop. The algorithm combines all of these factors (and potentially others) in a fitness function to determine the cost of each potential transition. This cost is evaluated for each frame of each animation, and the frame with the lowest cost is selected. For example, a candidate transition will have a very low cost and be a viable option if:

The character’s pose in the candidate animation frame is similar to the current pose in game.

The character’s velocity in the candidate frame is similar to the velocity in game.

The piece of motion that follows that animation frame is similar to a predicted/desired future trajectory for the character.

Motion graphs versus motion fields (from https://www.youtube.com/watch?v=G_bLwfzYqF4)

Of note, the third criterion requires that the game be able to predict a desired future trajectory for the character so it can be compared against the motion in the candidate animations. An added advantage here is that if the future trajectory is affected by biases in the world, then the Motion Matching algorithm can use this information to select motions that take this into account as well. The result is characters who interact with the world in a more believable way. The speaker shows examples of a character performing a planted turn when going around a wall and another case of a character locomoting believably on a narrow and winding path. Maybe finally I can stop seeing characters running directly into walls with no reaction in the games I play!

Biases in the world affect the future trajectory prediction (in red), which is then used by the Motion Matching algorithm to select animations which adapt to the environment.

This is the core of the algorithm. It computes the cost of various criteria to simultaneously optimize:

Responsiveness: How closely the animation follows the desired trajectory.

Fluidity: How closely the candidate animation matches the current velocity.

Smoothness of animation: How closely the animation pose matches the character’s pose.

Another great advantage is that it becomes very simple to prioritize some criteria over others by changing the parameters of the cost function. In a way, programmers don’t need to spend much time worrying about the whole look vs responsiveness/consistency issue, because it can all be tuned with a few numbers by animators and/or designers. For example, does your specific game care more about responsiveness? If so, then add more weight to matching the desired/predicted future trajectory and the algorithm will more often select animations that take you close to where you want to be. Care more about animation smoothness? Add more weight to matching the character’s pose and velocity, and the algorithm will then prefer frames of animation which result in smoother blending.
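To make the weighting idea concrete, here is a minimal sketch of a weighted cost function with a brute-force search over candidate frames. It is not code from the talk: the `Candidate` layout, the `Weights` fields and the use of squared distances are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


@dataclass
class Candidate:
    """One frame of one animation, with features precomputed offline (hypothetical layout)."""
    clip: str
    frame: int
    pose: Vec        # e.g. foot positions relative to the root
    velocity: Vec    # root velocity at this frame
    trajectory: Vec  # flattened future root positions that follow this frame


@dataclass
class Weights:
    """Tuning knobs exposed to animators/designers (hypothetical names)."""
    pose: float = 1.0
    velocity: float = 1.0
    trajectory: float = 1.0


def cost(c: Candidate, pose: Vec, velocity: Vec, trajectory: Vec, w: Weights) -> float:
    """Lower is better: weighted mismatch in pose, velocity and future trajectory."""
    return (w.pose * sq_dist(c.pose, pose)
            + w.velocity * sq_dist(c.velocity, velocity)
            + w.trajectory * sq_dist(c.trajectory, trajectory))


def best_match(candidates, pose, velocity, trajectory, w):
    """Brute-force search: evaluate every candidate frame and keep the cheapest."""
    return min(candidates, key=lambda c: cost(c, pose, velocity, trajectory, w))
```

With two candidates, one that matches the current pose and one that matches the desired trajectory, raising `Weights.trajectory` favors the second (more responsive) and raising `Weights.pose` favors the first (smoother).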

This was the core of the algorithm, but a few other things are mentioned which are relevant for taking this from concept to implementation:

Trick 1: You only need to pose match a few bones to get a good result. Mostly it is the positions and velocities of the feet, and in For Honor they also used the weapon position.

Trick 2: Always keep an array of desired future positions for your character, and compare this against where the remainder of any animation will take you if you play it.

Trick 3: To speed up the search, a kd-tree can be used. In this case, the cost function becomes a distance function in the tree.

Trick 4: A potential optimization is to run the selection algorithm on the GPU. Simon mentions that this should be possible but his team hasn’t gotten around to it yet due to the GPU being fully utilized already on his project. Kristjan Zadziuk from Ubisoft Toronto demonstrates a node-graph system for creating motion shaders in his GDC talk. He doesn’t mention that these are running on the GPU but it seems like a first step in that direction.
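One way to read Trick 3: a weighted sum of squared differences equals a plain squared Euclidean distance once every feature has been scaled by the square root of its weight, and plain Euclidean distance is what a kd-tree searches on. The sketch below only checks that equivalence; it does not build a tree, and the function names are hypothetical rather than anything shown in the talk.

```python
import math
from typing import List, Sequence


def weighted_cost(a: Sequence[float], b: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of squared per-feature differences."""
    return sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights))


def scale_features(v: Sequence[float], weights: Sequence[float]) -> List[float]:
    """Scale each feature by sqrt(weight) so the weights are baked into the vector."""
    return [math.sqrt(w) * x for x, w in zip(v, weights)]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain squared Euclidean distance, the metric a kd-tree would use."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


weights = [4.0, 1.0, 0.25]
query = [1.0, 2.0, 3.0]
candidate = [0.5, 2.5, 1.0]

lhs = weighted_cost(query, candidate, weights)
rhs = sq_dist(scale_features(query, weights), scale_features(candidate, weights))
```

Here `lhs` and `rhs` agree up to floating-point error. A practical consequence under this scheme is that changing a weight means rescaling the stored features and rebuilding the tree.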

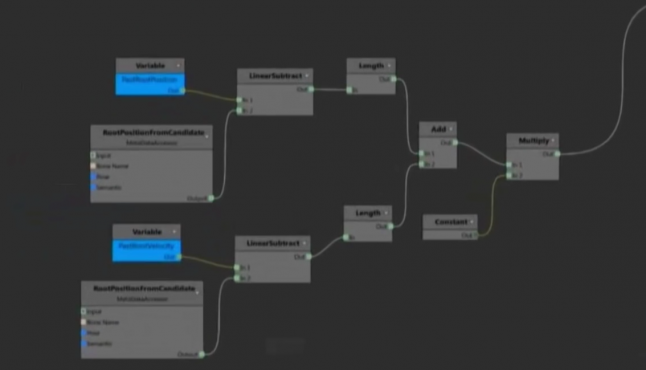

Example node-graph setup for motion shaders from Ubisoft Toronto (https://www.youtube.com/watch?v=KSTn3ePDt50)

That was it for Motion Matching. The rest of Simon’s talk goes into a variety of procedural touch-ups which are done to get the details looking right. These are not the focus of the presentation itself but remain important. One instance where touch-ups are important is when the animations selected by the Motion Matching algorithm do not take the character to exactly the required position or orientation.

Predicted trajectory in red and animation motion in blue. The predicted error between the two can be compensated procedurally.

For situations like these, the animation’s trajectory is warped slightly over time so that the speed of the character matches what the game requested. Here the speaker strongly suggested staying far away from time-scaling animations, since this rarely looks good (totally agree here!), and instead just sliding the character over the duration of the error to get to the desired spot, perhaps adding a bit of foot IK to clean up the feet (the second talk has a more involved solution to this problem, read on!). For foot IK, For Honor doesn’t pre-process animations to figure out when the feet should be locked to the ground. Rather, they run some simple heuristics (probably looking at the foot velocity and proximity to the ground) and lock the toe to the ground when it doesn’t move too much in the main animation. Another note is to not lock the foot if it is too far from its position in the animation, as this has a tendency to break the character’s pose/silhouette too much. In these types of situations it is often better to just slide the character a little.
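The talk does not spell out the foot-locking heuristic, so the following is only a guess at its shape: lock the toe when it is slow and near the ground in the animation, and release the lock when it drifts too far from where the animation wants the toe to be. The thresholds, the Z-up convention and the `FootLock` class are all assumptions.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class FootLock:
    max_speed: float = 0.15    # m/s: toe counts as still below this (hypothetical value)
    max_height: float = 0.05   # m above ground: toe counts as grounded (hypothetical value)
    max_drift: float = 0.25    # m: release the lock beyond this distance (hypothetical value)
    locked_at: Optional[Vec3] = None

    def update(self, toe_pos: Vec3, toe_speed: float, ground_height: float) -> Vec3:
        """Return the toe position to hand to IK this frame.

        toe_pos is the animated toe in world space (Z up); toe_speed is the
        toe's speed in the source animation.
        """
        planted = (toe_speed <= self.max_speed
                   and toe_pos[2] - ground_height <= self.max_height)
        if not planted:
            self.locked_at = None
        elif self.locked_at is None:
            self.locked_at = toe_pos  # start a new lock at the current spot
        # Holding a lock far from the animated toe would break the pose,
        # so release it and let the character slide instead.
        if self.locked_at is not None and math.dist(self.locked_at, toe_pos) > self.max_drift:
            self.locked_at = None
        return self.locked_at if self.locked_at is not None else toe_pos
```

A production version would presumably blend in and out of the lock rather than snapping, which this sketch leaves out.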

That does it for this talk from Simon which was just filled to the brim with great technical tips for animation programming. From demonstrating how Motion Matching can work in a AAA video game to the list of tricks for procedural fix-ups, it’s all great stuff that is super valuable to any team working on animation-heavy games. Simon leaves us with a parting shower thought: “Let’s just directly markup the mocap with the controller inputs that should trigger the moves … and generate the game automatically”. Inspiring or crazy?!

Bringing a Hero from Paragon to Life with UE4 (Laurent Delayen, Epic Games)

My second favorite talk from 2016 was by Laurent Delayen, senior animation and gameplay programmer at Epic Games. His talk was presented at nucl.ai 2016 (sounds like nucl.ai is THE conference for animation programming these days) and is about the various animation techniques used for characters in Epic Games’ new title, Paragon.

He begins by speaking about some of their objectives when creating their new animation setup. Some of their goals were pretty typical such as good visual fidelity, efficiency at run-time, and reduction of foot sliding. However some other goals were more indicative of the type of game they were making:

Mostly using physics motion (meaning: they want the game’s motion model to be extremely consistent)

Designer driven with high-adaptability (meaning: their designers should be able to tweak the motion model’s parameters quickly and have the animation system handle these changes without too much degradation of visual fidelity)

Efficient to author (meaning: the game will have many different characters so each one individually shouldn’t take too long to create)

The last of these objectives, “efficient to author”, is largely achieved by their usage of an animation template which is followed by all characters in the game. The speaker describes an animation template as a set of base animation systems, various layers, and post-processing fix-up effects which remains roughly the same for every character. The uniqueness of each character comes from the different assets that are used at each node/step. This should be a huge time-saver for them, since adding new characters would mostly consist of populating the various steps with the required animations, without needing to set up any complex animation systems.

The animation template used for characters in "Paragon".

At the base of their system is a state machine that is used to control transitions between the various full-body states. These can either be single animations or blend spaces. For example, the locomotion cycle consists of a blend space which contains animation loops for various ground inclinations. Since the various assets in the blend space could be slightly different, Laurent mentions sync markers which are placed in each of the animations as one foot crosses over the character’s root bone. When performing continuous blending between assets, it should now be possible to synchronize that specific moment between each of the assets.

Blend space used for locomotion cycles at various ground orientations.

Following this, we move on to the concept of reverse root motion, which is used in Paragon for starts, stops and pivots. With this technique, markers are placed in the world to describe the motion and help to synchronize the animation.

For starts, a marker is placed in the world when the player first goes from idle to moving. They can then synchronize the animation with the distance to this marker.

For stops, a marker is placed in the world at the location where we want the player to settle. The animation can then be synchronized with the distance to the marker.

For pivots, the marker is placed at the point of zero velocity and the animation will be synchronized first with the distance to the marker and secondly with the distance from the marker.
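As a rough illustration of synchronizing a stop animation with the distance to its marker, the sketch below looks up the animation time whose remaining root travel equals the character's current distance to the marker. The precomputed distance curve and the function name are assumptions; the talk does not describe the implementation at this level.

```python
from bisect import bisect_left
from typing import Sequence


def time_for_distance(times: Sequence[float],
                      remaining: Sequence[float],
                      distance_to_marker: float) -> float:
    """Find the animation time at which `distance_to_marker` is still left to travel.

    times:     increasing sample times of the stop animation.
    remaining: root distance still to be covered at each sample,
               non-increasing and ending at 0 (hypothetical precomputed curve).
    """
    if distance_to_marker >= remaining[0]:
        return times[0]    # further away than the clip covers: hold the first frame
    if distance_to_marker <= remaining[-1]:
        return times[-1]   # at the marker: hold the settled pose
    # `remaining` decreases, so search its negation, which increases.
    negated = [-r for r in remaining]
    i = bisect_left(negated, -distance_to_marker)
    r0, r1 = remaining[i - 1], remaining[i]
    t0, t1 = times[i - 1], times[i]
    alpha = (r0 - distance_to_marker) / (r0 - r1)  # r0 > r1 is guaranteed by the search
    return t0 + alpha * (t1 - t0)


times = [0.0, 0.5, 1.0]
remaining = [2.0, 0.5, 0.0]
t = time_for_distance(times, remaining, 1.25)  # halfway through the first segment
```

Each frame the game would evaluate the animation at the returned time instead of advancing it by the frame delta, so the pose stays tied to the distance left. A start would use the same lookup on distance travelled from the marker.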

Reverse Root Motion with representative markers. Starts (yellow) and stops (red).

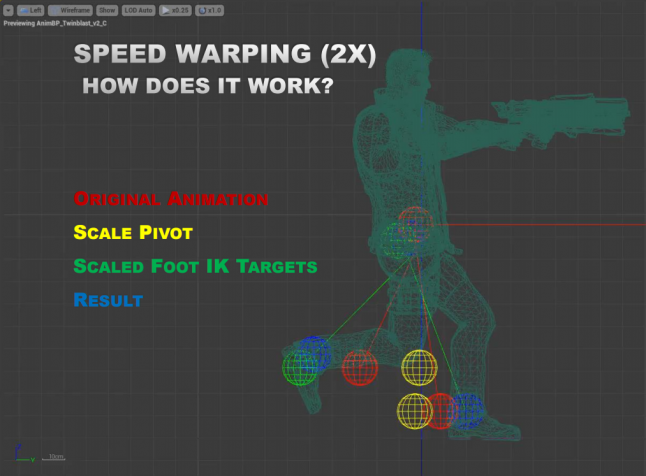

The major disadvantage of this technique is that matching the distance covered in the animation with the distance from the marker would require scaling the animation’s playback rate. This rarely gives natural-looking results (the previous talk mentioned this as well), so rather than scaling the playback rate they decided to modify the stride length. In a nutshell, the system begins by looking at the distance of each foot from the character’s root and then scales this distance by the factor that they wish to apply to the animation’s displacement. For example, if we want the character to move at 1.25x the speed that is in the animation, then we will try to place the feet 1.25x further from the character’s root (this is in 1 dimension along the axis of movement). I love this technique as it follows the rule of always scaling the existing motion up/down rather than trying to artificially create new motion in the animation. Laurent shows a side-by-side video of this technique compared to scaling of the playback rate, and you can tell right away that the results are noticeably better when trying to make the animation cover more or less distance. This gem was the highlight of the talk in my opinion; the results were that good! Thanks to this technique, in Paragon they can scale up the motion in animations by up to 60%, with an additional 15% done via playback rate scaling.
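The 1.25x example can be sketched in a few lines: take the foot's offset from the root, scale only its component along the movement axis, and use the result as the IK target. This is a simplified reading of the description, with hypothetical names, and it assumes `move_dir` is a unit vector.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def stride_warp_target(foot: Vec3, root: Vec3, move_dir: Vec3, scale: float) -> Vec3:
    """IK target for a foot after scaling its stride along the movement axis.

    Only the component of the foot offset along `move_dir` (assumed unit length)
    is scaled; the sideways and vertical components are left untouched.
    """
    offset = tuple(f - r for f, r in zip(foot, root))
    along = dot(offset, move_dir)       # signed distance from root along the axis
    extra = (scale - 1.0) * along       # how much further the foot must go
    return tuple(f + extra * d for f, d in zip(foot, move_dir))


# Foot 0.4 m ahead of the root, character moving along +X at 1.25x animation speed.
target = stride_warp_target((0.4, 0.1, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), 1.25)
```

The target ends up 0.5 m ahead of the root with its sideways offset unchanged. A foot behind the root is pushed further back by the same factor, which is what lengthens the stride.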

Speed/Stride warping. IK targets are in green, the original animation is in red, and the resulting foot position is in blue.

Another thing that can be noticed here is that if the scaling is quite large or small, the character’s feet will have a hard time reaching the IK targets. This is doubly the case considering they allow the legs to compress but never to extend further. To deal with this problem, whenever the feet have a hard time reaching the targets they allow some downward displacement on the hips to shorten that gap. The hip upward/downward motion is then smoothed out via springs.
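A possible shape for the hip adjustment, under assumptions of my own rather than details from the talk: compute how far the hips must drop for a fully extended leg to reach the IK target, clamp it, and ease toward that value with a critically damped spring. The geometry, the `max_drop` clamp and the spring constants are all hypothetical.

```python
import math
from typing import Tuple


def required_hip_drop(hip_height: float, horizontal_reach: float,
                      leg_length: float, max_drop: float = 0.3) -> float:
    """Downward hip offset needed so a straight leg can reach the foot target.

    hip_height:       vertical distance from the hip down to the target.
    horizontal_reach: horizontal distance from the hip to the target.
    """
    if horizontal_reach >= leg_length:
        return max_drop  # unreachable even with the hip at target height: clamp
    reachable_height = math.sqrt(leg_length ** 2 - horizontal_reach ** 2)
    return min(max_drop, max(0.0, hip_height - reachable_height))


def spring_step(x: float, v: float, target: float,
                stiffness: float, dt: float) -> Tuple[float, float]:
    """One semi-implicit Euler step of a critically damped spring toward `target`."""
    damping = 2.0 * math.sqrt(stiffness)
    accel = stiffness * (target - x) - damping * v
    v += accel * dt
    x += v * dt
    return x, v


drop_target = required_hip_drop(hip_height=1.0, horizontal_reach=0.6, leg_length=1.0)
offset, velocity = 0.0, 0.0
for _ in range(600):  # ten seconds at 60 Hz
    offset, velocity = spring_step(offset, velocity, drop_target, stiffness=100.0, dt=1 / 60)
```

In this example the hips need to drop about 0.2 m, and the spring settles on that value without overshooting noticeably.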

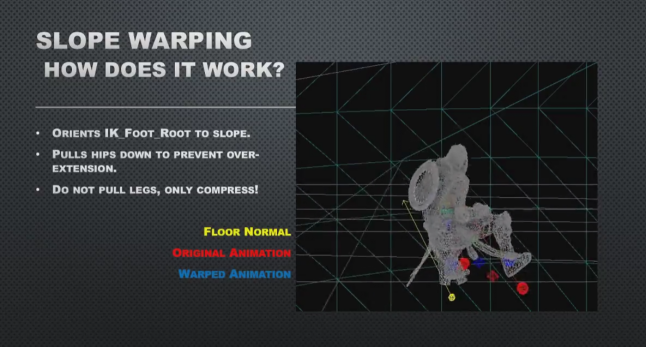

For motion on slopes, they animated specific loco cycles but did not create specific versions of starts, stops and pivots due to the sheer number of assets it would require. For these cases, Laurent outlined their solution: Grab the ground normal below the character and rotate the feet locations of the current animation by this normal to obtain the IK targets. All that is required then is some leg IK (as in the stride warping) to get the feet to reach the IK targets.
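The slope step can be illustrated by rotating each foot's offset from the root by the rotation that takes world-up onto the ground normal. The sketch uses Rodrigues' rotation formula and assumes a Z-up world (as in UE4) and a normal that does not point straight down; the function names are hypothetical and the talk does not prescribe this exact math.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate_up_to_normal(p: Vec3, normal: Vec3, up: Vec3 = (0.0, 0.0, 1.0)) -> Vec3:
    """Rotate p by the shortest rotation that maps `up` onto the ground normal."""
    length = math.sqrt(dot(normal, normal))
    n = tuple(x / length for x in normal)
    axis = cross(up, n)
    sin_a = math.sqrt(dot(axis, axis))
    cos_a = dot(up, n)
    if sin_a < 1e-9:
        return p  # flat ground: nothing to rotate
    k = tuple(x / sin_a for x in axis)
    k_cross_p = cross(k, p)
    k_dot_p = dot(k, p)
    # Rodrigues' formula: p cos(a) + (k x p) sin(a) + k (k . p)(1 - cos(a))
    return tuple(p[i] * cos_a + k_cross_p[i] * sin_a + k[i] * k_dot_p * (1.0 - cos_a)
                 for i in range(3))


def slope_ik_target(foot: Vec3, root: Vec3, ground_normal: Vec3) -> Vec3:
    """IK target: the animated foot position tilted about the root to follow the slope."""
    offset = tuple(f - r for f, r in zip(foot, root))
    rotated = rotate_up_to_normal(offset, ground_normal)
    return tuple(r + o for r, o in zip(root, rotated))
```

On a 45-degree uphill slope along +X, a foot animated 1 m in front of the root moves forward and up so that its offset lies in the slope plane.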

Slope warping. Original foot positions in the animation are in red.

In my opinion, these were the highlights of Laurent’s great talk. He ends by showing some footage of the game created by users; the quality of animations can be noticed immediately. The visual fidelity of the results is even more impressive when you consider how much each animation is being modified to achieve the speed and responsiveness required for the design of the game.

Parting Thoughts

There you have it: in my opinion, the 2 best talks related to animation programming of the last year. If you have the time, I strongly suggest checking out the video links provided to watch the talks in their entirety. There are tons of details mentioned in there that I didn’t highlight in this article.

On a closing note, big thanks are in order for Simon Clavet and Laurent Delayen for taking the time to share their findings and results with the rest of us. It’s pretty obvious that the concepts they shared here will be having an impact on the world of game animation tech for the years to come. I can’t wait to see what 2017 will bring!
