Populating an entire game world with characters that give an impression of life is a challenging task, and it's certainly no simpler in an open world. Senior AI programmer John Krajewski explains how he did it in Destroy All Humans 2.

Populating an entire game world with characters that give an impression of life is a challenging task, and it's certainly no simpler in an open world, where gameplay is less restricted and players are free to roam and experience the world however they choose. The game engine has to be flexible enough to react and create interesting scenarios wherever the player goes. In particular, the demands on the AI are different from a linear game, requiring an approach that, while using established game AI techniques, emphasizes a different aspect of the architecture.

This article discusses the data-driven AI architecture constructed for Pandemic Studios' open world title Destroy All Humans 2. It describes the framework that holds the data-defined behaviors that characters perform, and how those behaviors are created, pieced together, and customized.

The premise of an open world game with sandbox gameplay is to give players the freedom to do what they want, the freedom to create their own game within the world the developers provide. Their play is not linear, which is fantastic for a sense of immersion, but reduces the ability of the game developer to control, limit, and pre-script scenarios that the players encounter.

The AI code needs to be built on a foundation that is flexible enough to respond to any eventuality. It needs to handle a domain of gameplay that is broader in scope than a linear title and react to situations that might not have been anticipated. In effect, the AI needs to have a strong emphasis on breadth of behavior over depth. That is, the architecture must promote the ability to create large numbers of behaviors and make applying them to characters as easy as possible.

One solution to this challenge is to make the behaviors data-driven. They should be created without requiring changes to code, pieced together and reused as shared components, and substituted out for specialized versions. Ideally, the developer should be able to tweak not only the settings of a behavior, such as how long a timer lasts or how aggressive an enemy is, but also the very structure of the behavior itself: for example, which steps are needed to complete a given task and how those steps are performed. By allowing our behaviors to fit into multiple situations, be reusable, and be quick to create and customize, we can more effectively create all the actions that the characters will need to give the game life.

The basis for the behavior system in Destroy All Humans 2 is a hierarchical finite state machine (HFSM), in which the current state of an actor is defined on multiple levels of abstraction. At each level in the hierarchy, the states will potentially use sub-level states to break their tasks into smaller problems (for example, attackenemies is at a high level of abstraction and uses the less-abstract fireweapon below it to perform part of its function). This HFSM structure is a common method used in game AI to frame a character's behaviors, and it has several immediate benefits over a flat FSM. (For more information about HFSMs, see Resources.)

In our implementation, each state in the HFSM is called a behavior and makes up the basic architectural unit of the system. Everything that characters can do in the game is constructed by piecing together behaviors in different ways that are allowed by the HFSM. A behavior can start more behaviors beneath it that will run as children, each performing a smaller (and more concrete) part of the task of the parent. Breaking each task into smaller pieces allows us to reap a lot of mileage out of the behavior unit (reusing it in other behaviors, overriding it in special cases, dynamically changing the structure, and so forth) and spend more time making the system intuitive and easy to modify.
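As a rough illustration of this unit, the sketch below models a behavior as a tree node whose active chain describes the actor's state at each level of abstraction. The class and method names are assumptions made for the example, not the game's actual code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Behavior:
    """One state in the hierarchy; all names here are illustrative."""
    name: str
    children: List["Behavior"] = field(default_factory=list)
    active_child: Optional["Behavior"] = None

    def activate_child(self, name: str) -> "Behavior":
        # Select the named child as the single active child of this parent.
        for child in self.children:
            if child.name == name:
                self.active_child = child
                return child
        raise KeyError(name)

    def active_path(self) -> List[str]:
        # The actor's state is the chain of active behaviors, top to bottom.
        path = [self.name]
        if self.active_child is not None:
            path.extend(self.active_child.active_path())
        return path


fire = Behavior("fireweapon")
attack = Behavior("attackenemies", children=[fire])
root = Behavior("root", children=[attack])
root.activate_child("attackenemies").activate_child("fireweapon")
print(root.active_path())  # ['root', 'attackenemies', 'fireweapon']
```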

Starting children. There are many ways to break a task into smaller pieces, and the correct choice ultimately depends on the type of task. Does the task require maintaining certain requirements, performing consecutive steps, randomly performing an action from a list, or something else? In our implementation, we allow several methods of breaking down a behavior into smaller pieces by allowing different ways to start child behaviors.

Prioritized children. The first and most common way to start children is as a list of prioritized behaviors. Behaviors that are started as prioritized will all be constructed at once (memory is allocated for them and they are added as children of the parent) and set into a special pending mode. (See Figure 1.)

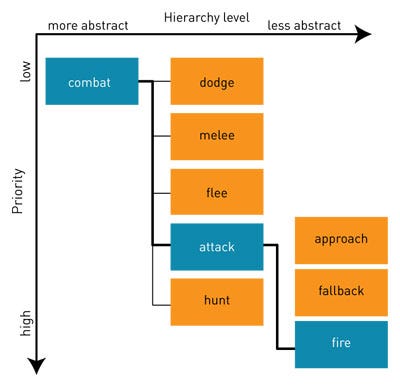

FIGURE 1 A combat behavior starts prioritized children, which in turn starts more prioritized children. Pending behaviors are orange and active ones are blue.

When a behavior is in pending mode, it is not updated; instead, it waits until the behavior itself decides it can activate, based on its own settings. When activated, the behavior will in turn start any children it has. Only active behaviors can have children, so a pending behavior will wait before starting its children.

As a rule, only one active behavior can run beneath a given parent, which creates a problem: what to do when multiple behaviors are able to run. We need to set a priority to determine which sub-task is more important. When starting children as prioritized, we define their priority implicitly based on the order the behaviors are added to the parent. The earlier a behavior is listed, the higher its priority (see Isla in Resources).

This solution avoids the problem that would have resulted had we determined priority strictly by number, such as priority-creep, in which priorities become larger and larger, trying to trump the rest. Here we localize the priority definition, so it's only relative to the small subset of behaviors that are started as siblings.

In the example above, we see a hierarchy of behaviors and the children available under each, with fire active as a child of attack, which in turn is active as a child of combat. If the currently pending behavior (dodge) were to determine that it needs to start (when the NPC detects it is being fired upon), it will interrupt its active sibling (attack) and revert it to pending, which in turn will delete all children of attack. Once no other active sibling behaviors are running, dodge may begin.
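The arbitration described above can be sketched as follows. This is a simplified model under stated assumptions: each child exposes a hypothetical `can_activate` callable, list order stands in for priority, and the deletion of an interrupted behavior's own children is omitted.

```python
from typing import Callable, List, Optional


class Child:
    def __init__(self, name: str, can_activate: Callable[[], bool]):
        self.name = name
        self.can_activate = can_activate  # the behavior's own activation test
        self.active = False               # False means "pending"


def arbitrate(children: List[Child]) -> Optional[Child]:
    """Children are listed from highest to lowest priority.

    The first child that is able to run becomes the single active one;
    every other sibling is reverted to pending.
    """
    winner = next((c for c in children if c.can_activate()), None)
    for c in children:
        c.active = c is winner
    return winner


under_fire = {"value": False}
dodge = Child("dodge", lambda: under_fire["value"])
attack = Child("attack", lambda: True)
siblings = [dodge, attack]  # list order is the priority order

first = arbitrate(siblings)
under_fire["value"] = True  # the NPC detects it is being fired upon
second = arbitrate(siblings)
print(first.name, second.name)  # attack dodge
```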

This method of applying priority implicitly works well in most cases, but sometimes the importance of the tasks cannot be described with a simple linear ordering. To handle cases in which a behavior is doing something important and should not be interrupted by non-critical tasks (even if they're higher priority), we can implement a feature called "can interrupt." Essentially, an active behavior may receive a boost in priority, preventing interruption during specific parts of its execution.

With this boost, priorities can be specified in ways more complex than simple linear ordering. For example, while melee is listed at a lower priority than dodge in Figure 1, it should still be allowed to finish its animation even if dodge decides to start. By giving it a boost in priority while running, we prevent dodge from cutting it off mid-animation.
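One way to picture the boost is as a flag that the arbiter checks before letting a sibling take over. The sketch below is an assumption about how such a rule could look; the `boosted` flag and the choice to treat dodge and melee as direct siblings are simplifications made for the example.

```python
from typing import List, Optional


class Child:
    def __init__(self, name: str):
        self.name = name
        self.wants_to_run = False
        self.active = False
        self.boosted = False  # hypothetical flag standing in for "can interrupt"


def arbitrate(children: List[Child]) -> Optional[Child]:
    """Children are listed from highest to lowest priority."""
    current = next((c for c in children if c.active), None)
    if current is not None and current.boosted:
        return current  # boosted: siblings may not cut it off
    winner = next((c for c in children if c.wants_to_run), None)
    for c in children:
        c.active = c is winner
    return winner


dodge, melee = Child("dodge"), Child("melee")
siblings = [dodge, melee]           # dodge outranks melee in list order

melee.wants_to_run = True
arbitrate(siblings)                 # melee starts its swing
melee.boosted = True                # the swing animation is playing

dodge.wants_to_run = True
during_swing = arbitrate(siblings)  # dodge has to wait
melee.boosted = False               # the animation has finished
after_swing = arbitrate(siblings)   # now dodge takes over
print(during_swing.name, after_swing.name)  # melee dodge
```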

Sequential and random children. Other ways to start child behaviors are known as sequential and random. Behaviors that are started sequentially are run in the order that they are listed. If the first can run, it will do so until it completes on its own, followed by the second, and so on. When the last behavior in the sequence finishes, the parent finishes as well. For a group of child behaviors started randomly, only one will be chosen to run, and the parent will complete once its child finishes.
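The two start modes can be sketched in a few lines. As a simplifying assumption, each child here is a plain callable that runs to completion when called, whereas real behaviors run over many frames; the helper names are invented for the example.

```python
import random
from typing import Callable, List, Sequence

Child = Callable[[], None]


def run_sequential(children: Sequence[Child]) -> None:
    # Run every child in listed order; the parent is done after the last one.
    for child in children:
        child()


def run_random(children: Sequence[Child], rng: random.Random) -> None:
    # Pick exactly one child; the parent is done when that child finishes.
    rng.choice(children)()


log: List[str] = []


def step(name: str) -> Child:
    return lambda: log.append(name)


run_sequential([step("draw"), step("aim"), step("fire")])
sequential_log = list(log)

log.clear()
idle_options = ["wave", "whistle", "stretch"]
run_random([step(n) for n in idle_options], random.Random(0))
random_log = list(log)
print(sequential_log)  # ['draw', 'aim', 'fire']
```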

Non-blocking children. Behaviors can also be started as non-blocking, in which case they may activate even if there are already other active behaviors running beneath the parent. They exist outside the prioritized list. These behaviors are useful for performing tasks that work simultaneously with others, such as firing while moving, or playing a voice over on a specific condition, or activating and deactivating effects. Generally, anything performed by a non-blocking behavior must not interfere with any other sibling behaviors that might be running, since a non-blocking behavior will only be interrupted when its parent is deactivated (and never by a sibling behavior).
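A minimal sketch of that rule, assuming a parent that tracks one blocking slot plus a separate set of non-blocking children (the class and behavior names are illustrative):

```python
from typing import Optional, Set


class Parent:
    def __init__(self) -> None:
        self.blocking: Optional[str] = None  # at most one active blocking child
        self.non_blocking: Set[str] = set()  # run alongside the blocking child

    def start_blocking(self, name: str) -> None:
        # Replaces the previous blocking sibling; non-blocking ones are untouched.
        self.blocking = name

    def start_non_blocking(self, name: str) -> None:
        self.non_blocking.add(name)

    def deactivate(self) -> None:
        # Only deactivating the parent stops non-blocking children.
        self.blocking = None
        self.non_blocking.clear()

    def running(self) -> Set[str]:
        names = set(self.non_blocking)
        if self.blocking is not None:
            names.add(self.blocking)
        return names


combat = Parent()
combat.start_blocking("move_to_cover")
combat.start_non_blocking("fire")
combat.start_blocking("dodge")  # interrupts move_to_cover, not fire
while_dodging = combat.running()
combat.deactivate()
print(sorted(while_dodging))  # ['dodge', 'fire']
```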

By using various combinations of these methods up and down the tree, we can form decisions and task handling over multiple levels that would be difficult to define in a single behavior.

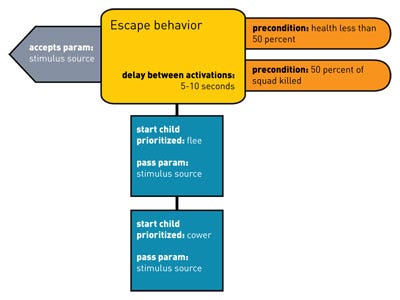

From this framework, we have defined the basic unit that will be used to construct HFSMs: the behavior. Now, by making behaviors sufficiently data-driven, we can expose not only their values and settings for modification, but the structure of the actions they perform as well. (See Figure 2.)

FIGURE 2 When either of this behavior's preconditions is satisfied, it will activate, passing the stimulus source parameter to its two children. Since the children are prioritized, the higher-priority flee child will run whenever it can, allowing cower to activate only when flee fails.

Each behavior is self-contained. Everything about it is determined within the behavior itself: when it can activate, when it can no longer run, what interrupts it, and what it does on activation, deactivation, and update. Most significantly, though, it defines what children it starts. Each of these features of a behavior is set within a .behavior file, one per behavior, which is parsed and read in with the rest of the game data.

Once a behavior is created in a pending state, its duty from then on is to decide when it can activate, since it contains its own activation requirements. These requirements are defined by attaching a list of precondition objects to the behavior. A precondition is a set of rules that may be evaluated to be true or false after checking conditions from the world. Game events, the health level of the character, and commands issued from a squad leader are all conditions that can contribute to activating a behavior, and a precondition can be configured to query any of them.

The settings that define a precondition are stored in a separate file and can be reused by other behaviors as well. This allows us to construct a library of preconditions that are easily selected for a new behavior simply by listing them in the behavior's configuration file.
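A shared precondition library might look something like the sketch below. The precondition names, the world fields, and the thresholds are all hypothetical. Activating when any listed precondition holds follows the Figure 2 caption, and treating an empty list as "always allowed" is an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

World = Dict[str, float]
Precondition = Callable[[World], bool]

# Shared library: each precondition is defined once and referenced by name.
PRECONDITIONS: Dict[str, Precondition] = {
    "low_health": lambda world: world["health"] < 0.25,
    "heard_gunfire": lambda world: world["gunfire_events"] > 0,
}


@dataclass
class BehaviorConfig:
    name: str
    preconditions: List[str] = field(default_factory=list)


def can_activate(config: BehaviorConfig, world: World) -> bool:
    # A behavior with no preconditions may always activate.
    if not config.preconditions:
        return True
    return any(PRECONDITIONS[name](world) for name in config.preconditions)


flee = BehaviorConfig("flee", ["low_health", "heard_gunfire"])
wander = BehaviorConfig("wander")

calm = {"health": 0.9, "gunfire_events": 0}
noisy = {"health": 0.9, "gunfire_events": 2}
print(can_activate(flee, calm), can_activate(flee, noisy))  # False True
```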

Once a behavior is activated, it can perform its actual purpose, which in most straightforward cases is data-defined as well. Any behavior can start an asset on the character (play an animation, sound, or effect) or start more children without requiring any code-side changes, which gives us tremendous flexibility in being able to quickly flesh out the structure of new behaviors. For more complicated actions, we use code-supported behaviors.

A code-supported behavior has all the configuration settings available to a base-level behavior, but with some extras available that plug into a corresponding module created in code. Fireweapon, pathfollow, and melee are examples of behaviors that have a code side associated with them, performing actions that would be too specific to generalize and make available for all behaviors. In fireweapon we have, for example, settings for "delay between shots" and "shots to fire in a burst," that would be useless on most other behaviors. These code-supported behaviors fit into the tree like any behavior and are typically found as the leaves of an HFSM, used at the bottom level as children of more abstract behaviors. Generally, the role of the higher-level behaviors is to separate out objects and tasks that need performing on those objects, and then start children to make them happen.
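The split between purely data-defined behaviors and code-supported ones could be sketched with a small registry. Everything here is an assumption for illustration: the `code_module` key, the decorator, and the setting names merely echo the fireweapon settings mentioned above.

```python
from typing import Callable, Dict

# Registry of code-side modules, keyed by the name used in behavior data.
CODE_MODULES: Dict[str, Callable[[dict], str]] = {}


def code_supported(name: str):
    def register(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        CODE_MODULES[name] = fn
        return fn
    return register


@code_supported("fireweapon")
def fire_weapon(settings: dict) -> str:
    # Settings that only make sense for this particular module.
    return f"fire {settings['shots_per_burst']} shots, {settings['delay_between_shots']}s apart"


def start_behavior(config: dict) -> str:
    module = CODE_MODULES.get(config.get("code_module", ""))
    if module is None:
        # Plain data-defined behavior: just start an asset on the character.
        return f"play {config['animation']}"
    return module(config["settings"])


burst = start_behavior({
    "code_module": "fireweapon",
    "settings": {"shots_per_burst": 3, "delay_between_shots": 0.2},
})
cower = start_behavior({"animation": "cower"})
print(burst)  # fire 3 shots, 0.2s apart
print(cower)  # play cower
```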

However, because the objects we're dealing with aren't defined until the game is actually running (such as the current target of a character during a battle or the last person to damage the player), we need a way to reference and make decisions about those objects within our behaviors. We need a parameter system.

Giving behaviors a list of settings in their configuration file adds a lot of generalization to the system, but it does not allow objects to be dynamically manipulated at run time, responding to arbitrary situations. For example, the target of fireweapon cannot be known when that behavior is being written, as it will vary. Each behavior must be able to understand and make decisions about arbitrary game objects in the world, decided at run time.

The solution we used in Destroy All Humans 2 was to allow parameters to be passed from a parent to its children when the children were created, letting those behaviors query and manipulate the object passed in as a parameter or pass it along to their own children.

Any behavior that needs to accept a parameter defines a slot for it, which must be filled by whatever parent behavior activates it as a child. From there, the variable in that slot can be used in a number of ways: it can be passed on to its own children, sent an event or message, or, for code-supported behaviors, made available to the code side. Pathfollow will seek to the object passed as its first parameter, for example, while melee will swing in the direction of its first parameter.

With this addition, the states in our HFSM can essentially send objects along their transitions (in our case, between parent and child), plugging them into other behaviors that expect them and use them as a target of their functionality. It's a way to blend some script-like functionality into the more rigid structure of an HFSM, giving extra flexibility without sacrificing organization.
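The slot mechanism can be sketched as follows. This is a simplified model with invented names: children are created in the same call as their parent, and the `forward` mapping from child slot to parent parameter is an assumption about how the data might express the hand-off.

```python
from typing import Dict, List, Tuple


class Behavior:
    def __init__(self, name: str, slots: Tuple[str, ...] = ()):
        self.name = name
        self.slots = slots  # parameters the parent must fill
        self.params: Dict[str, object] = {}
        self.children: List[Tuple["Behavior", Dict[str, str]]] = []

    def add_child(self, child: "Behavior", forward: Dict[str, str]) -> None:
        # forward maps a child slot name to one of this behavior's parameters.
        self.children.append((child, forward))

    def create(self, **params: object) -> None:
        missing = [slot for slot in self.slots if slot not in params]
        if missing:
            raise ValueError(f"{self.name} is missing parameters: {missing}")
        self.params = dict(params)
        for child, forward in self.children:
            child.create(**{slot: params[source] for slot, source in forward.items()})


pathfollow = Behavior("pathfollow", ("target",))
melee = Behavior("melee", ("target",))
attack = Behavior("attack", ("enemy",))
attack.add_child(pathfollow, {"target": "enemy"})
attack.add_child(melee, {"target": "enemy"})

attack.create(enemy="farmer_03")  # the object is only known at run time
print(pathfollow.params, melee.params)
```

Both children end up holding the same run-time object, even though neither behavior definition names it.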

Between concrete and abstract are partially-implemented behaviors. The ability to pass parameters opens up a lot more ways to use behaviors, and many uses of parameters became quite common in our implementation. To help us with some of the standard ways in which parameters could be used, a few partially-implemented behaviors were created that would handle some standard tasks on the code side, even though they themselves were not full code-supported behaviors. That is to say, they didn't belong as leaves of the HFSM; they were just helper behaviors that were still abstract until they were given the data that configured their actions.

The most commonly used partially-implemented behavior was rangetest, which would accept a target parameter that it would track and store throughout its existence, using it to make a number of decisions.

Because parameters are passed as soon as a behavior is created, even before it is activated, we can use them to determine whether we should activate it. In the case of rangetest, extra settings were available to activate the behavior based on the range to the passed target (the first parameter) and to deactivate it if the target left a second range.

This functionality proved generally useful. Because the behavior can serve as the parent for both data-defined behaviors and code-supported behaviors, like pathfollow or melee, it is able to break tasks into responses based on properties of objects in the world and provide failcases implicitly when targets are too far away. By nesting rangetest behaviors and applying them to different objects at different times, we can define complex decisions about dynamic objects with just a few simple pieces.
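A two-range test of this kind might be sketched as below. The class, its fields, and the distances are hypothetical, and only the "activate when the target is near" direction is shown; an approach-style behavior that activates when the target is too far would invert the comparisons.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


class RangeTest:
    def __init__(self, activate_range: float, deactivate_range: float):
        self.activate_range = activate_range      # target must come this close
        self.deactivate_range = deactivate_range  # and may drift out to here
        self.active = False

    def update(self, self_pos: Point, target_pos: Point) -> bool:
        distance = math.dist(self_pos, target_pos)
        if not self.active and distance <= self.activate_range:
            self.active = True
        elif self.active and distance > self.deactivate_range:
            self.active = False
        return self.active


engage = RangeTest(activate_range=10.0, deactivate_range=15.0)
origin = (0.0, 0.0)
states = [engage.update(origin, (x, 0.0)) for x in (20.0, 8.0, 12.0, 16.0)]
print(states)  # [False, True, True, False]
```

Using a wider second range keeps the behavior from flickering on and off when the target hovers near the activation distance.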

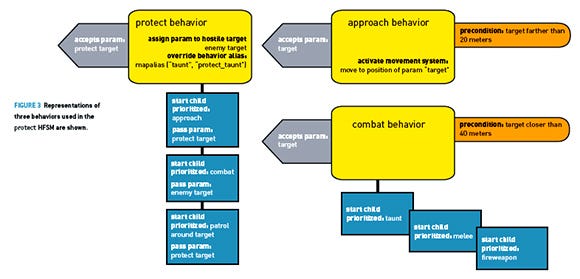

The behavior protect illustrates this point: when it is activated, it's given the character to protect as its first parameter. It then starts a list of prioritized behaviors, the first of which is to stay at all times within a reasonable distance of the character being protected, represented by approach. Approach is implemented as a rangetest behavior that activates when the target is too far away, calling into the movement system to run closer. (See Figure 3.)

Second, a character in protect should engage any nearby enemies, which in our implementation are detected by a query to a target selector module running on the character (modules like these run separately from the behavior system). Combat is started in order to maintain this requirement (another rangetest behavior), and if an enemy is too close, it will activate and start children to engage the target.

If neither approach nor combat is able to start (because their preconditions are not satisfied), wander will activate by default, since it is the lowest priority behavior and has no preconditions.

The character will then patrol randomly around the actor being protected.

By creating behaviors that accept parameters at runtime, we are able to define a structure entirely in data to perform two very different tasks, while acting on multiple entities involved in those tasks: the person who should be protected and the enemies that pose a threat.
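To make the protect example concrete, the sketch below expresses its three prioritized children as plain data and picks the one that should run. The dictionary layout, the `when` keywords, and the ranges are assumptions invented for the example rather than the game's .behavior format.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]

# Hypothetical data for protect; children are listed highest priority first.
PROTECT = {
    "name": "protect",
    "children": [
        {"name": "approach", "target": "protectee", "when": "farther_than", "range": 10.0},
        {"name": "combat", "target": "enemy", "when": "closer_than", "range": 20.0},
        {"name": "wander"},  # no precondition: the default
    ],
}


def choose_child(behavior: dict, self_pos: Point,
                 params: Dict[str, Optional[Point]]) -> Optional[str]:
    for child in behavior["children"]:
        if "when" not in child:
            return child["name"]
        target = params.get(child["target"])
        if target is None:
            continue  # nothing to test against, so this child stays pending
        distance = math.dist(self_pos, target)
        too_far = child["when"] == "farther_than" and distance > child["range"]
        too_close = child["when"] == "closer_than" and distance < child["range"]
        if too_far or too_close:
            return child["name"]
    return None


me = (0.0, 0.0)
lagging = choose_child(PROTECT, me, {"protectee": (30.0, 0.0), "enemy": None})
threatened = choose_child(PROTECT, me, {"protectee": (5.0, 0.0), "enemy": (8.0, 0.0)})
idle = choose_child(PROTECT, me, {"protectee": (5.0, 0.0), "enemy": None})
print(lagging, threatened, idle)  # approach combat wander
```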

A distinct advantage of defining a character's actions hierarchically is the ability to reuse, replace, and remove behaviors from the hierarchy easily and intuitively.

Every behavior in this system (except for the simplest) has its settings defined in its .behavior settings file. However, when a behavior spawns its children, it does not start them using the filename directly; it uses an alias. Any character that starts behaviors will define a list of aliases, mapping each to a .behavior file. By abstracting behavior referencing by one layer, we are able to customize and reuse the behavior components by changing how that mapping is defined.

One of the downsides of using a flat FSM instead of an HFSM to drive your characters is the inability to quickly modify an existing behavior into a new one by changing only one aspect of it. To solve this in an FSM, you would need to recreate the entire state machine again, save the one difference.

We avoided this problem with our implementation by adding the ability to swap out behaviors at any layer in the hierarchy. By changing the mapping of a behavior's alias for a given character, you can swap out the actual behavior that's created when it references that alias, regardless of where the behavior sits in the hierarchy. This feature was commonly applied to give characters customizations on the behaviors that were widely shared, such as giving ninjas a special variant of melee or fireweapon. (See Figure 4.)

Common combat behaviors map

MapAlias("Combat", "pedestrian_combat.behavior")

MapAlias("Melee", "pedestrian_melee.behavior")

MapAlias("PathFollow", "pedestrian_pathfollow.behavior")

MapAlias("Patrol", "pedestrian_patrol.behavior")

MapAlias("Protect", "pedestrian_protect.behavior")

MapAlias("Approach", "pedestrian_approach.behavior")...

Ninja behaviors map

INCLUDE("Common Combat Behaviors Map")

OverrideAlias("Melee", "ninja_melee_claw")

OverrideAlias("FireWeapon", "ninja_throw_shuriken")

OverrideAlias("Flee", NONE)

FIGURE 4 A common behavior map and a specialized one for ninjas, who have different melee and firing methods and never flee.
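The include-then-override pattern in Figure 4 can be mimicked with ordinary dictionaries. The sketch assumes that an override simply replaces or adds an entry in a copy of the included map, and that a `None` value plays the role of the NONE keyword. The file names are taken from the figure; the functions are invented for the example.

```python
from typing import Dict, Optional

AliasMap = Dict[str, Optional[str]]

COMMON_COMBAT: AliasMap = {
    "Combat": "pedestrian_combat.behavior",
    "Melee": "pedestrian_melee.behavior",
    "PathFollow": "pedestrian_pathfollow.behavior",
    "Patrol": "pedestrian_patrol.behavior",
    "Protect": "pedestrian_protect.behavior",
    "Approach": "pedestrian_approach.behavior",
}


def build_map(included: AliasMap, overrides: AliasMap) -> AliasMap:
    merged = dict(included)   # copy, so the shared map stays untouched
    merged.update(overrides)  # overrides win over included entries
    return merged


def resolve(alias_map: AliasMap, alias: str) -> Optional[str]:
    # Returns None when the alias is mapped to "none": do not start it.
    return alias_map[alias]


NINJA = build_map(COMMON_COMBAT, {
    "Melee": "ninja_melee_claw",
    "FireWeapon": "ninja_throw_shuriken",
    "Flee": None,
})

print(resolve(NINJA, "Melee"))   # ninja_melee_claw
print(resolve(NINJA, "Combat"))  # pedestrian_combat.behavior
print(resolve(NINJA, "Flee"))    # None
```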

Aliases are typically defined in the file associated directly with the actor, but we gain some extra flexibility by allowing aliases to be redefined anywhere in the behavior tree as well. For example, consider the protect behavior described earlier. There are actually two variants of the approach behavior that are used as children within the HFSM, one to follow the character they are meant to protect and another for approaching enemies to engage in combat.

Conceptually, these behaviors do the same thing (move the character to a target), but the way they do it is different. For example, the combat variant can strafe around and move in a more aggressive way, while the protect variant simply runs to the location of the character it is sent to protect.

We can allow this customization in separate branches with the override alias setting that any behavior can contain. When this setting is present in a behavior, it causes any child behaviors that it or its descendants activate to use the new mapping instead. For example, we can override approach in the combat branch to use a more aggressive version, while the other branch separately overrides it to use a less aggressive version. Now, whenever any of the descendants activate that alias, the lookup will filter up through each parent in the HFSM, checking in turn for a new alias mapping until it finds the ones we assigned.
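The upward lookup can be sketched as a walk along parent links, falling back to the actor's own alias map at the top. The node class, the branch names, and the two variant file names are hypothetical and used only for illustration.

```python
from typing import Dict, Optional


class Node:
    def __init__(self, name: str, parent: Optional["Node"] = None,
                 overrides: Optional[Dict[str, str]] = None):
        self.name = name
        self.parent = parent
        self.overrides = overrides or {}  # this behavior's override alias settings


def resolve_alias(start: Node, alias: str, actor_map: Dict[str, str]) -> str:
    node: Optional[Node] = start
    while node is not None:
        if alias in node.overrides:
            return node.overrides[alias]  # the nearest ancestor wins
        node = node.parent
    return actor_map[alias]               # no override found in the tree


actor_map = {"Approach": "pedestrian_approach.behavior"}

protect = Node("protect")
combat = Node("combat", protect, {"Approach": "approach_aggressive.behavior"})
follow = Node("follow", protect, {"Approach": "approach_follow.behavior"})
engage = Node("engage", combat)  # a descendant inside the combat branch

print(resolve_alias(engage, "Approach", actor_map))   # approach_aggressive.behavior
print(resolve_alias(follow, "Approach", actor_map))   # approach_follow.behavior
print(resolve_alias(protect, "Approach", actor_map))  # pedestrian_approach.behavior
```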

Another simple method that was very successful in customizing behaviors was adding the ability to not just customize, but remove entire behaviors from beneath a parent. This was accomplished by mapping a behavior alias to a special "none" keyword, which when encountered would simply not start the behavior. This was very useful in producing variants of enemies that didn't throw grenades or didn't dodge, for example.

In a game that features sandbox-style play, the AI needs to provide enough different and interesting characters to interact with in the world, and the size of the world doesn't have to get very big before it becomes infeasible to hard code them all. Sometimes, even exposing behavior settings isn't enough: the structure of the tasks and subtasks must be exposed as well, in a way that's powerful but also simple to use.

In Destroy All Humans 2, the choices we made regarding AI architecture were intended to promote those traits. It's an adaptable, puzzle piece-like system in which functions are exposed in a generic way. It attempts to skirt the line between behaviors that are entirely hard coded and ones that are entirely script-defined, left to the technical designers to manage.

Instead, a system like Pandemic Australia's packages that complexity and exposes it as individual pieces to be fit together at various levels of abstraction. As a result, we generate extra flexibility in the way those pieces can be reused and expanded, multiplying their usefulness and bringing us a step closer to populating a virtual world with virtual life.

[EDITOR'S NOTE: This article was independently published by Gamasutra's editors, since it was deemed of value to the community. Its publishing has been made possible by Intel, as a platform and vendor-agnostic part of Intel's Visual Computing microsite.]

Alt, G. "The Suffering: A Game AI Case Study," in The Proceedings of the Nineteenth National Conference on Artificial Intelligence, July 2004.

Atkin, M. et al. "Hierarchical agent control: a framework for defining agent behavior," in The Proceedings of the Fifth International Conference on Autonomous Agents, May 2001. pp. 425-432.

Freed, M. "Managing Multiple Tasks in Complex, Dynamic Environments," in The Proceedings of the Fifteenth National Conference on Artificial Intelligence, July 1998.

Fu, D. and Houlette, R. "The Ultimate Guide to FSMs in Games," in AI Game Programming Wisdom 2. Hingham, Mass.: Charles River Media, 2003.

Isla, D. "Handling Complexity in the Halo 2 AI," in The Proceedings of the 2005 Game Developers Conference, San Francisco, March 2005.

Yiskis, E. "Finite-State Machine Scripting Language for Designers," in AI Game Programming Wisdom 2. Hingham, Mass.: Charles River Media, 2003.
