Augmented Reality through real-time feature tracking on current generation mobiles.

This article is about a feature tracking plugin that I am developing.

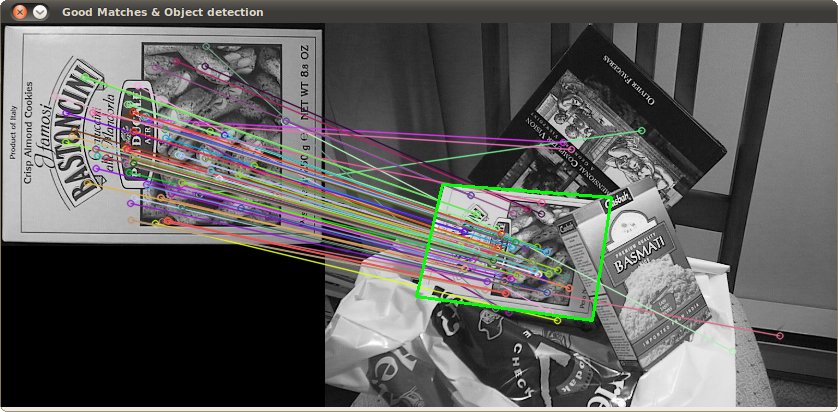

Image recognition through feature detection and tracking has been around for a while. However, only a handful of plugins can do this in real time on mobile devices (Figure 1). My project aims to make this possible on all the latest mobile devices. The project will start off as a plugin for Unity that is easy to implement and use. The plugin makes use of the ORB, BRISK and AKAZE feature detection algorithms. It supports marker generation on the fly and will use the GPU for faster calculations.

Figure 1: Feature detection on mobiles [1]

The Project

The plugin will be written in C++ and should be portable to other development environments. The method of image recognition that the plugin currently uses is called feature recognition and tracking. In its simplest form, the method finds unique features in two images and compares them to each other. A unique feature could be a large change in contrast (a corner or an edge) at a certain point or in a certain region of the image. Those features are found in both images and compared; if enough correct matches are found, the process can conclude that the two images show the same target.
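The comparison step can be sketched in a few lines of C++. This is a minimal illustration, not the plugin's actual code: it assumes 256-bit binary descriptors (the size ORB produces), compares them by Hamming distance, and filters ambiguous matches with a ratio test. The names `Descriptor`, `Match`, `matchDescriptors` and the `maxRatio` default of 0.8 are all assumptions made for this example.

```cpp
#include <array>
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// A 256-bit binary descriptor stored as 32 bytes (assumed layout).
using Descriptor = std::array<std::uint8_t, 32>;

// Number of bits that differ between two descriptors.
inline int hammingDistance(const Descriptor& a, const Descriptor& b) {
    int distance = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        distance += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    }
    return distance;
}

struct Match {
    std::size_t queryIndex;  // index into the camera-frame descriptors
    std::size_t trainIndex;  // index into the target-image descriptors
    int distance;            // Hamming distance of the pair
};

// Brute-force matching: for every query descriptor, find the two closest
// train descriptors and keep the best one only if it is clearly better
// than the runner-up (ratio test). maxRatio is a hypothetical default.
inline std::vector<Match> matchDescriptors(const std::vector<Descriptor>& query,
                                           const std::vector<Descriptor>& train,
                                           double maxRatio = 0.8) {
    std::vector<Match> matches;
    if (train.size() < 2) {
        return matches;  // the ratio test needs at least two candidates
    }
    for (std::size_t qi = 0; qi < query.size(); ++qi) {
        int best = std::numeric_limits<int>::max();
        int second = std::numeric_limits<int>::max();
        std::size_t bestIndex = 0;
        for (std::size_t ti = 0; ti < train.size(); ++ti) {
            const int d = hammingDistance(query[qi], train[ti]);
            if (d < best) {
                second = best;
                best = d;
                bestIndex = ti;
            } else if (d < second) {
                second = d;
            }
        }
        if (best < maxRatio * second) {
            matches.push_back({qi, bestIndex, best});
        }
    }
    return matches;
}
```

A caller would then count the returned matches and treat the target as found once that count passes a chosen threshold. The nested loop also shows why matching cost grows with the number of features in both images.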

Figure 2: Feature detection [2]

The algorithm I am currently working with is ORB. It finds corners, which serve as the image features required for tracking. It is one of the fastest algorithms available for finding these features. The algorithm has been implemented in the plugin and, after several optimizations, runs at a decent average of 98 milliseconds per frame on my Android test device. It will be optimized further through multithreading and possibly GPU calculations. Ideally the algorithm should finish its work within 34 milliseconds, but a slight delay caused by longer calculations can be hidden through movement prediction.
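The article does not specify which movement prediction method is used, so the following is only one possible approach: a constant-velocity extrapolation from the two most recent tracking results. `Point2` and `predictPosition` are hypothetical names introduced for this sketch.

```cpp
#include <cassert>

struct Point2 {
    double x;
    double y;
};

// Extrapolates a tracked point `latencyMs` past the latest sample, assuming
// it keeps the velocity observed between the previous and latest samples,
// which were taken `sampleIntervalMs` apart.
inline Point2 predictPosition(Point2 previous, Point2 latest,
                              double sampleIntervalMs, double latencyMs) {
    assert(sampleIntervalMs > 0.0);
    const double scale = latencyMs / sampleIntervalMs;
    return {latest.x + (latest.x - previous.x) * scale,
            latest.y + (latest.y - previous.y) * scale};
}
```

For example, if a marker corner moved from x = 100 to x = 110 between two results that were 98 milliseconds apart, calling `predictPosition` with a latency of 49 milliseconds places it at x = 115 while the next result is still being computed.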

I also investigated other algorithms in order to achieve better results. The two that seemed most promising were BRISK and AKAZE. I implemented both in my plugin, and BRISK turned out to be faster than AKAZE. BRISK completes the entire process in approximately 302 milliseconds on my Android test device, while AKAZE needs a bit less than a second (about 831 milliseconds). Unless I am able to improve AKAZE’s performance by using the GPU, it won’t be usable on current generation mobiles.

The BRISK algorithm has the potential to become the default in my plugin if I can speed it up, or find another way to implement it so that the plugin runs at 30 FPS. This looks quite possible with GPU calculations, and multithreaded processing should make the CPU variant a bit faster as well.

Performance Issues

| Algorithm | Correct Matches | Total (ms) | Detection (ms) | Description (ms) | Matching (ms) |
|---|---|---|---|---|---|
| ORB | 33 | 98 | 42 | 38 | 18 |
| BRISK | 119 | 302 | 80 | 52 | 170 |
| AKAZE | 23 | 831 | 390 | 352 | 89 |

Results from tests with a Galaxy S7

The biggest challenge in creating this product is making sure it runs fast enough on mobiles. Currently the only algorithm that gets close to acceptable performance is ORB, and that is after quite a few optimizations. The test case shown in the table above uses my Galaxy S7 and a 640x480 pixel camera feed with the target image visible in it. The table lists the average time each algorithm needs for each of the three stages of feature tracking: detection, description and matching. It also shows the number of correct matches each algorithm generates, which is significantly larger for BRISK than for the other two. This is the reason I want to optimize the BRISK algorithm as much as possible.
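To put the table's numbers next to the 30 FPS goal, the arithmetic can be written out as a small C++ sketch. The timing values are copied from the table above. The struct and function names are made up for this example, and the calculation assumes every frame runs all three stages back to back on a single thread.

```cpp
#include <cassert>

// Per-stage timings in milliseconds, as listed in the results table.
struct StageTimings {
    double detectionMs;
    double descriptionMs;
    double matchingMs;
};

inline double totalMs(const StageTimings& t) {
    return t.detectionMs + t.descriptionMs + t.matchingMs;
}

// Frames per second if every frame runs all three stages sequentially.
inline double framesPerSecond(const StageTimings& t) {
    const double total = totalMs(t);
    assert(total > 0.0);
    return 1000.0 / total;
}

// Fraction of the total time that is spent in the matching stage.
inline double matchingShare(const StageTimings& t) {
    const double total = totalMs(t);
    assert(total > 0.0);
    return t.matchingMs / total;
}

// Values copied from the table (Galaxy S7, 640x480 camera feed).
const StageTimings kOrb{42.0, 38.0, 18.0};
const StageTimings kBrisk{80.0, 52.0, 170.0};
const StageTimings kAkaze{390.0, 352.0, 89.0};
```

With these inputs, `framesPerSecond` comes out at roughly 10.2 for ORB, 3.3 for BRISK and 1.2 for AKAZE, and `matchingShare(kBrisk)` is about 0.56, so more than half of BRISK's time goes into matching.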

All the algorithms could benefit, performance wise, from reducing the camera feed’s resolution, but this would most likely decrease tracking quality. The ORB algorithm in its current state can be adapted for real-time tracking on the CPU. The BRISK algorithm currently does not run fast enough on the CPU. It can be improved by looking into different methods of matching the found features, since matching is where it spends most of its time (170 of 302 milliseconds). As said before, and shown again in the table, the AKAZE algorithm will probably be too slow for use on mobile devices, but I will look into optimizing it as well.
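As an illustration of the resolution trade-off, here is a minimal sketch of halving a grayscale frame by averaging each 2x2 block of pixels. It assumes a row-major 8-bit image, and `GrayImage` and `downscaleByTwo` are hypothetical names, not part of the plugin.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Row-major 8-bit grayscale image (assumed layout for this sketch).
struct GrayImage {
    std::size_t width;
    std::size_t height;
    std::vector<std::uint8_t> pixels;
};

// Halves width and height by averaging each 2x2 block of source pixels.
// A trailing odd row or column is dropped.
inline GrayImage downscaleByTwo(const GrayImage& src) {
    assert(src.pixels.size() == src.width * src.height);
    GrayImage dst{src.width / 2, src.height / 2, {}};
    dst.pixels.resize(dst.width * dst.height);
    for (std::size_t y = 0; y < dst.height; ++y) {
        for (std::size_t x = 0; x < dst.width; ++x) {
            const std::size_t top = (2 * y) * src.width + 2 * x;
            const std::size_t bottom = top + src.width;
            const unsigned sum = src.pixels[top] + src.pixels[top + 1] +
                                 src.pixels[bottom] + src.pixels[bottom + 1];
            // Add 2 before dividing so the average is rounded to nearest.
            dst.pixels[y * dst.width + x] =
                static_cast<std::uint8_t>((sum + 2) / 4);
        }
    }
    return dst;
}
```

Applied to a 640x480 frame this yields 320x240, a quarter of the pixels for the detector to scan. Fine corners are also smoothed away in the process, which is the tracking-quality cost mentioned above.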

Using the GPU should improve performance for all of the algorithms, which should make the BRISK algorithm usable as well. One downside of GPU calculations is that not all mobiles support using the GPU for these kinds of calculations.

Final Product

The end product will be a fully functional feature detection and tracking plugin for use in Unity. It will be multithreaded for performance. The product will be easy to use for people who simply want feature tracking without all kinds of special behaviours, such as on the fly marker generation. Advanced users can choose which algorithm they wish to use for tracking, along with the parameters used in the algorithm.

Future Plans

After the first release of the product I will look for other development environments that have a use for the plugin. I will release the plugin as library files for use in any environment that is capable of using them, such as a DLL for Windows. I will also research methods to further improve the quality of tracking.

Summary

To recap what has been mentioned in this article, I am working on creating my own augmented reality plugin. It will run on mobile and will utilize the GPU for calculations. To start off, the product will be a plugin for Unity, but this will be expanded to other platforms in the future. The plugin will have three algorithms implemented and will allow users to choose which algorithms they want to utilize in their product, and how.

Thank you for taking the time to read about my project. I hope the article has been somewhat informative, or at least an interesting read. If you have any questions or suggestions, feel free to leave a comment or email me at [email protected].

References

[1] https://beyondreality.nl/projects/gasunie-gos/

[2] http://docs.opencv.org/3.1.0/d7/dff/tutorial_feature_homography.html
