
Atmospheric scattering and “volumetric fog” algorithm – part 1

This post series covers the phenomenon of atmospheric scattering, related visual effects, and their use in games. It also describes the “Volumetric Fog” algorithm, an efficient and unified way to reproduce close and mid-range single scattering of light in a physically based manner. Volumetric fog was developed at Ubisoft Montreal for Assassin’s Creed 4 and future games on the Anvil engine. Before describing the algorithm itself, this post looks at scattering and the role it plays in the real world and in computer graphics, how it has been handled so far, and what we aimed to achieve.

Light scattering is a physical phenomenon: the interaction of light with the particles and aerosols in a transporting medium (like air, steam, smoke or water). Atmospheric scattering is responsible for many visual effects and phenomena, including:

Sky and air – scattering of light by even the tiny particles of air is the reason we can see any sky at all! Specific wavelengths of sunlight (mostly blue ones) get scattered out of their original path; without that scattering the sky would be black, with only distant radiant objects like stars and galaxies visible. Non-uniform scattering of different wavelengths gives the sky its color gradients and makes it look different at different times of day. The blue tint of distant objects, visible even in sunny weather, is called “aerial perspective” and, just like the color of the sky, is caused by very subtle Rayleigh scattering.

Clouds – light scatters inside clouds, which are high concentrations of water particles (in the form of small droplets, steam or ice crystals). Because light interactions inside clouds are quite complex, the result can be a very complex volumetric look of the clouds and the whole sky. With full sky cover (overcast weather), light diffuses completely and arrives from every direction, producing very soft lighting.

Fog and haze – can be caused by many types of particles, usually water or dust and air pollution. Fog and haze reduce the visibility of faraway objects and can vary in density in both space and time. We can observe how fog concentrates near the ground, for example over swamps or plains, around wet areas or where temperature gradients cause mist to form. Haze, on the other hand, often accompanies polluted urban environments.

Volumetric lighting and shadows – air and the particles in it are visibly lit by relatively strong light sources. Volumetric lighting is easy to see at night around local light sources. It gives a nice, soft, mysterious “volumetric” feel. This lighting is of course shadowed, which creates the following phenomenon:

Light shafts and “god rays” – only specific light paths are volumetrically lit (the others being shadowed), which creates visible rays. It is a special effect commonly used and desired in movies and games.

Why do we use scattering in games?

There are many reasons why we want to reproduce such effects in games.

The most basic and obvious reason is that they increase the realism of the scene: they allow rendering realistic skies with proper colors and complex clouds. Atmospheric scattering also adds a sense of distance: the long-distance, blue-tinted fog mentioned earlier as “aerial perspective” helps the viewer perceive spatial relations between scene objects in both natural and urban landscapes. Fog can help hide optimization-related scene simplifications such as LOD and streaming. All kinds of fog, god rays and volumetric lights can be used as a tool to drastically change or build the mood of a scene, and they can serve as a basis for “special” effects like spells or sci-fi technology. Finally, atmospheric scattering in any of its manifestations can help level design and scene composition by separating scene planes or building points of eye focus.

Physical basics and properties of light scattering

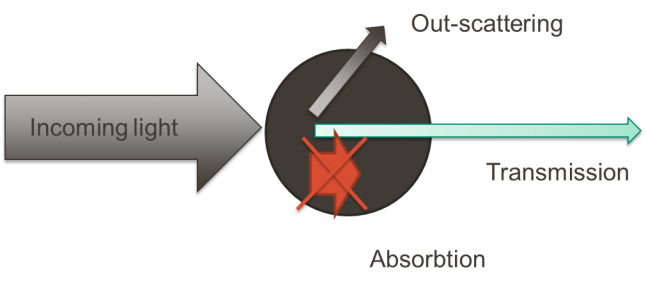

The phenomenon of scattering is caused by the interaction of photons with the particles that form a transporting medium (such as air, or water in underwater rendering). When light traverses any medium that isn’t a void, photons (light rays) may collide with the particles that make up the medium. On collision, they may be either bounced away or absorbed (and turned into thermal energy). In optics, this process is usually modelled statistically, and we can define the amount of energy that takes part in each of the following processes:

Transmittance

Scattering

Absorption

Together, these terms must add up to the light energy arriving at the given point (energy conservation).

Because light may scatter a huge number of times before reaching the eye or camera sensor, this process is very difficult and costly to model and compute, not only in real time but even offline! There is a lot of ongoing research on optimizing the multiple-scattering Monte Carlo techniques used in movies, as multiple scattering can still be prohibitively slow or produce noisy results (path tracing).

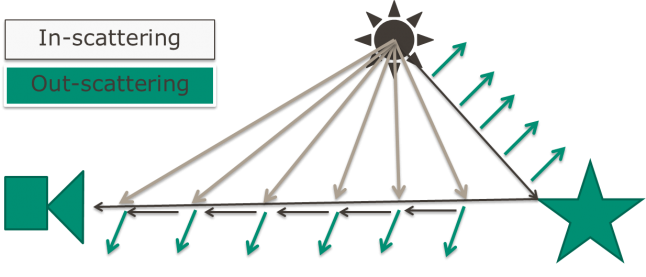

In games we obviously must simplify such computations and focus on the most significant and visible part of the effect. Therefore, in real-time rendering we assume single scattering: light is scattered into the light path just once. In many cases this simplification produces quite convincing results. To compute single scattering we must take into account two effects that contribute to light paths between the eye and visible points in space:

In-scattering. Photons entering the light path and travelling towards the eye. They add to the radiance measured by the rendering camera for a given pixel.

Out-scattering. Light leaving the light path, which reduces the measured radiance.

Combined, these two effects result in a loss of contrast and color saturation. They brighten the dark parts of the scene and dim strong highlights. Still, even with this approximation it is very difficult to calculate scattering properly: light that is in-scattered far from the camera must itself be out-scattered on its way to the eye (otherwise the in-scattering contribution would be extremely large and the effect far too bright), even in this simplified single-scattering model! Let’s have a look at how to compute in-scattering and out-scattering in such a model.

Out-scattering

Out-scattering is very simple to model in the single-scattering approximation, as it doesn’t depend on the lighting or on previous events along a light path, only on the concentration of the scattering medium (and the scattering and absorption coefficients related to it). Because the model assumes that over every small step of the path there is some probability p that light is extinguished, the surviving fraction of light decays exponentially with the distance travelled. Physics and empirical measurements confirm this, and the relationship is described by a physical law, the Beer-Lambert law. It formulates the light transmittance function (the fraction of light that travels along a given light path without being lost) as an exponential decay function of the integral of light extinction along that path.

$$T(A \to B) = e^{-\int_A^B \beta_e(x)\,dx}$$

Here $\beta_e(x)$ is the extinction coefficient at a given point in space, the sum of the scattering and absorption coefficients.

Therefore, for media with uniform or analytically integrable density, out-scattering can be computed using a single exponential function! With non-uniform medium density (which we want to model), however, it becomes more costly and involves numerical integration.
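
The two cases can be sketched as follows. This is a minimal illustration rather than code from the talk: the function names, the midpoint-rule integration, the step count and the toy density profile are all assumptions.

```python
import math

def transmittance_uniform(extinction, distance):
    """Beer-Lambert transmittance through a medium with constant extinction."""
    return math.exp(-extinction * distance)

def transmittance_numeric(extinction_at, distance, steps=256):
    """Transmittance for a spatially varying extinction coefficient.

    extinction_at(s) returns the extinction coefficient at distance s along
    the ray; the integral is approximated with the midpoint rule.
    """
    ds = distance / steps
    optical_depth = sum(extinction_at((i + 0.5) * ds) for i in range(steps)) * ds
    return math.exp(-optical_depth)

# Uniform medium: the closed form and the numerical integral agree.
print(transmittance_uniform(0.05, 40.0))
print(transmittance_numeric(lambda s: 0.05, 40.0))
# Hypothetical non-uniform medium (denser further along the ray).
print(transmittance_numeric(lambda s: 0.02 + 0.002 * s, 40.0))
```

The numerical version costs one density evaluation per step, which is why the uniform or analytically integrable case is so much cheaper.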

In-scattering

Calculating in-scattered lighting is more complex, because we need to know how much light reaches a given point in space. This is very similar to the way we shade regular objects: we need to calculate the shadowing, light falloff and radiance arriving at the given point. Depending on the lighting and shadowing models chosen, this can add considerable cost on its own. With this information (multiple components of incoming radiance and their directions) we can then compute how much light enters the given light path. For this we need two quantities. One of them is the scattering coefficient (already mentioned), which is simply the fraction of incoming light that gets scattered per unit distance travelled.

The second one is more complex and is called the phase function. Due to particle shape, size, chemical structure, polarization and other physical properties, different amounts of light get scattered in different directions. Scattering is often non-uniform in direction, which is called anisotropic scattering. A phase function describes what fraction of the total scattered light goes in each direction. In reality, for many media such as clouds (which are formed of air, steam, water droplets and ice crystals), phase functions can be very complex and hard to describe analytically, so we would use look-up tables and numerical methods to represent them.

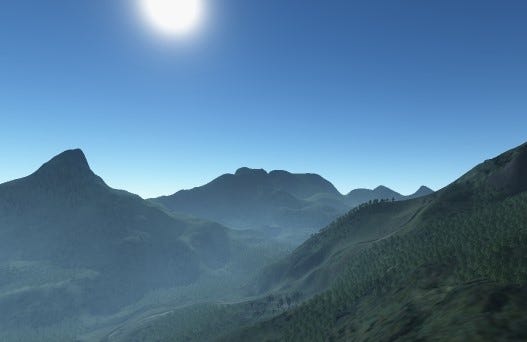

Fortunately, to render haze, localized fog and volumetric lighting we can rely on the Mie scattering model, which describes scattering of light in a medium containing large particles such as aerosols. The density of such aerosols, and the resulting scattering coefficients, are approximated with an exponential decay function of height (in addition to any local differences in density caused by the factors mentioned earlier, like temperature gradients or wetness). Mie scattering is also strongly anisotropic.
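
One convenient property of an exponential height falloff is that its extinction can be integrated along a straight ray in closed form. The sketch below illustrates this under assumptions that are not from the original post: extinction is taken to be proportional to density, the world is flat with height along the vertical axis, and `ground_extinction` and `scale_height` are hypothetical parameter names.

```python
import math

def density_at_height(height, ground_density, scale_height):
    """Aerosol density falling off exponentially with height."""
    return ground_density * math.exp(-height / scale_height)

def optical_depth_height_fog(origin_height, dir_y, distance,
                             ground_extinction, scale_height):
    """Closed-form integral of exponential-height extinction along a ray.

    origin_height: height of the ray start; dir_y: vertical component of the
    normalized ray direction; distance: length of the ray segment.
    """
    base = ground_extinction * math.exp(-origin_height / scale_height)
    k = dir_y / scale_height
    if abs(k) < 1e-12:
        # Horizontal ray: extinction is constant along it.
        return base * distance
    # expm1 keeps the result accurate for nearly horizontal rays.
    return base * -math.expm1(-k * distance) / k

depth = optical_depth_height_fog(2.0, 0.5, 100.0, 0.02, 50.0)
print(math.exp(-depth))  # transmittance along the ray
```

Local density variations break this closed form, which is where numerical integration becomes necessary.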

The Mie scattering phase function can be approximated by the Henyey-Greenstein phase function (more about it in the second part of this post). The effect of different anisotropy factors on the look of atmospheric scattering and fog can be seen in the following screenshots (progressively larger anisotropy).

Anisotropy 0.1

Anisotropy 0.3

Very high anisotropy - 0.9. Note that this screenshot doesn’t contain any “bloom” effect; the light disk is visible only because of the scattering.
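
For reference, the commonly used form of the Henyey-Greenstein phase function can be sketched as below. This is the textbook formula rather than code from the talk; the function name and the convention that `cos_theta = 1` means light continuing straight ahead are assumptions. The anisotropy values are the ones from the captions above.

```python
import math

def henyey_greenstein(cos_theta, g):
    """Henyey-Greenstein phase function, normalized over the sphere.

    cos_theta: cosine of the angle between the direction the light travels
    and the direction it is scattered into; g in (-1, 1) is the anisotropy
    factor (0 = isotropic, positive = forward scattering).
    """
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom ** 1.5)

for g in (0.1, 0.3, 0.9):
    forward = henyey_greenstein(1.0, g)
    backward = henyey_greenstein(-1.0, g)
    print(f"g={g}: forward/backward ratio = {forward / backward:.1f}")
```

The rapidly growing forward-to-backward ratio is what concentrates the scattered light into a visible disk around the light source at high anisotropy.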

Combining in-scattering and out-scattering

Even in the single-scattering model, one must take into account out-scattering of the in-scattered light as well. Otherwise, in-scattered light would grow without bound as the length of the light path increases!
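
As a worked example, take an idealized case (an assumption for illustration, not a setup from the talk): a uniform medium with scattering coefficient $\beta_s$, extinction coefficient $\beta_e \ge \beta_s$, and constant in-scattered radiance $L_i$ (with the phase function folded in) along a view ray of length $d$. Ignoring out-scattering, the accumulated radiance grows linearly with distance:

$$L_{\text{no out}}(d) = \int_0^d \beta_s L_i \, ds = \beta_s L_i \, d$$

Attenuating each contribution by the transmittance between the sample and the eye bounds the result:

$$L(d) = \int_0^d \beta_s L_i \, e^{-\beta_e s} \, ds = \frac{\beta_s}{\beta_e} L_i \left(1 - e^{-\beta_e d}\right) \le \frac{\beta_s}{\beta_e} L_i$$

With out-scattering included, even an infinitely long ray converges to a finite radiance that never exceeds $L_i$.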

Therefore, solving the scattering requires some form of integration (either analytical or numerical) of the in-scattered lighting and transmittance functions. It can be computed or approximated in many ways, and our volumetric fog algorithm is one such way, implemented using compute shaders and volumetric textures. Before explaining it, I will present existing solutions that we used in previous games or considered using, and explain why in the end we didn’t pick any of them and decided to develop a new technique.

Existing game solutions

Since the beginning of real-time 3D rendering in games, graphics engineers and artists have tried to approximate atmospheric scattering using many different approaches:

Analytical solutions. They are based on the distance and position of the shaded object. Simple linear distance-based fog was one of the oldest rendering tricks, used to hide streaming artifacts. More recently, the state of the art became Wenzel’s exponential fog, which is a proper, physically inspired solution to the light scattering equation. However, it relies on many simplifications, such as totally uniform lighting and integrating a purely exponential density, so it cannot reproduce any local effects, local height gradients or light shadowing.

Source: C. Wenzel “Real-time Atmospheric Effects in Games Revisited”

Billboard / particle based solutions. Based on transparent objects placed manually in the scene by artists. This is usually a very simple approach that doesn’t require many special engine features and can be rendered efficiently even on mobile devices, yet it can be surprisingly plausible. It works robustly in some static conditions (thanks to the distance and depth fade techniques used), but it is not really realistic: in many cases there is a serious mismatch between the actual lighting and shadowing and the produced effect. It is also flat, not physically based, and requires a lot of manual artist work to place it in the right spots without producing unconvincing results.

Post-effect image based solutions. Popularized by Unreal Engine 3.0 and CryEngine. Usually done by applying a bright-pass (as in a typical bloom post-effect) followed by a radial blur centered on the light source position projected onto the screen. By definition this cannot be physically based, but it can look quite attractive for distant lights, and we have seen it used successfully in many games. For a year or two, I would say, it was overused and applied without taste, much like bloom. Unfortunately, the produced effect is flat and unsuitable for close lights, doesn’t compose very well with fog and transparencies, and is completely lost when the camera doesn’t face the light.

Raymarching based solutions. A newer category of solutions that became practical only recently because of its computational cost on the GPU. For every shaded pixel (not necessarily at full screen resolution), a raymarching algorithm fetches multiple lighting and shadowing samples along the view ray and accumulates them (in the most naïve implementation using simple additions) to output the final value. These solutions work very well (especially with the epipolar sampling extension) and give attractive results, but they are usually very short range and have limitations that I’m going to cover in the next section.
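
The per-pixel loop of such a raymarcher can be sketched on the CPU as below. This is a simplified illustration, not any shipped implementation: the function name and callback-based interface are assumptions, and unlike the purely additive naïve variant it also tracks transmittance so that the result stays bounded.

```python
import math

def raymarch_single_scattering(ray_length, steps, extinction_at, scattering_at,
                               incoming_radiance_at):
    """Front-to-back accumulation of single scattering along one view ray.

    All three callbacks take the distance s from the camera.
    incoming_radiance_at is expected to already include shadowing, light
    falloff and the phase function.
    Returns (in_scattered_radiance, transmittance_to_ray_end).
    """
    ds = ray_length / steps
    transmittance = 1.0
    radiance = 0.0
    for i in range(steps):
        s = (i + 0.5) * ds
        # Light scattered towards the camera in this step, attenuated by
        # the medium between the camera and the start of the step.
        radiance += transmittance * scattering_at(s) * incoming_radiance_at(s) * ds
        # Out-scattering and absorption across this step.
        transmittance *= math.exp(-extinction_at(s) * ds)
    return radiance, transmittance

# Hypothetical light shaft: the light is shadowed between 20 and 40 units.
lit, _ = raymarch_single_scattering(
    100.0, 2000, lambda s: 0.05, lambda s: 0.04, lambda s: 1.0)
shafted, _ = raymarch_single_scattering(
    100.0, 2000, lambda s: 0.05, lambda s: 0.04,
    lambda s: 0.0 if 20.0 <= s <= 40.0 else 1.0)
print(lit, shafted)
```

Each step costs at least one lighting and one shadow lookup, so the number of steps directly limits the usable range of the effect.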

Source: C. Wyman, S. Ramsey “Interactive Volumetric Shadows in Participating Media with Single-Scattering”

Limitations of raymarching solutions

Raymarching-based volumetric shadows seemed to be the most modern solution, used recently by several other titles and potentially giving the biggest visual improvement for the smallest amount of artist work. However, we noticed some limitations of regular 2D raymarching.

Most of those algorithms and their implementations target very close range “volumetric shadows” / “light shafts”; they are not really physically based or suited to longer-range fog (which would require a prohibitive number of samples). They often simply use an artist-specified blending mode instead of solving a proper scattering equation.

They are based on the assumption of a single light source (with many special optimizations and techniques built around it). That wasn’t acceptable for us, as we needed a solution that handles many light sources in a coherent manner. Epipolar sampling is an example of a smart and efficient technique with too many constraints. It doesn’t support varying media density (the whole optimization relies on a uniform participating medium) and doesn’t handle multiple light sources. Not only would every light source require its own epipolar sampling scheme and a separate pass, but the results also wouldn’t blend properly, because the effect is not order independent.

All modern implementations of raymarching work at reduced resolutions (half or even quarter of the screen size!). The signal is usually low-frequency, so this isn’t a big problem on flat surfaces, but it produces under-sampling, aliasing and edge artifacts that are difficult to avoid, even with smart bilateral up-sampling schemes.

Finally, almost all implementations to date were designed as a post-effect and didn’t properly handle forward-shaded objects like transparencies or particles. A notable exception is the algorithm used in Killzone: Shadow Fall, described and presented by Nathan Vos and Michal Valient. We came up with a similar solution to this problem, but went much further in our use of volumetric textures.

All of those reasons made us look for a better solution that would solve atmospheric scattering in a unified manner:

for many light sources,

for many layers of shaded objects,

handling non-uniform participating medium distribution,

taking physical scattering phenomenon properties into account,

without edge artifacts from smaller rendering resolution,

and in a parallel manner, fully utilizing the GPU power.

This is the end of the first part of the article. In the second one, I’m going to cover our algorithm in detail.

Post series based on my Siggraph 2014 talk: http://bartwronski.com/publications/

References (both parts of the article):

Naty Hoffman, “Rendering Outdoor Light Scattering in Real Time”, GDC 2002

Wojciech Jarosz, “Efficient Monte Carlo Methods for Light Transport in Scattering Media”

Carsten Wenzel, “Real-time Atmospheric Effects in Games Revisited”

Nathan Vos, “Volumetric Light Effects in Killzone: Shadow Fall”, GPU Pro 5

Michal Valient, “Taking Killzone Shadow Fall Image Quality into the Next Generation”, GDC 2014

C. Wyman, S. Ramsey, “Interactive Volumetric Shadows in Participating Media with Single-Scattering”

Egor Yusov, “Practical Implementation of Light Scattering Effects Using Epipolar Sampling and 1D Min/Max Binary Trees”

Anton Kaplanyan, “Light Propagation Volumes”, Siggraph 2009

Tiago Sousa, “Crysis Next Gen Effects”, GDC 2008

Kevin Myers, “Variance Shadow Mapping”, NVIDIA Corporation

Annen et al., “Exponential Shadow Maps”, Hasselt University

Wrenninge et al., “Volumetric Methods in Visual Effects”, SIGGRAPH 2010

Simon Green, “Implementing Improved Perlin Noise”, GPU Gems 2

Ken Perlin, “Improving Noise”
