
An improved way of grabbing objects in VR with Unity3D

There are several ways to solve object grabbing in VR with Unity3D, but each has some kind of issue. This blog post summarizes the different approaches and proposes a better one.

Update: SimpleVR, the lightweight framework I develop (with the grabbing technique explained in this article) is now open source.

Gitlab page: https://gitlab.com/dacaes/SimpleVR

Reddit announcement: https://www.reddit.com/r/Unity3D/comments/bhpscd/introducing_simplevr_an_open_source_lightweight/

One of the most common actions in VR is picking up objects and then moving, rotating and throwing them, so a consistent way to handle that kind of interaction is one of the first things to program in VR development.

I've been working with Unity for years, developing video games and VR applications, and I've tried many different ways to grab objects. I am going to explain them, with their pros and cons, before describing the better approach I recently discovered.

Parenting + Kinematic Rigidbody

If you have developed with Unity3D before, this is probably the first idea that comes to mind: when grabbing an object, make it a child of the player's hand and set it as kinematic (not affected by physics).

    // Parent the object to the hand and take it out of the physics simulation.
    grabbedGameObject.transform.parent = hand.transform;
    grabbedGameObject.GetComponent<Rigidbody>().isKinematic = true;

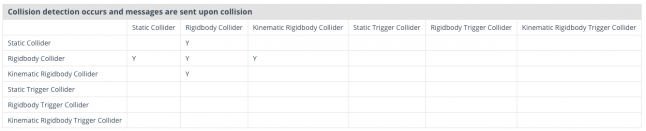

That works perfectly: the object follows the hand's position and rotation smoothly. But it has a drawback: kinematic Rigidbodies only detect collisions with non-kinematic Rigidbody Colliders, not with Static Colliders or other kinematic Rigidbodies, as the following table shows.

Table from: https://docs.unity3d.com/Manual/CollidersOverview.html

Rigidbodies have a performance cost. They are meant to simulate physics and should be used only for that. So we should put Rigidbodies on the objects the player can grab and throw, but not on walls and other static objects.

Of course, you can leave the object non-kinematic, but then it will shift in the player's hand on every collision. That can be fun for a casual experience, but it makes no sense for a serious game. Imagine using a sword in VR that is knocked around on every hit and ends up pointing backwards in the player's hand.

But do we really need collisions with Static Colliders and other kinematic Rigidbodies?

Well, it depends. Maybe you don't need them, and if so, this approach is fine for you. But I like to improve my experiences and games with details such as spark particles on a stone wall when the player hits it with a metallic weapon with enough force. If you would like to improve your games and VR experiences in the same way, keep reading!
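As an illustration of the kind of detail mentioned above, here is a minimal sketch of an impact effect. It is not code from SimpleVR: the class name ImpactSparks, the sparksPrefab field and the minImpactSpeed threshold are all assumptions made for this example. It relies on OnCollisionEnter, a message that a kinematic Rigidbody would not receive from a static wall, which is exactly why the limitation matters.

```csharp
using UnityEngine;

// Hypothetical helper (not from the original post): spawns a spark effect
// when this object hits something with enough force.
[RequireComponent(typeof(Rigidbody))]
public class ImpactSparks : MonoBehaviour
{
    // Assumed prefab containing a particle effect that plays when spawned.
    public GameObject sparksPrefab;

    // Assumed threshold: minimum relative speed for a hit to count.
    public float minImpactSpeed = 2f;

    private void OnCollisionEnter(Collision collision)
    {
        if (sparksPrefab == null) return;

        // Ignore light touches; only hard hits produce sparks.
        if (collision.relativeVelocity.magnitude < minImpactSpeed) return;

        // Spawn the effect at the first contact point, facing along the contact normal.
        ContactPoint contact = collision.GetContact(0);
        GameObject sparks = Instantiate(
            sparksPrefab, contact.point, Quaternion.LookRotation(contact.normal));

        // Clean up the spawned effect after a short, arbitrary lifetime.
        Destroy(sparks, 2f);
    }
}
```

A real project would probably also check what was hit (for example by tag or physics material) so that sparks only appear on stone or metal surfaces.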

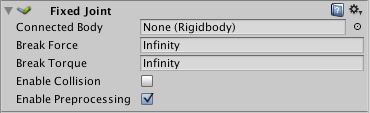

Fixed Joint

Another approach is to use Fixed Joints.

From Unity's manual:

"Fixed Joints restricts an object’s movement to be dependent upon another object. This is somewhat similar to Parenting but is implemented through physics rather than Transform hierarchy. The best scenarios for using them are when you have objects that you want to easily break apart from each other, or connect two object’s movement without parenting."

That way, you can add a Fixed Joint component to grabbable objects and, when grabbing them, set the joint's connectedBody property to the hand's Rigidbody.

    grabbedGameObject.GetComponent<FixedJoint>().connectedBody = hand.GetComponent<Rigidbody>();

The problem here is that the hands need a Rigidbody component. Even worse, the Fixed Joint constantly "fights" with the object's simulated physics, which causes some weird behaviour.
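A minimal sketch of a Fixed Joint grab and release, assuming the script sits on a hand that already has a Rigidbody. The class and method names are hypothetical. Unlike the one-liner above, this sketch adds the joint at grab time and destroys it on release, because a Fixed Joint whose connectedBody is not set connects the object to the world.

```csharp
using UnityEngine;

// Hypothetical grabber (not from the original post). It lives on the hand,
// which must have a Rigidbody for the joint to connect to.
[RequireComponent(typeof(Rigidbody))]
public class FixedJointGrabber : MonoBehaviour
{
    private FixedJoint joint;

    public void Grab(Rigidbody target)
    {
        if (joint != null) return; // already holding something

        // Create the joint on the grabbed object and attach it to the hand.
        joint = target.gameObject.AddComponent<FixedJoint>();
        joint.connectedBody = GetComponent<Rigidbody>();
    }

    public void Release()
    {
        if (joint == null) return;

        // Removing the joint hands the object back to normal physics.
        Destroy(joint);
        joint = null;
    }
}
```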

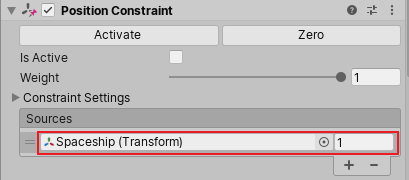

Constraints

Another approach is to use Constraints.

From Unity's Manual:

"A Constraint component links the position, rotation, or scale of a GameObject to another GameObject. A constrained GameObject moves, rotates, or scales like the GameObject it is linked to."

I found this some months ago and thought it could be the right way to solve my object-grabbing problem. Specifically, I tried the Parent Constraint. This is what Unity's Manual says about it:

"A Parent Constraint moves and rotates a GameObject as if it is the child of another GameObject in the Hierarchy window. However, it offers certain advantages that are not possible when you make one GameObject the parent of another:

A Parent Constraint does not affect scale.

A Parent Constraint can link to multiple GameObjects.

A GameObject does not have to be a child of the GameObjects that the Parent Constraint links to.

You can vary the effect of the Constraint by specifying a weight, as well as weights for each of its source GameObjects.

For example, to place a sword in the hand of a character, add a Parent Constraint component to the sword GameObject. In the Sources list of the Parent Constraint, link to the character’s hand. This way, the movement of the sword is constrained to the position and rotation of the hand."

With an example like that, what could go wrong?

I tried it and it seemed to be a success. It works more or less like Fixed Joints, but without the tricky physics. I had previously tried writing my own code to copy the position and rotation of the player's hand to the grabbed object, and it didn't work well. I think Constraints work the same way, but because they are part of the engine they are probably executed at exactly the right moment.

The problem: Constraints are much better than Fixed Joints, but they seem to fight Rigidbody physics too. Debugging frame by frame, I noticed that the objects' colliders sometimes jumped to strange positions after a collision. That triggered false collisions around the player, which was very annoying.
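For reference, a Parent Constraint can also be wired up from code rather than in the Inspector. The following is a minimal sketch, assuming a single source (the hand) at full weight. The helper class and its method names are hypothetical, and it does not address the collider jumps described above.

```csharp
using UnityEngine;
using UnityEngine.Animations;

// Hypothetical helper (not from the original post): constrains a grabbed
// object to a hand with a Parent Constraint created at runtime.
public static class ParentConstraintGrab
{
    public static ParentConstraint Attach(GameObject grabbed, Transform hand)
    {
        ParentConstraint constraint = grabbed.AddComponent<ParentConstraint>();

        // A single source at full weight: the object follows the hand only.
        ConstraintSource source = new ConstraintSource
        {
            sourceTransform = hand,
            weight = 1f
        };
        constraint.AddSource(source);

        // The constraint does nothing until it is activated.
        constraint.constraintActive = true;
        return constraint;
    }

    public static void Detach(ParentConstraint constraint)
    {
        // Removing the component releases the object.
        if (constraint != null) Object.Destroy(constraint);
    }
}
```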

3rd party plugins

Before explaining my recent discovery, I would like to point out that there are a lot of plugins on the Unity Asset Store that make a developer's life easier. I don't know whether they solve this problem, but if you want to work quickly and don't mind being tied to someone else's code architecture, you can try them. (I do mind, and I prefer to avoid plugins where possible because you end up depending on them, but that's another story.)

TrackedPoseDriver and BasePoseProvider

The improved new approach for grabbing objects.

Recently, I tried using the TrackedPoseDriver component, the same one I use to make the game Camera and hand GameObjects follow the headset and controllers, to grab objects in Unity.

When the player grabs an object, this is what happens to it:

    // Requires: using UnityEngine.SpatialTracking;
    grabbedGameObject.transform.parent = null;
    grabbedGameObject.transform.position = Vector3.zero;
    grabbedGameObject.transform.rotation = Quaternion.identity;
    TrackedPoseDriver tpd = grabbedGameObject.AddComponent<TrackedPoseDriver>();
    tpd.UseRelativeTransform = true;
    tpd.poseProviderComponent = hand.PoseProvider;

What does that code do?

First, the object is unparented (just in case, since I parent objects for other purposes) and placed at position 0,0,0 with no rotation. On throwing, the object will be relocated to the hand's position and rotated to the hand's rotation too.

Then the code adds a Tracked Pose Driver component to the object, which has to be destroyed when the object is thrown. I tried disabling it instead, but it kept tracking position and rotation, so I need to add and destroy it every time.

Assigning a Pose Provider component to the Tracked Pose Driver makes it follow the position and rotation of the Pose Provider.

The Pose Provider is a new component that the hands need. It is basically a component that inherits from BasePoseProvider and implements its "TryGetPoseFromProvider" method, which the Tracked Pose Driver uses to follow the hand as if the object were parented to it.

Here is a simplified version of my Pose Provider (I use offsets to grab objects in different ways, but this version uses the hand's rotation and position directly):

    using UnityEngine;
    using UnityEngine.Experimental.XR.Interaction;
    namespace SimpleVR
    {
        public class PoseProvider : BasePoseProvider
        {
            public override bool TryGetPoseFromProvider(out Pose output)
            {
                output = new Pose(transform.position, transform.rotation);
                return gameObject.activeInHierarchy;
            }
        }
    }
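The offsets mentioned above are not shown in the post, so the following is only a guess at what such a variant could look like. It keeps the same TryGetPoseFromProvider signature as the simplified version. The class name OffsetPoseProvider and the two offset fields are assumptions for illustration, not SimpleVR's actual code.

```csharp
using UnityEngine;
using UnityEngine.Experimental.XR.Interaction;

namespace SimpleVR
{
    // Hypothetical variant (not the author's code): reports the hand's pose
    // shifted by a grip offset expressed in the hand's local space.
    public class OffsetPoseProvider : BasePoseProvider
    {
        // Assumed fields: where and how the object sits relative to the hand.
        public Vector3 positionOffset = Vector3.zero;
        public Vector3 rotationOffsetEuler = Vector3.zero;

        public override bool TryGetPoseFromProvider(out Pose output)
        {
            // Rotate the local offset into world space, ignoring the hand's scale.
            Vector3 position = transform.position + transform.rotation * positionOffset;

            // Apply the extra rotation on top of the hand's rotation.
            Quaternion rotation = transform.rotation * Quaternion.Euler(rotationOffsetEuler);

            output = new Pose(position, rotation);
            return gameObject.activeInHierarchy;
        }
    }
}
```

With both offsets left at zero this behaves like the simplified provider above. Different offsets per object would allow, for example, a sword to be held by its hilt and a cup by its handle.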

Note that BasePoseProvider is experimental and may change (it will surely move out of UnityEngine.Experimental.XR.Interaction).

With this new approach everything seems to work perfectly: the object collides with walls and other static colliders, and there are no strange physics artifacts.
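Putting the grab and release steps together, a minimal sketch could look like the following. The grab side repeats the steps from the post. The release side destroys the driver, as described, and then hands a velocity to the Rigidbody, which is an assumption of this sketch: the post does not say how throw velocity is applied. The class name, the poseProvider field and the Release parameters are hypothetical.

```csharp
using UnityEngine;
using UnityEngine.Experimental.XR.Interaction;
using UnityEngine.SpatialTracking;

// Hypothetical wrapper (not SimpleVR's actual code) around the steps above.
public class TrackedGrabber : MonoBehaviour
{
    // Pose provider on this hand, assumed to be assigned in the Inspector.
    public BasePoseProvider poseProvider;

    private GameObject held;
    private TrackedPoseDriver driver;

    public void Grab(GameObject target)
    {
        if (held != null) return; // already holding something

        // Reset the object so the relative tracking starts from the origin.
        target.transform.parent = null;
        target.transform.position = Vector3.zero;
        target.transform.rotation = Quaternion.identity;

        // Add a driver that follows the hand's pose provider.
        driver = target.AddComponent<TrackedPoseDriver>();
        driver.UseRelativeTransform = true;
        driver.poseProviderComponent = poseProvider;
        held = target;
    }

    // Assumption: the caller supplies the hand's current velocities.
    public void Release(Vector3 handVelocity, Vector3 handAngularVelocity)
    {
        if (held == null) return;

        // The driver is destroyed rather than disabled, as described in the post.
        Destroy(driver);

        // Assumed throw behaviour: pass the hand's motion on to the object.
        // (Newer Unity versions rename velocity to linearVelocity.)
        Rigidbody body = held.GetComponent<Rigidbody>();
        if (body != null)
        {
            body.velocity = handVelocity;
            body.angularVelocity = handAngularVelocity;
        }

        held = null;
        driver = null;
    }
}
```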

Conclusions

I don't know if this is the best approach for grabbing objects in VR with Unity3D at this moment, but this is the best I've been able to figure out. I'll keep investigating and trying new things to improve my skills and my games.

I hope you have found this post useful. If you have any questions, don't hesitate to ask here or send an email to [email protected]

I've uploaded an example to GitLab: https://gitlab.com/dacaes/grabbingdemo

Thank you for your time!
