Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs or learn how to Submit Your Own Blog Post

Where C# Meets CSS: Tech Tricks From An AR Lyrics Experiment

One woman's hacky approach to making beautiful, 3D, animated handwriting in augmented reality.


Frequently, when you’re working on a project, the biggest technical difficulty comes from where you would least expect it. This was the case for me when I was working with the Google Creative Lab to prototype an experiment that brings Grace VanderWaal’s “Moonlight” into augmented reality. We loved the idea of surrounding viewers with beautiful handwritten lyrics that would unfurl and float in space as they wandered among them.

Our AR lyrics in the real world.

I was the coder on the project, and when I sat down to prototype it, I figured that the hardest part of this project would be AR. Placing and keeping objects stable in AR is a huge bundle of complex calculations, right? Actually, it turned out to be pretty trivial, thanks to ARCore doing the heavy lifting. But creating the animated handwriting effect in 3D space? That was a whole other story.

In this post, I’ll talk about some neat hacks I used to animate our 2D handwriting in 3D space with just Unity’s LineRenderer, in a way that stayed performant even with thousands of points.

The concept

As we thought about what kind of music experiments we could do in AR, we were intrigued by the popularity of lyric videos. Far from stark karaoke-style text, these lyric videos are stunning, often with production values and aesthetic detail as high as those of any other music video. You might have seen Katy Perry’s emoji-centered one for Roar, or Taylor Swift’s Saul Bass-esque video for Look What You Made Me Do.

We came across Grace VanderWaal’s lyric videos and loved their style. Her videos frequently include her handwriting, and it made us wonder: could we make a handwritten lyric video, but explode it out into AR? What if the song wrote itself out, as it was being sung, all around you — not in images, but in graceful (no pun intended), flowing, 3D-seeming lines?

We quickly fell in love with the concept and decided to give it a shot.

Getting the points

First, I broke down what data I would actually need to have in order to mimic this handwriting effect.

We didn’t want to just place images of handwriting into a scene — we wanted the lyrics to be a physical-feeling thing you could walk around and peer into. If we just placed 2D images into 3D space, they would effectively vanish when viewed from the side.

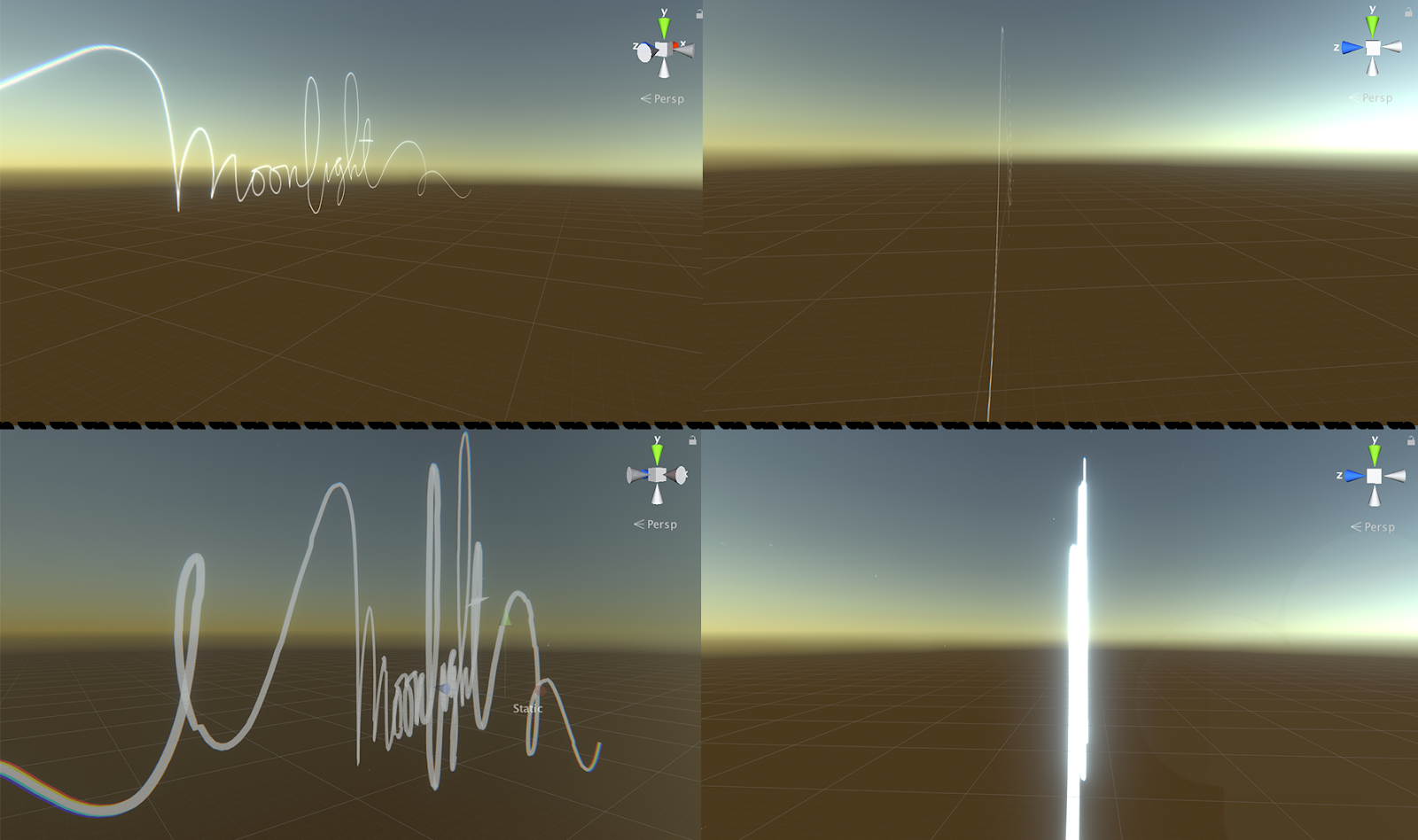

Top left and top right: a PNG image in a Unity scene. This looks okay… until you move around it. Once we view it from the side, it basically disappears. It can also look pixelated if you get too close. Bottom left and bottom right: 3D point data being used to draw a line in space. Viewed from the side, it maintains its sense of volume. We can wander around and through it, and it will still feel like a 3D object.

So, no images: first thing, I’d have to break any handwriting images into point data that we could use to draw lines.

In order to make it look like handwriting, I also needed to know the order in which the points were drawn. Handwriting loops and curves back on itself, so points further to the left don’t necessarily come earlier than points further to the right. But how could I get this ordered data? You can’t get it from a pixel image: sure, you can read pixel color values from an (x, y) array, but that won’t tell you which pixel was colored first.

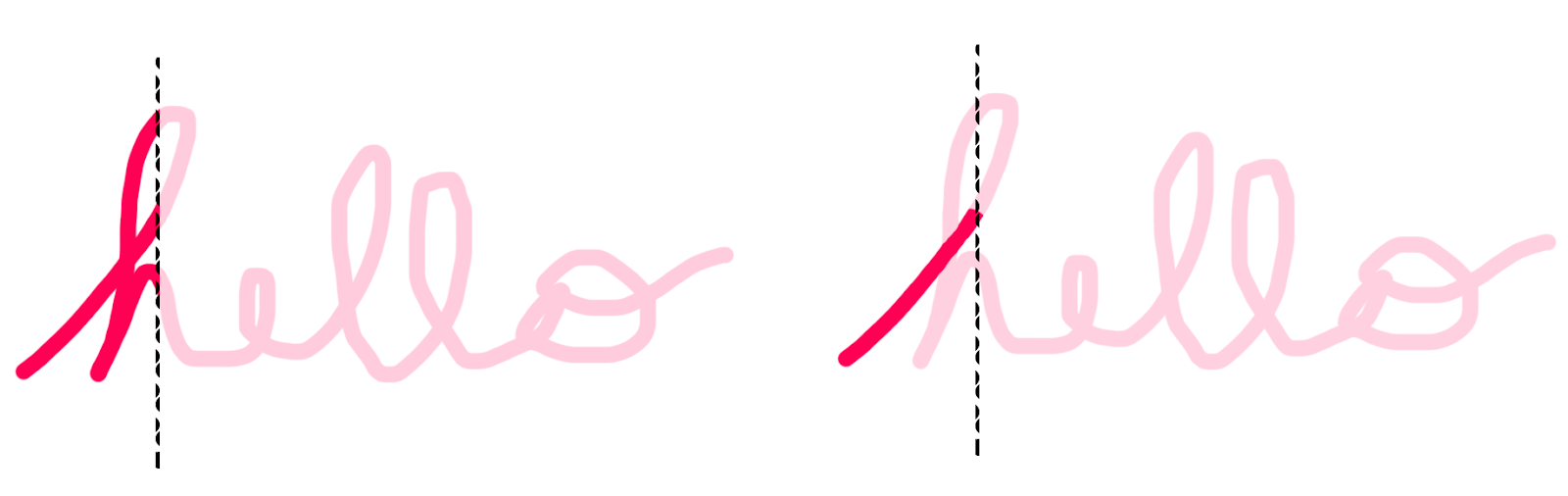

Behold, programmer art! Let’s say we’re trying to draw the word “hello” with data, and our dotted line represents the point that we’ve gotten to so far. The left example shows what would happen if we were just reading in PNG data, and drawing colored pixels to the left earlier than those on the right. This looks super weird! We instead want to mimic the right example, which requires us to know what order the points were drawn in.
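To make the ordering point concrete, here is a minimal Python sketch; the project itself was C# in Unity. It assumes each stroke is a list of (x, y) points in drawing order and returns the part of the stroke the pen has covered after a fraction `t` of its total length. `partial_stroke` is a hypothetical helper, not code from the project.

```python
import math

def partial_stroke(points, t):
    """Return the part of an ordered stroke drawn once the pen has covered
    fraction t (0..1) of the stroke's total length."""
    if len(points) < 2:
        return list(points)
    t = min(max(t, 0.0), 1.0)
    # Running arc length at each point, in drawing order.
    lengths = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        lengths.append(lengths[-1] + math.hypot(x1 - x0, y1 - y0))
    target = t * lengths[-1]
    drawn = [points[0]]
    for i in range(1, len(points)):
        if lengths[i] <= target:
            drawn.append(points[i])  # this whole segment is already drawn
            continue
        if target > lengths[i - 1]:
            # The pen tip is partway along this segment: interpolate it.
            a = (target - lengths[i - 1]) / (lengths[i] - lengths[i - 1])
            (x0, y0), (x1, y1) = points[i - 1], points[i]
            drawn.append((x0 + a * (x1 - x0), y0 + a * (y1 - y0)))
        break
    return drawn

# A stroke that goes right, then doubles back to the left.
stroke = [(0, 0), (4, 0), (0, 0)]
print(partial_stroke(stroke, 0.75))  # [(0, 0), (4, 0), (2.0, 0.0)]
```

At 75% of the way through this stroke, the pen tip sits to the left of a point that was drawn earlier. Sorting the points by x would lose exactly this information.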

Vector data, however, is inherently made of ordered point data — so although I couldn’t use a PNG, I could use the data from an SVG just fine.

But could Unity?

Drawing the points

As far as I could tell, Unity had no native support for extracting point data from SVGs. Anyone who wanted to use SVGs in Unity seemed to have to rely on (often expensive) outside assets, all in varying states of maintenance. Further, those assets seemed geared towards displaying SVGs, not just pulling out their point/path data. As mentioned above, we didn’t need or even want to display our SVG in space: we just needed to get its point data in an ordered array.

After mulling it over for a bit, I had a realization. Unity doesn’t really support SVGs, but it absolutely does support loading XML via several standard C# classes (e.g., XmlDocument). And an SVG is really just an XML-based vector image format. Could I load in SVG data if I basically just changed the extension from .svg to .xml?

The answer, surprisingly, was yes!

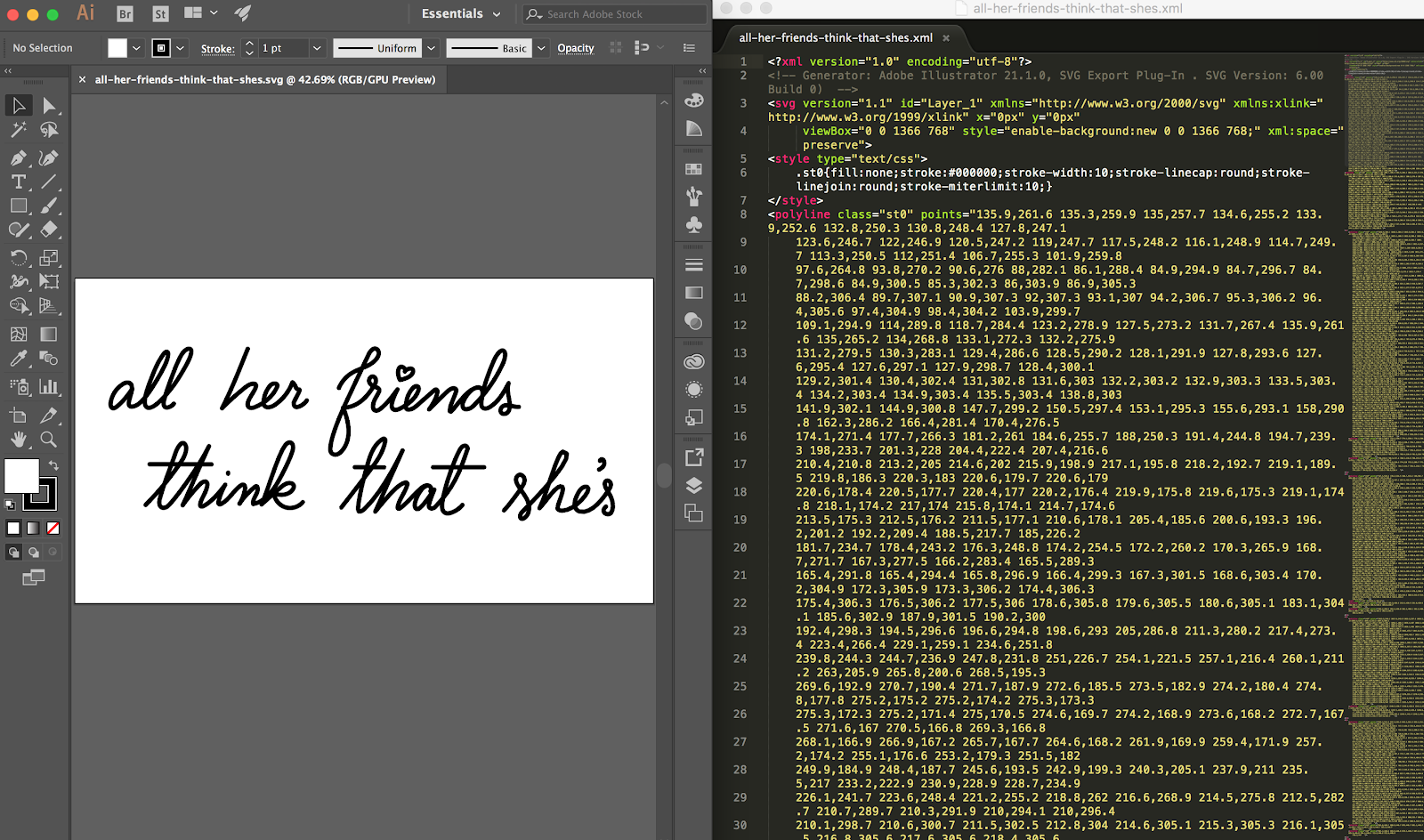

So we had our workflow: if the artists drew the lyrics as paths in Illustrator, I could simplify those paths into straight lines, export that path data as an SVG, convert that to XML (literally just changing the file extension from .svg to .xml), and load it into Unity no problem.

Left: one of the SVGs from our artists. Right: the XML data. Each curve has been simplified to polylines. For practical and aesthetic reasons, we decided that a given lyric’s words would all start drawing in at the same time.
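The XML route can be sketched in a few lines. This Python version uses the standard library's `xml.etree.ElementTree` as a stand-in for C#'s XmlDocument. It assumes the simplified strokes were exported as SVG `<polyline points="...">` elements. An exporter may instead emit `<path>` elements with line commands, which this sketch does not handle. The function name is hypothetical.

```python
import re
import xml.etree.ElementTree as ET

# Matches SVG numbers such as "12", "-3.5", ".5", "5." or "1e-3".
NUMBER = re.compile(r"[-+]?(?:\d+\.?\d*|\.\d+)(?:[eE][-+]?\d+)?")

def polylines_from_svg(xml_text):
    """Return every <polyline> in document order as a list of (x, y) tuples."""
    root = ET.fromstring(xml_text)
    strokes = []
    for el in root.iter():
        # Drop any namespace prefix, e.g. "{http://www.w3.org/2000/svg}polyline".
        if el.tag.split("}")[-1] != "polyline":
            continue
        nums = [float(n) for n in NUMBER.findall(el.get("points", ""))]
        # Points are stored as x,y pairs; pair them up in drawing order.
        strokes.append(list(zip(nums[0::2], nums[1::2])))
    return strokes

svg = """<svg xmlns="http://www.w3.org/2000/svg">
  <g><polyline points="0,0 10,5 20,0"/></g>
  <polyline points="1 2 3 4"/>
</svg>"""
print(polylines_from_svg(svg))
# [[(0.0, 0.0), (10.0, 5.0), (20.0, 0.0)], [(1.0, 2.0), (3.0, 4.0)]]
```

The parser only sees the file's text, so for the parser itself it makes no difference whether the file ends in .svg or .xml.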

I did this, and was pleased to find that we could easily load the data into Unity and put it into a LineRenderer without issue. Further, because the LineRenderer has a billboard effect by default, it looked convincingly like the line was a 3D volume. Hooray! AR handwriting! Problem solved, right? Well…
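Before the points go into a LineRenderer (via its `SetPositions` method, which takes an array of `Vector3`), they need to become 3D positions. In SVG the y axis points down, while in Unity it points up, so a conversion along these lines is likely needed. The following Python sketch illustrates it. The centering, `scale` and `depth` choices are illustrative assumptions, not the project's values, and the function name is hypothetical.

```python
def strokes_to_world(strokes, scale=0.01, depth=0.0):
    """Convert 2D SVG strokes (y axis pointing down) into 3D positions
    (y axis pointing up), centred on the lyric's combined bounding box."""
    points = [p for stroke in strokes for p in stroke]
    if not points:
        return [[] for _ in strokes]
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    # One shared centre keeps the strokes of a lyric aligned with each other.
    cx = (min(xs) + max(xs)) / 2
    cy = (min(ys) + max(ys)) / 2
    # (cy - y) flips the y axis so the text stands upright in 3D.
    return [[((x - cx) * scale, (cy - y) * scale, depth) for x, y in stroke]
            for stroke in strokes]

print(strokes_to_world([[(0, 0), (100, 50)]], scale=0.5))
# [[(-25.0, 12.5, 0.0), (25.0, -12.5, 0.0)]]
```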

Getting the handwritten animation

So I had our handwriting floating in space, and now I just (“just”) had to animate it being written in.

On my first try, I took my LineRenderer and wrote a script to add points to it over time to create the animation effect. My face fell when I saw the app slow to a crawl.

Turns out, adding points to a LineRenderer in realtime has a pretty heavy cost. I had wanted to avoid parsing complex SVG paths, and so had simplified our path data into polylines — but this required many more points in order to preserve the curve of our path. I had hundreds, sometimes thousands, of points in a given lyric, and Unity was not happy about having to dynamically change its LineRenderer data. Mobile — our intended platform — went even slower.
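Why does turning curves into polylines inflate the point count so much? A cubic Bézier segment is described by just four control points, but approximating it with straight lines means sampling it many times. The hypothetical Python helper below flattens one cubic segment into a fixed number of straight segments. Real exporters may subdivide adaptively rather than uniformly. We don't know exactly how the project's paths were simplified.

```python
def flatten_cubic(p0, p1, p2, p3, segments):
    """Approximate one cubic Bezier curve with `segments` (>= 1) straight lines,
    returning segments + 1 points evenly spaced in the curve parameter t."""
    points = []
    for i in range(segments + 1):
        t = i / segments
        mt = 1.0 - t
        # Bernstein weights of a cubic Bezier curve.
        w0, w1, w2, w3 = mt**3, 3 * mt * mt * t, 3 * mt * t * t, t**3
        points.append((w0 * p0[0] + w1 * p1[0] + w2 * p2[0] + w3 * p3[0],
                       w0 * p0[1] + w1 * p1[1] + w2 * p2[1] + w3 * p3[1]))
    return points

arch = flatten_cubic((0, 0), (0, 1), (1, 1), (1, 0), segments=2)
print(arch)  # [(0.0, 0.0), (0.5, 0.75), (1.0, 0.0)]
```

Take a hypothetical lyric of 200 connected curves at 16 segments each. It already needs roughly 3,200 points to keep its shape.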

So, dynamically adding points to our LineRenderer was not feasible. But how could I get our animation effect without it?

The Unity LineRenderer is known to be stubborn, and sure, I could potentially skip all this by buying an outside asset instead — but like the SVG asset packs, many of them were some combination of expensive, overly complex, or not a good match for our needs. Plus, being a coder, I was intrigued by the problem, and felt compelled to solve it just for the satisfaction of solving it. It seemed like there must be a simple solution available inside the components I got for free.

I mulled over the problem for a bit, searching the Unity forums nonstop and coming up empty-handed every time. I banged my head against the wall with a few half-baked solutions until I realized I had seen this problem before, just on a totally different stack.

That is, CSS.

Animating the points

I recalled having read about this problem years ago, on Chris Whong’s blog, where he detailed his approach to creating NYC Taxis: A Day In The Life. He was animating taxis moving along a map of Manhattan, but wasn’t sure how to make the taxi leave an (SVG) trail on the map.

He found, however, that he could manipulate the stroke-dasharray parameter of his line to make the effect work. This parameter turns a solid line into a dashed line, and basically controls how long the dashes and their corresponding spaces are. A value of 0 means no space between the dashes, so it looks like a solid line. Crank it up, though, and the line breaks into dots and dashes. With some clever tweening, he was able to animate his line without having to add points dynamically.

Manipulating the dasharray lets you break a solid line up into chunks of color and spaces. Screencap taken from Jake Archibald’s great interactive.
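The dash pattern itself is simple arithmetic. The Python sketch below follows the SVG rules:

- the list of dash and gap lengths repeats along the path;
- an odd-length list is repeated to make it even;
- an all-zero pattern renders as a solid line.

`in_dash` is a hypothetical helper for illustration, not a browser API, and it ignores stroke-dashoffset.

```python
def in_dash(s, dasharray):
    """True if distance s along a path lands in a painted dash rather than a gap,
    for a stroke-dasharray given as a list of lengths (dash offset taken as 0)."""
    pattern = list(dasharray)
    if len(pattern) % 2 == 1:
        pattern *= 2  # an odd-length list is repeated to make it even
    period = sum(pattern)
    if period == 0:
        return True  # all-zero pattern: rendered as a solid line
    p = s % period
    for i, length in enumerate(pattern):
        if p < length:
            return i % 2 == 0  # even entries are dashes, odd entries are gaps
        p -= length
    return True  # float rounding at the very end of a period wraps to a dash

# "10 5": 10 units painted, 5 units blank, repeating.
print([in_dash(s, [10, 5]) for s in (0, 9, 12, 16)])  # [True, True, False, True]
```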

In addition to stroke-dasharray, CSS coders can also manipulate the stroke-dashoffset of a path. In Jake Archibald’s words, the stroke-dashoffset controls “where along the path the first ‘dash’ of the dotted dash created by stroke-dasharray starts.”

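What does that mean? Well, let’s say we crank up stroke-dasharray so that the colored dash and the space are both the full length of our line. With a stroke-dashoffset of 0, our whole line will be colored. But as we increase that offset, the dash pattern slides back along the path and the space eats into our line from its far end. At an offset equal to the line’s full length, nothing is colored at all. Sweep the offset back down to 0 and the line appears to draw itself in, following every curve of the path. We have an animating curved line!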

If we max out our dasharray value, we can use the offset to make the line seem like it’s animating. Screencap taken from Jake Archibald’s great interactive.
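The draw-in trick then comes down to one formula. Set stroke-dasharray to the path length L; in the browser this is often obtained with getTotalLength(). An offset of L * (1 - progress) then leaves exactly the first `progress` fraction of the path painted. The Python sketch below assumes a single-value dasharray and checks that claim numerically. Both function names are hypothetical.

```python
def dashoffset_for(progress, path_length):
    """stroke-dashoffset that leaves the first `progress` (0..1) of the path
    painted, assuming stroke-dasharray is set to path_length."""
    progress = min(max(progress, 0.0), 1.0)
    return path_length * (1.0 - progress)

def is_painted(s, path_length, dashoffset):
    """With a single-value dasharray of path_length, the pattern is one dash and
    one gap, each path_length long; s + dashoffset is the position in it."""
    return (s + dashoffset) % (2 * path_length) < path_length

path_length = 100.0
offset = dashoffset_for(0.25, path_length)  # 75.0
print([is_painted(s, path_length, offset) for s in (0, 24, 26, 99)])
# [True, True, False, False]
```

For 0 <= s < L, `s + offset` never reaches 2L, so the position is painted exactly when s < L * progress.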

Now, obviously, in C# we don’t have stroke-dasharray or stroke-dashoffset. But what we can do is manipulate the tiling and the offset of the material that our shader is using. We can apply the exact same principle here: if we have a texture that’s like a dashed line, with one part colored and one part clear, we can manipulate our texture tiling and texture offset to smoothly move the texture along the line — transitioning between colored and clear, without having to manipulate any points at all!

My material is half colored (white), half clear. As I manually manipulate its offset, it appears to write out the text. (In the app, we set the material’s texture offset with a simple SetTextureOffset call.)
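Here is the same idea with texture coordinates, as a Python simulation rather than shader code. It rests on four assumptions:

- The line's UVs run from 0 at the start to 1 at the end, as with Unity's Stretch texture mode.
- The shader samples at `uv * tiling + offset`, the convention of Unity's TRANSFORM_TEX macro.
- The texture is clamped, opaque on its left half and clear on its right.
- The tiling is 0.5.

The 0.5 tiling is our choice. It makes the line span half the texture, the counterpart of a dasharray equal to the path length. All names are hypothetical.

```python
def sample_is_colored(v):
    """Assumed texture: opaque white for v < 0.5, transparent otherwise.
    Coordinates are clamped to [0, 1], as with a clamped wrap mode."""
    return min(max(v, 0.0), 1.0) < 0.5

def line_is_colored(u, tiling, offset):
    """u runs from 0 at the line's start to 1 at its end; the shader is assumed
    to sample the texture at u * tiling + offset."""
    return sample_is_colored(u * tiling + offset)

def offset_for_progress(progress, tiling=0.5):
    """Offset that colors exactly the first `progress` of the line:
    colored where u * tiling + offset < 0.5, i.e. u < (0.5 - offset) / tiling."""
    return 0.5 - tiling * progress

offset = offset_for_progress(0.3)
print([line_is_colored(u, 0.5, offset) for u in (0.0, 0.2, 0.4, 0.9)])
# [True, True, False, False]
```

An offset of 0.5 hides the whole line and an offset of 0 shows all of it. Moving between the two writes the line in without touching a single point.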

And that’s exactly what I did! Given the spawn time of the word, as well as the time it should be filled out, I could simply lerp our offset based on how close we were to its deadline. No point value manipulation necessary!
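The timing step can be sketched the same way. An inverse lerp turns the current time into a 0-to-1 progress between the word's spawn time and its deadline. A lerp then maps that progress onto the texture offset. The clamping is similar to Unity's Mathf.InverseLerp and Mathf.Lerp, though the equal-times edge case here is our own choice. The default offsets (0.5 to 0.0) assume a half-colored, clamped texture at 0.5 tiling; they are hypothetical values, not the project's. In Unity, the result would then go to something like `material.SetTextureOffset("_MainTex", new Vector2(offset, 0f))` each frame. The `_MainTex` property name depends on the shader and is an assumption.

```python
def inverse_lerp(a, b, value):
    """Fraction of the way from a to b, clamped to [0, 1]."""
    if a == b:
        return 1.0 if value >= b else 0.0  # degenerate range: jump straight to done
    return min(max((value - a) / (b - a), 0.0), 1.0)

def lerp(a, b, t):
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t

def texture_offset_at(now, spawn_time, done_time, start_offset=0.5, end_offset=0.0):
    """Offset for the current frame: start_offset at spawn, end_offset once the
    word should be fully written, linear in between and held afterwards."""
    progress = inverse_lerp(spawn_time, done_time, now)
    return lerp(start_offset, end_offset, progress)

print([texture_offset_at(t, 2.0, 4.0) for t in (1.0, 3.0, 5.0)])  # [0.5, 0.25, 0.0]
```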

Our performance and framerate rocketed back up, and we were able to see our AR lyrics writing themselves out smoothly and gracefully in the real world.

In the real world! We started playing with z-indexes and layered text to give it a greater sense of dimensionality.

Hope you enjoyed this little peek into how I wrangled a notoriously stubborn Unity component into doing something cool and performant. Want to see other explorations into AR art, dance, and music? Check out our trio video. You can find out more about AR experiments on our Experiments platform, or get started with ARCore. Happy lerping!
