The Basics of Localization, Part 6

Machine translation is a readily available tool for localization teams, but it's probably not the ideal one. In Part 6 of this series, we’ll take a look at why Google Translate (and other machine translation tools) may not be your best friend.

As a Localization Writer and Editor, I often have to field questions about, well... my field. Here’s a smattering of the questions I get:

“What is localization?” (Jump on over to Part 1 for the answer!)

“Do you have to be an English major?”

“What tools do you work with?”

“Can’t you just plop the text into Google Translate?”

…

*record scratch*

Hoo boy. Let’s walk through that last question, hmm? (And yes, I HAVE gotten that question before.)

Ignis gets it. Source: Twitter

Machine translation, or MT for short, is commonly known as a tool to assist translators and editors in translating foreign-language text. Much like a spell checker, it’s meant to make the writing process a bit easier for linguists. However, machine translation is still a work in progress and is far from perfect. There’s a lot that goes into human translation that machine translation can’t pick up on, like understanding different dialects and slang terms, discerning what a sentence actually means, and working out who’s speaking to whom, in what context, and with what intent. Without a human translator, a sentence put into Google Translate can come out as something ENTIRELY different from its intended meaning.

The following is a bit of an extreme example, but it’s not terribly far from the truth. Let's put a common English sentence into Google Translate, run it through a few different languages, then translate it back into English.

Original English: The quick brown fox jumped over the lazy dog.

Japanese: クイックブラウンキツネは怠惰な犬の上を飛び出しました。

French: Le renard brun rapide a sauté sur le chien paresseux.

Chinese (Traditional): 快速的棕色狐狸跳上了懶狗。

Spanish: El zorro marrón rápido salta en el perro perezoso.

German: Der schnelle braune Fuchs springt auf den faulen Hund.

Korean: 빠른 갈색 여우는 게으른 개로 점프.

Translated English: A quick brown fox jumps into a lazy dog.

Poor doggo. Source: nebraskaris.com

...Yeah. Not exactly great. A few things changed from being run through Google Translate several times.

1) The preposition “over” became “into,” which completely changes the context and original meaning of the sentence. (Also, ouch!)

2) Both instances of the article “the,” which denote a specific fox and dog, became the article “a,” denoting any random fox and dog.

3) The tense changed from past (“jumped”) to present (“jumps”), meaning that the event is happening right now rather than it already having occurred.
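If you'd rather not spot those changes by eye, a word-by-word comparison can flag them for you. Here's a minimal sketch using Python's standard `difflib` module. The `word_diff` helper is my own illustrative name, not part of any translation tool, and it only shows which words changed, not why the change matters.

```python
import difflib


def word_diff(original: str, round_tripped: str) -> list[tuple[str, str]]:
    """Return (original words, replacement words) for each changed span."""
    # Lowercase and drop the final period so only wording differences count.
    src = original.lower().rstrip(".").split()
    out = round_tripped.lower().rstrip(".").split()

    # Compare the two sentences as lists of words rather than characters.
    matcher = difflib.SequenceMatcher(a=src, b=out, autojunk=False)

    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((" ".join(src[i1:i2]), " ".join(out[j1:j2])))
    return changes


original = "The quick brown fox jumped over the lazy dog."
round_tripped = "A quick brown fox jumps into a lazy dog."

for before, after in word_diff(original, round_tripped):
    print(f"{before!r} -> {after!r}")
```

Neighboring changed words are reported together as one span, so "jumped over the" shows up as a single change to "jumps into a." A diff like this can tell an editor where to look, but it still takes a human to judge whether the meaning survived.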

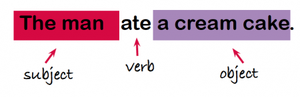

So while the subject and object, as well as their respective adjectives, remained the same, the machine-translated sentence still misses the original meaning by a mile. Again, this is an extreme example, as most people would translate a sentence or paragraph into one language rather than chaining it through several, but the point is that machine translation still has a ways to go. And it gets increasingly difficult with more complex sentences. Take puns, for example. Most languages have some sort of wordplay, but run a pun through machine translation and you probably won’t get the original meaning back.

Mmm, cake. Source: pinterest.ca

Let’s take this really bad pun, courtesy of yours truly.

Original English: If the singer of “Royals” was a big Tolkien fan who enjoyed the sound of phone calls, would she be known as the Lorde of the Rings? (Author’s note: I am not sorry.)

Run it through Google Translate into one language (we’ll take Amharic, the official working language of Ethiopia), and you'll get the following.

Amharic: የ "ሬቤልስ" ዘፋኝ የስልክ ጥሪዎች ድምፅ ሲደክመው ትልቅ የቶልኪን ደጋፊ ከሆነ, የደውል ዘንጉድ ጌታ ይባላልን?

Now let’s run it back through Google Translate, and here’s what we get.

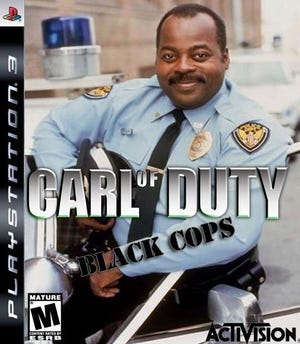

Translated English: If the vocalist of the "Rebecca" is vocal when he is a great supporter of Tolline, will he call the Call of God Call of Duty?

I knew I'd seen Carl Winslow somewhere else. Source: Everyday No Days Off

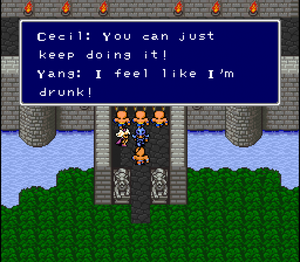

One of my favorite examples of machine translation gone horribly (and hilariously) wrong is an experiment called “Funky Fantasy IV” by translator Clyde Mandelin. He ran the popular video game Final Fantasy IV through Google Translate (Japanese into English), and the results of the experiment are, well, pretty funky. Some of the machine-translated lines contain strong and potentially offensive language, so consider this your official warning.

I'd pay for this game. Source: Clyde Mandelin/Legends of Localization

While some of the examples above can seem pretty extreme, they should give you a general idea of machine translation’s current unreliability. And while it can certainly be helpful if human translators aren’t around, it’s got a long way to go before it can produce natural-sounding dialogue that’s appropriate for games and other media.
