Perception: The most technical issue of VR

This article highlights some of the research results about perception in VR that were found by scientists during the last 50 years. It aims to provide a better understanding of the human perceptual system and its effects on the design of VR games.

This blog article is essentially a written version of my talk at the Quo Vadis 2017 conference in Berlin, Germany, entitled „Perception: The most technical issue of VR“: http://qvconf.com/content/perception-most-technical-issue-vr . The talk was also recorded and can be watched online: https://vimeo.com/ondemand/quovadis2017/

Fifty years of virtual reality research have produced many findings that also matter for game development. Perception is especially important for VR because it is connected to immersion, presence, and motion sickness, which are key concepts in the development of VR games. Furthermore, perception in VR can differ from perception in the real world, and it can even be manipulated.

In this article, I want to highlight some of the research results about perception in VR that we and other scientists have found. I hope this gives you a better understanding of the human perceptual system and its effects on the design of VR games. Since perception in VR is a general topic, the content of this article also applies to domains other than game development, such as architecture, tourism, or therapy.

This article is structured according to three theses about perception:

Perception is important

Perception is different

Perception can be manipulated

1. Perception is important

In more detail: The user‘s perception is more important in VR games than in traditional games. When developing a traditional game, you have to care about a lot of things: fun, performance, reliability, balancing and so on. For VR games, you can add a few more things to this list:

Immersion

Presence

Perception

Immersion is limited by the objective capabilities of the VR technology [0], that is, by technical factors such as stereoscopic vision, tracking, field of view, resolution, and graphics quality. This means that a VR system with stereoscopic vision is more immersive than one with only monoscopic vision. A VR system with position and orientation tracking is more immersive than one with only orientation tracking. A VR system that can render real-time shadows is more immersive than one that cannot.

Current head-mounted displays (like the Oculus Rift or HTC Vive) usually offer stereoscopic vision, position and orientation tracking, high resolution (> Full HD), low latency (< 20 ms), a high refresh rate (90 Hz), and a wide field of view (~110°). This is already quite good and delivers a high degree of immersion. But it does not yet match the needs of human perception. The human field of view is about 220°, and the human eyes would need a resolution of roughly 116,000,000 pixels, while the Rift and the Vive offer only 2,592,000 pixels.
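To put these numbers side by side, here is a small back-of-the-envelope sketch. It only uses the figures quoted above; the function name `shortfall_factor` is made up for this illustration, and the 2160 x 1200 panel size is the combined resolution for both eyes on these two headsets.

```python
# Figures quoted in the paragraph above.
HMD_PIXELS = 2160 * 1200          # Rift / Vive, both eyes combined = 2,592,000
EYE_PIXELS_NEEDED = 116_000_000   # estimate quoted in the text
HMD_FOV_DEG = 110                 # approximate HMD field of view
HUMAN_FOV_DEG = 220               # approximate human field of view


def shortfall_factor(available: float, needed: float) -> float:
    """How many times larger the needed value is than the available one."""
    return needed / available


pixel_factor = shortfall_factor(HMD_PIXELS, EYE_PIXELS_NEEDED)
fov_factor = shortfall_factor(HMD_FOV_DEG, HUMAN_FOV_DEG)

print(f"Pixels: about {pixel_factor:.0f}x too few")
print(f"Field of view: about {fov_factor:.0f}x too narrow")
```

So under these figures the headsets cover about half of the field of view and deliver only a few percent of the pixels the eyes could make use of.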

The Sense of Presence is defined as the subjective estimation of feeling present in an environment [1] and can be described by these sentences:

In the computer generated world I had a sense of "being there".

There were times during the experience when the computer generated world became more real or present for me compared to the "real world".

The computer generated world seems to me to be more like a place that I visited rather than images that I saw.

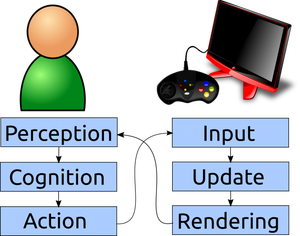

Immersion (objective) and presence (subjective) are both connected to perception. But what exactly is perception? I like to explain it using the core loop of a game engine because every game developer knows it. Basically, a game engine carries out three steps every frame: input handling, computing and updating the game state, and output/rendering of the game state. The human brain works similarly. At any given time, we perceive the world around us, we process information, and we carry out actions. These steps are called perception (= input), cognition (= updating the game state), and action (= output). Hence, perception is human input handling. Moreover, when playing a game, the three steps of the game engine and the three steps of the human brain are connected. The player perceives the output of the game, processes this information, and decides on an action, e.g. pressing a button on a gamepad, which is then handled as input by the game.
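The coupling of the two loops can be sketched in a few lines of code. This is only a toy illustration of the analogy, not a model of a real engine or of the brain; all names (`GameState`, `engine_frame`, `player_step`, `run`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    enemy_visible: bool = True
    shots_fired: int = 0


def engine_frame(state: GameState, button_pressed: bool) -> dict:
    """One engine frame: input handling, state update, output."""
    if button_pressed:                 # 1. input handling
        state.shots_fired += 1         # 2. update the game state
        state.enemy_visible = False
    return {"enemy_visible": state.enemy_visible}  # 3. output ("rendering")


def player_step(frame: dict) -> bool:
    """One human step: perception, cognition, action."""
    sees_enemy = frame["enemy_visible"]   # perception (= input)
    wants_to_shoot = sees_enemy           # cognition (= updating internal state)
    return wants_to_shoot                 # action (= output): press the button


def run(frames: int = 3) -> list:
    state = GameState()
    frame = engine_frame(state, button_pressed=False)  # first rendered frame
    log = []
    for _ in range(frames):
        pressed = player_step(frame)          # game output is the player's input
        frame = engine_frame(state, pressed)  # player output is the game's input
        log.append((pressed, frame["enemy_visible"]))
    return log


print(run())
```

Each loop consumes what the other one produces, which is why anything that distorts the player's perception also changes what arrives as input to the game.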

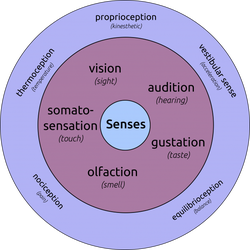

Since humans are very complex systems, perception is based on many different sensory channels. A human can smell, taste, see, hear, and feel the output of a game. Traditional games usually supply only two channels: vision and audio. VR games, on the other hand, not only offer a better visual stimulus than traditional games (cf. the paragraph about immersion), they also provide vestibular feedback (i.e. perceiving accelerations) and proprioceptive feedback (i.e. „feeling“ the position and orientation of your bones and joints) thanks to position and orientation tracking. Hence, the immersive nature of VR technology is directly coupled to the sensory channels of human perception that it supports. Furthermore, the supported sensory channels influence the sense of presence: I feel more present when my vestibular and proprioceptive senses, or even my senses of smell and taste, are stimulated.

More than 50 years ago, in 1965, Ivan Sutherland, a pioneer in the field of 3D computer graphics, wrote an essay in which he formulated the vision of virtual reality [2]. He called it „The Ultimate Display“ and stated that such a display „should serve as many senses as possible“. In other words, it would be a display for smell, taste, vision, audio, and kinesthetics.

Hence, this first definition of virtual reality already put the importance of perception into focus.

But this is not the only reason why perception is important for virtual reality. Another one is that a mismatch between different sensory channels leads to cyber sickness or VR sickness [3]. This kind of sickness can have different causes, but most often it occurs when a motion is perceived visually but not felt by the vestibular and proprioceptive senses. Hence, every VR developer should avoid such mismatches.

2. Perception is different

The user‘s perception of a virtual environment differs from the user‘s perception of the real world. This is true even if the virtual world is an exact replica of the real one. The effects can be observed, for example, in spatial perception and spatial knowledge.

Spatial perception describes the ability to be aware of spatial characteristics like sizes, distances, velocities, and so on. Many studies in the VR research literature report a significant misperception of distances and even velocities compared to the real world [4]. We do not know the exact reason, but two explanations seem plausible:

The quality of current head-mounted displays does not match the needs of the human perceptual system (cf. paragraph about Immersion). So, spatial perception in VR might get better when display quality gets better.

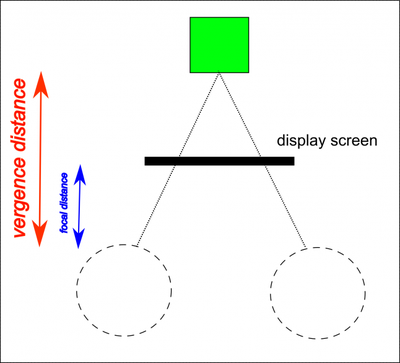

There are many different depth cues that help us judge spatial properties like distances to objects or sizes of objects. These include shadows, occlusions, and motion parallax. Two important depth cues provided by the human eyes are accommodation and convergence. Convergence is the ability of the eyes to rotate inwards and outwards to fixate a certain object. By triangulation, this helps to estimate the distance to that object. Accommodation is the ability of the eyes to bring objects at a certain distance into focus so that we see them sharply. This supports distance estimation, too. In the real world, both distance estimates usually match. In VR, they often do not. While convergence works as in the real world, accommodation is fixed at the distance of the display screen. This leads to two different distance estimates and is called the accommodation-convergence conflict. It is not clear how much influence this conflict has on spatial perception, but it is a probable cause.
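The triangulation behind convergence can be written down directly. The sketch below assumes a simplified geometry (the object lies straight ahead, centered between the eyes) and an assumed interpupillary distance of 63 mm; the function names and the 1.5 m focal distance are hypothetical values chosen only for illustration.

```python
import math

IPD_M = 0.063            # assumed interpupillary distance in meters
FOCAL_DISTANCE_M = 1.5   # hypothetical fixed focus distance of an HMD


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between both lines of sight when fixating a centered object."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def distance_from_vergence(angle_deg: float, ipd_m: float = IPD_M) -> float:
    """Triangulate the fixation distance back from the vergence angle."""
    return (ipd_m / 2) / math.tan(math.radians(angle_deg) / 2)


virtual_object_m = 0.5
angle = vergence_angle_deg(virtual_object_m)
print(f"Vergence angle at {virtual_object_m} m: {angle:.2f} degrees")
print(f"Distance signalled by convergence: {distance_from_vergence(angle):.2f} m")
print(f"Distance signalled by accommodation: {FOCAL_DISTANCE_M:.2f} m")
```

In this example the two cues disagree by a full meter, which is the conflict described above. For objects rendered near the display's focus distance the disagreement shrinks.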

Spatial knowledge describes the ability to build up an understanding of the environment, like a mental map of the virtual world. This is helpful for orientation in 3D space, which is essential for navigational performance. Different studies showed that spatial knowledge and navigational performance improve when the virtual environment is explored with a natural locomotion technique we know from the real world, like walking [5]. Joystick controls and even teleportation lead to worse spatial knowledge.

3. Perception can be manipulated

The user‘s perception of a virtual environment can be manipulated. A well-known example showing that this is possible even in the real world is the rubber hand illusion. This is a psychophysical experiment in which the participant‘s hand is hidden and a rubber hand is placed in front of her. Both hands (the real hand and the rubber hand) are stroked with a brush. After a while, the experimenter takes a hammer and strikes the rubber hand. By then, the participant has developed a sense of ownership of the rubber hand and is genuinely scared of the hammer.

Students of our research group replicated this experiment in VR and extended it [6]. It turned out that the illusion also works in VR, and that it works even when the virtual hand is visibly changed. The participants developed a sense of ownership of the virtual arm although the arm was elongated.

This demonstrates that the visual stimulus is most often dominant in comparison with other sensory channels (like the proprioceptive). In Section 1, I stated that a big discrepancy between two or more sensory channels leads to cyber sickness. But in some cases, when the discrepancy is small enough, it is not noticed by the user.

Redirected Walking exploits this phenomenon by inducing rotations of the virtual camera while the user is walking with an HMD. The user will then unconsciously compensate for this by turning in the opposite direction. As a result, the user walks along an arc in the real world while walking straight in the virtual world [7].

It was found that a user does not notice these manipulations when she is redirected onto a circle with a radius of at least 22 m [8]. But this threshold can vary depending on individual differences, walking velocity, the type of virtual environment, and so on. In fact, the redirection can be much stronger when the user is already walking on a curved path in the virtual world that is bent onto a real-world path with an even smaller radius [9].
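To get a feeling for how small the injected rotation is, the following sketch computes it for the 22 m radius mentioned above. It is a simplified illustration under the assumption that the user compensates perfectly; it is not the implementation used in the cited studies, and all names are hypothetical. The only geometry used is that walking an arc of length s on a circle of radius r changes the heading by s / r radians.

```python
import math

DETECTION_RADIUS_M = 22.0  # radius quoted in the text [8]


def injected_rotation_deg(step_m: float, radius_m: float = DETECTION_RADIUS_M) -> float:
    """Camera rotation to inject for a walked distance of step_m."""
    return math.degrees(step_m / radius_m)


def simulate_real_path(total_m: float, step_m: float = 0.1,
                       radius_m: float = DETECTION_RADIUS_M):
    """Real-world position and heading after walking 'straight' in VR.

    Assumes the user fully compensates for each injected rotation.
    """
    x = y = heading = 0.0
    for _ in range(round(total_m / step_m)):
        heading += step_m / radius_m      # compensating turn, in radians
        x += step_m * math.cos(heading)
        y += step_m * math.sin(heading)
    return x, y, math.degrees(heading)


print(f"Rotation per meter walked: {injected_rotation_deg(1.0):.2f} degrees")
x, y, heading = simulate_real_path(10.0)
print(f"After 10 m: x={x:.2f} m, y={y:.2f} m, heading={heading:.1f} degrees")
print(f"Full circle: {2 * math.pi * DETECTION_RADIUS_M:.0f} m of walking")
```

The rotation is only a few degrees per meter, but a circle of this radius is about 44 m across, which is still far larger than a typical room-scale tracking space.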

Furthermore, it was found that translations and rotations can be manipulated, too [8]. A virtual translation can be mapped to a translation in the real world that is 26% more or 14% less. And a virtual rotation can be mapped to a rotation in the real world that is 49% more or 20% less. The concept of redirected walking can also be transferred to touch, which is known as Redirected Touch.
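The translation and rotation limits quoted above can be turned into a simple range check. The sketch below takes the percentages from the text at face value and expresses them as real-to-virtual ratios; the constant and function names are hypothetical, and the cited paper [8] should be consulted for the exact definition of the gains before using such values in a project.

```python
# Real-world amount relative to the virtual amount, as stated in the text.
TRANSLATION_RANGE = (0.86, 1.26)  # 14% less up to 26% more
ROTATION_RANGE = (0.80, 1.49)     # 20% less up to 49% more


def undetected_real_range(virtual_amount: float, bounds: tuple) -> tuple:
    """Real-world amounts that should go unnoticed for a virtual amount."""
    low, high = bounds
    return virtual_amount * low, virtual_amount * high


def is_unnoticeable(virtual_amount: float, real_amount: float, bounds: tuple) -> bool:
    """True if the real amount lies inside the quoted range."""
    low, high = undetected_real_range(virtual_amount, bounds)
    return low <= real_amount <= high


# A 10 m virtual walk and a 90 degree virtual turn:
print(undetected_real_range(10.0, TRANSLATION_RANGE))
print(undetected_real_range(90.0, ROTATION_RANGE))
print(is_unnoticeable(90.0, 130.0, ROTATION_RANGE))
```

As with the curvature radius, these are average thresholds, so a cautious design would stay well inside the range rather than at its edges.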

A last example of manipulating the user‘s perception in VR is the introduction of time gains [10]. It was shown that zeitgebers (external time cues), such as the movement of a virtual sun, have an influence on time estimation. A faster sun movement reduced the estimated duration of a VR session.

Conclusion

I hope this article has provided some interesting insights into the human perceptual system and how it is connected to virtual reality. If you have questions or are interested in further information about this topic, I‘ll be glad to help you! Just write a comment or send me an email.

My website: https://www.inf.uni-hamburg.de/en/inst/ab/hci/people/langbehn.html

Literature:

[0] Slater & Wilbur: A Framework for Immersive Virtual Environments (FIVE): Speculations on the Role of Presence in Virtual Environments, 1997

[1] Slater et al: Depth of presence in virtual environments, 1994

[2] Ivan Sutherland: The Ultimate Display, Proceedings of IFIPS Congress, New York, 1965

[3] LaViola Jr, Joseph J.: A discussion of cybersickness in virtual environments, 2000

[4] Langbehn et al: Visual Blur in Immersive Virtual Environments: Does Depth of Field or Motion Blur Affect Distance and Speed Estimation? 2016

[5] Peck et al.: An Evaluation of Navigational Ability Comparing Redirected Free Exploration with Distractors to Walking-in-Place and Joystick Locomotion Interfaces, 2011

[6] Ariza, Oscar, et al.: Inducing body-transfer illusions in vr by providing brief phases of visual-tactile stimulation. 2016

[7] Razzaque et al. EUROGRAPHICS 2001

[8] Steinicke et al.: Estimation of Detection Thresholds for Redirected Walking techniques, 2010

[9] Langbehn et al.: Bending the Curve: Sensitivity to Bending of Curved Paths and Application in Room-Scale VR, 2017

[10] Schatzschneider et al.: Who turned the clock? Effects of Manipulated Zeitgebers, Cognitive Load and Immersion on Time Estimation, 2016
