In a detailed design article, Griptonite Games' Jason Bay uses classic usability concepts to break down the gamer's use of in-game weapons, objects, and puzzles, suggesting how this can help everyone build better video games.

A designer's most important job is to craft unique and interesting challenges for the player. Too often, though, we discover that players are unexpectedly challenged by aspects that should be easy or even invisible during gameplay.

These might be the workings of the user interface, or an in-world interaction that should be a snap to figure out but somehow just isn't. When these things get in the way of normal progress, players become frustrated and can stop playing your game altogether.

As developers, we try to discover these problems early and rework the objects in question until they become easier to use. But the journey from "this sucks" to "hell yeah!" can take a lot of work and iteration, and the goal is often reached more through intuition than through an established process.

Rather than running on gut instinct, we could benefit from a reliable method of understanding how players interact with the game, so we can consistently predict potential problems and adjust accordingly -- before the game ships.

In the classic usability book The Psychology of Everyday Things, author Donald A. Norman describes a system for assessing the usability of objects by examining the psychology behind how people perform tasks. Although his discussion is oriented toward real-world objects like doors and clock radios, we can apply his methods to our game worlds to find out whether each game object is truly intuitive and easy for the player to learn and use.

In this article, we'll examine how this idea can be applied to build more intuitive (or more challenging!) objects, puzzles, GUI controls and other game world interactions.

Norman proposes that each person goes through seven stages of action when using an object, from the time he forms his goal to the time he evaluates whether he has accomplished it:

Stage 1: Form the goal

Stage 2: Form the intention

Stage 3: Specify the action

Stage 4: Execute the action

Stage 5: Perceive the state of the world

Stage 6: Interpret the state of the world

Stage 7: Evaluate the outcome

You can assess the usability of any game entity by mentally walking through this list, in order, and examining whether it's possible for a player to engage in each step. If it seems like a player won't be able to perform one or more of the steps, then that's a red flag indicating a usability problem you should address before finalizing your design. Conversely, you can intentionally omit a step to create a challenge for the player; the kind of challenge depends on which step you leave out.
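If you want this walkthrough to be a repeatable part of design reviews, one option is to record, for each game entity, which stages a player can actually complete and let a small script flag the gaps. The sketch below is a hypothetical Python illustration, not a tool from Norman or from this article; `Stage`, `walk_through`, and the "red flag"/"challenge" verdicts are invented names, and deciding which stages a player can complete remains a human judgment made during play tests.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Norman's seven stages of action, in the order a player moves through them."""
    FORM_GOAL = 1
    FORM_INTENTION = 2
    SPECIFY_ACTION = 3
    EXECUTE_ACTION = 4
    PERCEIVE_STATE = 5
    INTERPRET_STATE = 6
    EVALUATE_OUTCOME = 7


def walk_through(supported, intentionally_omitted=frozenset()):
    """Check every stage in order and report each one a player cannot complete.

    supported: stages the designer believes a player can complete for this entity.
    intentionally_omitted: stages left out on purpose to create a challenge.
    Returns (stage, verdict) pairs, where verdict is "red flag" or "challenge".
    """
    findings = []
    for stage in Stage:  # iterates in definition order, Stage 1 through Stage 7
        if stage in supported:
            continue
        verdict = "challenge" if stage in intentionally_omitted else "red flag"
        findings.append((stage, verdict))
    return findings


# Example: a menu whose only sound control is labeled "SFX".
sfx_menu = set(Stage) - {Stage.FORM_GOAL, Stage.FORM_INTENTION}
for stage, verdict in walk_through(sfx_menu):
    print(stage.name, verdict)
```

The `intentionally_omitted` argument keeps deliberate omissions (such as a puzzle that hides how to solve it) separate from accidental ones, so only the accidental gaps are reported as red flags.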

To help illustrate how you can check these stages to analyze a game entity's ease-of-use, let's run through a simple example. Let's say a player thinks the in-game explosions are too loud (he likes 'em loud, but he's at risk of waking his daughter who's sleeping in the next room). If we were to analyze the game's Settings menu, what sorts of things might this player be doing or thinking during each stage of the action?

1. Form the goal: This is the stage where the user forms the overarching end-goal they'd like to accomplish. "Here's the Settings menu. That seems likely to offer an option to lower the volume of the explosions."

2. Form the intention: In this stage, the user starts to drill down on how they could go about advancing toward the goal. "There's a control labeled 'Effects' -- I'll bet I can manipulate it in some way to affect the explosion sounds."

3. Specify the action: During this stage the user identifies specific actions that can be taken. "The 'Effects' control looks like a little handle on a horizontal track -- it looks like I could grab the handle and drag it along the track to change the volume. The right side of the rail has the icon of a large speaker and the left side has the icon of a smaller speaker, so I'll drag it to the left to decrease the volume."

4. Execute the action: (He grabs the little handle with his pointer and moves it along the track to the left.)

5. Perceive the state of the world: In this stage, the user first experiences any feedback that resulted from his change to the system. "I've dragged the handle to the left and then released it there. The game played a sample sound effect."

6. Interpret the state of the world: This is where the user interprets the meaning of the feedback he received in the previous step. "Now the handle is closer to the icon of the smaller speaker and the sample sound seemed to be quieter than what I heard in-game before I moved the handle, which probably means that the explosions will also be quieter."

7. Evaluate the outcome: "I wanted to turn down the sound volume, and that's what I did. Success!"

A typical user would move through these stages subconsciously in a couple of seconds, but if he can't move smoothly through every one of them, the result can be confusion or frustration. It's your job to make sure that doesn't happen.

Let's look at some examples of how one or more of the steps could fail if the simple menu in this example had been designed incorrectly:

Problems in Stages 1 and 2: I once ran across a game that had its sound control labeled simply "SFX." In the game industry we often abbreviate "sound effects" this way, but what if the player isn't in the industry, or interprets it as something else, such as "special effects"?

During a play test I observed a user open this menu, examine it for a few seconds, and then ask me what "SFX" meant! This was a failure on the part of the menu's design, because bad labeling of the sound control was thwarting the player on Stage 1; without a good label for the sound control, he could not form the goal to "change the sound volume."

If the player had come to this menu already armed with the goal of "change the sound volume" then he would have been stuck on Stage 2; how could he form the intention of manipulating one of the controls to lower the sound, if none were clearly associated with that task?

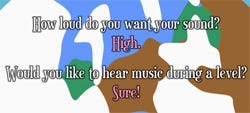

Problems in Stage 3: If a control is inadequately designed, it may be difficult or impossible for the player to determine what to do with it. I remember once seeing a horizontal slider control labeled "Sound".

It gave no indication of which way to slide it to increase or decrease the volume[1], or even whether "volume" was the property being controlled (it could just as easily have controlled the sound's left/right panning).

Without something to visually indicate which way is "more" and which way is "less," the player may have a hard time completing Stage 3: Specify the action with the ease we should expect from such a simple operation.
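One way to supply the missing direction cues is to give the slider explicit "less" and "more" icons (like the small and large speakers in the earlier example) and place them according to layout direction, which also speaks to the localization concern in footnote [1]. This Python sketch is hypothetical: `SliderSpec`, `end_icons`, and `value_from_handle` are invented names, and it assumes your right-to-left layouts mirror the slider so that "more" sits at the left end.

```python
from dataclasses import dataclass


@dataclass
class SliderSpec:
    """Hypothetical description of a horizontal volume slider."""
    label: str                   # names the property, e.g. "Effects Volume", not just "Sound"
    less_icon: str               # shown at the "less" end, e.g. a small speaker
    more_icon: str               # shown at the "more" end, e.g. a large speaker
    right_to_left: bool = False  # True when the UI is localized into an RTL language


def end_icons(spec):
    """Return (left_icon, right_icon), putting "more" at the end the reader expects."""
    if spec.right_to_left:
        return spec.more_icon, spec.less_icon
    return spec.less_icon, spec.more_icon


def value_from_handle(spec, handle_x):
    """Map handle position (0.0 = left edge, 1.0 = right edge) to a volume from 0.0 to 1.0."""
    x = min(max(handle_x, 0.0), 1.0)  # clamp drags past either end of the track
    return 1.0 - x if spec.right_to_left else x
```

Because the icons travel with the value mapping, the visual cue and the behavior cannot drift apart when the layout is mirrored.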

Problems in Stage 4: Once a user figures out what to do with a control, there are still several obstacles that could give him trouble with Stage 4: Execute the action. A few real-world examples might include:

If the user prefers the keyboard but the control only accepts mouse input

If the user has a wide finger and the control is too small and too close to other controls, on a touch screen

If the user has a disability and the control doesn't support adequate accessibility options

If the control doesn't provide as much precision as the user would like (e.g. the user wants to increase by three, but the control only lets him increase by increments of ten)
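Two of these obstacles, keyboard-only players and insufficient precision, can be handled inside the control itself. The following hypothetical Python sketch of a 0-100 volume slider accepts both pointer drags and arrow keys, with a fine step for precise changes and a coarse step (with Shift) for quick sweeps. The class name, key strings, and step sizes are illustrative assumptions, and the left/right keys assume a left-to-right layout.

```python
class VolumeSlider:
    """Hypothetical integer volume slider (0-100) that accepts pointer and keyboard input."""

    def __init__(self, value=50, fine_step=1, coarse_step=10):
        self.value = value
        self.fine_step = fine_step      # small step so players can make precise changes
        self.coarse_step = coarse_step  # large step for quick sweeps

    def _set(self, new_value):
        self.value = max(0, min(100, new_value))  # clamp to the valid range
        return self.value

    def drag_to(self, fraction):
        """Pointer input: fraction is the handle position along the track, 0.0 to 1.0."""
        return self._set(round(fraction * 100))

    def press_key(self, key, shift=False):
        """Keyboard input: arrows nudge by the fine step, Shift+arrow by the coarse step."""
        step = self.coarse_step if shift else self.fine_step
        if key == "right":
            return self._set(self.value + step)
        if key == "left":
            return self._set(self.value - step)
        return self.value  # ignore keys the slider doesn't handle
```

A player who wants to go up by three can press the right arrow three times instead of fighting a control that only moves in tens.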

Problems in Stages 5 and 6: It's common for controls to fail to give user feedback (a problem at Stage 5), or to give feedback that's unclear or confusing (a problem at Stage 6). In our Settings menu example, what if the volume control didn't play a snippet of sound after the slider was moved?

The player could see from the slider that the volume had decreased, but without the audio sample he'd have to go back into the game to find out whether he was satisfied with the new volume.

Even our well-designed example menu could fall slightly short: if the menu's audio feedback were the sound of a gunshot, for instance, the player could only guess at the new relative volume of the explosions.
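A simple remedy for the gunshot problem is to tie each volume control to a preview sound from the same category, so the feedback the player perceives at Stage 5 is also easy to interpret at Stage 6. In this hypothetical Python sketch, `PREVIEW_SOUNDS`, `SoundSettings`, and the `play_sound` callback are invented stand-ins for whatever your engine's audio system actually provides.

```python
# Hypothetical mapping from each volume category to a representative preview sound.
PREVIEW_SOUNDS = {
    "effects": "explosion_preview",
    "music": "theme_preview",
    "voice": "dialogue_preview",
}


class SoundSettings:
    def __init__(self, play_sound):
        self.volumes = {category: 1.0 for category in PREVIEW_SOUNDS}
        self.play_sound = play_sound  # callback into the game's audio system (assumed)

    def on_slider_released(self, category, volume):
        """Apply the new volume, then play a sample that represents that category."""
        self.volumes[category] = volume
        # Stage 5: the player hears that something happened.
        # Stage 6: because the sample is an explosion, he can judge the new explosion volume.
        self.play_sound(PREVIEW_SOUNDS[category], volume)
```

Playing the preview on release rather than on every drag event is one reasonable choice; it avoids a stutter of overlapping samples while the handle is moving.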

Results in Stage 7: By the time the player reaches Stage 7: Evaluate the outcome, there's not much you can do for him; either he (and therefore you as a designer) has succeeded or failed.

Whatever the interaction, make sure you've allowed for good results in the prior steps so your user will perceive a success. If he perceives a failure, then be sure to make it clear to him why he's failed and indicate what he can do to correct the situation.

[1] Cultures that read from left to right might naturally associate "right" with "more," but if the menu were localized into a right-to-left language, the intuitiveness afforded by this cultural convention would no longer help.

Utilizing Norman's seven stages of action enables us as game developers to make informed design decisions. Even in our simple Settings menu example, we were able to use the stages to identify and address several interface issues.

Moreover, we've seen that failing to address all seven stages of action during the design process can ultimately hinder the player's progress through the game.

But the applicability of this system goes far beyond menu UI, and in fact can be used to evaluate most or all of your game's in-world interactions.

By way of example, here's a brief look at how addressing the seven stages can influence our design decisions in a first-person action game:

Stage 1: Does the player know he has plasma bombs, and that he can throw them? (Is it displayed on the HUD? Is the HUD icon clear and understandable?)

Stage 2: Does the player understand whether this would be an opportune time to throw one? (Do plasma bombs work well in close quarters? How about underwater? Does the targeting reticule change when it's hovering over a valid target?)

Stage 3: Does the player know which button to press to throw a bomb? (Was he told in a tutorial but has since forgotten, and has his dog eaten the user's guide? If so, does the game provide instructions on-demand?)

Stage 4: Can the player physically press the correct button while doing other important things like moving and dodging? If it's a little kid on a big controller, can his tiny fingers work the analog stick and hit the plasma bomb button at the same time?

Stage 5: Is there satisfactory feedback to indicate that a plasma bomb was indeed thrown? Can he see and/or hear the bomb fly through the air? Even if it's dark, or in thick smoke? Did a HUD counter change?

If the player is out of bombs then is there feedback to indicate why a bomb didn't appear? An icon flashing on the HUD? His avatar groaning "damn, out of plasma"?

Stage 6: Did the plasma bomb work? (Was there an explosion? Other effects? Do enemies visibly appear to have been injured? Did it land in water and visibly fizzle out?)

Stage 7: Was the plasma bomb a successful weapon to use under these circumstances? (Was it effective against this type of enemy? Did it bounce off a wall and end up injuring the player? Was it a satisfying interaction?)
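The Stage 5 questions above reduce to one rule: every throw attempt, successful or not, should produce feedback the player can perceive. A hypothetical Python sketch of that rule follows; the `Player` fields, the `throw_plasma_bomb` function, and the event tuples are invented for illustration, and a real game would route these events to its HUD, audio, and effects systems.

```python
from dataclasses import dataclass, field


@dataclass
class Player:
    plasma_bombs: int = 3
    feedback: list = field(default_factory=list)  # events the HUD/audio layers would render


def throw_plasma_bomb(player):
    """Throw a bomb if one is available; always emit feedback so Stage 5 never fails silently."""
    if player.plasma_bombs == 0:
        # No bomb appears, so explain why instead of doing nothing.
        player.feedback.append(("hud_flash", "plasma_icon"))
        player.feedback.append(("voice_line", "out_of_plasma"))
        return False
    player.plasma_bombs -= 1
    player.feedback.append(("spawn_projectile", "plasma_bomb"))   # visible arc through the air
    player.feedback.append(("sound", "plasma_throw"))             # audible even in dark or smoke
    player.feedback.append(("hud_counter", player.plasma_bombs))  # counter visibly changes
    return True
```

Emitting more than one kind of feedback per throw covers the cases above where a single channel fails, such as a projectile that is hard to see in thick smoke.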

Breaking down each element of this game-world action further illustrates that Norman's psychology-based approach applies far beyond the workings of his doors, ovens, and clock radios.

It's a flexible and useful heuristic tool that can be applied not only to UI and combat, but also to puzzles, boss fights, and nearly any other interaction the player might have with your game.

Game designers can intentionally hobble one or more of the seven stages to purposely make an interaction more challenging for the player. This is a valuable design tool; to give you an idea of how omissions might create a good challenge, here are some examples:

Omit Stage 3: Many types of puzzles provide players with the goal (Stage 1) and the intention (Stage 2), but hide the means of solving the puzzle by omitting Stage 3: Specify the action. Once the player figures out the secret, it's a trivial task to manipulate the puzzle (Stage 4: Execute the action) to complete the challenge. The game can then give good feedback for the rest of the stages since the challenge has been successfully overcome.

Omit Stage 4: Another standard type of puzzle provides the goal (Stage 1), the intention (Stage 2), and the means (Stage 3), but then the trick is in the execution (difficulty in Stage 4: Execute the action).

This is common for instance among jumping puzzles in the action/platforming genre, where the player can easily see why and how he must complete a series of jumps but may find it challenging to execute the distances and timings without falling to his death.

Omit Stage 5: Have you ever played a game where you activated a switch but were then left with no idea what might have changed in the game world? If so, that was because the game's designer, whether intentionally or not, did not provide any feedback that might have helped you perceive the state of the world after the switch was thrown. This sort of challenge could be good or bad, depending on the context.

Omit Stage 6: Let's build from the above example. What if, after throwing the switch, you'd heard long, deep grinding sounds coming from somewhere down the corridor? It sounded like a large, heavy stone object had moved.

At this point, you have perceived a change in the game world (Stage 5), but you may not be able to interpret exactly what it meant -- was it a platform, a door, something else? This equates to a difficulty with Stage 6: Interpret the state of the world; to interpret the new state, more exploration is warranted.

Another important concept put forth by Norman is the difference between "knowledge in the world" and "knowledge in the head": either the user knows what to do based on what the world is showing him, or he knows what to do based on knowledge he has brought to the situation on his own. It's important to determine what information you can count on players to already know and what information you must teach them, because getting this wrong can create a situation that unintentionally hinders the player.

"Knowledge in the world" implies that the game is actively giving a player the information he needs to perform an action, as opposed to expecting him to already know (or leaving it up to him to figure out) without any help from the interface. In other words, the necessary information is already baked into the game world for his reference.

In a GUI, for instance, knowledge of what a widget does can be conveyed in several ways:

By giving it a text label to describe what it does

By offering "tool tips" or other context-sensitive help

By providing visual cues as to how something should be manipulated -- things that can be pressed should look like buttons, things that slide should have a grip icon, things that can be grabbed should have a handle
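These three cues can double as a review checklist. The hypothetical Python sketch below records which cues each widget has and flags widgets that offer no in-world knowledge at all; `Widget`, `missing_cues`, and `audit` are invented names. A widget that passes still needs play testing, since a label can exist and still be unclear, as the "SFX" example showed.

```python
from dataclasses import dataclass


@dataclass
class Widget:
    """Hypothetical description of a GUI widget for a knowledge-in-the-world review."""
    name: str
    label: str = ""       # visible text describing what it does
    tooltip: str = ""     # tool tip or other context-sensitive help
    affordance: str = ""  # visual cue such as "button", "grip", or "handle"


def missing_cues(widget):
    """List which of the three ways of conveying in-world knowledge a widget lacks."""
    cues = {"label": widget.label, "tooltip": widget.tooltip, "affordance": widget.affordance}
    return [cue for cue, value in cues.items() if not value]


def audit(widgets):
    """Return the names of widgets that offer no in-world knowledge at all."""
    return [w.name for w in widgets if len(missing_cues(w)) == 3]
```

Flagging only widgets with every cue missing keeps the report short; a stricter review could also list widgets missing any single cue.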

Concerning game world entities, there are limitless methods of baking in knowledge depending on the specific situation. Some examples:

In an RTS, don't make the player click and read each unit's property sheet in order to find out whether it can build structures. Instead, design the unit's graphics in such a way that it looks like something that can build structures. A player would know right away if the unit had a saw in its hand or a hammer in its belt.

In an FPS, don't make the player remember which hotkey switches to the shotgun -- show the available weapons on a HUD along with their associated key mappings[2].

Friendly NPCs can give hints and tips that ramp up in complexity as the player learns his way around the realm.
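For the FPS example, one approach is to generate the HUD text from the player's current key bindings rather than hard-coding it, so the reminders stay correct after the player remaps keys, with a setting to turn them off as footnote [2] suggests. The function and parameter names in this Python sketch are hypothetical.

```python
def weapon_hud_lines(weapons, key_bindings, show_key_hints=True):
    """Build HUD text for available weapons, optionally with their hotkeys.

    weapons: weapon names the player currently holds, in slot order.
    key_bindings: maps a weapon name to the key the player has bound to it.
    show_key_hints: player-facing setting; turn it off once the keys are memorized.
    """
    lines = []
    for weapon in weapons:
        key = key_bindings.get(weapon)
        if show_key_hints and key is not None:
            lines.append(f"[{key}] {weapon}")
        else:
            lines.append(weapon)  # no hint: either hidden by the player or not bound
    return lines


print(weapon_hud_lines(["Pistol", "Shotgun"], {"Pistol": "1", "Shotgun": "2"}))
```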

Naturally, you'll want to omit knowledge from the world when you're purposely trying to create a challenge for the player. But players are too often challenged by things that aren't meant to be so, such as a ledge that looks accessible but is not, and that leads to frustration -- or even worse, to a perception that your game is clunky, not user-friendly, or has an unnecessarily steep learning curve. Help your players understand the game by baking adequate cues into the game world wherever they may be needed.

[2] If you're scoffing at how ugly this might make your beautifully minimalist UI, you can offer an option to hide that display once the player has memorized the necessary keys -- that is, once the knowledge has been loaded into his head. In the meantime, he'll thank you for the handy reminders even if they aren't as pretty.

"Knowledge in the head" implies that the game world doesn't actively or persistently give players the information necessary to perform an action. Instead they're expected to intuitively know, remember, or figure out how to do the task without help or reminders.

In a game's GUI, you might be able to leverage the player's preexisting knowledge by:

Following established standards that the player will recognize, such as drop-down lists and scroll bars

Giving it an appearance and behavior of something from the real world that a typical user would already be familiar with, like a button or a folder tab

Labeling controls with familiar pictures/icons to jog his memory

In the game world itself, it's dangerous to rely on the player's preexisting knowledge; if he doesn't know what you expect him to know, he'll have trouble executing core tasks.

Many games "pre-load" the player's head with necessary information during a tutorial phase, and that's a good start, but problems arise if the player forgets what you've told him or else skips through the tutorial without paying much attention, which unfortunately seems to be commonplace.

Many contemporary games are so complex that players can't memorize all the necessary information until they've logged many hours of play.

You can also frustrate players if you frequently require them to retain new pieces of critical knowledge that are difficult or impossible to re-learn if they happen to forget:

"Yeah the scientist told me the combination to that blast door. But I haven't played since last June, and now I've forgotten what he'd said!"

"Do I really have to click-click-click into the Unit Stats screen just to get a reminder about this tank's attack rating?"

"That boss monster just killed me a dozen times in a row. This sucks -- what am I doing wrong?"

In order to cope with these problems, we should provide accessible, abundant knowledge in the game world to alleviate the need to memorize, or we should provide reminders at key points to spark the player's memory. Some ideas that could help with the above examples might be:

Provide a way to store important information that the NPCs have given to the player (a Notes screen, a Quests menu, etc.)

Provide a popup with a visual stats summary when the player hovers his cursor over a unit, or offer a sidebar to show a stat overview for all units currently on-screen

Detect when the player is repeatedly failing a task, and then offer a helpful hint or make existing hints even more obvious
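The last idea can be sketched as a small tracker that counts consecutive failures per task and escalates from no hint to progressively more explicit ones. This is a hypothetical Python illustration; the class name, the threshold of three failures per hint level, and any hint text are assumptions you would tune through play testing.

```python
class HintTracker:
    """Hypothetical helper that escalates hints when a player keeps failing the same task."""

    def __init__(self, hints, failures_per_level=3):
        self.hints = hints                    # ordered from subtle to explicit
        self.failures_per_level = failures_per_level
        self.failures = {}                    # task id -> consecutive failure count

    def record_failure(self, task):
        self.failures[task] = self.failures.get(task, 0) + 1
        return self.current_hint(task)

    def record_success(self, task):
        self.failures.pop(task, None)         # reset once the player gets past the task

    def current_hint(self, task):
        """Return None below the first threshold, then progressively more explicit hints."""
        level = self.failures.get(task, 0) // self.failures_per_level
        if level == 0:
            return None
        return self.hints[min(level, len(self.hints)) - 1]  # stay on the last hint
```

For the boss that killed the player a dozen times, the first two deaths would show nothing, the third would surface the subtlest hint, and later deaths would move toward the most explicit one.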

For every development cycle, game teams spend a lot of time revising and re-revising in-game actions to make them easier for players to understand and execute; often, the problems have stemmed from users not having the knowledge necessary to successfully perform a desired action.

If you make an effort to bake as much knowledge as possible into the game world -- especially knowledge that facilitates the completion of Norman's seven stages of action -- you'll find that players navigate more easily and get confused and frustrated far less often.

With these simple design tools, we can build more intuitive interactions and help our games go from "this sucks" to "hell yeah," all the time, every time.
