Classic Tools Retrospective: The tools that built Deus Ex, with Chris Norden

David Lightbown interviews Chris Norden about the history of tools development for Deus Ex. Learn about how the team customized the Unreal Editor, developed dialogue and export tools, and created innovative lip-sync technology for this innovative FP-RPG.

Introduction

In recent years, retrospectives of classic games have been well received at GDC, but there have been very few stories about classic game tools. This series of articles will attempt to fill that gap, by interviewing key people who were instrumental in the history of game development tools.

The first two articles in this series have featured John Romero (about the TEd Editor) and Tim Sweeney (about the Unreal Editor).

For the third article, I am very happy and honored to speak with Chris Norden about the tools that were developed to create the landmark FPS / RPG hybrid, Deus Ex. I spoke to Chris at GDC 2018 in San Francisco.

Before the Ion Storm: Origin and Looking Glass

David Lightbown: How did you end up working on Deus Ex at Ion Storm?

Chris Norden: In 1994, I was working at Origin in Austin as a programmer. My first paying industry gig was on Jane’s Combat Simulations: AH-64D Longbow. It was originally an arcade-style game called Chopper Assault. I worked with Andy Hollis on that for two years.

After shipping that, the last thing I wanted to do was another military sim, because I’m not a military guy. I had been chatting with Warren [Spector] on and off, who was also at Origin. He was very much into story-driven role-playing games. I thought to myself, “I like those types of games, I want to work on something like that!”

In 1996, Warren left Origin to head up the Austin Looking Glass studio, which nobody at the time knew existed. That studio was doing Mac ports of games, like Links 386 Pro. They were also in the process of developing an original golf game called British Open Championship Golf.

Ultima Online was still in development at Origin. It was being called Multima, and it was using the Ultima VII engine, I think. I played around with that a little before I left Origin to join Warren at Looking Glass.

[Author’s Note: The main Looking Glass studio was in Cambridge, MA, just outside Boston]

Warren had written a design doc, and for a while we worked on an online role-playing game with the acronym “AIR” (for “Austin Internet Role-Playing”). We wanted to do something 3D, because 3D accelerators were just starting to come out. We had gotten a prototype of the Voodoo 1, and we wrote a small engine using Glide.

We worked with Looking Glass in Cambridge [the main office]. After British Open shipped, I was helping Marc LeBlanc and Doug Church with the engine for Thief, which was called the Dark Engine.

For financial – and other – reasons, Looking Glass wasn’t doing great at the time. They had amazing games, but they just didn’t sell that well. So, after about a year, they made the decision to shut down the Austin office.

[Author’s Note: Chris is absolutely right about the games… Ultima Underworld, System Shock, and Terra Nova were some of the most critically acclaimed titles of the 1990s]

There were just a few of us when the office closed – Warren, myself, Al Yarusso, Harvey Smith, Steve Powers – and we all still wanted to do this role-playing game. We all hung out together after the office closed for about six months or so, with the hope that we would be able to start a new company and make something. We had a really nice design doc, and we started shopping it around to publishers and talking to different people. I don’t even remember all the publishers we spoke to. Two of the people we spoke to were John Romero and Tom Hall, who were working with Eidos to start a new studio in Dallas called Ion Storm.

I had known John from back in the early Id days. In 1991, MTV threw a giant ship party for Wolfenstein 3D in Dallas. They had two VR Virtuality Arcade Machines set up. I was a kid at the time, and I was like “Oh my god, this is the coolest thing ever!” I met John, back in his “I'm too rich to care about anything” days.

John is awesome, I actually went back and worked with him at Monkeystone Games, did some contracting for a Gameboy Advance title, but that’s a different story.

[Author’s Note: The game in question was called “Hyperspace Delivery Boy”. It was going to be published for Gameboy Advance by Majesco, but they decided not to go to manufacturing at the last minute.]

We talked to Romero and Tom Hall, and they were like “Warren, you’re awesome, let’s do a game!” and Warren said “Well, here’s the catch. We’re not moving to Dallas. We’re not moving to California. We’re not moving anywhere. We’re going to keep the studio in Austin. We’re going to stay completely autonomous. You’re going to give me full control over everything. You’re going to give me the money to do it, and it’s going to be cool.” and they said “OK, sounds good. Done.”

[Author’s Note: This is certainly an oversimplified version of the actual discussion, but it’s the basic gist of what happened]

Warren had a giant design doc for a game called “Troubleshooter” at the time, which eventually morphed into the Deus Ex design doc.

So, we started Ion Storm Austin studio with the six of us. At the time, I was CTO, head of IT, HR, security, and lead engineer. We didn’t have anybody to do all that stuff. So, we had to interview people and staff up pretty quick.

It was a tiny team. We had 3 engineers: myself, Scott Martin, and Al Yarusso. Sheldon [Pacotti] was hired as the writer. We had two design teams of three people. One headed up by Robert White, and one headed up by Harvey. I think we had six artists, headed up by Jay Lee.

Oh, and I hired Alex Brandon, because I was a huge fan of his music in Unreal. Now I’m really good friends with him. I introduced him to his wife because I was friends with her in high school. He’s a great guy: excellent musician, and he really understands the technical side of everything.

Then we hired an admin, and that was pretty much it. Then, it was: work your ass off for two-and-a-half-years! [laughs]

You go to Digital Extremes: Choosing between Unreal and Quake Technology

DL: So, while the rest of Ion Storm went with Quake technology, you guys chose Unreal. Can you explain how you came to that decision?

CN: As much as I respected the Quake technology, I knew at the time that there was no support. We were making a very specific type of game where we wanted you to be able to do anything. Quake was a shooter engine, and that’s it. If you wanted to make something other than an FPS, it was a lot of work, and you got no support from Id. They basically took a CD of code, threw it at you, and ran away.

DL: In my interview with Tim Sweeney, he said that licensing Quake tech from Id at the time was basically a “quarter million dollar xcopy”.

CN: Yes, that’s exactly right! I mean, it was awesome tech. It was revolutionary at the time, but we knew that – as a team of 3 engineers – we can’t rewrite the engine, and we can’t write our own engine. We just don’t have time.

"[Unreal’s] focus at the time was super-usable tools, which had never really been done before."

So, I was huge into the Amiga, C64, and the PC demo scene when it became a thing. I started seeing videos of this game called “Unreal”. And I thought “Holy shit, it’s got RGB lighting, and it’s full 3D. It uses MMX, SSE, and 3DNow. That looks really cool!” But, we couldn’t find much information on it, so we scheduled a trip up to Digital Extremes in London, Ontario, in Canada. We met Tim and the team, and they showed us all this cool stuff. I also met Carlo Vogelsang, who I now work with at Sony – he’s at PlayStation. He wrote the Galaxy Audio Engine: pretty much all assembly, super hardcore guy. There was a particle system called “Fire”. All of this was inside the engine. I was like “I like the way you guys do this stuff”.

Their focus at the time was super-usable tools, which had never really been done before. The Quake tools were OK, but they weren’t very user-friendly for non-engineers. We had a bunch of designers who were not engineers, so they needed to know how to use this stuff.

So we said “you guys have good tools, everybody’s really nice, you’ve got a bunch of hardcore old-school guys who were around in the 80s doing the hardcore shit… how do you do your licensing?” And they said “We’ve never really done this before, this is new for us.” I don’t think they had an idea of how to do a licensing model back then. We told them “We’ve got Warren Spector, and we want to make a game using your technology”. When they heard that, it was clear that they were pretty excited to work with us.

I honestly don’t remember the terms of the original licensing agreement. Tim would probably remember. I don’t remember how much we paid, but I don’t think it was very much.

DL: It probably would have been in line with what Id was charging for Quake at that time?

CN: I think it was less! That was another good thing. Their technology was relatively new. We were not the first licensee. Wheel of Time was one of the early ones, Klingon Honor Guard was another.

[Author’s Note: You can read more about the history of licensing the Unreal Engine in my interview with Tim Sweeney on the Unreal Editor]

"I think if we had chosen [the Quake engine], it would have been a much more difficult game to make."

So, we decided to do it. They gave us all the source code, and they said “We’ll do our best to support you guys”. It was all one-on-one. We would just send an email if we had a question, there was no formal support structure at all.

There was a lot of pain because it was something that they had never done before. We sent them so much code, and so many suggestions, back and forth, and got updates. But I think it was the right decision. I think if we had chosen Quake, it would have been a much more difficult game to make.

I also became a member of the Unreal Tech Advisory Group. We came up with the idea for the first tech meeting, where the leads from each of the companies who were licensees – which were, at the time, maybe four of us – all got together, talked about how to improve the engine, yelled at Tim about stuff that was silly, drank too much, ate too much, the usual stuff. I still stay in touch with all these people today.

"We came up with the idea for the first tech meeting, where the leads from each of the companies who were licensees [of Unreal] all got together, talked about how to improve the engine, yelled at Tim about stuff that was silly, drank too much, ate too much, the usual stuff."

DL: Was there anything that you shared with the other members of the Unreal Tech Advisory Group?

CN: I think we generally shared improvements to the Unreal Editor. It was great, but it still had a long way to go. Then, as we put it in the hands of more designers and artists, they also had suggestions for improving workflows. So, we would either implement it ourselves and then send it back to the Unreal team, or make a request to the Unreal team if they were able to do it more quickly.

We made a lot of changes to the core editor, but most of them were very specific to our game. We shared some of it, but most of the other teams weren’t really making the same kind of game.

I had to write a ton of systems that – I think – were some of the very first ever of those types of systems. At least, I’d never seen them before. For example, a system to blend animations, basically what we call morph targets or blend shapes today. I don’t remember anybody having done that in a game before that time. It could have been done somewhere, but this is before the widespread availability of the internet, so it was really hard to share information.

DL: Can you tell me about some of the changes that you made to the Unreal Editor?

CN: One thing – which, I don’t know why they didn’t have in the editor at the time – was camera spline visualization.

In the first versions of UnrealEd, you would drop down and connect camera path points. You could see the points, but you couldn’t see the connections between the points, which would determine the path that the camera would take.

DL: It’s like playing connect the dots, but without the numbers…

CN: Right! There are control points on the spline, and the designers couldn’t always tell where it went. They wanted to be very exact about how the camera was going through paths. So I added that, and they loved it.

It was a really simple thing. I mean, it was so easy to code: draw the coordinate axes at each main control point, and then draw the control point so you actually see where it went. It helped them so much, but it was such a simple thing. I don’t know why we had to add it, but, I guess Tim just didn’t think that they needed it.
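To make the idea concrete, here is a minimal sketch of how an editor could turn camera path points into a visible line. It is not the Deus Ex or UnrealEd code: the interview does not say which kind of spline was used, so a Catmull-Rom curve is assumed, and the names `catmull_rom` and `path_polyline` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def catmull_rom(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Point on the Catmull-Rom segment between p1 and p2, for t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * ((2 * b) + (-a + c) * t
               + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def path_polyline(points: Sequence[Point], samples_per_segment: int = 8) -> List[Point]:
    """Vertices an editor could join with line segments to show the camera path."""
    if len(points) < 2:
        return list(points)
    # Duplicate the end points so the curve passes through the first and last point.
    padded = [points[0]] + list(points) + [points[-1]]
    polyline: List[Point] = []
    for i in range(1, len(padded) - 2):
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            polyline.append(
                catmull_rom(padded[i - 1], padded[i], padded[i + 1], padded[i + 2], t)
            )
    # Close the path on the final control point.
    polyline.append(tuple(float(c) for c in points[-1]))
    return polyline
```

Drawing a line between each consecutive pair of returned vertices, plus a small axis gizmo at every control point, would give designers the kind of feedback described above.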

DL: It’s funny, because I’m thinking about the first exposure that a lot of people had to the original Unreal game was a camera flying through a castle, so it’s not like Epic wasn’t doing any camera paths…

"We relied way too heavily on UnrealScript. That was one of the mistakes we made. UnrealScript was super flexible, but man, it was slow."

CN: I think a lot of that stemmed from the fact that a lot of their level designers were super technical and they were used to doing that kind of stuff in their head. But as the industry slowly matured, you had to create tools that were designed for all skill levels. Not every designer was an engineer… and certainly not today! That’s the exception rather than the rule. So, you have to make sure it’s usable and easy.

Also, I still don’t know why the pawn is that dinosaur head…

DL: [laughs] Yes, Tim and I spoke about that in our interview! So, is there anything you regret about any of the technology decisions that you made?

CN: We relied way too heavily on UnrealScript. That was one of the mistakes we made. UnrealScript was super flexible, but man, it was slow. So, we did way more than we should have in UnrealScript, as opposed to native C.

Who let the Docs out: True North and False Crashes

[Author’s Note: At this point in the interview, I open up the Deus Ex Editor (a customized version of UnrealEd), as well as the documentation for the Deus Ex SDK]

DL: The Deus Ex SDK installer contains a folder called “Docs” with a couple of documentation files that are attributed to yourself, Robert White, Sheldon Pacotti, Al Yarusso, and Scott Martin. Can you tell me who those people were and what they worked on?

CN: Al was in charge of the conversation editor. Scott was in charge of the AI. I was in charge of… everything. [laughs] I was the assistant director and lead programmer, in addition to all the other stuff. But we all did a bit of everything, because there were only three engineers for the whole project, from start to finish.

Chris Norden (bottom left), Al Yarusso (above and to the right of Chris, wearing a watch), Robert White (middle row and slightly right of center, wearing sunglasses), Sheldon Pacotti (bottom right), and Scott Martin (second row, extreme right, also wearing sunglasses)

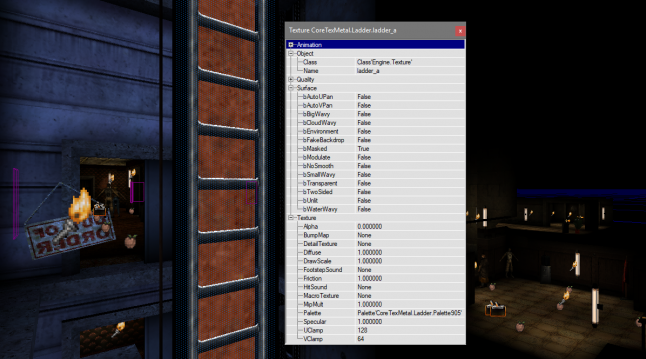

DL: In the documentation for the editor, there is a section that reads “Unreal does not support [ladders] natively but this was added for Deus Ex”. Can you tell me more about that?

CN: Ladders were interesting because we needed full level navigation, and traditionally in a first-person shooter – at the time – it was all stairs and ramps.

One of Warren’s design edicts – because, design is law! – was the player should be able to complete any objective in any way. The overarching idea for the whole game was that if you want to play the whole game without firing a shot, you can. Now, that didn’t quite happen, there were a few areas where you were required to fire a few shots, but we came pretty damn close.

DL: I think in the sequels they succeeded in doing that.

CN: Yeah, I think so.

So yeah, you should be able to play the entire game without firing a shot. You should also be able to play the entire game with doing nothing but firing shots. You should be able to play the entire game by never being detected by an enemy. You should be able to do all of these different things.

DL: Is the lack of ladder support one of those things that you talked to Tim about during your Unreal Tech Advisory Group meetings?

CN: I have to go back and look at the script code and see if I wrote “Fuck you, Tim!” in there, [laughs] because generally that’s what we would do when we got mad, we would write comments to remind ourselves about it later.

When we started thinking about how to support ladders, at first, we said “let’s build geometry, and then maybe you can actually do some weird physics things where you can grab the rungs, and sync up the animation” and then we were like “fuck this, it’s way too complicated”.

DL: [laughs]

CN: We had to do all this stuff really fast, because the designers were like “we need this, we need this, we need this!”

So, I ended up hacking the hell out of it. There was this thing called texture groups. It was basically just a string tag assigned to certain types of textures so it was easier to sort. We could also query it programmatically. So we said “why don’t we just have the artists make certain textures that are climbable textures?” and then we’ll just put them in a texture group, and do a ray-cast and detect them, and then just glue you to it in the direction you’re going, with some constraints. It worked fine, but it wasn’t always perfect, because when you got to the top of it, you’re not casting anymore, and we had to push you up and over. So, it wasn’t perfect, but it ended up working, and it was a cheap and easy hack.
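The hack Chris describes can be sketched roughly as follows. This is an illustration under stated assumptions, not the shipped code: the texture names, the `Climbable` group name, the `TraceHit` structure, and the reach and speed values are all hypothetical, and the ray-cast itself is assumed to be supplied by the engine.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical texture-to-group table; the interview does not list the real names.
TEXTURE_GROUPS = {"LadderMetal_A": "Climbable", "BrickWall_B": "Brick"}


@dataclass
class TraceHit:
    texture: str     # texture on the surface the forward ray-cast hit
    distance: float  # distance from the player to that surface


def is_climbable(hit: Optional[TraceHit], reach: float = 48.0) -> bool:
    """True when the ray hit a nearby surface whose texture is in the climbable group."""
    if hit is None or hit.distance > reach:
        return False
    return TEXTURE_GROUPS.get(hit.texture) == "Climbable"


def step(state: str, hit: Optional[TraceHit], forward_input: float,
         climb_speed: float = 100.0) -> Tuple[str, float]:
    """Return (new_state, vertical_velocity) for one tick of player movement."""
    if is_climbable(hit):
        # "Glue" the player to the surface and move along it in the input direction.
        return "climbing", climb_speed * forward_input
    if state == "climbing" and forward_input > 0:
        # The ray no longer hits the ladder, so the top was reached: push up and over.
        return "mantling", climb_speed
    return "walking", 0.0
```

The second branch is the awkward case from the interview: once the player's ray clears the top of the ladder texture there is nothing left to detect, so the code has to nudge the player over the edge on its own.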

Today, you might think “why wouldn’t they have ladders, that doesn’t make any sense?”, but back then, there was nothing. It was just BSP chunks, we didn’t have static meshes… we didn’t have any of that.

DL: UnrealEd did have a really nice spiral staircase generator [laughs] but no ladders.

CN: Yeah, that’s true… I think we yelled at the designers for using it, because it generated holes in the BSP. It looked nice if there was nothing else around. But, as soon as you started doing angle cuts on the BSP, you could get these little holes that would make you fall through the BSP, but you couldn’t see the holes. It was such a pain in the ass… we were like “Don’t do that! It looks cool, but it’s broken…”

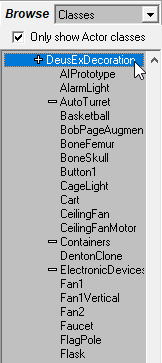

DL: So, the documentation also describes a class called “DeusExLevelInfo”, which contains information about the map, like if it was a multiplayer map, the author of the map, where the mission takes place, the mission number, and so on. But, I wanted to ask you about the “TrueNorth” thing…

CN: [laughs] That was the Unreal “BAM” type: Binary Angle Measurement!

DL: What’s up with these crazy numbers?!

CN: This was back when doing floating point operations was really expensive. Hardware was limited, and memory was very tight. Rather than using an 8-bit number to represent 360 degrees, which would only provide up to 255 units of precision – so, approximately 1.4 degrees per unit, which is not exactly accurate enough – Tim went with a 16-bit number for orientations, so you get a ton of precision.

As a programmer, these numbers are perfectly easy. Those are all just powers of two, no problem. You can do them all in your brain. But, designers and artists had a little more trouble. But, we had little shortcuts for helping designers remember them.
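For readers who want to see where the "crazy numbers" come from, here is a small sketch of the arithmetic, assuming a full turn maps onto the 65,536 values of a 16-bit integer as described above. The function names are hypothetical and this is not engine code.

```python
# Assumption: one full turn (360 degrees) spans the 16-bit range, i.e. 65536 units.
BAM_UNITS_PER_TURN = 1 << 16


def degrees_to_bam(degrees: float) -> int:
    """Convert degrees to a 16-bit binary angle, wrapping around a full turn."""
    return round(degrees * BAM_UNITS_PER_TURN / 360.0) % BAM_UNITS_PER_TURN


def bam_to_degrees(bam: int) -> float:
    """Convert a binary angle back to degrees in the range [0, 360)."""
    return (bam % BAM_UNITS_PER_TURN) * 360.0 / BAM_UNITS_PER_TURN


def add_bam(a: int, b: int) -> int:
    """Add two binary angles; masking to 16 bits performs the wrap-around."""
    return (a + b) & 0xFFFF
```

Under that assumption a quarter turn is 16384, a half turn is 32768, and three quarters is 49152, which is why the values look arbitrary to a designer but are simply powers of two (and their sums) to a programmer. Wrap-around also comes for free: adding 32768 to 49152 and masking to 16 bits lands back on 16384.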

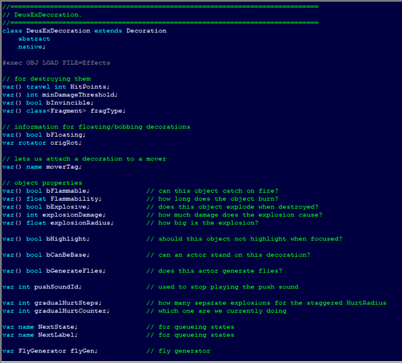

[As we are working with the editor, Chris comes across the Decorations class]

CN: Decorations was also a huge class. Everything interact-able that was a mesh was a decoration. That’s pretty much everything in the game!

DL: Under the Decorations section in the documentation, it reads “We advise you that changing some of the fields other than those listed below, could cause game or editor crashes.” Do you remember why that was the case?

CN: [laughs] I don’t remember why, honestly. I think that because Decoration was such a big class, and there was a lot of Epic stuff in there that interacted with the engine in weird ways, rather than try to explain why a particular field might give unintended behavior to a designer who may not understand the technical reasons, we would often say “uh, just don’t use it, it will crash”.

DL: [laughs]

CN: Kinda a scare tactic. In our programmer meetings, we’d ask ourselves “how do we explain to the users that this is going to corrupt the BSP chain, and do this and this… ah, we’ll just tell them that it crashes.”

I would have to go back and look at the code, but we might have even been guilty of hiding intentional crashes in a few places to keep some users from screwing with certain things…

DL: So, here in the ParticleGenerator section, it reads “One of the main things is that it can be turned on and off via triggers and unTriggers.” So, it wasn’t possible to trigger and untrigger particle systems in the version of Unreal you were working with?

CN: At the time, no. You put the particle system in there, and it was on all the time, and never turned off. Which I thought was a weird little oversight.

"We still had to support software rendering. This was before hardware rendering was much of a thing. Tim had amazing software skills. He wrote an awesome software renderer, it was gorgeous."

Other than that, the Particle System in Unreal was awesome. A lot of it was assembly. It was procedural, and it was really well done compared to anything else out there. The designers loved it. They loved it a little too much, in fact. You could easily kill the frame-rate, and they went crazy with translucent layers over and over and over.

We still had to support software rendering. This was before hardware rendering was much of a thing. Tim had amazing software skills. He wrote an awesome software renderer, it was gorgeous.

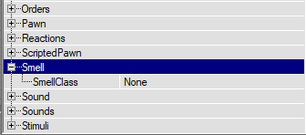

[As we are looking at the different objects in the game, I notice a category with an unusual-sounding name in the Properties panel]

DL: What is the “Smell” property?

CN: Smell was for animals to track. Basically, dogs could track smell nodes. If I’m remembering correctly, the player would leave smell nodes for a certain amount of time after they pass through areas. So, we made it so that dogs could track that.
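As a rough illustration of the idea (the feature was reportedly cut, and Chris is recalling it from memory), a trail of timed smell nodes might look like the sketch below. The class names, the lifetime value, and the oldest-to-newest ordering are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SmellNode:
    position: Tuple[float, float]  # where the player was when the node was dropped
    created_at: float              # game time in seconds


class SmellTrail:
    """Nodes dropped by the player that expire after a fixed lifetime."""

    def __init__(self, lifetime: float = 30.0) -> None:
        self.lifetime = lifetime
        self.nodes: List[SmellNode] = []

    def drop(self, position: Tuple[float, float], now: float) -> None:
        """Record the player's current position as a new smell node."""
        self.nodes.append(SmellNode(position, now))

    def live_trail(self, now: float) -> List[Tuple[float, float]]:
        """Discard expired nodes and return the remaining positions, oldest first."""
        self.nodes = [n for n in self.nodes if now - n.created_at <= self.lifetime]
        return [n.position for n in self.nodes]
```

A tracking animal could walk the returned positions in order, which would lead it to the player's most recent location even when the player is out of sight.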

DL: So if you would hide behind a box, the humans couldn’t find you, but the dogs could…

CN: Exactly, because that’s the way the world works, and we thought that was a pretty cool gameplay thing. However, I think we ended up cutting it. Since there was no visual feedback, it was a difficult concept to convey to the player.

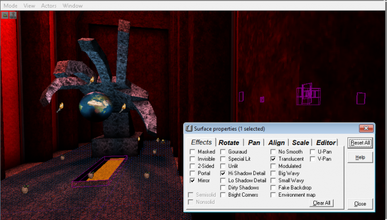

[We open the “Intro” level, which features one of the most iconic set-pieces of the game: a huge, intimidating hall featuring the statue of a giant hand, holding a spinning globe, which is seen in the opening cinematic of the game]

DL: I have a question about this. I don’t see a mirror of that geometry under the floor. In other games, mirrors would be faked by placing duplicate geometry on the opposite side of the floor...

CN: Nope, the mirrors actually worked! They were super slow, so we told the designers to rarely use them, but they were actual mirrors.

In fact, when I wrote the laser code… [laughs]

DL: [laughs] I see where this is going…

CN: …I actually had to check for mirrors. I had a test level where I would test the code for all the devices. When I did the laser pointer code, I built a reflective-mirror disco ball, and then I built a hallway with mirrors on each side. I took a laser pointer, and then I shined it at the disco ball, and it worked!

Of course, the frame-rate tanked, because you’re doing all those line-traces…

See the Lightwave: The “LWO23D” Command line tool

[Author’s Note: “LWO” stands for “Lightwave Object”. This is the file format for 3D geometry in Lightwave 3D, a software package from Newtek, also based out of Texas, like the Deus Ex team was at the time]

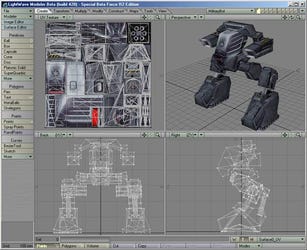

Screenshot from Tack's Deus Ex Lab

DL: So, why did you guys choose to use Lightwave?

CN: It’s what our artists were familiar with. So, we had to adapt to that.

DL: Tell me more about why you wrote the exporter as a command-line tool

"There was no skeletal animation in the game. I don’t know of any engine that did at that time. So, it was all vertex blended."

CN: One of the things I did a lot of, and I still do today, is writing tiny command line tools to automate tasks. Being an old-school programmer, I dislike unnecessary complication. I dislike most of C++. I dislike templates. I dislike all these things that waste time.

When I write command line tools, I’ll write a Win32 command-line, eliminate all of the built-in stuff, start with an empty file, with just “int main()”, and just go from there. It’s fast, it’s portable, and you don’t have to worry about it.

DL: I imagine that exporting static meshes was pretty standard. But, tell me about how you exported the animation.

Screenshots from Tack's Deus Ex Lab

CN: There was no skeletal animation in the game. I don’t know of any engine that did at that time. So, it was all vertex blended. Skinning was very expensive back then. No GPU, it all had to be done on CPU, you had to try to avoid floating point as much as possible.

Inside of Lightwave, they would do the skeletal animation, then output the keyframes. You’d have to create the t-pose, because in order to blend the animations, you had to start from a common base. So, they were basically offsets to the t-pose so they would blend properly. The blends were done on the engine side.
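The "offsets from a common base" idea can be sketched in a few lines. This is a generic morph-target blend written for illustration, not the engine's implementation; the function name and the data layout (lists of x, y, z tuples) are assumptions.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def blend_pose(base: Sequence[Vertex],
               targets: Sequence[Sequence[Vertex]],
               weights: Sequence[float]) -> List[Vertex]:
    """Blend target poses onto a base pose, vertex by vertex.

    Each target contributes weight * (target - base), so every pose is
    treated as an offset from the same base (the t-pose in the interview).
    """
    result: List[Vertex] = []
    for i, base_vertex in enumerate(base):
        blended = list(base_vertex)
        for target, weight in zip(targets, weights):
            for axis in range(3):
                blended[axis] += weight * (target[i][axis] - base_vertex[axis])
        result.append(tuple(blended))
    return result
```

Because every pose is expressed relative to the same base, several animations can be layered at once without the mesh drifting, which is the reason Chris gives for starting from a common t-pose.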

DL: So, the original Unreal game did it the same way?

CN: I think so, yeah. I don’t think Tim added skeletal animation until the next version.

Talking Heads: ConvEdit and Lip-Sync

[Next, we open a folder called “ConvEdit”]

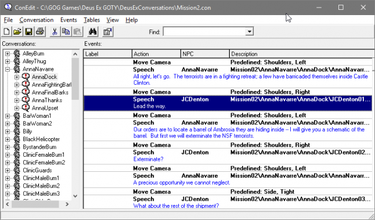

DL: Tell me about ConvEdit

CN: This was Al’s baby. He wrote it in Visual Basic.

DL: Just like UnrealEd, at the time.

CN: Yes. Back then, there weren’t that many ways to do this easily. There was Delphi and a few other UI frameworks. Other than those, you had to write it all natively, like Win32 API, GDI, and all this stuff, which was just a massive pain.

So, Visual Basic – which, nowadays is pretty primitive – at the time, was a really good, simple visual framework, with a custom programming language which was kinda Basic-like, a precursor to C#. It allowed us to very quickly prototype things and write tools.

"[Lead Writer Sheldon Pacotti] actually had RSI (Repetitive Strain Injury), so he couldn’t type. He did almost all of Deus Ex with Dragon Dictate voice transcription."

[Author’s Note: If you’re curious about the use of Visual Basic in game development tools at the time, check out my interview with Tim Sweeney on the Unreal Editor]

Sheldon was pretty much the person that used this. He wanted tools to control the camera, tools to control audio cues. He wanted a lot of cinematic tools that had never been done before. I learned what rostrum zoom was for the very first time working on this game with Sheldon.

I think – even today – this tool holds up.

By the way, Sheldon actually had RSI (Repetitive Strain Injury), so typing was very painful. He did almost all of Deus Ex with Dragon Dictate voice transcription, which was nowhere near as good as it is today. He had to wear braces because he had really bad RSI.

DL: Wow! I had no idea. There’s a ton of dialogue in this game, and a lot of branching, as well…

CN: There was a massive amount of data, even just for a single mission.

Sheldon wanted a lot of control, and a lot of interactive dialogues. He wanted the player to make choices that mattered, basically. He and Warren both hated clicking through dialogue with no choice. They wanted you to interact with the characters and choose things that mattered, and then set flags back in the game, and the game would remember how you treated characters or how you behaved moving forward.

DL: The first time I played Deus Ex, I remember being briefed by Anna Navarre in her office. As I would typically do in any other video game, I was running around the office, messing with stuff while I was waiting for the NPCs to end their dialogue. Suddenly, she stops her speech, turns towards me and says “What the hell are you doing?”

CN: [laughs]

DL: I froze for a few seconds. I asked myself, “is this really happening?” It was such a significant event for me in terms of immersive-ness in a video game. No other games had done that before. I suspect that is in part thanks to the Conversation Editor.

CN: One of Warren’s examples was “If you’re in somebody’s office, being an asshole and breaking stuff, the character should say something! They shouldn’t ignore you, because that’s just not realistic.”

DL: Was there something related to the cut-scenes that was particularly difficult to provide tools for?

CN: We had so much dialogue, and it was all voiced. We spent weeks in the recording studio, recording lines with actors. That meant that we had to think about lip syncing. I started thinking, “How the hell do you lip sync that much dialogue?” Not only did we have a ton of dialogue, we also had multiple languages.

There weren’t many tools to help with lip syncing at the time. Most people would do pre-processing: run the dialogue through a tool, output a data file, and play that back. But, I was like “We don’t have time to do that, and if we have to do a re-take, we have to re-process all of that…”

"I started thinking that there has to be a way to do [real-time lip-sync] by dynamically analyzing the audio. I had studied electrical engineering in college, and I decided to figure it out… but without telling anybody."

So, I started thinking that there has to be a way to do this in real time by dynamically analyzing the audio. I had studied electrical engineering in college, and I decided to figure it out… but without telling anybody.

DL: [laughs]

CN: Let’s see if I can do this and surprise everybody. Again, I don’t think that this had ever been done before, but now it’s kinda commonplace.

At the time, the closest thing I had seen to this was Half-Life. They used a technique called Envelope Following, which was literally just: open and close the mouth, based upon the loudness of the sound. It was OK, but it didn’t look that real.

DL: It was basically “jaw flapping”.

CN: Yes, yes. That’s exactly what it was.

I remember talking to Gabe Newell at the time about what they were doing, and he said “Oh, we’re just using Envelope Following, and it’s good enough. Everything else was too complex.”
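For comparison, the loudness-only approach is simple enough to sketch in a few lines. This is a crude illustration of the general idea, not Valve's code: it uses a windowed RMS level rather than a smoothed attack/release envelope, and the window size and `full_open_level` threshold are made-up values.

```python
import math
from typing import List, Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of a block of samples in the range -1..1."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def jaw_openness(samples: Sequence[float], window: int = 4,
                 full_open_level: float = 0.5) -> List[float]:
    """One jaw value (0 = closed, 1 = fully open) per window of audio."""
    values: List[float] = []
    for start in range(0, len(samples), window):
        level = rms(samples[start:start + window])
        values.append(min(1.0, level / full_open_level))
    return values
```

The output only ever says "how loud", never "which sound", which is why the result reads as jaw flapping rather than speech.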

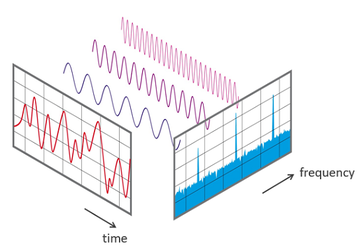

So, I got back home and started figuring out some math stuff, and wrote a system which did an FFT (Fast Fourier transform). Again, floating point was not cheap at the time, and I think I wrote an integer approximation that faked it.

What it does is analyze a short section of audio in real time, extract the major frequency components, and map them to six or seven different visemes.
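As a rough illustration of that pipeline (short audio window, FFT, strongest frequency band, viseme), here is a minimal sketch. It is not the Deus Ex implementation: the interview does not give the actual mapping, so the band edges and viseme names in `VISEME_BANDS` are invented for the example, as are the function names and the silence threshold. It also uses ordinary floating point rather than the integer approximation Chris mentions.

```python
import cmath
import math

def fft(x):
    """Recursive radix-2 FFT. len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

# Hypothetical band edges (Hz) and viseme names, for illustration only.
VISEME_BANDS = [
    (0, 300, "closed"),
    (300, 700, "oh"),
    (700, 1200, "ah"),
    (1200, 2000, "eh"),
    (2000, 3000, "ee"),
    (3000, 4000, "ss"),
]

def pick_viseme(window, sample_rate, silence_threshold=1e-3):
    """Pick the viseme whose frequency band holds the most energy."""
    n = len(window)
    # A Hann taper reduces spectral leakage from the window edges.
    tapered = [s * (0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)))
               for i, s in enumerate(window)]
    spectrum = fft(tapered)
    energies = [0.0] * len(VISEME_BANDS)
    for k in range(1, n // 2):  # skip DC, stop below the Nyquist bin
        freq = k * sample_rate / n
        power = abs(spectrum[k]) ** 2
        for b, (lo, hi, _name) in enumerate(VISEME_BANDS):
            if lo <= freq < hi:
                energies[b] += power
                break
    if sum(energies) / n < silence_threshold:  # crude energy gate for silence
        return "closed"
    return VISEME_BANDS[energies.index(max(energies))][2]

# Usage: classify a 256-sample window of a 1 kHz tone sampled at 8 kHz.
rate = 8000

def make_tone(freq, n=256):
    return [0.8 * math.sin(2 * math.pi * freq * i / rate) for i in range(n)]

viseme = pick_viseme(make_tone(1000), rate)
```

Because the analysis only looks at the spectrum of the sound, not at words, the same code path handles any spoken language, which fits the multi-language requirement described earlier.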

I stealthily asked one of the artists “Hey, can you model me these blend shapes: I want ‘oh’, and ‘ah’, lips closed” and all these different ones. He asked “Why?” and I said “don’t worry about it, just do it and give me the data…”

Fast Fourier transform (image from Wikipedia), and the Preston Blair Phoneme Series

DL: [laughs] This seems like something that would lend itself perfectly to the blending approach that you took with Deus Ex.

CN: Yeah, it’s perfect. Exactly.

So, they gave me the data, I wrote the math, I manually mapped shapes as best I could. There wasn’t much research on this, and it was not cheap in terms of performance.
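To show how a chosen viseme could drive artist-made blend shapes, here is a minimal sketch of weighted vertex blending. Everything in it is an assumption for illustration: the function names, the tiny two-vertex "mesh", and especially the per-frame easing in `step_weights`, which is one common way to avoid popping between shapes and is not described in the interview.

```python
def blend_shapes(neutral, targets, weights):
    """Offset each neutral vertex toward the target shapes by their weights.

    neutral: list of (x, y, z) vertices
    targets: dict of shape name -> list of (x, y, z), same vertex order
    weights: dict of shape name -> weight in 0..1
    """
    blended = []
    for i, base in enumerate(neutral):
        x, y, z = base
        for name, w in weights.items():
            tx, ty, tz = targets[name][i]
            x += w * (tx - base[0])
            y += w * (ty - base[1])
            z += w * (tz - base[2])
        blended.append((x, y, z))
    return blended

def step_weights(current, active, names, rate=0.3):
    """Ease weights toward 1 for the active shape and 0 for all others."""
    result = {}
    for name in names:
        goal = 1.0 if name == active else 0.0
        old = current.get(name, 0.0)
        result[name] = old + rate * (goal - old)
    return result

# Usage: two vertices standing in for a mouth, and two hypothetical shapes.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "ah": [(0.0, -1.0, 0.0), (1.0, -1.0, 0.0)],
    "oh": [(0.2, -0.5, 0.0), (0.8, -0.5, 0.0)],
}
weights = {"ah": 0.0, "oh": 0.0}
for _ in range(3):  # three animation frames while "ah" is the active viseme
    weights = step_weights(weights, "ah", targets.keys())
mesh = blend_shapes(neutral, targets, weights)
```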

I created a test level with a giant head in the middle, and fed a couple of lines to it. I ran a German line, an English line, a French line through it… and it all sync’d up perfectly. I was like “Holy shit, this is amazing! This actually works!”

I found Warren. I said “Hey, I want to show you something that I think is kinda cool…” And… I think he freaked out. [laughs] He was like “What the hell, can we put this in the game? This is really cool!”

So, we did ship with it, but I had to make a lot of tweaks to it for performance, and unfortunately the visual quality degraded. But, my original test level was gorgeous. Still, I think it was the very first real-time lip syncing. I don’t remember seeing anybody else doing it.

I probably should have patented it. But I don’t believe in software patents, because I think they’re kinda evil…

I also got into a huge argument with one of the Art Directors in Dallas who wanted to use it for Anachronox. They were supposed to ship before us. I said “Well, I don’t mind sharing, but I want Deus Ex to be the first game to ship with it”. He said “No, you have to give it to us!” He was basically yelling at us in the hallway. I said “No I don’t”, and Warren backed me up on that. Turns out, we ended up shipping first, so it didn’t matter anyway. In fact, I think they ended up implementing their own version of it separately, because we wouldn’t give it to them. It may have been selfish, but I wanted us to ship with it first, so we could showcase it.

Everything else that came out of Ion Storm was developed in Dallas: Dominion: Storm Over Gift 3, Daikatana, Anachronox, all done in Dallas. Austin was only Deus Ex, and it was only our team, there was nothing else there. We were in a bubble. We were pretty isolated from the Dallas office, and that was intentional. We didn’t want to be around a lot of their… quite frankly, a lot of their bullshit. That separation was very intentional, and very important for us. There was no tech sharing between our team and their teams. No real contact.

Conclusion

DL: So, to wrap up, I wanted to ask: if you can give advice to tools developers who are reading this article, what would you say?

CN: Listen to your customer. Don’t ever write tools in a vacuum. As an engineer, you think a certain way, and you think “Oh, I can use the tool this way, and that’s the most efficient way, so that’s the way I’m going to write it.”

You’re almost always wrong. You have to listen to your designers and artists, because they have a very different workflow. They work in very different ways than anybody else. You’re not the one who is going to be using the tools. For the most part, it’s them.

DL: Have you ever dealt with that mentality in some of the teams that you have worked with? And, how did you try to deal with that?

CN: You just have to tell them, “Why do you think this user is having this issue? Do you think it’s a coding problem? Do you think it’s a screen layout problem? Do you think that font is too small? Is the color wrong on this button? Are you doing something that’s not standard?”

Because, you look at a lot of the professionally designed tools, and they’re usually pretty consistent. There are design guidelines for how those things should work.

The creative team has to be able to use the tools. They really shouldn’t have to read docs. In an ideal world, everything should be obvious.

Nowadays, there are UX/UI designers. We didn’t really have that back then, so we had to design the interface ourselves, but with feedback from the users.

So, sit with the users and watch the way that they use the tool. We would watch how they would use Lightwave. When we would write the first version of the tool, we would sit there with them – without telling them what to do – and watch them, and take notes, like “they struggled with this”, or “they had to constantly dig into this menu to find this”, or “maybe we should make this a shortcut”. Just watch them, and learn, and adapt.

So you have to really think about the designer’s workflow. You minimize excessive movement, you minimize digging in menus, and you try to make keyboard shortcuts. We were huge proponents of keyboard shortcuts for everything, because that speeds up your workflow. As you learn a tool, you start to get really fast with keyboard shortcuts, and you don’t have to deal with menus.

You can’t fall into the classic engineer trap of “I know best. I wrote it, I know how it works. You’re just an idiot because you can’t use it.” That is the worst thing you can do while working on tools.

DL: That’s excellent advice. Thank you, Chris!
