Anti-Social behaviour in games viewed through the lenses of Engineering, Enforcement, and Education

Ben Lewis-Evans & Ben Dressler use the idea of the "three E's" to examine anti-social behaviour in games and the possible ways in which such behaviour could be reduced or prevented.

By Ben Lewis-Evans (@ikbenben) & Ben Dressler (@BenDressler)

Anti-social behaviours of even just a minority of players in a game can ruin the experience for the majority and build a reputation for your game as a hostile or "toxic" environment. So what can be done about it? In this article we will approach this issue through the lens of the "3 E's": Engineering, Enforcement, and Education. The 3 E's are most typically used in accident prevention research but here we will apply them to preventing undesirable behaviours in games. To be brief, Engineering is about enabling or disabling behaviour with design. Enforcement is about your policies and their, well, enforcement. And Education is about information delivery. Now let’s take a closer look.

The first of the E's, Engineering is generally considered to be the most effective for direct behaviour change. The basic approach with Engineering is to remove the ability to perform an unwanted behaviour or to reduce its impact. In safety science examples of this would be crash barriers on a highway (reducing the impact of falling asleep while driving) or a power tool that won't turn on without a safety guard in place (preventing you from literally screwing yourself).

The obvious example of engineering in games for social behaviour is Journey. With Journey the folks at thatgamecompany have designed a game that makes it basically impossible to grief or interfere with players, almost exclusively by using 'engineering' methods. Specifically, they made four main engineering design decisions. The first is a pretty severe limit on communication: The only way of communicating in Journey is a melodious beeping sound. This not only removes the possibility for a player to abuse others verbally or via text but it also removes a channel via which griefers can get the response they are after, i.e. hearing how pissed off they have made the other person. Interestingly, while negative communication has been basically removed in Journey, cooperative communication is also made potentially more interesting. That is, if you want to cooperate, it is now a guessing game to work out what the melodic beeps of your companion might mean in a given situation.

Beep, beeep, beep! Beep? Beep... Beeep!

The second important example is how player interactions in Journey are engineered. Specifically, that apart from communicating, the only possible direct interaction between players is helping each other via recharging the jump ability. That is it. You can only help. This not only places a limit on negative behaviour but since you automatically help other players just by being close to them it is likely to also increase feelings of reciprocity, in that people tend to like and want to help people who help them, thus inducing a virtuous circle of helping. Furthermore, since recharging your own power automatically helps your nearby companion, even selfish behaviour could result in getting this virtuous circle started. Plus the accompanying pleasant sounds and animations without doubt play their part by creating an impression of touch and connection between players.

Interestingly, the folks at thatgamecompany are on record as saying that at one point people could also interact with each other in Journey via player collision detection. However, nearly immediately people started to use this player collision to block and push other players around to grief. This suggests the obvious: if you give players the option to affect each other, they will use it, and perhaps not in a fashion that is entirely social.

The third big engineering example in Journey comes down to using the power of similarity. People like others that are similar to them. In fact people ascribe all sorts of positive things to similar people, even if the similarity is something as arbitrary as sharing the same birthday. Similarity is effectively a cognitive shortcut that says that “I am a good person, therefore people who look and act like me must be good people” (there is of course a downside of this in terms of people who are not similar to you). In Journey, players look pretty much the same and have pretty much the same capabilities (some characters may be able to fly for longer, but that is it). They face the same dangers and obstacles and even the small bits of story link them together in a joined struggle. Also limiting the number of players to only two may help with the feelings of similarity and connection between the players, as does setting the game in desolate environments that highlight the humanity and similarity of the other player. Similarity is further reinforced by the lack of communication and by players not being linked to their PSN IDs (until the game is finished), thus allowing no way for real world differences to get in. This is a good example of how you can combine different engineering methods in a single design decision, as player names are also a way of communication, from the straightforward Night Elf called ‘iPWNurM0M’ to a witty DOTA 2 team encountered by Ben (the DOTA 2 playing one) whose players had the single letter names 'S', 'I', 'N', 'E', & 'P'.

Hey anonymous friend who is just like me, where are we going today?

The fourth and final engineering intervention that Journey uses is making people independent of others in terms of being able to enjoy and complete the game. Rather the other player is only there as a bonus. An extra. Sure they are an important extra for the wider emotional enjoyment and impact of the game. But in terms of actually playing and getting to the end of the game play experience they are not needed. This means that having another player along can, generally speaking, only make your experience better, not worse. Ben’s (we will let you decide which one) most remarkable experience with Journey was getting lost shortly before the end. When Ben finally got back to the path he found his companion – who had helped him along for quite a while - had waited for all that time, and for no direct in-game benefit.

All this said. There are a few things you can do to annoy someone in Journey. Firstly you could be "too helpful" to a new player and ruin their fun by pointing out every puzzle solution or the location of every secret. This may annoy some people. Also there are some tricks you can play with the environment that can ruin people's fun (sorry, not going to share these as they don't seem to be commonly known online). However, anyone you are griefing or annoying in Journey has a final engineering option to get rid of you. Since the multiplayer is random and based on proximity, they can just stand still and wait for you to walk away (or run away themselves), or drop connection and then reconnect and (theoretically) they will never have to deal with you again.

In a nutshell, Journey engineers a positive multiplayer experience by disabling nearly all communication, removing all interaction apart from helping, highlighting the similarity between players, and making players essentially mechanically independent of each other. The team at thatgamecompany deserves a lot of credit for connecting these methods (and more) in a way that elicits positive chain reactions between players and helps create meaningful interpersonal moments.

Some of the ways that Journey operates are sure to be copied in the future. However, it is somewhat unique. So what can be done in games that are structurally different and more reliant on things like complex communication, a strong player identity, larger teams, and direct player conflict?

Examples that are already being tested or used are opt-in or friend-only communication (e.g. League of Legends or Clash of Clans), chat filters, the ability to mute players, removing player collisions (imagine what WoW would have been like with player collisions…) or the removal of friendly fire from many online shooters. Opt-in is a good idea for chat because if something is opt-in people have a reduced tendency to, well, opt in. This is either because people miss the option to opt in, or simply because people have a tendency to just go with defaults (aka “The path of least resistance”). The flip side is that if you have a system in your game that you believe will help reduce anti-social play, but want to make it optional, then you should make it opt-out rather than opt-in.

Advanced skill/rank based matchmaking systems (e.g. StarCraft 2 or Halo) are another engineering approach that makes it less likely for players to be bothered by skill differences and the associated griefing. Bungie even tried to take matchmaking one step further by allowing you to specify various social options (e.g. chatty or quiet games, team play or lone wolfing), which led Penny Arcade to joke that this could be taken further with playlists for older gamers with jobs and families.

A chatty, yet polite, team player - that is how Urk rolls

Passive helpful abilities in team games such as healing or buff auras could be seen as similar to Journey’s automatic helping. These passive, helpful abilities are likely to set up feelings of liking and reciprocity between players, although they are more likely to do so when communicated with clear signals to other players, i.e. a small icon showing that you currently are buffed may not be as effective as a glowing line of power coming from the person helping you. These automatic abilities could even include the auto-barking that some shooters have (“Sniper over here!”) without the need for players to have mics on and actually talk. Similarly, the enemy marking system used in Battlefield 3 is a way for players to communicate quickly (without mics) and also gain an advantage, although in the case of Battlefield its use is not automatic (which can lead to “Noobs! Mark your targets!”).

Another option would be to add more ‘physical’ connections between players. We already have Lara Croft and Drake animated to naturalistically touch walls as they walk past, or stumble occasionally on uneven ground. Why not apply this to team based games and have characters naturalistically pat each other on the back or even just support each other as they come close? Another option would be for players to make a “good behaviour” commitment within their community (perhaps signified by a particular in game symbol and/or accompanied by additional rights/privileges). It is known from other work in psychology (e.g. see the work of Cialdini) that making a commitment can have significant impacts on improving social behaviours if the commitment is made a) voluntarily and b) publicly.

The blue team/red team mechanic that is common in games is useful not only in making targets visually distinct but also stresses the similarities between teammates (although at the same time obviously it causes hostilities towards the other team, those filthy blues…). This red vs blue/horde vs alliance/empire vs alliance mechanic is most effective if people can pick their side (not that random assignment doesn’t work, it just isn’t quite as effective) and if there is a clear distinction and identity between the two teams. This kind of clear team identity is missing from some team based games, for example in Dota 2 attribution to either ‘Dire’ or ‘Radiant’ has little impact in terms of feeling like you are on a team e.g. the heroes look the same no matter what team you are on as does the UI.

Anonymity online is another structural issue that has often been blamed for anti-social behaviour. Not necessarily because of the common wisdom that people become jerks when they have anonymity and crowds, but because misunderstandings and miscommunications become much more common in such an anonymous, relatively context-free environment. It should also be noted that plenty of people are still jackasses in real world games and sports, so the influence of competition should be taken into account. Furthermore, as previously mentioned, anonymity in Journey is actually one of the factors that assisted with the social experience, so anonymity is not necessarily automatically a bad thing.

Finally, it is important to note that even though engineering can mean quite a lot of restriction and control on players, it is best designed in a way that this restriction is not experienced in a negative fashion by the majority of players. Not surprisingly people do not like to feel that they are being controlled and have a strong tendency to counteract any perceived manipulation (called “countercontrol” or “reactance”). However, people regularly talk about the freedom and openness of Journey despite it being basically linear and quite restrictive in many ways. So you can implement control without people feeling like they are being controlled and/or make the experience so enjoyable that players don’t mind/notice the control.

An Engineering aside: MOBA’s

If Journey is a great example of a game that has engineered sociability, MOBA’s like League of Legends, Dota 2, and Heroes of Newerth may be examples of games that encourage anti-social play simply due to their core design. First of all, each game is a significant time commitment (meaning that people will more likely feel the need to get something out of it). They are team-reliant competitive games where even just one bad move by one teammate may affect the enjoyment of the whole team, there is no replacement of players that drop out of the game, there is often no real team identity due to an unrestricted mix of heroes and random side assignment, the games have steep learning curves, and they operate on a ‘snowball’ model where power discrepancies between teams can increase in a runaway fashion. This exponential power gain and relatively slow gameplay means the game can quickly turn into an experience of being repeatedly stomped into the ground while you wait for the other team to show some mercy and just end it all. This may produce a feeling of superiority and power in the victors (who are running all over their opponents) and a feeling of helplessness in the people who are losing. Both of these are feelings that can easily lead to anti-social behaviour.

Literally snowballing in DOTA 2

That is not to say that you can’t find social play in a MOBA (or any other similar genre of game) or that MOBA's are "Toxic". But rather just that the way these games are structurally designed is part of the explanation for why the communities can be hostile. When you are, by design, forced to rely closely on your teammates and have to make big time commitments to do so, then when fellow players are not doing what you want you may not feel very social towards them.

Enforcement is the next of the 3 E’s and essentially is the use of incentives, penalties, rewards, reinforcements, and/or punishments. Examples from real life are obviously things like police enforcement or payment for work.

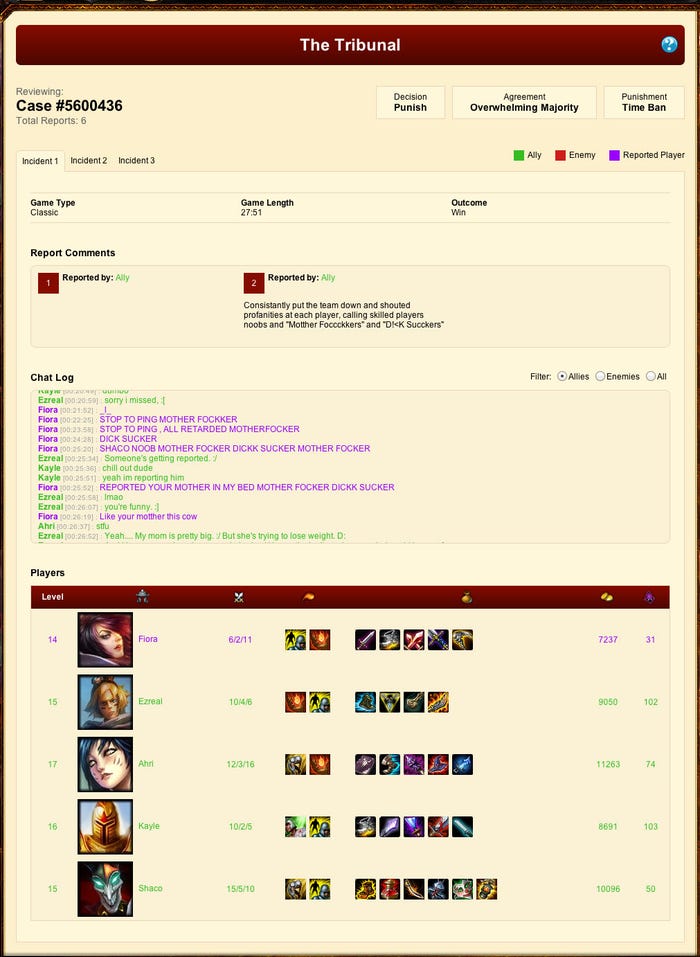

The extensive role of encouragement, aka rewards, in games in general has been well pointed out by John Hopson and others. Although, apart from attempting to make simply playing the game as rewarding as possible, encouragement is less often used to stop griefing in games. Rather, much like in wider society enforcement and punishment, often in the form of bans and/or being judged by peers (as League of Legends does with the tribunal), is probably the most common way in which game makers try to prevent anti-social behaviour. Interestingly, given the popularity of punishment in society, psychology in general advises that the use of punishment should be avoided if possible. There are a few reasons for this: People will resist if they feel controlled, being punished breeds resentment towards the punisher (i.e. the developer/publisher), punishment encourages deceit (i.e. getting more sneaky about misbehaving), that punishment can be hard to do effectively (as we will discuss), and finally that punishment only indicates whatever the person was punished for shouldn’t be done – i.e. it says “don’t do that” but doesn’t say “do this!” Furthermore, despite stories of babies, rats, and loud noises, punishment sometimes doesn’t generalise well. So banning a player for foul speech will probably not reduce their use of stealing loot or corpse camping.

So, just to be clear, psychology generally says that punishment should be avoided if possible. However, this is not to say that punishment doesn’t work. It can. But rather, it is that punishment is difficult to get right and it can have unintended negative side effects.

But, given that punishment is inherently appealing to people, and can work if the situation is right, let’s talk about the best way to design enforcement and punishment.

Enforcement can be summarised to work based on three basic principles.

1. The certainty of detection

2. The swiftness of consequence

3. The size of consequence (called “severity” in the case of punishment)

The above principles are also important for encouragement and reward. But, we will first talk about punishment. Which of these principles do you think is the most important? Certainty? Swiftness? Or Severity?

If you answered severity you are likely to be in the majority, however, you are also wrong. The size or severity of a punishment is actually the least important factor. Rather, the certainty of detection is generally thought of as the most important factor, closely followed by the swiftness of the delivery of the consequence, and only then the severity.

Ah, medieval times. Famous for the severity of their punishments and low crime rates... (Internet alert: Sarcasm)

Consider this example; imagine two systems of enforcing anti-social behaviour in a game. The first system will detect you 100% of the time and when it detects you, you will be prevented from playing for 10 minutes (a relatively minor punishment). The next system only detects at a rate of 5%, but when you are detected your account will be banned for 3 months (a relatively large punishment). Which is more likely to discourage you from whatever behaviour they are aimed at?

An example of the last scenario, the one with a low certainty but relatively high severity, could be a player reporting system for bad behaviour. Here, depending on how well designed the system is, reporting rates may be quite low. For example it may be hard to remember to report someone because the game moves on so quickly or the reporting function is hard to access. What this means is that most of the time the griefer is being rewarded like everyone else by having fun playing the core game. Plus they get the additional reward of griefing another player without consequence. Therefore, what is learnt is “I do this all the time and never get caught”. Then, even if someone is eventually caught, and severely punished, they can easily say to themselves “Well, I do that all the time with no negative consequence. I was just unlucky this time.” Furthermore, in reporting systems the behaviour often has to be looked at by a third party before a punishment is given. This reduces the ‘swiftness’ element of the punishment, meaning that the undesirable behaviour can continue to be performed (and continue to be rewarded) for quite a while before the banhammer arrives. With this in mind the practice of some companies of waiting until they have a large number of cheaters to ban at once (generating headlines) may also not be advisable, although there may be reason to delay in terms of learning about how the cheat works or building evidence.

An example of the 100% detection system that is used in games could be automatically punishing people for quitting games early. Here, the punishment is certain, swift, relatively well communicated, and at least for a while the bad behaviour cannot be performed. At the same time the punishment is usually relatively mild (a short waiting period to get into the next game). We have no stats to support this, but given the popularity of this measure across various games we also assume that it reduces (although obviously does not remove) rage quitting and dropping. So, it is likely that such quit-bans are an example of where punishment is being implemented well, i.e. certainly and swiftly.
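To put rough numbers on the two hypothetical systems described above, the sketch below compares them in two ways: the average lockout per offence, and the chance that a run of offences goes completely unpunished. It assumes "3 months" means 90 days and that each offence is detected independently; the function names are ours and purely illustrative.

```python
# Numbers come from the two example systems in the text.
# Assumption: "3 months" is treated as 90 days.
MINUTES_PER_DAY = 24 * 60


def expected_lockout_minutes(detection_rate: float, lockout_minutes: float) -> float:
    """Average lockout per offence: chance of being caught times penalty length."""
    return detection_rate * lockout_minutes


def chance_never_caught(detection_rate: float, offences: int) -> float:
    """Probability that every offence in a run goes undetected.

    Assumes each offence is detected independently of the others.
    """
    return (1.0 - detection_rate) ** offences


certain_mild = expected_lockout_minutes(1.00, 10)
rare_severe = expected_lockout_minutes(0.05, 90 * MINUTES_PER_DAY)

print(f"Certain but mild: {certain_mild:.0f} minutes per offence on average")
print(f"Rare but severe:  {rare_severe:.0f} minutes per offence on average")
print(f"Chance that 10 offences all go unpunished at 5% detection: "
      f"{chance_never_caught(0.05, 10):.2f}")
```

On paper the rare-but-severe system hands out far more lockout time per offence, yet a griefer still gets away with ten offences in a row roughly 60% of the time. That repeated experience of "nothing happened" is what the certainty principle says matters most.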

In the name of the Emperor, bring out the banhammer. Rage quitters (and Xenos) beware!

Unfortunately, much anti-social behaviour is not easily automatically detected, and thus cannot be easily dealt with in a swift and certain fashion (which is a problem with punishment). For example “feeding” in a game of Dota 2 or League of Legends where you intentionally get killed by the other team in order to let them gain levels and win. This leaves us with the often used, and often slow and less than certain, system of player reporting.

There are ways of improving reporting systems. You could look at tying them to some kind of automatic, minor, punishment that is immediately applied and communicated to the player who has been reported. Also, while severity of punishment is the least important factor, do think about what kind of punishment you are giving. Some games, such as Dota 2 and Max Payne 3 have systems in place where anti-social/cheating players are put into pools where they play against each other. This may seem like a good idea, as it means they are only ruining the game for other ‘bad’ people. However, you also risk giving such ‘bad kids’ a chance to meet each other, learn from, and reinforce each other (much like how prisons are said to sometimes make, not reform, criminals). So be careful, watch your reform rates.

Also in terms of reporting (for good or bad behaviour) immediate and clear communication is key! For example, Ben (obviously the naughty one) has one or two negative reports on his Xbox Live account. Whatever he did must have really annoyed the people who reported him because it isn’t a small thing to report someone on Xbox Live. However, Ben has no real idea of what he did, in what game, and when (really, he is an angel). So, the system is effectively useless in terms of Ben being able to learn what he did wrong so he could change his behaviour in the future. Conversely, in League of Legends you get clear feedback from other players immediately after the game and also get some direction as to what it was for (e.g. being helpful, being a good team player, etc.). This makes the feedback tangible, clear, and immediate, and therefore more likely to be effective.

Riot hopes you will be likely to reform after getting this card. Science backs them up.

What follows is that reporting systems also need to be easy and fast to use. If you want people to report or, preferably commend/reward, others don’t make it hard for them (Consider even not hiding it away behind a click on someone’s name for example). You could perhaps have a prompt/remind them to do it automatically at the end of each match. Another option could be to ask people to rate the quality of the game overall and then apply that as a score across all players (perhaps weighted by factors like if they lost or won the game or just looking at the opposing teams ratings) – resulting in a “games that this person plays in tend to be rated as 4.5 stars out of 5” and so on. Another option could be to use metrics to detect when someone may have been doing an unwanted/wanted behaviour (but you can’t say with certainty), and then prompt other players to confirm or deny these suspicions. An example of this last system would be boot voting that is automatically offered to the team after a certain number of team kills in a first person shooter.
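The "rate the whole game" idea above could be sketched roughly as follows. This is only an illustration: the data layout and the `player_match_scores` name are made up, and the weighting by win/loss or by opposing-team ratings mentioned above is left out for brevity.

```python
from collections import defaultdict
from statistics import mean


def player_match_scores(matches):
    """Average the overall star ratings of every match each player took part in.

    `matches` is a list of dicts with hypothetical keys:
      "players": list of player ids in the match
      "ratings": list of 1-5 star ratings submitted for that match
    """
    per_player = defaultdict(list)
    for match in matches:
        if not match["ratings"]:
            continue  # nobody rated this match, so it tells us nothing
        match_score = mean(match["ratings"])
        for player in match["players"]:
            per_player[player].append(match_score)
    return {player: round(mean(scores), 2) for player, scores in per_player.items()}


matches = [
    {"players": ["ana", "bo", "cy"], "ratings": [5, 4, 5]},
    {"players": ["ana", "dee"], "ratings": [4, 4]},
    {"players": ["bo", "cy"], "ratings": [2, 1, 3]},
]
scores = player_match_scores(matches)
for player, score in sorted(scores.items()):
    print(f"Games that {player} plays in tend to be rated {score} stars out of 5")
```

Because nobody is rated individually, a single bad match does little damage to any one player; only a pattern across many matches moves the score.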

If anonymity is needed (e.g. when players can communicate after the game), delaying notifications of being reported for anti-social behaviour a little might be a sensible trade off to avoid further negative interactions due to people being able to work out who reported them. Rather, you could present them after a few games have passed (e.g. in the last 5 games you were reported 10 times for bad language), which might still be immediate enough and is certainly better than many current solutions (but more immediate will generally be better). Such notifications also help create the impression that play is being monitored, thus increasing the expectation of detection. On the other hand, commendations or rewards for good behaviour should definitely be automatically communicated as close as possible to when they were received.
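A delayed, batched notification of this kind might look something like the sketch below. The `ReportDigest` class, its default of five games, and the message wording are all hypothetical choices for illustration.

```python
from collections import Counter


class ReportDigest:
    """Collects reports against one player and releases them in batches."""

    def __init__(self, games_per_digest=5):
        self.games_per_digest = games_per_digest
        self.games_since_digest = 0
        self.pending = Counter()

    def record_game(self, report_reasons):
        """Register a finished game and any reasons the player was reported for.

        Returns a summary message once enough games have passed, otherwise None.
        """
        self.games_since_digest += 1
        self.pending.update(report_reasons)
        if self.games_since_digest < self.games_per_digest:
            return None  # too early: a message now could reveal who reported

        message = None
        if self.pending:
            parts = [f"{reason} ({count})" for reason, count in self.pending.most_common()]
            message = (f"In the last {self.games_per_digest} games "
                       f"you were reported for: " + ", ".join(parts))
        # Start a fresh window whether or not there was anything to say.
        self.games_since_digest = 0
        self.pending.clear()
        return message


digest = ReportDigest(games_per_digest=3)
digest.record_game(["bad language"])
digest.record_game([])
print(digest.record_game(["bad language", "feeding"]))
```

The size of the window is the trade-off discussed above: a larger one protects reporters better, a smaller one keeps the feedback swifter.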

With any reporting system there obviously have to be mechanics that prevent abuse of the said system, e.g. only giving a limited number of reports overall or a trust level that players earn by behaving positively and that is factored into their reports. This could even tie into a public commitment to be a ‘good player’ in the first place, as was mentioned in the Engineering section. That is, perhaps only those who commit to being ‘good players’ can report others (or their reports are weighted more heavily), and they (perhaps temporarily) lose this right if reported too often themselves. However, if such an approach was taken then care would be needed to make sure there are still enough ‘good players’ around to ensure a relatively high certainty of detection and reporting of bad behaviour.
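As a minimal sketch of the two safeguards just mentioned (a limited report budget and a trust weight), something like the following could work. The `Reporter` fields, the default values, and the review threshold are invented for the example, not taken from any real game.

```python
from dataclasses import dataclass


@dataclass
class Reporter:
    trust: float = 1.0      # hypothetical weight, earned by behaving well
    reports_left: int = 3   # hypothetical budget of reports


def weighted_report_score(reporters):
    """Sum the trust of every reporter who still has a report to spend."""
    score = 0.0
    for reporter in reporters:
        if reporter.reports_left <= 0:
            continue  # budget used up: this report is ignored
        reporter.reports_left -= 1
        score += reporter.trust
    return score


def should_review(reporters, threshold=2.0):
    """Flag the reported player once the trust-weighted reports pass the threshold."""
    return weighted_report_score(reporters) >= threshold


trusted = Reporter(trust=1.5)
newcomer = Reporter(trust=0.6)
spammer = Reporter(trust=1.0, reports_left=0)
print(should_review([trusted, newcomer, spammer]))
```

With these made-up numbers, two reports from players in good standing are enough to trigger a review, while any number of reports from someone who has exhausted their budget count for nothing.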

One final thing that should be looked at when considering punishments is that people are generally more averse to losses than to gains. In other words, people will work harder to keep what they have than to get something they don’t. As such, taking things away from people that they already have (e.g. take XP away from them) may be more effective than removing their opportunity to gain more of something in the future (e.g. slowing their XP gain rate).

Level drain is, in accordance with loss aversion theory, a truly horrifying punishment

Before moving on to the final E, let’s also talk about reward systems or encouragement for good behaviour. The honour system in League of Legends is an obvious and well discussed example of this already. So we won’t go (too much) into it, other than that according to Riot it is working. Rather, we will comment on encouragement in general, which is generally preferred in psychology (although, as we will mention there are also arguments in academia about its use).

Rewards/encouragement are preferred because they teach what should be done, may increase liking towards the person (or company) delivering the rewards, and generally have the possibility of making people feel good. However, some of you are likely jumping up and down in your chairs right now saying “but they undermine intrinsic motivation!” This is to say that some people claim that external rewards reduce people’s internal motivation to do that behaviour. This is a common complaint in particular when it comes to behaviour like acting pro-socially, as it is thought that people should be internally motivated to do this due to the assumed moral rightness of doing so. Well… yes, it is possible that external rewards may reduce internal motivations. However, the science is far from settled (e.g. see this article and this article for just a small taste of this debate). Whatever the case may be, there do appear to be some steps that can be taken to reduce any potential negative side effects of external rewards.

As a first step, the reward should be given in a non-controlling fashion. In other words it shouldn’t be “you got this for doing what you should be doing, so now keep doing it” but rather “hey, you were doing something cool and helpful. Here have this”. Secondly, it seems that positive feedback rather than tangible rewards are more likely to keep internal motivation intact. Also, the reduction in internal motivation only occurs if there is internal motivation there in the first place. Finally, the effect often occurs only after rewards have been removed (since this is the most common way of testing this effect). But in the case of social behaviour within an anonymous, competitive, environment there may not be much internal motivation to be social to start with and as rewards are constantly present, it may be that these negative impacts of extrinsic rewards may not be as serious as in other areas.

As a second step, it may not be wise to add competitive elements (leaderboards, etc) to rewards for social behaviour. Competition is common in games, and games likely therefore attract a larger proportion of competitive people. However, in terms of social behaviours competition puts people in the wrong mindset, and may actually only motivate those at the top while at the same time demotivating people who do not perceive they can ‘win’. This is especially the case if being at the top gives some kind of valuable benefit and is exclusive to just a few people. Status can breed resentment (and snobbery), which is not something you want if you are working to encourage good social behaviour.

Rewards also need to be interesting/motivating enough to matter, should be task relevant (so actually be something to do with the game, and not some random extra), should be received swiftly after the desired behaviour occurred, should be clearly communicated, and receiving them for good behaviour should be relatively certain. Mentioning certainty here may seem a bit odd, as if you read John Hopson's article you’ll remember that the best type of reward schedule is not being rewarded every single time you do a behaviour, but rather only having a chance to be rewarded each time you do the behaviour (a ‘variable’ schedule of reinforcement). So maybe once you have to play two games before you get a reward and then another time you have to play five times before you are rewarded. But this does not mean certainty isn’t important. Rather, what has to be certain is the perception that you will receive the reward at some point. When casinos first open, or when they put in new machines, they often set a high chance for rewards and then start stretching it out. This means that gamblers first get used to getting rewarded, and therefore know it will come eventually if they keep going. So it isn’t as simple as random/variable rewards are best. Rather, variable rewards do work well, but if too much time goes between rewards they will still stop working, i.e. they are more resistant but they aren’t immune to becoming ineffective. Also, consider mixing a variable, larger reward with a smaller, more constant and reliable reward.
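One way to picture the schedule described above is a reward chance that starts high, is gradually stretched out, never drops below a floor, and sits alongside a small reward that always arrives. The sketch below is only that: the starting chance, decay rate, floor, and reward names are made-up tuning values, not figures from any real game or casino.

```python
import random


def reward_chance(games_played, start=0.9, floor=0.25, decay=0.85):
    """Chance of the larger reward: high for new players, then stretched out.

    The floor stops the gap between rewards from growing without limit.
    All three parameters are made-up tuning values.
    """
    return max(floor, start * decay ** games_played)


def rewards_for_game(games_played, rng):
    """Return the rewards handed out after one well-behaved game."""
    rewards = ["small thank-you"]          # constant, reliable reward
    if rng.random() < reward_chance(games_played):
        rewards.append("bonus reward")     # variable, larger reward
    return rewards


rng = random.Random(42)  # seeded so the example is repeatable
history = [rewards_for_game(n, rng) for n in range(20)]
for game_number, rewards in enumerate(history, start=1):
    print(game_number, rewards)
```

Early games almost always pay out the bonus, which builds the expectation that it will come; later the bonus becomes unpredictable but, thanks to the floor, never so rare that players stop believing in it.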

The final E is Education, which is the provision of information to create or support behaviour change. By itself education is generally the least effective way of producing behaviour change. However, what Education is good at is supporting the other two E’s. For example, by providing information on new enforcement measures you could increase the perception that people are likely to be caught for bad behaviours, thereby increasing the perception of the certainty of punishment. This method is used by road safety advertisements that stress police enforcement. However, if enforcement is actually not very certain, many offenders will learn to ignore such educational messages as not reflecting reality.

Education is also useful for building support and acceptance for potentially unpopular engineering or enforcement decisions. Riot have done a few things in this regard to lower resistance among their players to the changes they have made to League of Legends. Firstly, by publicly hiring professionals (i.e. people with relevant degrees and experience in behaviour change) they got the perception of expert authority working for them. The use of expert (or ‘soft’) authority is useful, as such experts are less likely to be viewed as having solely a profit motive, and therefore people are more likely to trust and believe what they say about working to improve player experiences. Riot also communicated their intentions very clearly inside and outside of the game. To get support from players for their measures they used a “gameplay tips” system with facts on the benefits of social behaviour, e.g. “Teams with positive communication perform x% better.” Mixing these with accepted facts from the game (“You get more gold for last hitting an enemy”) was another clever way of subtly reducing resistance (and apparently, they have even looked at using some priming techniques on these messages). In a hypothetical future these loading screen messages could be personalised with prompts to remind people of commitments they have made to be good players, or even just to remind them of their previous good behaviour (“Your fair play streak is 11 matches now”). Another approach could be to give information on the behaviour of other players, such as “80% of all players earned 3 teamwork commendations in the last day”, the idea being that if you have not received such a reward you may be motivated to work towards it. Unfortunately there is some evidence that group (“normative”) data can cut both ways. For example, someone who has received more than 3 teamwork commendations in the last day may actually see such a message as a sign that they don’t have to work to get such commendations anymore, having already achieved the average, and may therefore reduce or stop showing pro-social behaviour.
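One possible way of handling the "cuts both ways" problem is to only show the group message to players who are below the norm, and to show everyone else a message about their own behaviour instead. The sketch below is purely illustrative: the function name, its parameters, and the exact message wording are assumptions, not something Riot or anyone else is known to use.

```python
def pick_loading_tip(player_commendations, typical_commendations, fair_play_streak):
    """Choose a loading screen message for one player (hypothetical logic).

    Players below the group norm see the normative message; players at or
    above it see personal feedback, so the norm is not read as a finish line.
    """
    if player_commendations < typical_commendations:
        return (
            f"Most players earned {typical_commendations} "
            "teamwork commendations in the last day."
        )
    if fair_play_streak > 0:
        return f"Your fair play streak is {fair_play_streak} matches now."
    # Fallback for players at or above the norm with no current streak.
    return "Thanks for being a great teammate today."
```

For example, `pick_loading_tip(1, 3, 0)` returns the group message, while `pick_loading_tip(5, 3, 11)` returns the personal streak message. Whether this kind of targeting actually avoids the drop in pro-social behaviour would need to be tested with real players.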

An important in-game message (from Jeffrey Lin's GDC 2013 talk)

Another way in which education can be used, both by itself and along with the other two E’s, is via modelling. As mentioned in the enforcement section, one of the reasons why encouragement is the way to go is that it rewards people for what should be done (rather than just punishing what shouldn’t be) and therefore showcases the optimal behaviour. Modelling in itself is a very powerful way of learning. Therefore, whenever your gameplay is demonstrated in tutorials, trailers, other promotional material, and so on, it is advisable to model good player behaviour (or at least not model bad behaviour) and show people getting rewarded for that social behaviour. This also raises the issue of the behaviour of pro-gamers playing your game and how that reflects back on the behaviour of other gamers. People playing your game, especially the dedicated ones, will look at, and learn from, pro-gamers. So be aware of what is going on with your pro-gamers and talk with them. Ask them about their bad behaviours (Do they rage quit? Rag on teammates or opponents?), and structure tournaments and showcases in ways that don’t encourage behaviour you don’t want to see in your games. You can also take the approach of having champions of social/good behaviour in the pro-scene, especially if they are respected by other pros. Beware, though, that such champions work best if they have made a voluntary choice and commitment to showing the behaviours you are after, rather than just doing it for a promise of extra money or fame. In addition to pro-gamers, think about how you can get your (likely majority of) good players to speak up and help out. Many good players are too shy, or too scared, to share what they know. Coaching systems, as proposed by the Dota 2 team, may help here, as will giving people the tools and information they need to help others in a low-risk environment. In this way, your engineering and enforcement measures can actually create education by themselves: by producing good behaviour in experienced players via engineering or enforcement, you also create a model of how to behave for new players to follow.

Summary

The material covered above is not all that can be said on the matter of anti-social behaviour in gaming. But hopefully viewing the issue through the lenses of the “Three E’s” has been helpful. In the end the message is that the structure, or Engineering, of your game is probably the most powerful tool you have in reducing these behaviours. Enforcement and encouragement can also help, but will work best if they are efficient, clear, usable, and as direct as possible. Finally, Education, while maybe not achieving much on its own, can be valuable as a way to reinforce and support your overall efforts.
