A User-Centred Approach to Control Design

In this post, I discuss how user-centred design techniques from the field of human-computer interaction can be applied to game development. Specifically, I'll examine our team's process for developing platforming controls via the UCD approach.

Note: This post was originally published on our studio's development blog. We're a small student team based in Ontario, Canada working on our first commercial project.

It’s a familiar image - the furious slamming of fists into a laggy keyboard; the innocent mouse knocked aggressively from its perch; the once-glorious gamepad, now lying cracked on the floor beneath a film of cheese dust. Who among us has never resorted to blaming the controller for our own failures? And yet, in some cases, perhaps we are justified in our rage against the machine, for a poor control implementation can lead to all manner of misclicks, misunderstandings, and missed opportunities. Controls are a fundamental aspect of any game’s design, serving as a key factor in determining the game’s playability. Simply put, a game’s controls facilitate each and every interaction available to the player. This holds true regardless of input device - whether mouse and keyboard, gamepad, motion sensors, or brain-computer interface. No matter the input chosen, developers are tasked with designing a set of controls that is logical, easy to learn, fluid, responsive, and as unobtrusive to gameplay as possible. Ultimately, this process boils down to the creation of an interaction schema that effectively maps real-world actions, such as a button press or a wave of the arm, onto in-game actions, like jumping or swinging a sword.

All of this prompts the question: how might we define good, or even great, interaction design for game controls? We might assess a control scheme as effective if it is usable and contributes to a good user experience - that is to say, it enhances a player’s experience, rather than detracting from it. However, accurately measuring usability and user experience necessitates user testing, which presumes that we’ve already implemented our design. Can we determine some aspects of our design a priori, as a pre-development measure, thus improving the quality of our initial efforts? The answer, thankfully, is yes, through the application of user-centred design, or UCD. UCD is a process often applied in the realm of productivity and web applications, though it is increasingly applied to the development of interactive entertainment, including games. In a nutshell, UCD focuses on understanding user needs, developing system requirements based on those needs, prototyping alternative designs, and finally evaluating the effectiveness of those designs. In this post, we’ll focus on how we can leverage the first two phases of UCD methodology - understanding needs and formulating requirements - to inform our designs pre-implementation.

Case Study: Designing Controls for Spirit

Our team’s interest in UCD is motivated by our current project, a 3D puzzle-platformer in a quasi-open world. Controls are of particular importance in the platforming genre, where players are frequently tasked with executing a precisely timed sequence of movement, jumps, and other abilities. Poorly mapped or unresponsive controls spell disaster for any platforming game, as they frustrate players or, worse, make certain challenges impossible to complete. Our need for great control design is compounded by the nature of our project in particular; since our core mechanic allows players to control a number of different objects, we may find ourselves designing a dozen control variants for any given input device. Furthermore, many of our puzzles are physics-based, demanding that our controls seem physically realistic while maintaining a good game feel. We’ve chosen to apply UCD in achieving these objectives to ensure that our players’ needs form the basis of our interaction design.

The first step in our design process is the establishment of our target user population, and an understanding of player needs based on their demographics, preferences, and past experience. Next, we formulate design requirements based on lessons learned from existing titles, expected use context, and player behaviours. Following this, we create our initial designs for three kinds of player-controlled entity and refine them through early internal testing. Finally, we plan our next steps in user testing and iteration to validate and refine our designs.

Understanding Players & Establishing Design Requirements

Our target audience for Spirit comprises players between the ages of 18 and 34 with at least a moderate amount of gaming experience. The ideal user has fairly extensive experience with platforming games, enjoys exploration and puzzle-solving, and is willing to devote an hour or more to individual play sessions.

Based on the needs of our target players, we can categorize the requirements of our design into a few key groups:

Functional Requirements - What the controls should do.

For each set of controls, we need to support core game interactions - primarily, we are concerned with movement, jumping, interacting with objects, and manipulating the camera. Each interaction should be mapped to its own region on the appropriate input device, and real-world manipulation of the input should translate sensibly into in-game action.

Non-Functional Requirements - What the controls should be.

We’re developing Spirit for PC, so we’d like to offer both keyboard and gamepad support for players. Right now, we’re focused on interaction design for both mouse/keyboard and Xbox controllers. Since players will find themselves in situations where they need to time jumps rapidly, change direction precisely, or switch between objects, responsiveness and fluidity should feature prominently in the eventual implementation.

Data Requirements - What the controls should know.

Our controls need to respond differently depending on game scenarios - connecting with in-game feedback like contextual hints, restricting input when appropriate, and even responding to in-game physics. Thus, our control system should interface with game data to pull information regarding the camera, game state, position of interactive objects, and so on.

Context Requirements - How the controls will be used.

Since players will want to concentrate on what’s happening on-screen, we need to ensure that they won’t feel the need to glance down at the keyboard or gamepad to be sure of their next input. We expect that players will have prior gaming experience, and so our design can borrow from established conventions in the genre to assist in this effort.

Usability and Experience Requirements - What the user should perceive.

We want our controls to feel unobtrusive and fluid, minimizing the barrier that users perceive between their actions and in-game results. Controls should be easy to learn, and easy to use - we want challenge to come from puzzle-solving and platforming, not wrangling a gamepad. Lastly, we want players to feel good about mastering the abilities of any given object that they control, and so our controls should integrate with our animation and gameplay systems to create the most fluid experience possible.

Following the establishment of our design requirements, we examined (and played!) a number of different games. Since we’re concerned with designing controls for several different objects, our research extended beyond the platforming genre to include games like flight simulators and shooters. For the purposes of our case study, we’ll look at the first few entities that we’ve implemented into our gameplay prototype - our main character, a beach ball, a marble, and a paper plane. Each design follows from a core set of universal attributes that we’ve developed based on estimated player expectations, with refinements to individual objects focused on improving game feel and maximizing usability.

Control Designs

Universal attributes. At its core, Spirit is a platformer, and so we looked at a lot of different platforming games to get a feel for the sort of controls players would expect - from classics like Super Mario 64, Banjo-Kazooie, and Chibi-Robo to modern incarnations of the genre, like Yooka-Laylee. We also played quite a bit of Ori and the Blind Forest - though a 2D platformer, the controls in Ori are outstandingly responsive and fluid, with snappy animations that respond near-instantaneously to most inputs.

Since players will be in a 3rd-person, 3D environment regardless of the object they’re controlling, we also looked at games like The Legend of Zelda: Breath of the Wild to learn from some truly great 3rd-person control schemes. Ultimately, we decided on a few standards from which we could build and refine each individual entity’s control scheme:

Locomotion. No need to reinvent the wheel on mapping basic movement - we’re going to keep primary directional movement on WASD for keyboard users and LS for gamepad players. We’ll map jumping to spacebar on keyboard, and A on gamepad. For each individual control variant, we’ll use the physical qualities of the entity that the player controls to determine how movement controls should behave - including acceleration, directional changes, and any animation delays.

Camera Movement. Following the aiming conventions of first- and third-person games alike, we’ll map this movement to RS and mouse movement. For keyboard and mouse users, we’ll allow toggling of locked and free camera modes with the tab key.

Interactions. We’ll map primary and secondary actions, like possessing objects and interacting with NPCs, to Q/E on keyboard and Y/X on gamepad. We’ve opted for a primarily one-button scheme (using E and X respectively), which will perform the correct action based on the interaction available in-world. To accomplish this, we’ll check for interactive areas within the player’s FOV and display a prompt in-world to highlight the available interaction.
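The FOV check described above can be read as a distance test combined with an angle test against the direction the player is facing. The sketch below is illustrative only: it assumes a flat XZ ground plane, and the `Interactable` record, the `find_interaction` and `prompt_for` helpers, the 90-degree view cone, and the 3-unit reach are hypothetical choices made for the example rather than anything taken from the game itself.

```python
import math
from dataclasses import dataclass


@dataclass
class Interactable:
    name: str
    x: float
    z: float
    action: str  # hypothetical label, e.g. "possess" or "talk to"


def find_interaction(px, pz, facing_deg, candidates, fov_deg=90.0, max_dist=3.0):
    """Return the nearest candidate inside the player's view cone, or None."""
    # Unit vector for the direction the player is facing on the XZ plane.
    fx = math.cos(math.radians(facing_deg))
    fz = math.sin(math.radians(facing_deg))
    cos_half_fov = math.cos(math.radians(fov_deg / 2.0))

    best, best_dist = None, max_dist
    for obj in candidates:
        dx, dz = obj.x - px, obj.z - pz
        dist = math.hypot(dx, dz)
        if dist == 0.0 or dist > best_dist:
            continue  # on top of the player, out of reach, or farther than the best so far
        # Cosine of the angle between the facing vector and the direction to the object.
        if (dx * fx + dz * fz) / dist >= cos_half_fov:
            best, best_dist = obj, dist
    return best


def prompt_for(obj, device):
    """Build the on-screen hint for the single context-sensitive button."""
    button = "E" if device == "keyboard" else "X"
    return f"[{button}] {obj.action} {obj.name}"


objects = [
    Interactable("beach ball", 2.0, 0.0, "possess"),
    Interactable("villager", -1.0, 0.0, "talk to"),   # behind the player
    Interactable("paper plane", 1.0, 0.2, "possess"),
]
target = find_interaction(0.0, 0.0, 0.0, objects)
print(prompt_for(target, "gamepad"))
```

With the player at the origin facing along +X, the nearby paper plane wins over the beach ball farther ahead, and the villager standing behind the player is ignored even though it is closer than the ball.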

Each of the control variants below is based on these core universal attributes, with variations based on the physical attributes of the entity in question, and any expectations players may have from previous gaming experiences.
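Taken together, the universal attributes amount to a small lookup table from abstract actions to physical inputs. The following sketch records the bindings named above; the action names, the `binding_for` and `shared_inputs` helpers, and the reading of E/X as the primary button and Q/Y as the secondary one are assumptions made for illustration.

```python
from collections import Counter

# Abstract action -> physical input per device. None means "not bound".
BINDINGS = {
    "move":               {"keyboard": "WASD",  "gamepad": "LS"},
    "jump":               {"keyboard": "Space", "gamepad": "A"},
    "camera":             {"keyboard": "Mouse", "gamepad": "RS"},
    "camera_mode_toggle": {"keyboard": "Tab",   "gamepad": None},
    "interact_primary":   {"keyboard": "E",     "gamepad": "X"},
    "interact_secondary": {"keyboard": "Q",     "gamepad": "Y"},
}


def binding_for(action, device):
    """Look up the physical input for an action on a given device."""
    return BINDINGS[action][device]


def shared_inputs(device):
    """List inputs bound to more than one action on a device (ideally empty)."""
    counts = Counter(
        inputs[device] for inputs in BINDINGS.values() if inputs[device] is not None
    )
    return sorted(name for name, uses in counts.items() if uses > 1)


print(binding_for("jump", "gamepad"))
print(shared_inputs("keyboard"), shared_inputs("gamepad"))
```

Keeping the table in one place makes it easy to check the functional requirement that each interaction has its own region of the input device: `shared_inputs` should come back empty for every supported device.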

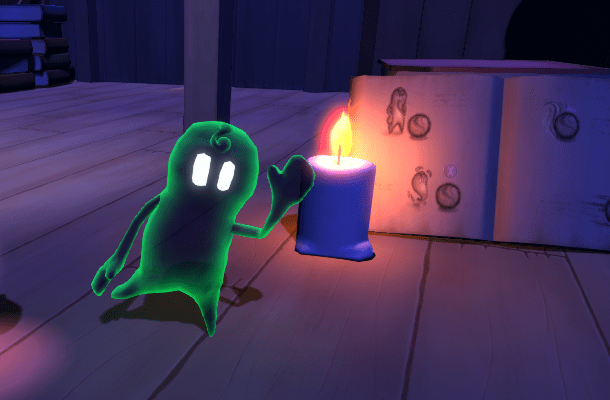

Third-person humanoid. Players will spend most of their time as Spirit himself, a tiny ghost with roughly humanoid features. We want motion to feel snappy and responsive, so for this design, we’ll map the movement axes directly to the player’s velocity, with a slight acceleration timed to match the character’s run animation. We’ll base this on a character controller that considers game feel first, and physics second - to give players a fluid experience that integrates well with animation. Following the standard of most platformers and third-person games, we’ll allow players to “turn on a dime” - turning animations are nice and cinematic, but may prove frustrating when players want tight controls above all else. The result in-prototype looks something like this:
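Separately from the prototype itself, the mapping described here can be sketched in a few lines: the heading snaps to the input direction immediately, while the speed ramps up over a short, fixed window. The `DirectController` class, its default values, and the idea of expressing the ramp as an `accel_time` are assumptions for illustration, not the game's actual character controller.

```python
import math
from dataclasses import dataclass


@dataclass
class DirectController:
    max_speed: float = 5.0    # assumed top speed, in units per second
    accel_time: float = 0.2   # assumed ramp-up time, e.g. the run animation's wind-up
    speed: float = 0.0
    dir_x: float = 0.0
    dir_z: float = 1.0

    def update(self, axis_x, axis_z, dt):
        """Map movement axes in [-1, 1] to a velocity; dt is in seconds."""
        magnitude = math.hypot(axis_x, axis_z)
        if magnitude > 0.0:
            # Heading follows the input immediately, so turns happen "on a dime".
            self.dir_x = axis_x / magnitude
            self.dir_z = axis_z / magnitude
        target = min(1.0, magnitude) * self.max_speed
        max_step = self.max_speed / self.accel_time * dt
        # Speed moves toward the target by at most max_step per update.
        self.speed += max(-max_step, min(max_step, target - self.speed))
        return self.speed * self.dir_x, self.speed * self.dir_z


ghost = DirectController()
first = ghost.update(1.0, 0.0, 0.05)        # still ramping up
for _ in range(5):
    ghost.update(1.0, 0.0, 0.05)            # now at full speed
reversed_velocity = ghost.update(-1.0, 0.0, 0.05)
print(first, reversed_velocity)
```

Because only the speed is smoothed, reversing the input at full speed flips the velocity in a single update rather than decelerating through zero, which is the trade-off between tight control and physical plausibility described above.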

Round objects (physics-based). Two of our initial objects, the beach ball and marble, both follow a scheme inspired by the feel of locomotion in games like Katamari Damacy or Super Monkey Ball. In contrast to the main ghost controller, we’ll base this scheme almost entirely on physics, mapping movement axes to forces applied on the object, rather than instantly changing the object’s velocity. By configuring the amount of acceleration applied, we can create the feeling of a large, weighty rubber ball or a small marble with a tight turning radius. We’ll let the physics engine handle angular momentum, preserving it through jumps and collisions, to create an experience that feels more physically realistic. In past iterations, we experimented with more direct schemes that were less physics-based, as in the main character’s controls; the consensus from the majority of players was that they preferred and expected more physics-dependent behaviour for traditionally “inanimate” objects. The end result is a nice, responsive force-based controller that “fights back” if the object being controlled is particularly weighty.
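To make the contrast with the direct scheme concrete, here is a minimal force-based sketch in which input changes acceleration rather than velocity. It is a simplified stand-in for a full physics engine: the `RollingBody` class, the masses, the push force, and the damping term are all assumed values, and rotation and angular momentum are left out entirely.

```python
from dataclasses import dataclass


@dataclass
class RollingBody:
    mass: float                # heavier bodies respond more slowly to the same push
    push_force: float = 20.0   # assumed force at full stick deflection
    damping: float = 1.0       # assumed velocity damping, per second
    vx: float = 0.0
    vz: float = 0.0

    def update(self, axis_x, axis_z, dt):
        """Apply input as a force and integrate velocity over dt seconds."""
        # Acceleration = force / mass, minus a damping term that caps top speed.
        ax = axis_x * self.push_force / self.mass - self.damping * self.vx
        az = axis_z * self.push_force / self.mass - self.damping * self.vz
        self.vx += ax * dt
        self.vz += az * dt
        return self.vx, self.vz


ball = RollingBody(mass=5.0)     # large and weighty
marble = RollingBody(mass=0.5)   # small and quick to respond
for _ in range(10):              # one second of holding "right"
    ball.update(1.0, 0.0, 0.1)
    marble.update(1.0, 0.0, 0.1)

speed_before = ball.vx
ball.update(-1.0, 0.0, 0.1)      # reverse the input for one step
print(marble.vx > ball.vx, 0.0 < ball.vx < speed_before)
```

After the same second of input the marble is moving much faster than the ball, and reversing the input on the heavy ball only slows it down for that step instead of flipping its direction, which is the "fights back" quality of the force-based scheme.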

Flight (hybrid). Developing controls for a paper airplane was particularly interesting - though inanimate like the ball, the plane functions as more of a vehicle than a dead weight, and so we looked to spaceflight and flight simulators like X-Plane for inspiration. Stripping away the complexities of a bona fide flight sim, we can reduce the act of flight to a few primary controls - throttle (forward motion), yaw (turning), pitch, and roll.

Since throttle and yaw correspond to motion in the XZ plane, we can associate these with the “locomotion” controls for other objects - as such, we map throttle to the z-axis of movement (W/S on keyboard, or up/down on LS for gamepad) and yaw (which we’ll tie into roll, for smoother animation) to the x-axis of movement (A/D on keyboard, left/right on LS). Pitch and roll are a bit more interesting - in keeping with the conventions of traditional flight controls, we’ll lock the camera to the direction the aircraft is travelling, and use the axes freed up from camera controls to modulate pitch (up/down with mouse or RS) and roll (which, coupled with yaw, is offered secondarily by using left/right with mouse or RS). The result is something that feels like a simple, zippy little flight simulator.
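The axis assignments for the plane can be summarized as one small mapping function. This is a sketch under stated assumptions: the `FlightCommand` type, the `map_flight_input` name, and the `bank_per_yaw` coupling factor of 0.5 are hypothetical, and all axes are taken to be normalized to the range -1 to 1.

```python
from dataclasses import dataclass


def _clamp(value, low=-1.0, high=1.0):
    return max(low, min(high, value))


@dataclass(frozen=True)
class FlightCommand:
    throttle: float
    yaw: float
    pitch: float
    roll: float


def map_flight_input(move_x, move_z, look_x, look_y, bank_per_yaw=0.5):
    """Translate locomotion and camera axes into flight commands.

    move_x / move_z: A/D and W/S on keyboard, or the left stick.
    look_x / look_y: mouse movement, or the right stick.
    """
    yaw = _clamp(move_x)
    return FlightCommand(
        throttle=_clamp(move_z),
        yaw=yaw,
        pitch=_clamp(look_y),
        # Roll comes from the look axis plus a share of yaw, so turns bank the plane.
        roll=_clamp(look_x + bank_per_yaw * yaw),
    )


# Full right turn at half throttle, nose slightly down, no manual roll.
command = map_flight_input(move_x=1.0, move_z=0.5, look_x=0.0, look_y=-0.25)
print(command)
```

Coupling a fraction of yaw into roll means a player who only uses the locomotion keys still sees the plane bank into turns, while the camera axes remain available for deliberate pitch and roll.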

Next Steps

Over the course of our work so far, communication among the dev team has proven crucial - our initial implementations have undergone many iterations to improve responsiveness, physical accuracy, and animation. However, we’re still very much at a prototype stage, and we’ll need to test our current variations with real players to validate and further improve our designs. Our next step will be finalizing control variants for a few more in-game objects, before proceeding to some early alpha testing with potential players. As part of our user testing efforts, we’ll be integrating techniques like gameplay recording, questionnaires, and semi-structured interviews to understand our players’ perspectives on controls and interactions in our game. In the meantime, we’ll be working on improving in-game feedback to help users learn available interactions more effectively, and designing some simple puzzles to provide an in-game testing environment where users will be able to focus most of their efforts on evaluating the game’s controls.

Overall, the UCD approach has proven immensely helpful to our interaction design process, improving the quality of our initial designs and our efficiency as a team.
