
The music system 15 years in the making Part 2

Continuing the exploration of a complex dynamic music system and its development. Part 2 goes into more detail on exactly how the system works.

Part Two

In Part One of the story of creating a dynamic and generative audio system, I introduced the general concept of developing a unique music system that allows me to create interactive, dynamic and generative music for Defect Spaceship Destruction Kit (SDK).

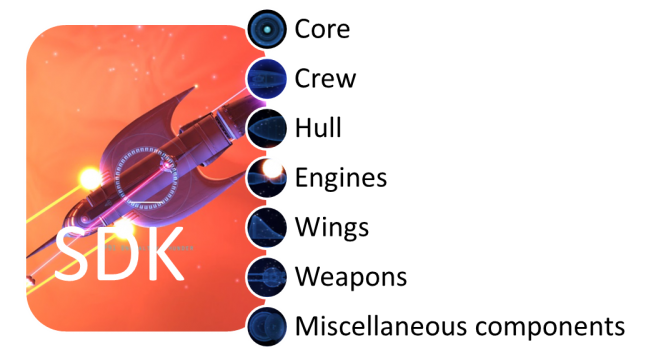

In SDK you build spaceships out of different parts and send them out to fight. After each victory, your beloved crew mutinies and steals your ship. You then need to go back and build a new ship, one that can defeat your old ship. It’s an innovative game that tests your ability to create almost any spaceship you can imagine and then to defeat your own creations.

As each ship is built from components, so too is the music. There are currently close to 200 components in the game and each component has its own musical motif.

I believe the music system I am using in SDK can be applied to many different game styles; in fact, I’m already drawing on what I’ve created for my next game. In this second part, I will go into the fine detail of how the music system works in SDK.

At the time of writing, SDK is still in production, and so too are the sound and music; however, the system is currently functioning quite effectively.

A second of smugness

One of the primary goals in creating this music system was to give me more control and also to have something that was incredibly ambitious without requiring ridiculous amounts of programmer time (and, as a result, money). The entire system as it now stands is driven from a single FMOD Studio Sound Event and 6 parameters. It took our programmer less than two hours to get the entire thing up and running, and it will only require very small amounts of additional time to hook up additional parameters. THIS is the other aspect of our modern tools that people seem to have missed. A carefully planned and designed project in a quality audio middleware tool can have fantastic levels of complexity and not require hours, days or even weeks of programmer time to implement.

I built this project not only to minimize programmer time, but also to build something that could serve as a template for future projects. This system could easily be used to control pre-recorded musical elements (like a full orchestra), other sample based scores or any combination in between. Even at this stage I am fairly proud of what has been achieved.

I have already discovered that the layering of the instrumental layers and the style of the game mean my individual motifs can be much simpler than I originally thought necessary. I “composed” various drum, bass and melodic patterns, but once they were in game I discovered that fewer notes were, indeed, often more effective. Now I can instantly test each new pattern to make sure.

Game Elements

Now let’s take a look at the details of how the music is actually assembled within the game. Ships are built by the player in the Shipyard. Each ship can be built from a selection of component types.

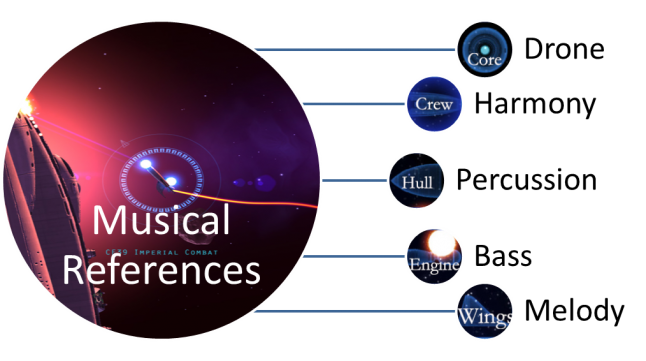

I did not want to allocate musical reference for weapons or misc components, and a core is a compulsory component, so the music is divided into instrumental layers depending on the component types.

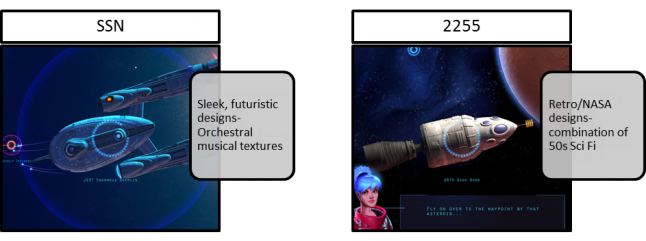

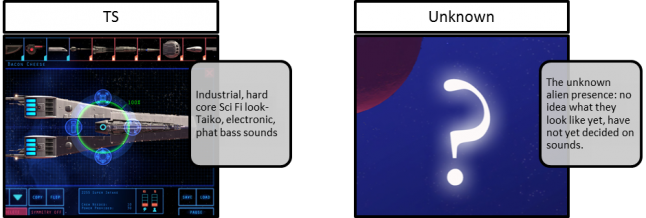

Then there were the aesthetic groups for components. I allocated instrumental group types to correspond suitably to the look of the components being used.

So the component type defines the instrumental role and its aesthetic design defines the instrument group type.
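As a rough illustration of that two-axis lookup, the sketch below derives a motif's layer from the component type and its instrument group from the component's aesthetic. The type names, aesthetic names and instrument groups are hypothetical placeholders, since the real assignments are only shown in the images; the one detail taken from the text is that weapons and misc components get no musical reference.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tables: the real assignments are not listed in the text.
ROLE_BY_TYPE = {
    "hull": "bass",
    "engine": "rhythm",
    "wing": "melody",
}
GROUP_BY_AESTHETIC = {
    "industrial": "percussion and brass",
    "organic": "strings and woodwinds",
}
# From the text: weapons and misc components carry no musical reference.
UNSCORED_TYPES = {"weapon", "misc"}


@dataclass(frozen=True)
class Motif:
    role: str              # which instrumental layer the motif plays in
    instrument_group: str  # which family of sounds plays it


def motif_for(component_type: str, aesthetic: str) -> Optional[Motif]:
    """Return the motif slot for a component, or None if it is unscored."""
    if component_type in UNSCORED_TYPES:
        return None
    return Motif(ROLE_BY_TYPE[component_type], GROUP_BY_AESTHETIC[aesthetic])
```

Keeping the two lookups separate means a new aesthetic or a new component type only adds one table entry rather than a new entry for every combination.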

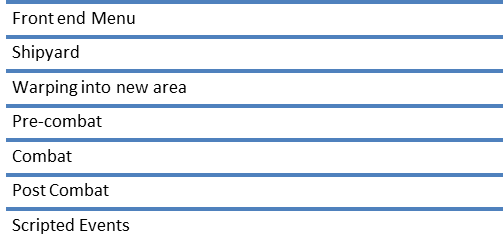

The game itself has multiple game states; each one of these is defined within the FMOD Studio project as a parameter value that can be used to change what the music is doing at any time.

The entire system across all levels is quantized so that layers stacked vertically and motifs placed horizontally will force transitions to always occur musically. Currently I have a single theme for Warp, Pre and Post Combat. So the unique themes for ships play in the shipyard and in combat. Scripted events can have flags placed anywhere I may need them so I can control the music in a scripted event to provide a cinematic result tracking time, start and end of specific events and actions and a range of other parameters.
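The idea of quantized transitions can be sketched with a little arithmetic: a requested change is held until the next bar line. This is only a model of the concept, not how FMOD Studio implements it, and the tempo and time signature used here are assumptions rather than values from the project.

```python
import math


def next_boundary(position_s: float, bpm: float, beats_per_bar: int = 4) -> float:
    """Return the time (in seconds) of the next bar line at or after position_s.

    A transition requested at position_s would be deferred until this time so
    that the change always lands on a musical boundary.
    """
    bar_length_s = beats_per_bar * 60.0 / bpm
    return math.ceil(position_s / bar_length_s) * bar_length_s
```

At 120 bpm in 4/4, for example, a bar lasts two seconds, so a change requested 3.1 seconds into the music would wait until the 4 second mark.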

When you develop a new approach to something, generally the process falls into a series of stages. This is both a natural part of trial and error, as well as a way to approach the task while minimising redundancy.

Stage One: Planning/R&D

Before I even wrote a note of music, or even considered what instruments I wanted to use, I needed to design a system. I needed a system that would allow me the level of control of the music in the game that I had always wanted. Working on the system, I spent a few months scribbling diagrams on pieces of paper, making rough flow charts of how elements would interact and communicate with other elements. I built simple events within FMOD Studio using just sine wave sounds to test the control. Could I actually achieve the results I needed? I spoke with other audio folk within the industry to get an idea of some of the control systems they had used in games to trigger audio events and if they found one system better than another.

In many ways the biggest problem I had to deal with here was that “I didn’t know what I didn’t know.” It is very difficult to find the answer to a question when you are not even sure what question you should be asking. Creating these test events allowed me to experiment, break things, fail at things and learn slowly exactly what questions I needed to be asking myself.

I think this is the first real benefit to the current generation of audio tools that we sometimes miss. Busy schedules and complex projects often do not allow us the time to sit down and try out new ideas and expand upon our skills. When you are pressed for time, it’s only natural to fall back on the skill sets we know and are comfortable with. By trying outrageous ideas directly within the audio tool, I was able to expand my understanding of just exactly what was possible.

Stage Two: Control down to the smallest level

In my years as a sound designer, I have always used a variety of control properties to provide greater variation of sounds in game projects. So the sound of a shotgun could be improved by providing a selection of shotgun sound files for random selection, then adding some pitch and volume randomisation to improve on the effect. I had considered applying this to musical content, but pitch shifting would unmusically affect tonal sounds, and volume randomisation would interfere with precise control of dynamics.

I started to play with EQ balancing using a simple 3-band equalizer module to add variety to a sound. So a single violin marcato note could be varied using multiple sound files; but it could have further variation applied by randomizing the exact values of the High, Mid and Low frequencies. Like any sound, the effective range of randomization depends on the sound itself, but I found I was able to achieve a good range of variation using these properties and keep the musicality of the sounds.
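A minimal sketch of that kind of variation, outside of any audio tool: pick one of several recordings at random, then nudge the three EQ bands by a small random amount. The file names and the 2 dB spread are assumptions for illustration; as noted above, the useful range depends on the sound itself.

```python
import random
from dataclasses import dataclass


@dataclass
class Variation:
    sound_file: str
    low_db: float
    mid_db: float
    high_db: float


def pick_variation(files, spread_db=2.0, rng=None):
    """Choose a random sound file and random gains for a 3-band EQ.

    Each band gain is drawn uniformly from [-spread_db, +spread_db].
    Pitch and volume are deliberately left alone to preserve tuning
    and composed dynamics.
    """
    if rng is None:
        rng = random.Random()
    low, mid, high = (rng.uniform(-spread_db, spread_db) for _ in range(3))
    return Variation(rng.choice(files), low, mid, high)


# Hypothetical file names for a single violin marcato note.
files = ["violin_marcato_01.wav", "violin_marcato_02.wav"]
variation = pick_variation(files, spread_db=2.0)
```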

Stage Three: A fortuitous accident

In a situation that would have made Howard Florey proud, one of the most significant discoveries I made when experimenting with these ideas was mostly by accident. While testing some drum impact sounds to see what level of variation I could achieve using the 3-band EQ module, I stumbled upon an aspect of control that I had not expected to be possible. I was using a sound file of a single drum strike played at a fortissimo level. The sound was suitably loud and sharp for a heavy drum impact. While tweaking EQ values, however, I started to notice that the drum sounded more subdued; not just quieter, but actually as if it had been hit with less force. I continued to work with just this one sound and after several days of tweaking various values, I managed to achieve something that would significantly impact the project I was building.

Using sound files at the highest velocity produced by an instrument and then applying a range of property alterations, I was able to produce very convincing results of that instrument note played through the entire dynamic range. So my FF drum strike sound file could be tuned to provide drum strikes at FF, F, MF, MP and P. The significance of this from a memory usage point of view was massive and it opened up many possibilities for the project that I had not previously dreamed of.
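To put a rough number on that saving, the arithmetic below compares recording every note at all five dynamic levels against recording only the FF version and deriving the rest. The note count is a hypothetical figure, and the calculation assumes every sample is about the same size.

```python
# The five dynamic levels named in the text.
DYNAMICS = ["FF", "F", "MF", "MP", "P"]


def sample_count(notes: int, recorded_levels: int) -> int:
    """Number of sound files needed for one instrument."""
    return notes * recorded_levels


notes = 24  # hypothetical: distinct notes recorded for one instrument

per_level = sample_count(notes, len(DYNAMICS))  # one file per note per dynamic
ff_only = sample_count(notes, 1)                # one FF file per note

# Percentage of files no longer needed (integer arithmetic).
saving_percent = 100 * (per_level - ff_only) // per_level
```

Under those assumptions the file count drops from 120 to 24, a saving of 80 percent, and the ratio stays the same however many notes the instrument has.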

Stage Four: Building Music

The term “Building” is not one often associated with the idea of creating or composing music, but at this stage of the project, building was exactly what I was doing. I needed to further develop the system of how I would create the music for this project. Being able to tune the dynamic range of notes meant that I could create my music right down to individual notes.

So I started to use FMOD Studio essentially as a piano roll sequencer. I used recordings of single notes as my samples, and placed them on the audio tracks aligned to beats and bars exactly as I would in a simple notation program. I was using Studio in a way that no game audio tool had really been designed to operate. At this stage I was still working on proving if this method was valid. I needed to work with the functions available, not all the functions I would have liked to have.
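Placing a single-note sample on a bar and beat comes down to converting a musical position into a timeline position. The sketch below shows that conversion; the tempo, time signature and sample name are assumptions for illustration, and this is plain arithmetic rather than anything FMOD Studio exposes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    sample: str  # single-note recording to place on the track
    bar: int     # 1-based bar number
    beat: float  # 1-based beat within the bar; fractions give subdivisions


def start_time(note: Note, bpm: float, beats_per_bar: int = 4) -> float:
    """Return where the sample starts on the timeline, in seconds."""
    beats_elapsed = (note.bar - 1) * beats_per_bar + (note.beat - 1)
    return beats_elapsed * 60.0 / bpm


# Hypothetical example: a drum hit on beat 3 of bar 2 at 120 bpm.
hit = Note("taiko_ff.wav", bar=2, beat=3)
hit_time = start_time(hit, bpm=120)
```

With these values the hit lands six beats into the piece, which at 120 bpm is three seconds on the timeline.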

I built musical motifs, and layered different instruments together to create simple themes. The tool’s regular functions allowed me to apply effects where needed or automation of properties if I desired, but essentially I was making a template that I would later use to be able to compose music for the project.

I quickly learned that some instrument sounds worked better than others and discovered what extremes of range I could utilize and still maintain a true representation of each instrument.

Stage Five: The Real Design

Eventually, I had enough information to decide on a design for the game itself. As always, the system I had created had limitations. Using samples in FMOD Studio meant I had to build up the sounds I wanted to use. Because of the differences in how much pitch shifting I could use with different instruments, I found I needed to work out a set of sounds that would work well in the system. When it came to actually writing the music, I would compose using these instruments.

Interestingly, I had several audio folk ask me about these limitations. The question was always “But why do you want to limit yourself like that? A true composer should write the music they need or feel and not be limited because of samples.” I found this amusing as we ALL are limited as composers ALL the time. There are very few of us that can go out and write for a 150 piece orchestra. Actually there are probably very few of us that could go out and write for a 50 piece orchestra for every project. Time, budget and availability are always limitations for game composers, so sadly I am not likely to have a 100 piece bagpipe ensemble with 400 Tibetan horns for my main theme, fun though it would be. I don’t really consider the limitations of working in this system any greater than other limitations. I work with what I have available.

Limited to using a 60 piece orchestra for Jurassic Park Operation Genesis

The Magic Event

One of the other functions that the current series of game audio tools provides is the ability to “nest” an event inside another event. FMOD Studio lets you create an event within another event like this, and it also allows for Events within the main Event browser hierarchy to be dragged into another event to create a parent/child relationship. When you do this, the child is actually a reference event. Any changes to the original reference event in the browser are instantly applied to all instance reference events. You can still make some unique changes to each individual instance object that do not affect other reference events.

I initially suspected that using reference events would be useful for my music system, as I wanted to create multiple layers, but it was not until I started to work with them directly that I understood just how powerful this functionality would be.

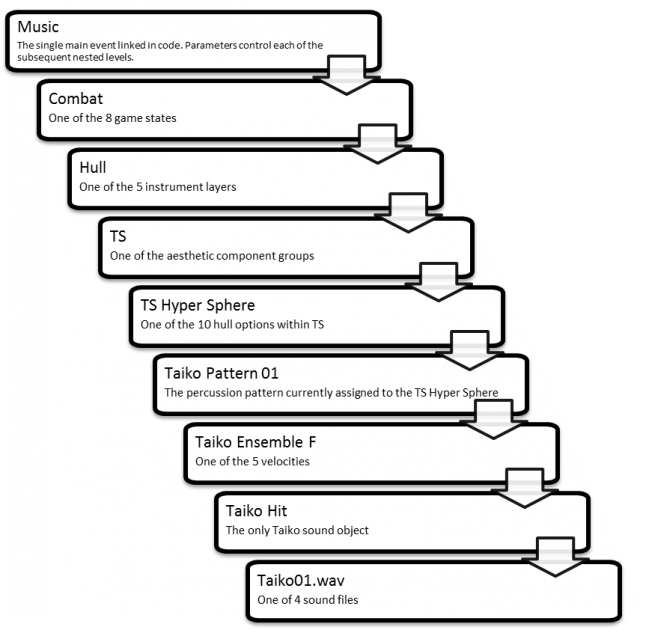

To demonstrate this I will explain how “deep” I went with creating my layers. My project was designed so that at the top level, the Music event would define each of the game states, and then at each level downwards there would be further definitions to control the playback.

The current project looks a bit like this:

At each level a relevant Parameter defines exactly which sound files are triggered and the overall selections combine to produce the piece of music required at that stage of the game. Of the eight levels of nesting displayed here, most simply define exactly which elements are triggered. The Hull layer combines with five other layers to create the overall theme at any point in time.

Although it is a complex control system, the actual number of sounds being played at any one time is not all that many, so channel allocation on a mobile device has not been a problem.

The benefit of reference events is that if I decided to change the sound file right at the bottom of that 8-level nested hierarchy, any change made to the main Event will instantly propagate across the entire project. This means I can simply swap out a sound file in an event from Taiko to Tabla and instantly audition every rhythmic pattern, motif, theme and musical piece that previously used Taiko and listen to them with a different instrument sound. This is immensely powerful, efficient and I am now starting to think that reference events should become the standard of EVERY audio event used within a game project. They increase the ability of the audio team to significantly edit and alter projects with the minimum of fuss. I will be continuing to assess this idea for future projects, but so far I have found them incredibly useful.
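The behaviour described here can be modelled in a few lines: every nested instance points at one shared definition, so changing the definition changes what every instance plays, while per-instance settings stay local. This is a conceptual sketch only; the class names are hypothetical and do not correspond to FMOD Studio's API.

```python
class EventDefinition:
    """The original event in the browser: the single source of truth."""

    def __init__(self, name: str, sound_file: str):
        self.name = name
        self.sound_file = sound_file


class EventInstance:
    """A nested reference to a definition, with its own local overrides."""

    def __init__(self, definition: EventDefinition, volume_db: float = 0.0):
        self.definition = definition
        self.volume_db = volume_db  # unique to this instance, not shared

    @property
    def sound_file(self) -> str:
        # Always read through to the shared definition.
        return self.definition.sound_file


drum = EventDefinition("drum_hit", "taiko.wav")
pattern_a = EventInstance(drum)
pattern_b = EventInstance(drum, volume_db=-6.0)

# Swap the sound once; every pattern that references it follows.
drum.sound_file = "tabla.wav"
```

After the swap both patterns report the tabla file, while `pattern_b` keeps its own volume offset.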

Next Stages

I am still developing both this system and the actual audio for the project itself, and I think there is still a lot to be done. The nested nature of the project means that I cannot utilize FMOD Studio’s mixer in the usual way. Although each reference event does exist as an input bus in the Mixer, when the main Music event is triggered it triggers the nested instances of each reference event, so their main input buses will never activate. Thankfully, I have discovered that I can place a Send in each of the nested instances and create a corresponding Return Track in the Mixer, so I will be able to create individual inputs for all 200 components and assign them to group buses for DSP effect efficiency, as well as utilising snapshots and sidechains to provide even greater control of the many layers. The obvious use is to duck certain layers when new layers come in, so the victory and defeat layers could reduce the volumes of existing layers automatically.

At all times I will need to be aware of resource usage on the target platforms, but in all honesty this game at a pre-alpha stage is more complete than some projects I have worked on when they were shipped. This is the advantage of using engines such as Unity or UE4 (this game uses Unity) and sound engines such as FMOD, Wwise or Fabric. The game can be running and functioning at a far earlier stage of development which makes everyone’s job easier and saves time and money.

Trouble Ahead

This two-part series is going to be extended as new issues arise and the project needs to be adapted for various reasons. I think this entire process is worth documenting, and as a few problems have come up it is important to write about the bad as well as the good. Stay tuned for more updates as the project continues to develop.

The official SDK Blog and website can be viewed here
