The music system 15 years in the making

This is a two-part series on the development and implementation of a complex dynamic music system that is the result of 15 years of dreaming and experimentation. The right tools, team and project finally allow the concepts to become a reality.

Part 1: Dynamic music creation and implementation for Defect Spaceship Destruction Kit (SDK)

Introduction

Every so often you are offered the opportunity to work on a project that allows you to push yourself and the tools you use far beyond common expectations. For me, this came in the form of the sound and music for Defect Spaceship Destruction Kit (SDK).

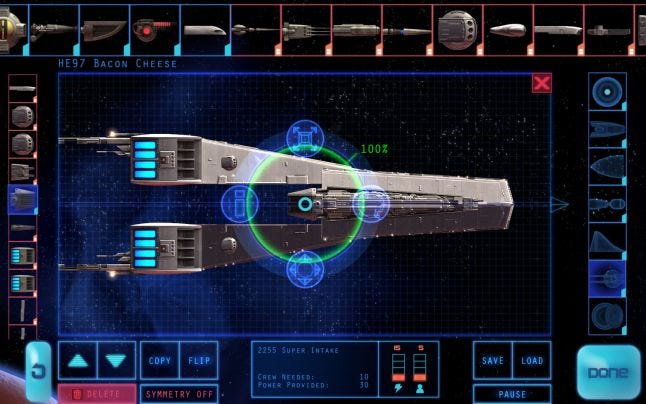

In SDK you build spaceships out of different parts and take them out to fight. After each victory, your beloved crew mutinies and steals your ship. You then need to go back and build a new ship, in which you can defeat your old ship. It’s an innovative game that tests your ability to create almost any spaceship you can imagine and then your ability to defeat your own creations.

For a long time, I’ve been experimenting with and exploring the possibilities of generative audio: the process of creating sound effects and music in real time, with no repetition. It’s a fascinating area, and only recently have the software and technology of games evolved enough to start doing what I’d always dreamed of.

In SDK, this ship-building approach has given me the opportunity to implement aspects of generative music, layered implementation and dynamic cueing to control the music within the game. The further I explored this process for SDK, the more I found myself inventing a whole sound system that was flexible, resource-efficient and dynamic.

Beyond SDK, I found the system I created can apply to many different kinds of games. Using generative or dynamic music can provide effective control over the sound and music in a game.

In the past, I have encountered people whose reaction to the idea of generative and dynamic music is along the lines of, “But we want a fully orchestral score!” The great thing is that these are NOT mutually exclusive approaches. An orchestral score can work beautifully for the grand cinematic scenes, or to introduce giant boss monsters and interesting characters, but for the hours on end that a player may be wandering the open world, a generative score can provide unique musical accompaniment. It can adapt dynamically on a beat, introduce layers to build tension or indicate significant actions, and then dynamically blend into a more traditionally composed piece performed by a full orchestra.

The other benefit of this approach is the efficient use of resources, something that even large projects can benefit from. Music that can be created to play in real time from a small handful of sound files can be useful in games when levels or areas are loading to minimize loading bandwidth. This kind of music also works well to create efficient transitions or simply to maximise resources at certain stages of game play.

15 years in the making?

Essentially, this music system took 15 years to design and produce. I am only half joking when I say this. My very first game project, Starship Troopers: Terran Ascendancy on PC, started nearly 15 years ago. Back then, my role was to produce audio assets, both sound and music, render them out in the requested format and then pass them on to a programmer for implementation. The next step generally involved a level of hoping and praying uncharacteristic of someone who identifies as agnostic. The success or failure of the audio, from an implementation point of view, depended far too much on the project schedule and on how interested in audio the programmer assigned to sound and music happened to be.

Even back then, I was sure that there must be a better way to handle this process. I wanted to have more control over the audio I was creating. I also realised that the process was inefficient, prone to communication issues between audio folk and coders, and risky from the point of view of introducing bugs into a project. I knew what I wanted to do, I knew the possibilities that computers provided us creatively, but I had no idea how I could ever convince someone to provide me with the tools that I needed.

Luckily for me and other audio teams, we have since been provided with a range of audio tools that greatly improve the workflow and control compared to what we had 15 years ago. CRI, Fabric, FMOD, Wwise and other middleware audio solutions have dramatically improved the way we both create and implement sound in games. Having worked with these tools extensively, I know that I, and I am sure many others, would struggle to cope without them.

These tools have all gone through generational changes and have evolved over time, and those of us working in game audio have developed our processes along the way. What’s great about the development of these tools is the extremely receptive nature of their developers. They want to hear from their users and they work very hard to provide us with the tools that we need. To be perfectly honest, I think they have actually left some of us a little behind. The current capabilities of tools such as Wwise and FMOD are actually pretty staggering, and I think many of us are not yet utilizing their full potential.

I have an excellent relationship with the teams that produce both Wwise and FMOD. However, I am currently more familiar with FMOD Studio than with Wwise, so it was with FMOD Studio that I decided to attempt to create the project I had always wanted to build.

After 15 years of dreaming and thinking, I was lucky to be given the opportunity to work on a fantastic game concept like SDK. The support of the development team and their openness to new ideas are what have allowed me to make an ambitious attempt at something new. My goal was more than just to create a dynamic music score for the game; it was to be able to compose the music directly into the tool in a way that would let me quickly and easily test each musical layer or cue for its suitability and effectiveness.

How it works

The music system is designed to highlight and support the game design, so I will be explaining the concept of the game as much as how the music works. As I explained earlier, SDK involves designing and constructing spaceships for both a single-player campaign and multiplayer combat. The player is provided with a range of components to select from; these components provide various functions for the ship but are also designed to allow the creation of a wide range of aesthetic finishes. The look of a ship is therefore likely to be as important to many players as its combat ability.

As each ship is built from components, so too is the music. There are currently close to 200 components in the game and each component has its own musical motif. More than that, each has one motif for when the player is in the shipyard and a different one for combat, and may ultimately have a different motif for each game state. The challenge here is to allow the various layers of motifs to stack together and produce unique themes for each ship a player creates, while maintaining musicality, suiting the game state and aesthetic design, and blending a variety of different instruments successfully.
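To make the stacking idea concrete, here is a minimal sketch in Python. It is an illustration only and not the FMOD Studio setup used for SDK: the `Component` class, the `ship_theme` function, the state names and the motif names are all hypothetical, and the rule that a repeated part contributes its motif only once is an assumption made for the example.

```python
from dataclasses import dataclass

# Hypothetical game-state names; the article mentions the shipyard and combat.
SHIPYARD = "shipyard"
COMBAT = "combat"


@dataclass
class Component:
    """A ship part that carries one motif identifier per game state."""
    name: str
    motifs: dict  # maps game state -> motif identifier (e.g. a sound-file name)


def ship_theme(components, game_state):
    """Return the motif layers to play for a ship in the given game state.

    Assumption for this sketch: a motif is included once, in build order,
    even if the same part is fitted to the ship several times.
    """
    layers = []
    for component in components:
        motif = component.motifs.get(game_state)
        if motif is not None and motif not in layers:
            layers.append(motif)
    return layers


# Example ship built from two kinds of part, one of them fitted twice.
hull = Component("hull_a", {SHIPYARD: "hull_a_yard", COMBAT: "hull_a_fight"})
laser = Component("laser", {SHIPYARD: "laser_yard", COMBAT: "laser_fight"})
print(ship_theme([hull, laser, laser], COMBAT))  # ['hull_a_fight', 'laser_fight']
```

In this sketch the same list of parts yields a different set of layers for each game state, which is the property that lets every ship have its own theme in the shipyard and in combat.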

Once combat starts, the game also tracks both player and enemy hit-points and introduces more layers to support the player’s move toward victory or defeat, or even a blend of both states. The music system uses this data to add an additional dynamic layer to the sound and music.
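One way to picture the hit-point tracking is as a pair of levels between 0.0 and 1.0 that the game hands to the audio tool. The Python sketch below is an assumption-laden illustration, not the actual SDK implementation: the function name `combat_layer_levels`, the layer names and the linear mapping from damage to level are all hypothetical.

```python
def clamp01(value):
    """Limit a value to the range 0.0 to 1.0."""
    return max(0.0, min(1.0, value))


def combat_layer_levels(player_hp, player_max_hp, enemy_hp, enemy_max_hp):
    """Map hit points to levels (0.0 to 1.0) for two extra music layers.

    Assumed linear mapping: the victory layer rises as the enemy loses hit
    points and the defeat layer rises as the player loses hit points, so a
    close fight produces a blend of both.
    """
    if player_max_hp <= 0 or enemy_max_hp <= 0:
        raise ValueError("maximum hit points must be positive")
    enemy_damage = 1.0 - clamp01(enemy_hp / enemy_max_hp)
    player_damage = 1.0 - clamp01(player_hp / player_max_hp)
    return {"victory": enemy_damage, "defeat": player_damage}


# Player untouched, enemy down to a quarter of its hit points.
print(combat_layer_levels(100, 100, 25, 100))  # {'victory': 0.75, 'defeat': 0.0}
```

Values like these could drive a parameter in the audio middleware, with the tool itself deciding how each layer fades in; a stepped or curved mapping would work just as well as the linear one assumed here.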

I am fully aware that there is a very real danger of this system collapsing under its own ambition, and my goal is not to create a smug demonstration of just how many elements I can cram into the sound design simply for the sake of it. In designing this music system, I have very deliberately aimed extremely high in my expectations. We have the technology and tools to be doing so much more with game audio than we have so far. Even if I fall short of the mark, I think the effort will be worth it. Even if this particular project does not 100% achieve the dynamic, generative, blended awesomeness I am aiming for, my hope is that others can pick up where this one fell down and continue to move us forward.

Currently the system works. The various game states transition seamlessly, each ship has a unique theme in the Shipyard and in Combat, and I have two general combat victory pieces that blend in bit by bit as the enemy takes damage. What makes the creative process so interesting is that I am effectively composing directly into the game. The ability to add or edit a component theme, hit export in FMOD and then import in Unity and run the game is as close as I have ever been to in-game composition. The turnaround time to audition content in the game is only a few seconds, which means I can test each new addition in context almost immediately with no external help required. I can also run the game linked to FMOD to allow live updating of mixer levels and effect automations. So for a theme that may consist of repetitive note patterns, with the overall sound varied over time using effects, I am absolutely composing within the game itself as it is playing.

In the second half of this series, I will go into greater detail about exactly how I created my dynamic music system and applied it to SDK.
