Trending

Opinion: How will Project 2025 impact game developers?

The Heritage Foundation's manifesto for the possible next administration could do great harm to many, including large portions of the game development community.

In this sponsored feature, Intel's Brad Werth explains the company's open-source Threading Building Blocks structure and its potential use within video games.

Games are some of the most performance-demanding applications around. The scientist studying proteins or the animator working on the next photorealistic computer animated film can grudgingly wait for a computation to finish; a game player cannot. Game developers have the challenging task of squeezing as much performance as possible out of today's hardware.

This quest for performance has typically focused on graphics tricks and optimizing low-level instructions. The increasing popularity of multi-core CPUs in the consumer market has created an opportunity to make large performance gains by optimizing for multi-threaded execution. Intel has created a library called the Intel Threading Building Blocks (Intel TBB) to help achieve this goal.

This article demonstrates multiple paths to success for game architectures that optimize with Intel TBB. The techniques described are oriented primarily toward optimizing game architectures that already have some threading, showing how Intel TBB can enhance the performance of these architectures with relatively small amounts of coding effort. Even for a serial architecture, these techniques demonstrate straightforward ways of introducing performance threading.

This article is divided into three sections, ordered by increasing coding commitment.

The first section shows techniques in which Intel TBB provides optimization opportunities with minor coding effort and no algorithmic changes.

The second section details how Intel TBB's efficient implementation of loop parallelism can provide performance enhancements throughout a game architecture.

The final section demonstrates techniques for using Intel TBB as the basis for the threading in a game architecture and shows how to implement common threading paradigms using Intel TBB.

Applying these techniques will ensure that a game architecture is maximizing performance on the computers in the market now and will automatically take advantage of future advances in hardware.

The samples presented are available in complete form as a Microsoft Visual Studio project. Most of the samples can be ported to any platform where Intel TBB is available.

This article refers to the performance characteristics of these samples, as measured on a test system. Performance may vary on other systems. The specification of the test system:

Intel Core 2 Extreme processor X9750 @ 3.16 GHz

2 GB RAM

Windows Vista Ultimate with Service Pack 1

Source code for the samples is available at http://software.intel.com/en-us/articles/tbb-game-technology-demo-download.

One of the easiest ways to optimize a game's architecture is to swap in Intel TBB's high-performance implementations of standard containers or memory allocators. Almost all game architectures use containers and allocate memory dynamically, but the standard implementation of these common operations can carry some performance penalties when accessed from multiple threads. Using Intel TBB to optimize these operations requires minimal code changes.

Intel TBB provides concurrent implementations of common standard containers, including vector, queue, and hash. These containers use per-element locking to avoid contention from simultaneous access from multiple threads. When accessing standard containers from multiple threads, it is necessary to protect write accesses with mutual exclusion. Depending upon the exclusion mechanism and the amount of contention, this can slow down execution considerably.

class Sample1StandardKernel: public Kernel

{

...

// access a standard container, but protect it first

EnterCriticalSection(&s_tLock);

s_tStandardVector[i] = i;

LeaveCriticalSection(&s_tLock);

...

};

class Sample1TBBKernel: public Kernel

{

...

// thread-safe containers need no protection

s_tTBBVector[i] = i;

...

};

Sample 1 is an example of how a game architecture might access a standard container and an Intel TBB container. The syntax is similar, but the standard container requires the addition of a mutual exclusion object. The code using the Intel TBB container is faster than the code using the standard container by a factor of 1.21 (21% faster) on the 4-core test system.

Any game that dynamically allocates memory from multiple threads may be paying a hidden performance penalty. The standard implementations of C-style and C++-style allocators use internal mutual exclusion objects to allow multi-threaded access. Intel TBB provides a more efficient multi-threaded memory allocator that maintains a heap per thread to avoid contention.

class Sample2StandardKernel: public Kernel

{

...

// allocate and deallocate some memory in the standard fashion

unsigned int *m_pBuffer = (unsigned int *)

malloc(sizeof(unsigned int) * 1000);

free(m_pBuffer);

...

};

class Sample2TBBKernel: public Kernel

{

...

// allocate and deallocate some memory in a TBB fashion

unsigned int *m_pBuffer = (unsigned int *)

scalable_malloc(sizeof(unsigned int) * 1000);

scalable_free(m_pBuffer);

...

};

Sample 2 is an example of memory allocation and deallocation with both the standard C-style methods and with the multi-threaded Intel TBB allocator. The only syntax difference is the name of the function. The code using the Intel TBB allocator has a 1.17 speedup (17% faster) relative to the standard code when run on the 4-core test system.

Thus far, the focus has been on optimizations that leave the original code structure almost completely intact. The next level of optimizations requires more significant localized code changes. In return for these modifications, Intel TBB can provide considerably enhanced performance on multi-core processors.

Like most computationally intensive programs, games make heavy use of loops. Loops are a natural opportunity to optimize execution on a multi-core system. Intel TBB provides a scheme for parallelizing loops with a simple API. As expected, this optimization provides significant performance gains over unmodified, serial loops. Even when the original code has already been parallelized, Intel TBB's implementation can sometimes provide additional performance benefits due to its efficient use of hardware resources.

void doSerialStandardTest(double *aLoopTimes, const Kernel *pKernel)

{

...

// don't use a thread at all

pKernel->process(0, kiReps);

...

}

void doParallelForTBBTest(double *aLoopTimes, const Kernel *pKernel)

{

...

TBBKernelWrapper tWrapper(pKernel);

tbb::parallel_for(

tbb::blocked_range<int>(0, kiReps),

tWrapper

);

...

}

Sample 3 shows how Intel TBB's parallel_for function can be applied to a loop for significant performance benefits on multi-core CPUs. The code using the parallel_for shows a near linear speedup of 3.98 relative to the serial loop when run on the 4-core system.

In addition to parallel_for, TBB has other functions for parallelizing other types of loops. The function parallel_reduce handles loops that are combining results from multiple iterations. The function parallel_do handles iterator-based loops. There are also functions to handle sorting, pipelined execution, and other loop-like operations.

The techniques demonstrated in the first two sections are appropriate for developers looking to use Intel TBB piecemeal and to achieve modest performance gains as a result. Even greater performance gains are possible when Intel TBB is used as the foundation of a game's threading architecture. This ensures that any explicit functional parallelism and the data parallelism supported by Intel TBB use the same threads, which avoids oversubscription and maximizes scalability. The techniques in the third section show more ambitious ways of using Intel TBB that can help realize these performance gains.

These examples use a low-level API in Intel TBB, called the task scheduler API. The high-level API in Sample 3 uses this low-level API internally. The task scheduler API allows code to directly manipulate the work trees that Intel TBB uses to represent parallel work. Manipulation of these work trees is necessary when implementing explicit functional parallelism and other techniques that go beyond simple data parallelism.

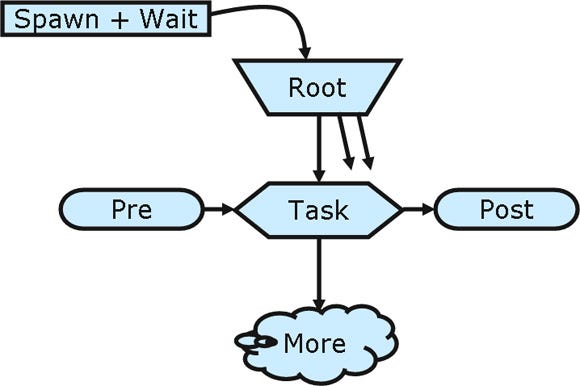

Figure A: A visual representation of an Intel TBB work tree

Figure A shows a visualization of a work tree being executed by Intel TBB. Each tree has only one root, although Intel TBB can process multiple trees simultaneously. Execution starts with the call to spawn the root, then there is a wait for execution of it to complete. The root of this tree creates one child task. This task can call arbitrary pre-processing code before optionally creating and executing more children and finally calling post-processing code. When the child task completes, control passes back to the root, which also completes, and the original wait is finally over. Diagrams of this type will be used to illustrate the techniques in the following samples.

Intel TBB is utilized more heavily in the following examples, but this does not imply that an existing threading API in a game must change to reflect the paradigm of the task scheduler API. In most cases it is possible to plumb the task scheduler API in underneath an existing API. The following examples show how Intel TBB can support some threading paradigms commonly used in games.

One of the basic needs of a threading infrastructure is the ability to dispatch work to a thread running parallel to the main thread. A callback system (sometimes called a job system) is one way to achieve this.

Callbacks are function pointers executed by a thread in a thread pool. The dispatching thread maintains no connection to the callback and does not explicitly wait for its completion. Instead, the callback sets a flag to be checked later in the dispatching thread's execution.

Callback systems using native threading solutions often implement this technique by putting the function pointer and parameter into a queue, which is serviced by threads in the thread pool whenever they complete a task.

This approach can lead to performance degradation in highly contested situations, as demonstrated by Sample 1. Using a concurrent container does not completely avoid this problem, since worker threads may still wait to get a task popped off the queue. Intel TBB avoids this performance bottleneck by maintaining a task queue per thread and implementing an efficient task-stealing system to spread the work around.

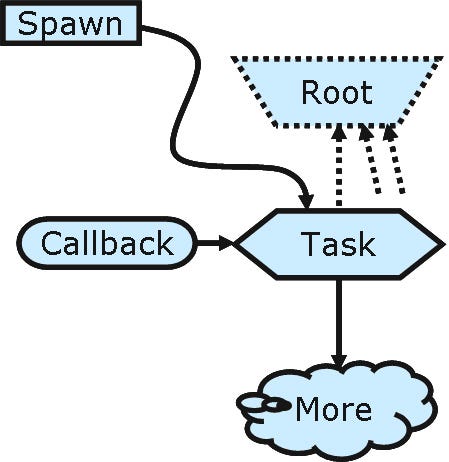

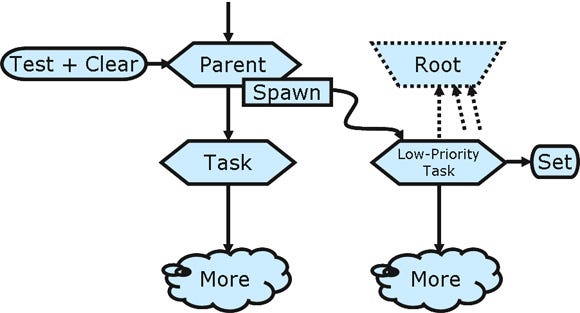

Figure B: Callback is spawned and not waited on

Figure B shows an Intel TBB callback system that attaches a task to a pre-existing root, which hosts multiple callback tasks at once. This allows the callbacks to be waited on as a group, which is helpful for proper cleanup on exit. In this tree, the child task is spawned directly since there is no wait, and work tree roots must be waited on when they are the target of a spawn. This Intel TBB approach allows an arbitrary number of callbacks to be in flight at once and guaranteed complete on exit, much like a work queue provides in the traditional approach.

Intel TBB processes the work tree in Figure B in the following way: Execution begins with the Task, which is the parameter of the call to spawn(). The Task calls the specified callback function, which may trigger the creation of additional subtasks, represented by More. If subtasks are created, those subtasks are processed next. The net effect of this execution is that the callback function is called asynchronously, and it has an opportunity to spawn additional work, which will also be executed asynchronously. Presumably the final step in the callback is to indicate to the spawning thread that the computation is complete, although this notification step is not enforced.

void doCallback(FunctionPointer fCallback, void *pParam)

{

// allocation with "placement new" syntax, see TBB reference documents

CallbackTask *pCallbackTask = new(

s_pCallbackRoot->allocate_additional_child_of(*s_pCallbackRoot)

) CallbackTask(fCallback, pParam);

s_pCallbackRoot->spawn(*pCallbackTask);

}

Sample 4 shows the steps to create the work tree in Figure B. Instead of creating a root for each callback task, this code uses the same root for all callbacks. Again, it is the callback function's responsibility to create additional subtasks and to notify the calling thread when the calculation is complete.

Callbacks meet a basic need of game architectures: how to run multiple functional operations simultaneously without introducing contention for hardware resources. Intel TBB enhances this solution further by providing a clear path to combine callbacks with loop parallelism.

In many cases, it is advantageous to keep track of asynchronous calls and to explicitly wait for them to complete. Promises are one way to accomplish this. When handling a request for asynchronous execution, a promise system provides to the calling thread an object that acts as a link to the asynchronous call. This promise object allows the calling thread to wait on the asynchronous call when necessary and to actively contribute to the calculation if the call introduced additional parallel work.

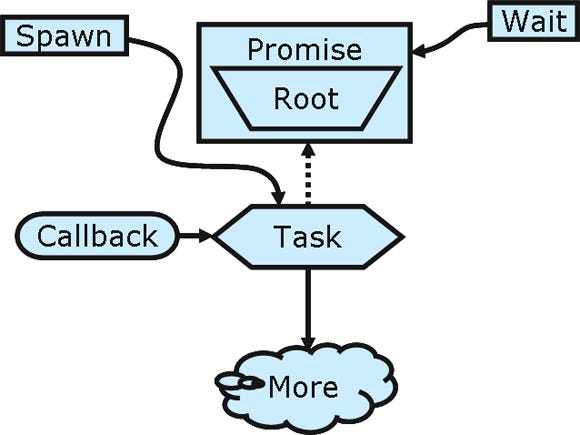

Figure C: Promise system keeps track of the asynchronous call.

Figure C shows that the work tree for a promise system is fundamentally similar to the work tree for a callback system, shown in Figure B. The primary difference is the system waits on the root of the work tree. Another difference is the root of the tree is encapsulated inside a promise object. The root is not executed immediately after the task completes, but only when the promise explicitly waits for it. This prevents the root from disappearing without the promise knowing about it.

void doPromise(FunctionPointer fCallback, void *pParam, Promise *pPromise)

{

// allocation with "placement new" syntax, see TBB reference documents

tbb::task *pParentTask = new(tbb::task::allocate_root()) tbb::empty_task();

assert(pPromise != NULL);

pPromise->setRoot(pParentTask);

PromiseTask *pPromiseTask = new(pParentTask->allocate_child()) PromiseTask(fCallback, pParam, pPromise);

// set the ref count to 2, which accounts for the 1 child and for the eventual wait_for_all

pParentTask->set_ref_count(2);

pParentTask->spawn(*pPromiseTask);

}

Sample 5(a) shows how the work tree in Figure C is created. The primary difference between this code and the callback code in Sample 4 is that a root is created, it is wrapped in an object (pPromise above), and the reference count of the task is increased to ensure that the root will not run until requested by the promise. This avoids the specific problem of having the root execute asynchronously and then get deleted and reused by the Intel TBB memory manager as an unrelated task in the tree.

void waitUntilDone()

{

if(m_pRoot != NULL)

{

// lock up access to one-at-a-time

tbb::spin_mutex::scoped_lock(m_tMutex);

if(m_pRoot != NULL)

{

m_pRoot->wait_for_all();

m_pRoot->destroy(*m_pRoot);

m_pRoot = NULL;

}

}

}

Sample 5(b) shows how the promise object ensures the completion of the asynchronous call. A mutex is introduced here to ensure that the waitUntilDone call is thread-safe. If waitUntilDone will be called by only one thread (presumably the thread that submitted the asynchronous call in the first place), this additional mutex is not necessary. The root is never spawned because of the increased reference count used in the call to doPromise. Since the root is never spawned, it is never executed and therefore never deleted by Intel TBB. This code deletes the root explicitly once the asynchronous call is complete.

Promises provide a comprehensive scheme for dispatching and waiting on parallel work. An Intel TBB implementation of promises has the additional benefit of providing an efficient work-while-waiting policy. These features make promises broadly applicable to game architectures.

Synchronized callbacks solve a specific and important problem in game development: coordination with threaded middleware. Modern game development has matured to a point where many of the common features in a game can be supplied by third-party middleware. There are multiple solutions available for physics, AI, particles, and animation. Many of these middleware solutions have already transitioned to supporting enhanced performance on multi-core processors.

In most cases, each middleware solution assumes that it has access to most or all of the computational power available. This can lead to poor performance when multiple middleware solutions are used or when a game's core computation is also attempting to access the additional performance potential of multi-core hardware.

The ideal solution to this problem is to have all computational activity use the same optimal set of threads. Some middleware is designed to support this approach, but requires that each thread be specially initialized to process each of the middleware's computational tasks. Synchronized callbacks handle this need for initialization by calling a specified function from all threads before computation continues.

Synchronized callbacks are trivial to implement in threading architectures in which the threads are directly accessible. Intel TBB does not expose its threads, so if a game is using Intel TBB to provide a high performance threading architecture, additional steps must be taken to implement this technique as detailed below.

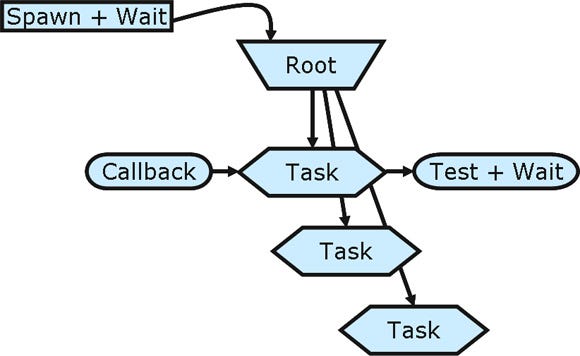

Figure D: Synchronized callbacks wait until all threads have called.

Figure D shows a work tree that implements a synchronized callback scheme. A root is spawned and waited on, and that root has a number of tasks equal to the number of threads in the thread pool. Each task calls the callback and then tests to see if all the callbacks have been called. If so, execution of the task ends. If not, then the task waits for all callbacks to finish.

void doSynchronizedCallback(FunctionPointer fCallback, void *pParam, int iThreads)

{

tbb::atomic<int> tAtomicCount;

tAtomicCount = iThreads;

// allocation with "placement new" syntax, see TBB reference documents

tbb::task *pRootTask = new(tbb::task::allocate_root()) tbb::empty_task;

tbb::task_list tList;

for(int i = 0; i < iThreads; i++)

{

tbb::task *pSynchronizeTask = new(pRootTask->allocate_child()) SynchronizeTask(fCallback, pParam, &tAtomicCount);

tList.push_back(*pSynchronizeTask);

}

pRootTask->set_ref_count(iThreads + 1);

pRootTask->spawn_and_wait_for_all(tList);

pRootTask->destroy(*pRootTask);

}

Sample 6(a) shows how the work tree of Figure D is created. The code to construct the work tree is similar to what was used in the promise pattern. Just as in the promise pattern, the root is never spawned and must be manually destroyed once its work is done. The SynchronizeTasks need more than just a function pointer and a parameter; they need a shared atomic count for the Test + Wait in the work tree.

tbb::task *execute()

{

assert(m_fCallback);

m_fCallback(m_pParam);

m_pAtomicCount->fetch_and_decrement();

while(*m_pAtomicCount > 0)

{

// yield while waiting

tbb::this_tbb_thread::yield();

}

return NULL;

}

Sample 6(b) shows how a SynchronizeTask tests and waits after calling its callback. Each task decrements the atomic count. Since this count was initialized with the total number of threads, the atomic count will reach zero when the last thread has called the callback. The code checks the value of the atomic count, and if it is greater than zero, the task spins, waiting for other SynchronizeTasks to reduce the count to zero. This spinning is appropriate since, by definition, the thread running this task has nothing else to do until all SynchronizeTasks have run.

Synchronized callbacks become increasingly important as middleware matures to accommodate threaded game architectures. A game architecture based on Intel TBB for performance reasons can take full advantage of threaded middleware using this technique.

Games occasionally rely upon computation that needs to run continuously but not at the expense of other more immediate work. Level loading, asset decompression, and AI pathfinding are common examples. These tasks can be set up to run on dedicated threads, but this exposes the problem of the continuous operation fighting for hardware resources with more immediate work. Priority APIs can help defend against this, but usually cannot guarantee that no conflict will happen. Intel TBB can implement this technique without introducing additional threads and therefore without introducing the possibility of conflict.

Figure E: Time slice a low-priority operation into the work tree.

Figure E shows parts of two work trees. On the left is some middle section of a large work tree. On the right is a root that is hosting some low-priority tasks. The Parent on the left, before spawning its own children, tests a flag for the need to initiate a low-priority task. If so, it clears the flag and adds a low-priority task to the root.

The low-priority task is added to a root with a very small task depth. If the thread that executes the Parent completes all of its other work, it will run the low-priority task. As other Intel TBB threads exhaust their own supplies of tasks to run, they will engage in stealing work from each other. If one of these other threads steals from the original thread, the low-priority task will be the one stolen due to its minimal depth value. This ensures that low-priority tasks are run periodically but infrequently even when the work tree continues to grow.

tbb::task *execute()

{

...

if(s_tLowPriorityTaskFlag.compare_and_swap(false, true) == true)

{

// allocation with "placement new" syntax, see TBB reference documents

tbb::task *pLowPriorityTask = new(

this->allocate_additional_child_of(*s_pLowPriorityRoot)

) LowPriorityTask();

spawn(*pLowPriorityTask);

}

// add some children

...

}

Sample 7 shows the test for low-priority operations as part of a base class's implementation of tbb::task::execute. This hypothetical base class would be used for all normal-priority tasks in the work tree. An atomic flag is used to test if a low-priority task should be created. This flag is set to true again when the low-priority task completes.

Game architectures will continue to use long, low-priority operations for certain types of tasks. Using this modified time-slicing scheme, a game architecture based on Intel TBB can accommodate this need without introducing extra threads and associated competition for hardware resources.

Throughout the samples in this article, some core features of Intel TBB have been used without due explanation. These low-level objects provide a cross-platform encapsulation of basic synchronization and timing APIs, which are commonly used in game architectures. The APIs for all of these objects are described in detail in the Intel TBB reference documentation.

As shown in Samples 6(a) and 6(b), Intel TBB's atomics provide a simple syntax for atomic compare-and-swap operations.

Intel TBB provides mutual exclusion objects with a variety of contention policies. Spinning, blocking, read-write, and recursive mutexes are available.

Intel TBB encapsulates each host platform's high-frequency timer into a simple API.

Intel TBB has a bright future ahead in game architectures. With the variety of multi-core topologies in the major gaming platforms, developers are faced with the challenge of designing an architecture that is high performing without requiring extensive per-platform tuning. Because Intel TBB is an open-source library, it has great flexibility for the gaming platforms of the future. Wherever Intel TBB is implemented, it will continue to provide game architectures with high-performance threading through its flexible API.

Intel TBB is available at no cost from opentbb.org for Microsoft Windows, Mac OS X, Linux, Solaris, and Microsoft Xbox 360. It ships with Intel compilers. You can learn more about Intel TBB at opentbb.org and in the O'Reilly nutshell book Intel Threading Building Blocks by James Reinders.

Read more about:

FeaturesYou May Also Like