Veteran coder Paanakker discusses a more efficient way to export game character models in order to achieve maximum hardware performance, with full source code of an optimization utility included.

Current game titles all use skinned meshes for their animated characters. Skinned meshes provide an intuitive way to animate and render characters. A number of hardware platforms support skinned meshes by providing hardware support (like the PC, Xbox and the PSP), but the hardware specifics may vary considerably, like the limit on the number of joints that can be used simultaneously.

To overcome these limitations, models that use more joints than the hardware directly supports can be split up so that every partition is processed by the graphics card in one pass. If the algorithm used to split the mesh partitions it inefficiently, this can severely reduce data throughput and force the artists to use fewer joints and/or simpler models (which means they have to redo much of their work, and/or limit the quality of animation).

This article describes a preprocessing software implementation that can handle an unlimited number of joints and at the same time partitions the model to allow maximal performance. Multiple target platforms are supported by creating classes that simulate the architecture of the target hardware and a number of different optimization heuristics can be used to search for the best solution. We have found that the software works very well and nicely fits in our toolchain, allowing programmers to easily adjust the software for new hardware and optimization techniques while at the same time providing quick turnaround time for the artists when (pre)viewing models in the game engine.

Skinned Meshes

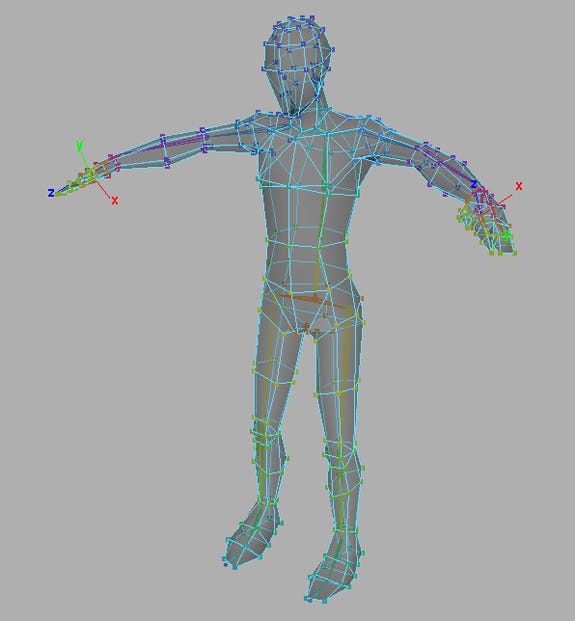

Skinning is nowadays widely used when rendering meshes. The technique works as follows: for a model, a skeleton is defined as a hierarchy of bones, and the joints of the skeleton are attached to the vertices of the mesh (the “skin”).

A 'skin' mesh attached to a skeleton. The colors show to which joints the triangles are attached.

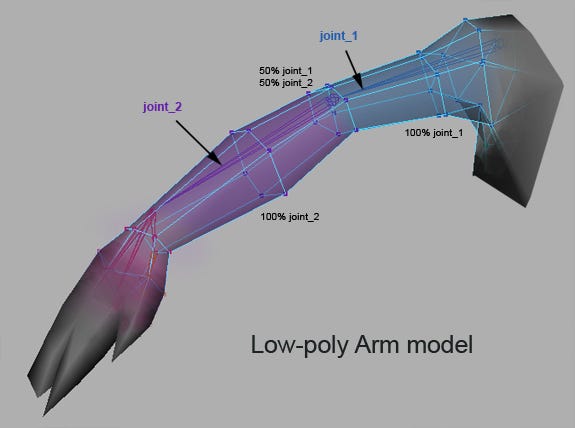

To animate the mesh we only have to animate the skeleton, because the vertices of the mesh are deformed based on the joints they are attached to. A vertex can be attached to several joints. We need to attach a vertex to at least one joint (otherwise the vertex will remain fixed while the rest of the mesh is animated), and for present-day game animation of human-like characters, animators need at most four joint influences per vertex. If we use exactly one joint per vertex, this is called ‘Rigid Skinning’. If we use multiple joints to influence a vertex (called ‘Smooth Skinning’), we specify how much every joint influences the vertex, with the influences summing to one.

Typical PS2 titles use rigid skinning because the hardware does not support smooth skinning directly. PSP titles use smooth skinning because it is supported at the hardware level. The total number of joints used in a character can vary according to the level of detail needed in a game. For a PS2 or PSP title, typically around 40-50 joints are used, but for a next-generation console title where fingers, hair and clothing are animated, the number of joints can easily exceed 100 or 150.

Let us assume we have a character that uses 100 joints. To render this skinned mesh we load all the joint matrices into memory. We then walk through all the vertices of the mesh and calculate each new vertex position, taking into account the joint matrices that influence the vertex. When all the vertices are correctly positioned we draw the complete mesh in one pass. This method is known as software skinning: we handle all the skin processing on the CPU. While it is an easy solution, the CPU cannot perform other tasks while it is skinning, and it has to create new, correctly positioned vertices before sending them to the Graphics Processing Unit (GPU). On the other hand, software skinning has no limit on the number of joints that can be used: whether the mesh uses 50 or 250 joints, software skinning can handle it just as easily.
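As a rough illustration of the software skinning loop described above, here is a minimal C++ sketch. The `Vec3`, `JointMatrix` and `SkinnedVertex` types and the `skinOnCpu` function are hypothetical names invented for this example, and the matrix layout (three rows of four floats) is an assumption rather than the layout used by any particular engine.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Joint matrix kept as three four-component rows. This layout is an assumption.
struct JointMatrix { float m[3][4]; };

// Hypothetical vertex layout: up to four joint influences whose weights sum to one.
struct SkinnedVertex {
    Vec3 position;
    int joints[4];
    float weights[4];
};

// Transform a point by one joint matrix (rotation part plus translation).
Vec3 transformPoint(const JointMatrix& j, const Vec3& p) {
    return Vec3{
        j.m[0][0] * p.x + j.m[0][1] * p.y + j.m[0][2] * p.z + j.m[0][3],
        j.m[1][0] * p.x + j.m[1][1] * p.y + j.m[1][2] * p.z + j.m[1][3],
        j.m[2][0] * p.x + j.m[2][1] * p.y + j.m[2][2] * p.z + j.m[2][3]};
}

// Software skinning: every vertex is blended on the CPU, so the number of
// joint matrices is limited only by memory.
std::vector<Vec3> skinOnCpu(const std::vector<SkinnedVertex>& vertices,
                            const std::vector<JointMatrix>& jointMatrices) {
    std::vector<Vec3> skinned;
    skinned.reserve(vertices.size());
    for (const SkinnedVertex& v : vertices) {
        Vec3 blended{0.0f, 0.0f, 0.0f};
        for (int i = 0; i < 4; ++i) {
            if (v.weights[i] == 0.0f) continue;  // unused influence slot
            assert(v.joints[i] >= 0 &&
                   static_cast<std::size_t>(v.joints[i]) < jointMatrices.size());
            const Vec3 t = transformPoint(
                jointMatrices[static_cast<std::size_t>(v.joints[i])], v.position);
            blended.x += v.weights[i] * t.x;
            blended.y += v.weights[i] * t.y;
            blended.z += v.weights[i] * t.z;
        }
        skinned.push_back(blended);
    }
    return skinned;
}
```

A vertex at (1, 0, 0) that is influenced equally by an identity joint and a joint translated by two units along x ends up at (2, 0, 0), halfway between the two transformed positions.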

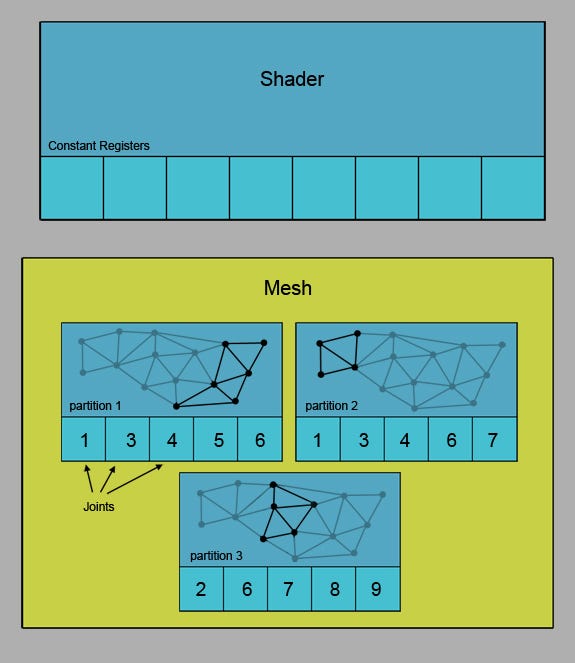

To improve performance and offload the CPU, many GPUs support hardware skinning. On the PC, for instance, skinning can be performed in the vertex shader. The most popular method of performing skinning in the vertex shader is called Matrix Palette Skinning [1]. Using this method, the joint matrices are stored in the constant table of the shader, and every vertex carries indices into the constant table to select its joint matrices. The graphics hardware defines the maximum number of constant registers that can be used in a vertex shader, thereby dictating the maximum number of joints that can be used in one render pass. To use other matrices, we have to send new constants to the shader. A number of constant registers are normally reserved for general constants that the vertex shader needs, like the camera, lighting information, time, wind or fogging parameters, etc. The remaining constants can be used for joint matrices. A joint matrix is a 4 x 4 matrix, but because the last column is always [0 0 0 1]^T, we only need to store a 4 x 3 matrix. A joint then occupies three registers instead of four, enabling us to use 33% more joints per shader pass.

1. Skinning. Perform a google search on “Matrix Palette Skinning”.

On current hardware (as of Vertex Shader 2.0), the constant table contains 256 four-dimensional vectors. Depending on the number of constants used for other parameters, this amounts to around 70-80 joints. On older graphics cards the constant table can only contain 96 four-dimensional vectors, and the joint limit can be as low as 20 to 28 joints (although theoretically the limit is 96/3=32 joints).
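The arithmetic behind these joint limits can be sketched in a few lines of C++. The function name `maxJointsPerPass` is invented for this example, and the number of reserved registers is an assumed figure that differs from shader to shader.

```cpp
#include <cassert>

// Number of joint matrices that fit in the constant table in one pass.
// registersPerJoint is 3 for a 4 x 3 matrix and 4 for a full 4 x 4 matrix.
// reservedRegisters is an assumed budget for camera, lighting, fog and so on.
int maxJointsPerPass(int constantRegisters, int reservedRegisters,
                     int registersPerJoint = 3) {
    assert(registersPerJoint > 0);
    assert(reservedRegisters >= 0 && reservedRegisters <= constantRegisters);
    return (constantRegisters - reservedRegisters) / registersPerJoint;
}
```

With nothing reserved, a 96-register table holds `maxJointsPerPass(96, 0)` = 32 joints as 4 x 3 matrices against `maxJointsPerPass(96, 0, 4)` = 24 as 4 x 4 matrices, which is the 33% gain mentioned earlier. If we assume 30 reserved registers on a 256-register table, we get 75 joints, in line with the 70-80 range quoted above.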

If the number of joints used in the mesh exceeds the vertex shader joint limit, we need to partition the mesh and render parts of the mesh separately. When we partition a mesh, we typically want to use as few shader passes as possible, because uploading constants to the shader is an expensive operation.

Handheld devices like the PlayStation Portable (PSP) are completely different from the PC. Because the hardware is so different, we also need different algorithms to partition the mesh if we want to allow a large number of joints to be used in our models.

Optimally partitioning an arbitrary mesh is an NP-complete problem (which means no efficient exact algorithm is known, so finding the optimal solution is impractical for meshes of a realistic size), but by using heuristics we can generate solutions that perform quite well in practice. Before creating such a tool, however, we need to decide where we want to place this partition algorithm in our toolchain.

Exporting Skinned Meshes

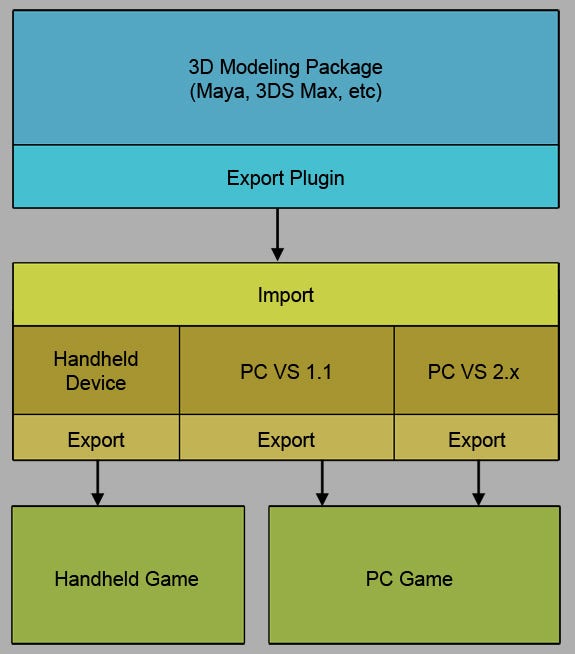

The skinned meshes are typically created by the artist in a 3D modeling package like Maya or 3D Studio Max and are exported to a format that can be read by the game. At Wishbone Games, we use Maya in combination with a third-party plug-in that is especially well suited to PC-related hardware. When we started on our PSP project, we found that we needed to implement some major changes to accommodate the PSP hardware. We decided to create a separate utility to handle these platform-specific requirements, sitting between the modeling package and the game engine (figure 2).

The main design considerations of the tool were:

It should support multiple hardware platforms, all using the same utility.

It should be easily extendable to accommodate new hardware or new insights into existing hardware.

It should have multiple optimization techniques that have different speeds. Fast turnaround time is key for the artists, but the final export or nightly build is allowed more time to optimize the mesh to improve performance.

Figure 2. The optimization utility sits between the modeling package and the game engine.

We found that a uniform toolchain helps when supporting multiple hardware platforms, so exporting from a modeling package always means putting the model through an optimization program. For some target hardware this program is more complex than for others, but, ideally, we would have one conversion utility that can create optimized meshes for any of these platforms and takes advantage of the architecture of the hardware. Much of the functionality for the hardware platforms will be shared (like reading the input file format), and by putting all the functionality in one utility we avoid discrepancies between different builds of the software.

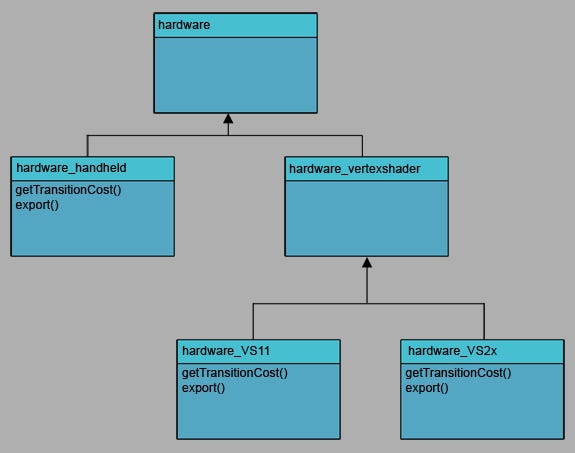

The second design consideration deals with extendability. When new hardware is released, this hardware will have specific properties. Maybe uploading complete shader constants will be cheaper, or new functionality might become available. Such new hardware might require a completely new design of the way you render a mesh. Programmers should be able to quickly create a new export path for this hardware when needed. Existing paths are also commonly altered during a game project. When you work extensively with some hardware you might gain new insights into what works well on a specific platform, or maybe you have read an interesting article and want to try a new technique. This can all be done easily by creating new subclasses. A sample hierarchy is shown in figure 3.

Figure 3. The class hierarchy used to represent different hardware platforms.
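To give an idea of what such a hierarchy could look like in code, here is a small C++ sketch. Only the base class name `Hardware` is taken from this article; the member functions, the subclass names, the target strings passed to `makeHardware` and the reserved-register counts are all assumptions made up for illustration.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Base class: simulates the relevant properties of one target platform.
class Hardware {
public:
    virtual ~Hardware() = default;
    virtual int maxJointsPerPass() const = 0;
    // True if a partition with this many unique joints can be drawn in one pass.
    bool fitsInOnePass(int uniqueJoints) const {
        return uniqueJoints <= maxJointsPerPass();
    }
};

// PC-style vertex shader: joints are stored as 4 x 3 matrices (three registers).
class VertexShaderHardware : public Hardware {
public:
    VertexShaderHardware(int constantRegisters, int reservedRegisters)
        : constantRegisters_(constantRegisters), reservedRegisters_(reservedRegisters) {
        assert(reservedRegisters >= 0 && reservedRegisters <= constantRegisters);
    }
    int maxJointsPerPass() const override {
        return (constantRegisters_ - reservedRegisters_) / 3;
    }
private:
    int constantRegisters_;
    int reservedRegisters_;
};

// Older cards: 96 constant registers, with an assumed 24 reserved.
class OlderVertexShaderHardware : public VertexShaderHardware {
public:
    OlderVertexShaderHardware() : VertexShaderHardware(96, 24) {}
};

// Vertex Shader 2.0: 256 constant registers, with an assumed 30 reserved.
class VertexShader20Hardware : public VertexShaderHardware {
public:
    VertexShader20Hardware() : VertexShaderHardware(256, 30) {}
};

// Select the simulation from a (hypothetical) command line target name.
std::unique_ptr<Hardware> makeHardware(const std::string& target) {
    if (target == "pc-old") return std::unique_ptr<Hardware>(new OlderVertexShaderHardware());
    if (target == "pc-vs20") return std::unique_ptr<Hardware>(new VertexShader20Hardware());
    return nullptr;  // unknown target
}
```

Supporting a new platform, or a new insight into an existing one, then comes down to adding one subclass and one line in the factory, while the rest of the utility keeps talking to the `Hardware` interface.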

The third design consideration is speed. When creating models, artists should be able to quickly (pre)view their work. Just as programmers want excellent compile speed, artists should have excellent export speed. While the artist is still working on a character model, the mesh does not have to be extensively optimized for rendering speed; the speed of the export itself is the most important factor. By supporting different optimization techniques, we can control the time spent on optimization. Fully optimized models can be generated in the nightly build or the final export.

Building the Solution

To support skin partitioning we use a separate utility program that is called with command line options to indicate the target platform (Xbox, PC, PSP, etc), optimization options (quality or speed), the input file and the output file. The program reads the file, which is an ASCII text file similar to the widely known OBJ file format. It then partitions the mesh and writes the new mesh to the output file, which is like a list of commands to be processed by the hardware. For different hardware the export formats may vary.

The data needs to be read into memory and processed independently of the target hardware. Every vertex has at most four joints that influence it, and a triangle has exactly three vertices. This means that in the worst case we use 12 unique joints to render a single triangle. Because a triangle is the smallest thing we can render, we want to make sure that all of its joints (at most 12) are available when we want to render it. When we create the list of unique joints that are used by the triangle, we put the joint indices in ascending order, so “5 2 4” becomes the same as “2 4 5”. It is very likely that many of the triangles in the mesh have the same joint indices, and such triangle-groups can be rendered in one pass. We call such a triangle-group a partition.
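The grouping of triangles into partitions could be implemented roughly as follows. This is a sketch only: the `Vertex`, `Triangle` and `Partition` types and the `buildPartitions` function are hypothetical names, not the data structures of the actual utility.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

using JointSet = std::vector<int>;  // sorted, unique joint indices

struct Vertex { std::vector<int> joints; };  // one to four influencing joints
struct Triangle { int v[3]; };               // indices into the vertex list
struct Partition {
    JointSet joints;             // unique joints needed to render the group
    std::vector<int> triangles;  // indices of the triangles in the group
};

// Collect the unique joints of a triangle in ascending order,
// so that "5 2 4" and "2 4 5" describe the same set.
JointSet jointsOfTriangle(const Triangle& t, const std::vector<Vertex>& vertices) {
    JointSet joints;
    for (int index : t.v) {
        const Vertex& vertex = vertices[static_cast<std::size_t>(index)];
        assert(!vertex.joints.empty() && vertex.joints.size() <= 4);
        joints.insert(joints.end(), vertex.joints.begin(), vertex.joints.end());
    }
    std::sort(joints.begin(), joints.end());
    joints.erase(std::unique(joints.begin(), joints.end()), joints.end());
    return joints;  // at most 12 entries
}

// Group all triangles that use exactly the same set of joints.
std::vector<Partition> buildPartitions(const std::vector<Vertex>& vertices,
                                       const std::vector<Triangle>& triangles) {
    std::map<JointSet, std::vector<int>> groups;
    for (std::size_t i = 0; i < triangles.size(); ++i) {
        groups[jointsOfTriangle(triangles[i], vertices)].push_back(static_cast<int>(i));
    }
    std::vector<Partition> partitions;
    for (const auto& entry : groups) {
        partitions.push_back(Partition{entry.first, entry.second});
    }
    return partitions;
}
```

Because `std::map` keeps its keys sorted, the partitions come out in ascending order of their joint lists, which is a convenient side effect rather than a requirement.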

The problem we want to solve is the order in which to send these partitions to the GPU so we can achieve maximal performance. This is where the representation of the hardware architecture comes into play. We create an object that simulates the relevant portions of the target hardware. For the PC, this object represents a vertex shader with a limited number of constant registers. You can query the hardware object to estimate the cost to render a partition.

A Simple Algorithm

A very simple method to create a solution is to just walk through the complete list of partitions and append them to the solution. This way, the initial ordering of the partitions defines the quality of the solution. If the ordering is poor, we might perform unnecessary work, which could lead to disappointing rendering speeds.

By ordering the list of partitions we can improve our result significantly. Sorting on the number of unique joints used (‘1’, ‘2’, ‘3’, ‘1 2’, ‘2 3’) or in ascending order (‘1’, ‘1 2’, ‘2’, ‘2 3’, ‘3’) are commonly used orderings. Please note that we don’t use the hardware representation here. We make an informed guess about a good ordering and execute it. The biggest advantage of this algorithm is its speed. The relative cost of updating the hardware to render a partition is not even used; the algorithm just assumes the ordering will be good enough. This assumption is also a disadvantage, because the quality of the solution depends entirely on the hard-coded sorting of the input data. Careless (or just plain bad) orderings may generate sub-optimal solutions. This simple algorithm is easy to implement, it is very fast (there is no searching), and in practice it can generate good solutions if the programmer has chosen a suitable ordering (which is normally one of the two orderings mentioned earlier). Most skin partitioning algorithms use this technique. The algorithm is shown in pseudocode 1.
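The two orderings mentioned here can be written down in a few lines of C++. This is a sketch under our own naming (`JointSet`, `orderByJointCount` and `orderAscending` are invented for this example); a partition is represented only by its sorted list of joint indices.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using JointSet = std::vector<int>;  // sorted, unique joint indices of one partition

// Ordering 1: fewest unique joints first, ties broken by the joint indices.
std::vector<JointSet> orderByJointCount(std::vector<JointSet> partitions) {
    std::sort(partitions.begin(), partitions.end(),
              [](const JointSet& a, const JointSet& b) {
                  assert(std::is_sorted(a.begin(), a.end()));
                  assert(std::is_sorted(b.begin(), b.end()));
                  if (a.size() != b.size()) return a.size() < b.size();
                  return a < b;
              });
    return partitions;
}

// Ordering 2: plain ascending (lexicographic) order of the joint indices.
std::vector<JointSet> orderAscending(std::vector<JointSet> partitions) {
    std::sort(partitions.begin(), partitions.end());
    return partitions;
}
```

The simple algorithm then appends the partitions to the solution in the returned order. For the input ‘2 3’, ‘1’, ‘3’, ‘1 2’, ‘2’, the first function yields ‘1’, ‘2’, ‘3’, ‘1 2’, ‘2 3’ and the second yields ‘1’, ‘1 2’, ‘2’, ‘2 3’, ‘3’, matching the two orderings given in the text.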

Cheapest Insertion

Instead of providing the order in which to send the partitions to the hardware, we can also query the hardware and search for a good solution. In general, we want to get an indication of the impact of rendering a partition when the hardware is in a specific state. Minimizing the impact of each insertion is called cheapest insertion. A naïve implementation takes a lot more time to come up with a solution, but its solutions are typically better than those of the simple algorithm.

The algorithm for cheapest insertion walks through the complete list of the partitions and inserts every partition in the cheapest position in the solution (and not just at the end, like the “simple algorithm”). Inserting a partition in a solution requires that we re-evaluate the cost of the complete solution because the insertion might induce costs and/or savings for partitions that are processed after the inserted partition.

Cheapest insertion is also sensitive to the order in which the partitions are added, although to a much lesser degree than the simple algorithm. Using a random ordering gives results that are comparable to the simple algorithm with a good ordering, but the same orderings as used in the simple algorithm normally give the best results.

The algorithm is shown in pseudocode 2.
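A naïve version of cheapest insertion might look like the C++ sketch below. The cost model is an assumption made for this example only: one unit per joint matrix uploaded plus a fixed penalty each time a new palette is started. The names `solutionCost` and `cheapestInsertion` are hypothetical as well.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <set>
#include <vector>

using JointSet = std::vector<int>;  // unique joint indices of one partition

// Assumed cost model: replay the whole ordering on a palette with a fixed
// number of slots and count matrix uploads plus new-palette penalties.
int solutionCost(const std::vector<JointSet>& order, std::size_t slots,
                 int newPaletteCost) {
    std::set<int> palette;
    int cost = 0;
    for (const JointSet& partition : order) {
        assert(!partition.empty() && partition.size() <= slots);
        std::size_t missing = 0;
        for (int joint : partition) {
            if (palette.count(joint) == 0) ++missing;
        }
        if (palette.empty() || palette.size() + missing > slots) {
            palette.clear();             // does not fit: start a new palette
            cost += newPaletteCost;
            missing = partition.size();  // everything must be uploaded again
        }
        palette.insert(partition.begin(), partition.end());
        cost += static_cast<int>(missing);
    }
    return cost;
}

// Insert every partition at the position where the complete solution is cheapest.
std::vector<JointSet> cheapestInsertion(const std::vector<JointSet>& partitions,
                                        std::size_t slots, int newPaletteCost) {
    std::vector<JointSet> solution;
    for (const JointSet& partition : partitions) {
        std::size_t bestPosition = 0;
        int bestCost = std::numeric_limits<int>::max();
        for (std::size_t pos = 0; pos <= solution.size(); ++pos) {
            std::vector<JointSet> candidate = solution;
            candidate.insert(candidate.begin() + static_cast<std::ptrdiff_t>(pos), partition);
            // The whole candidate is re-evaluated because an insertion can
            // change the cost of every partition that follows it.
            const int cost = solutionCost(candidate, slots, newPaletteCost);
            if (cost < bestCost) {
                bestCost = cost;
                bestPosition = pos;
            }
        }
        solution.insert(solution.begin() + static_cast<std::ptrdiff_t>(bestPosition), partition);
    }
    return solution;
}
```

With four slots, a new-palette penalty of one and the partitions ‘1 2’, ‘5 6 7’, ‘1 2 3’, appending in input order costs 11 under this model, while cheapest insertion finds an ordering that costs 8 by keeping the two partitions that share joints 1 and 2 next to each other. Re-evaluating the complete solution for every candidate position is what makes this version slow, so it gets expensive quickly for large partition lists.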

An Example

To illustrate our algorithm, let us assume our fictional target hardware supports a shader with Matrix Palette Skinning. We assume at most eight joint matrices can be used in one rendering pass (the shader has eight joint ‘slots’). Our simple mesh has three partitions, with every partition using five joints.

A shader that supports eight joint-matrices needs to process three partitions which all have five joints.

We will solve this example using the simple algorithm while showing the cost calculation. In the beginning we have a new, clean hardware state. We want to render a partition that contains five unique joint indices. The “cost” to render this partition is the uploading of five matrices plus the cost of creating a new palette. The second partition also uses five unique joint indices, but four of these are also in the first partition. The additional cost to render this partition is now the uploading of just one matrix, because the other matrices are already available. Our third partition also has five unique joints, and two of them are already available in the current palette. Because the complete partition does not fit in the palette (three new entries are needed, but only two are available), the hardware object will create a new palette. In this new palette, we upload the five unique matrices of the third partition. Having processed all the partitions, we are now ready to export the solution. The relevant portions of the resulting export file are shown below.
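The cost calculation of this walkthrough can be replayed with a tiny simulation of the fictional eight-slot shader. This is a sketch of the bookkeeping only, not the export file format; the class name `PaletteShader`, its member functions and the concrete joint indices used below are assumptions chosen to match the example.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <vector>

// Simulates a matrix palette with a fixed number of joint slots.
class PaletteShader {
public:
    explicit PaletteShader(std::size_t slots) : slots_(slots) {}

    // Renders a partition (a list of unique joint indices) and returns
    // the number of joint matrices that had to be uploaded for it.
    std::size_t render(const std::vector<int>& joints) {
        assert(joints.size() <= slots_);
        std::size_t missing = 0;
        for (int joint : joints) {
            if (palette_.count(joint) == 0) ++missing;
        }
        if (palettesCreated_ == 0 || palette_.size() + missing > slots_) {
            palette_.clear();         // partition does not fit: new palette
            ++palettesCreated_;
            missing = joints.size();  // all of its matrices are uploaded
        }
        palette_.insert(joints.begin(), joints.end());
        return missing;
    }

    std::size_t palettesCreated() const { return palettesCreated_; }

private:
    std::size_t slots_;
    std::size_t palettesCreated_ = 0;
    std::set<int> palette_;
};
```

If the three partitions use joints 0-4, 1-5 and 4-8, rendering them in that order on a `PaletteShader(8)` uploads five, one and five matrices respectively and creates two palettes, as described above.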

Benefits

Simulating hardware to optimize the export of mesh data has a few important benefits. The specific knowledge of the hardware is located in one place (the “hardware” subclass), and this makes it easy to maintain the code. Your technical programmer can supply an implementation for the Xbox, PS2, PSP, PC or some other platform. Furthermore, programmers can supply different implementations for the same hardware platform, for example when there are new insights into how the hardware can perform optimally.

The best thing about working with abstracted hardware is that it does not need any special implicit or explicit knowledge about properties of joints. We implement a function that estimates the cost to change the hardware state, and by trying different orderings we look for good solutions. This reduces the effort required by the programmer considerably, because we let the computer do what it does best: considering a great number of alternatives and selecting the best one.

The Software

We have written a software utility that is easily extendable. The program is written in C++ and can be compiled using MSVC++ or GNU C++. At Wishbone Games we use the utility to optimize our meshes for multiple hardware targets. On the PC, the partitioning is fairly simple and different techniques show similar results (the same number of render passes). For consoles, however, we were able to improve the speeds significantly: by using a more elaborate hardware simulation we were able to reduce the joint matrix data sent to the hardware by 60 percent, thus improving overall performance.

Conclusion

Different hardware platforms need different optimization techniques when exporting skinned meshes. Using our framework, it is possible to create and plug in new hardware or a new technique fairly easily. Just create a subclass of the “Hardware” class and put in the special hardware considerations.

The currently implemented ways to generate solutions are pretty standard, but we found they work just fine for us. If your hardware simulation is complex, the cost function might take too much time for cheapest insertion to be practical. You could try to make a better estimate of the insertion point for a partition, or maybe use other optimization techniques like simulated annealing or genetic algorithms. For our purposes, however, the software works just fine as it is.

This utility was created to optimize the partitioning of a mesh based on skinning information. It can be altered, however, to take other splitting criteria into account. For instance, you could split on materials (texture or shader), physics, fracture points or some other criteria. Furthermore, other utilities can be created to process the mesh even further by, for instance, stripifying the mesh to accelerate rendering or performing polygon reduction. These routines are also hardware dependent, based on the size of the cache and other criteria. On the PS2, for instance, non-textured polygons optimally have a width that doesn’t exceed 32 pixels [2]. Such specific knowledge might not normally be used when designing an export utility, but with this tool we could easily plug in a new routine that uses it. We can then export the models using both techniques and compare the rendering speeds. You could even estimate the cost of rendering such polygons, but it might be too much trouble for complicated systems that use caching, multiple processors and difficult bus simulation models. If you create such a utility, however, I am sure many developers would appreciate it if the source code was shared.

The conversion framework as well as an implementation for PC hardware (source code and executable) can be downloaded here [.ZIP link]. On a final note, I would like to thank my friend Erik van der Pluym for reviewing this article.

2. PS2 Programming Optimisations, page 25.
