
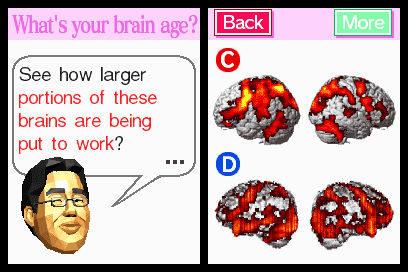

At the 2007 Game Developers Conference, Nintendo's Takeshi Shimada presented a revealing talk on the challenges of developing Brain Age: Train Your Brain in Minutes a Day, sharing his experiences with a developer audience.

Takeshi Shimada builds libraries for Nintendo games. When he was presented with the Brain Age project a few years ago, he and his team faced multiple challenges in finding, developing, and fine-tuning the technologies that would be needed for voice recognition and handwriting recognition.

In a session at the 2007 Game Developers Conference called “Rethinking the Development Timeline,” Shimada (speaking in Japanese with English translation) revealed the precise nature of these challenges and showed how his team dealt with them. He also described how Nintendo managed that workload against a ticking clock.

One of the first challenges that came to Shimada from the design team was whether one could play Brain Age while holding the DS sideways, like a book. The technology team had not tested the DS held this way, and they didn’t know how the recognition technologies might be affected by this twist.

After Shimada assured the designers that the product would not be negatively affected by turning the DS on its side so that it opened like a book, his team began tackling the much bigger issue of finding engines that would support the game’s other input methods: voice and handwriting.

Handwriting recognition was a major hurdle in the development of Nintendo's Brain Age: Train Your Brain in Minutes a Day

Although finding companies that specialized in this type of technology wasn’t too difficult, choosing which ones to use required much consideration, Shimada said. The team needed a tool that recognized input quickly, used memory efficiently, wasn’t too costly, and didn’t demand heavy processing. If the processing load was too high, it would drain the battery of the small handheld device.

In early 2004, Shimada and his team began the serious work of tuning the engine. “At that time, we had already decided to release the product in the spring, which would mean we had a mere three months to finish it,” he said.

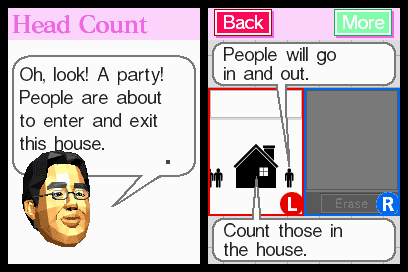

After deciding on the technology, the team encountered more unexpected challenges. For one, the voice recognition technology had been calibrated to recognize adult voices, and while Nintendo didn’t necessarily want Brain Age to be a children’s game, the company did want to appeal to the largest audience possible, so the game would have to be able to understand a wide range of pitches and tones.

To recalibrate the engine to recognize children’s voices, Nintendo had to find children to provide the input. Shimada said he resorted to asking other company employees to bring in their children so he could record their voices: 20 children saying 130 words in all. The recordings also had to be made in both noisy and quiet environments, which presented another challenge.

Shimada and his team realized that the places they imagined people would play Brain Age, such as buses, public parks, and schools, weren’t ideal locations to set up recording and development equipment. So to simulate the ambient noise of a public space, they recorded the children directly outside the Nintendo building in Japan.

Other issues the team encountered included how to prepare the engine to recognize voices at the pace the game would be played (very rapidly); how to deal with misrecognized words (when pauses between words are not clear); and how to accommodate elderly players, whose pronunciation of certain syllables often becomes less distinct with age. A tremendous amount of focus testing eventually helped the team work out many of these lingering problems, Shimada said.

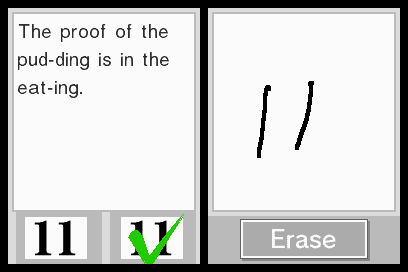

To create a usable, workable subdictionary for the game, Shimada said the team had to start thinking more from a design perspective in order to solve problems. For example, another challenge was how to recognize short words, which were more difficult for the engine to judge as correct or incorrect. Longer utterances are easier for the system to recognize because there’s more data to compare against the “correct” answer. The more syllables, the easier the word is to recognize.
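The session did not describe the engine’s internals, but one simple way to see why short words are harder is to measure how much of a word a single recognition error affects. The sketch below is a hypothetical illustration, not Nintendo’s method: it computes a syllable-level Levenshtein (edit) distance over romanized syllables and divides it by the expected word’s length. The function names and example words are assumptions chosen for illustration.

```python
from typing import Sequence


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein distance between two syllable sequences."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def mismatch_ratio(heard: Sequence[str], expected: Sequence[str]) -> float:
    """Share of the expected word affected by recognition errors (hypothetical metric)."""
    return edit_distance(heard, expected) / len(expected)


# One misheard syllable in a 2-syllable word vs. a 4-syllable word.
short = mismatch_ratio(["a", "ga"], ["a", "ka"])                          # "aka" (red)
long_ = mismatch_ratio(["mu", "ra", "za", "ki"], ["mu", "ra", "sa", "ki"])  # "murasaki" (purple)
print(short, long_)  # 0.5 0.25
```

The same single-syllable slip wipes out half of the evidence for the short word but only a quarter for the longer one, which matches the intuition that more syllables leave more data to compare against.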

“We designed a system to return not just one answer, but several answers ranked according to their correct-ish-ness,” said Shimada. As long as the answer detected is one of the top ranked answers, it is accepted as correct. In Japanese, said Shimada, the word for “yellow” fits into this category because older people often pronounce the word as “chi i ro” or “i i ro” instead of the correct “ki i ro.” Brain Age, however, accepts any of these pronunciations as a correct answer.
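The ranked-answer approach Shimada describes can be sketched as follows, assuming the recognizer returns a list of candidate readings ordered from most to least likely. The cutoff `top_k = 3`, the `ACCEPTED` table, and the function name are hypothetical; only the three readings of “yellow” come from the talk.

```python
from typing import Dict, Sequence, Set

# Hypothetical table of accepted readings per answer.
# The variants for "yellow" (ki-i-ro) are the ones mentioned in the talk.
ACCEPTED: Dict[str, Set[str]] = {
    "yellow": {"kiiro", "chiiro", "iiro"},
}


def is_correct(ranked: Sequence[str], answer: str, top_k: int = 3) -> bool:
    """Accept the answer if any accepted reading appears among the top_k candidates.

    `ranked` is assumed to be ordered from most to least likely.
    """
    readings = ACCEPTED.get(answer, {answer})
    return any(candidate in readings for candidate in ranked[:top_k])


print(is_correct(["chiiro", "kuro", "shiro"], "yellow"))          # True
print(is_correct(["kuro", "shiro", "aka", "kiiro"], "yellow"))    # False: "kiiro" ranked 4th
```

Accepting a small set of readings within the top few candidates trades a little strictness for tolerance of pronunciation drift, which is the trade-off the team made for older players.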

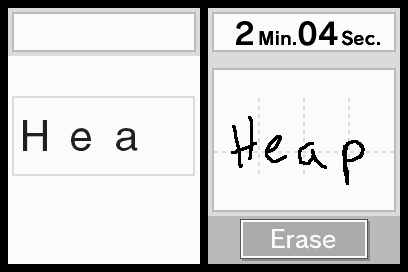

When the team came to deal with handwriting recognition, similar problems arose. The team’s first step was to collect as much data as possible about the basic handwriting of numbers and letters. They used batch processing to assess all the data gathered, repeatedly collecting data until they had enough for a usable database.
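The collect-and-assess loop described above might look like the following sketch. It assumes each sample is a `(label, strokes)` pair and that “enough” means a minimum number of samples per character; both the threshold and the function names are hypothetical, since the talk did not specify how the team decided the database was usable.

```python
from collections import Counter
from typing import Iterable, List, Sequence, Tuple

Sample = Tuple[str, list]  # (character label, stroke data); stroke format is a placeholder


def classes_needing_data(database: Sequence[Sample], labels: Sequence[str],
                         min_per_class: int) -> List[str]:
    """Batch-assess the database: list the characters that are still under-sampled."""
    counts = Counter(label for label, _strokes in database)
    return sorted(label for label in labels if counts[label] < min_per_class)


def collect_until_sufficient(batches: Iterable[List[Sample]], labels: Sequence[str],
                             min_per_class: int) -> Tuple[List[Sample], List[str]]:
    """Keep adding collected batches until every character has enough samples."""
    database: List[Sample] = []
    for batch in batches:
        database.extend(batch)
        if not classes_needing_data(database, labels, min_per_class):
            break  # every character meets the threshold
    return database, classes_needing_data(database, labels, min_per_class)


batches = [
    [("1", []), ("2", [])],
    [("1", []), ("2", []), ("3", [])],
    [("3", [])],
]
db, missing = collect_until_sufficient(batches, ["1", "2", "3"], min_per_class=2)
print(len(db), missing)  # 6 []
```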

Again, because the design team required that the game be played quickly, Shimada and his group needed to enable the software to detect different varieties of handwriting under rapid-fire conditions, when penmanship is likely to be at its most slovenly. They were also tasked with determining how the end of input would be signaled (the answer is final when the stylus leaves the touch screen).
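The end-of-input rule, where the answer is final once the stylus leaves the screen, can be modeled as a small event handler. This is a hypothetical sketch: the class, method names, and point format are assumptions, and a real DS input layer would look different.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[int, int]


@dataclass
class StrokeCapture:
    """Collects touch-screen points and treats stylus lift as the end of the answer."""
    points: List[Point] = field(default_factory=list)
    pen_down: bool = False

    def on_touch(self, x: int, y: int) -> None:
        """Stylus is on the screen: record the sampled point."""
        self.pen_down = True
        self.points.append((x, y))

    def on_release(self) -> Optional[List[Point]]:
        """Stylus left the screen: return the finished input for recognition."""
        if not self.pen_down:
            return None  # nothing was being written
        self.pen_down = False
        finished, self.points = self.points, []
        return finished


cap = StrokeCapture()
cap.on_touch(10, 10)
cap.on_touch(12, 15)
print(cap.on_release())  # [(10, 10), (12, 15)]
print(cap.on_release())  # None
```

Some characters, such as a handwritten 4 or most kanji, take more than one stroke, so a recognizer built this way would presumably need an extra rule for multi-stroke input; the talk, as reported, does not say how Brain Age handled that.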

Shimada also explained how Kanji characters were incorporated into the Brain Age games, as well as what Nintendo’s timeline looked like for each phase of the development against a project release date.

Finally, Shimada mentioned a few of the things his team is working on presently. “Currently, we are staying busy creating development tool libraries for the Wii and the DS,” he said. “Late last year we were finishing up touches and trying to get them out to developers.” Also late last year, the team finished “NintendoWare for the Revolution [Wii],” a tool for implementing graphics and music on the Wii.

“Until last year, you needed Wii development hardware to view these effects,” Shimada said, whereas now the company offers an auxiliary device that lets developers preview effects on a PC. Other development advances include finishing a system that lets developers create fur efficiently and developing codecs and predictive input for the Wii.

“As soon as these [technologies] are ready, we will provide them to developers around the world. Some of these are available already,” Shimada said. Also on their plate, Shimada’s team is conducting speech synthesis experiments for the Wii, with hopes of turning the fruits of their labor into software that can be provided to other software makers and third-party developers.
