Trending

Opinion: How will Project 2025 impact game developers?

The Heritage Foundation's manifesto for the possible next administration could do great harm to many, including large portions of the game development community.

O'Brien examines the implication of creating a turn-based vs. a real-time game, and shows how to calculate optimal time and space granularities.

Game programmers traditionally have been challenged with making things happen. Primarily this has meant getting as much multimedia data (polygons, bitmaps, and audio/video streams) onto the screen or out the speakers as quickly as possible, reacting to user input as quickly as possible, and responding to user actions in some reasonable way (using artificial intelligence). With the advent of networked, multiplayer games, there's a new challenge: waiting for things to happen.

When the performance of an entire system has to wait for the completion of a certain task, that task is called a bottleneck. Programmers are well aware of the challenges in overcoming bottlenecks. I call the wait associated with a bottleneck a bonk, after the term cyclists use to describe the performance collapse that happens when you ask for the stuff you need to continue, but it's just not there. Just as high-performance programs must balance their tasks to reduce or eliminate bottlenecks, high-performance networked games must balance their tasks to reduce or eliminate bonks.

To compensate for bonks, you must anticipate what could happen, predict what will happen, and recognize quickly what has happened. There are two obstacles to this work: latency and timing variability. Although some internal machine components (such as the clock) have metronome-like consistency, other components (such as the hard drive and CD-ROM) are inconsistent, and the timing variability of network messaging can prove more challenging than the inherent latency.

Dealing with Bonks

Hardware-Centric Vision: Anticipate what the network infrastructure and the limiting factors in communication will be and design a game accordingly. However, while there's something to be said for this approach as a useful first exercise, a game should never be driven by technology, with playability as an afterthought.

Imagination-Centric Vision: A game should be driven by a creative vision that's powerful enough to make the player forgive the inherent limitations of a network game. Since latency and variability are not solvable problems--they're facts to be worked with--it's as foolish to try to build a twitch-based game for world-wide Internet play as it is to attempt to create an amusement park with genetically engineered dinosaurs. Not because we can't imagine it, but because the technology just doesn't exist. Therefore, it's more beneficial to analyze the network characteristics from the vision down. That doesn't imply a Pollyanna vision of perfect technology; it just means you should start from a vision of what you want and only start compromising when you must.

Two Sample Games

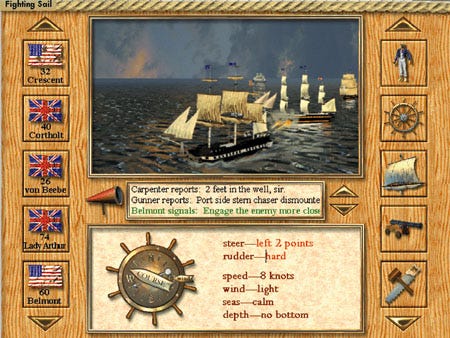

For the purposes of this article, we'll look at two Internet-based games that my company is developing. The first game is FIGHTING SAIL, in which players assume the role of a naval captain during the years 1793 to 1815. Inspired by the historical fictions of C.S. Forrester and Patrick O'Brian, this game gives players several views on furious cannon-and-broadsword naval battles.

A screenshot from the prototype is shown in Figure 1. The three dynamic panes are the main 3D perspective pane, the communications pane in the center, and the "canvas," a scrollable and resizeable region onto which various elements (such as the Helm control shown) can be dropped and arranged, in the lower portion of the screen.

The second game is VACUUM DIVERS and is set 300 years in the future in the midst of a galactic civil war. Here, players work for the powerful Salvage Guild, competing for lucrative, but extremely dangerous, salvage contracts on abandoned warships and space stations. This is a team game, with a heavy emphasis on communication and realistic portrayal of player movement, and a first-person perspective game, with teams of players in armored vacuum suits maneuvering in zero-gravity environments.

The development of these two games presents a wide variety of the challenges faced by multiplayer network game designers. Both aim to create a sense of immersion--they are not board games nor are they abstract. As far as the player is concerned, both occur in real time, which is to say that they appear to be continuous in time and space. This is an illusion, however: FIGHTING SAIL is a turn-based game, while VACUUM DIVERS is a real-time simulation.

Figure 1. The Fighting Sail Prototype

Turn-Based vs. Real-time

In a turn-based game, at any time, objects are in a well-defined state, especially in space. When playing chess, it's not necessary to pay attention to the route your opponent uses to move a piece from square to square nor is it necessary to pay attention to the exact position of a piece within the square. Spatially, it's enough to know the starting and stopping squares so you can confirm that the move was legal and evaluate the board. Temporally, it's enough to note that the other player has moved. Chess has very large granularities of space (an eight-by-eight board), time (turns can take minutes), and velocity (which isn't even a factor in the game).

In a real-time game, however, it's difficult or impossible to nail down an object's state. In tennis, for instance, you have to anticipate motion over dozens of feet, move, and intersect the sweet spot of the racket with a ball traveling in a blur. The granularities of tennis are small--perhaps four square inches over more than a thousand square feet of court, speeds of up to 100 miles per hour, and contact times of a few milliseconds.

Those small granularities mean that to keep up with the game, we have to work in a probabilistic mode--we swing the racket when and where we do, not because the ball has moved to a specific point and a specific velocity, but because the ball is in about the right point at about the right velocity, and we trust that beginning a sequence of motions will result in a confluence of other events sometime in the future.

If a network game has to work in a probabilistic, real-time mode, it is highly vulnerable to failures based on network variability. Just as a "bad bounce" can result in a missed tennis swing, a latency spike or message storm can result in a real-time network game bottlenecking or bonking. There's no such thing as a bad bounce in a chess game, with the possible exception of a happy Labrador retriever knocking over all the pieces with its tail. Similarly, as long as latency spikes stay below a certain threshold, a turn-based game can avoid "bad bounces" as well.

Space and Time Granularity

The line dividing real-time from turn-based games is really a function that relates space-time granularity to space-time movement, or velocity. If we're operating above this line, we know that, like a piece on a chess-board, we have a well-defined position. If we're operating below it, we know that things like hit detection must be treated probabilistically.

Since we're working from our vision down toward the hardware and not vice-versa, we get to decide how we want to define the game's granularity in space, time, and velocity. Space and time granularities are the smallest units we wish to treat nonprobabilistically. For instance, a first-person shooter might conceptually have bullets that occupy a cubic centimeter of space and travel at mach three, but no game will model them that way--a game would treat them as, perhaps, rods moving out in space and time or, more likely, simply as "hit probabilities" to be resolved by objects in a cone extending in the gun's direction.

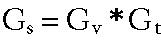

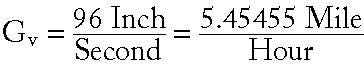

The space granularity (Gs) of a game is the minimum volume in game terms that you concern yourself with in a nonprobabilistic way. The time granularity (Gt) of a game is the minimum amount of time with which you concern yourself. And the velocity (Gv) relates the two.

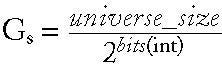

Traditionally, games have been driven by setting Gt to the inverse of the desired frame rate and setting Gs in this manner:

The universe_size is the maximum distance representable in whatever game units are chosen, and bits(int) means the number of bits in an integer (nowadays it's typically 32).

There are two common frame rates: 24 frames per second (Gt = 1/24 second) and 30 frames per second (Gt = 1/30 second). The former is typically regarded as the border for the mind to perceive frames as smoothly moving, while the latter corresponds to a television's refresh rate. Typically integers are either 16 or 32 bits. Substituting values into the two equations gives the results shown in Table 1.

To apply this data to a typical game world, let's use a flight simulator as an example. Imagine a flight simulator encompassing 250,000 square miles of land, represented by two 32-bit numbers at 30 frames per second. Using that data, anything traveling faster than 66 feet per hour moves across one spatial unit per time unit. A plane flying at 500 miles per hour is traveling almost 40,000 spatial "grains" every frame.

It's easy to see why the equation has gone unremarked in the game development literature--the ratios are just too great. If, on the other hand, you equate your space granularity with screen pixels, this equation becomes the basis for half the optimizing tricks in the book! (I'm still trying to figure out how to derive Planck's constant from this equation, but I think the key is that God apparently does not play dice with the universe, but instead uses 142-bit integers.)

However, things are different for the online game developer. As long as objects in your game world are moving slower than Gv, and Gs is fairly large, your game can be turn-based programmatically, even if Gt is still quite fast by human comprehension. This is an essential point: you can set Gt to the frame rate (or whatever rate you desire) as long as you make corresponding changes in Gv and Gs. If things are moving faster than Gv, your game is a "domain real-time" game, and not only will you face harder mathematics for such things as hit detection, you'll be vulnerable to bonking in the face of a latency spike.

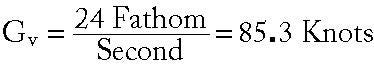

By solving for different variables and reflecting on the demands of the domain, the online game designer can accurately anticipate some of the programming problems likely to arise. Take, for instance, FIGHTING SAIL and VACUUM DIVERS, but let's consider them first as stand-alone games. In FIGHTING SAIL, our first design thought was that we weren't going to concern ourselves with distance of less than a fathom (six feet), and that we'd like to get 24 frames per second with intraframe movement. If we plug those numbers into the first equation, we get

This is great news! Since we know the fastest ships of the time moved at only about 20 knots, we know that FIGHTING SAIL will be a turn-based game--even at 24 frames per second. The same does not hold true of VACUUM DIVERS, in which players are going to be close to each other (Gs = 4 inches).

Ouch! Since arms and legs certainly have to move faster than five miles per hour, VACUUM DIVERS is destined to be a domain real-time game, whether it's on the network or off. By knowing that it's impossible for VACUUM DIVERS to ever move out of the arena of probabilistic actions, we know from the very beginning that this game must have more complex hit detection and a seamless way--such as spline-based anticipatory movement--to cover up bonking. We know that those issues will never go away, no matter what the network capabilities.

Solving for Time

For the purposes of illustration, we've been setting Gt to the framerate. But virtually the whole point of network games is that the programmer has to deal with high network latencies. How high? Typically game networks claim about 150ms locally and 200ms to 250ms nationwide. Game networks typically are obsessed with pushing the lower end of the latency envelope down, which is all well and good, but doesn't tell the whole story, as we'll discuss.

Even the fastest dial-up network involves latencies greater than the framerate. Let's say I go with a dial-up network, such as DWANGO, with a 120ms latency, and I give a nominal 30ms stall time in the outgoing modem (to achieve cleaner signaling and compression, high-speed modems stall outgoing data for anywhere from 25ms to 50ms). This would give FIGHTING SAIL a Gv of 23.7 knots and a Gv for VACUUM DIVERS of 1.5 miles per hour. Well, VACUUM DIVERS is a lost cause, but FIGHTING SAIL is still safe as a turn-based game, right?

Wrong. The network topology could ruin everything. Let's say I use the "master player" strategy common to many first-generation network games. In this topology, the computer of one player is designated as the central repository of game-world state. If this master-player is using a dial-up connection, all messages funneled through it not only get an additional network latency hit, but an additional modem stall. So we'll want to minimize messages sent through the master player and have the FIGHTING SAIL players transmit directly to each other.

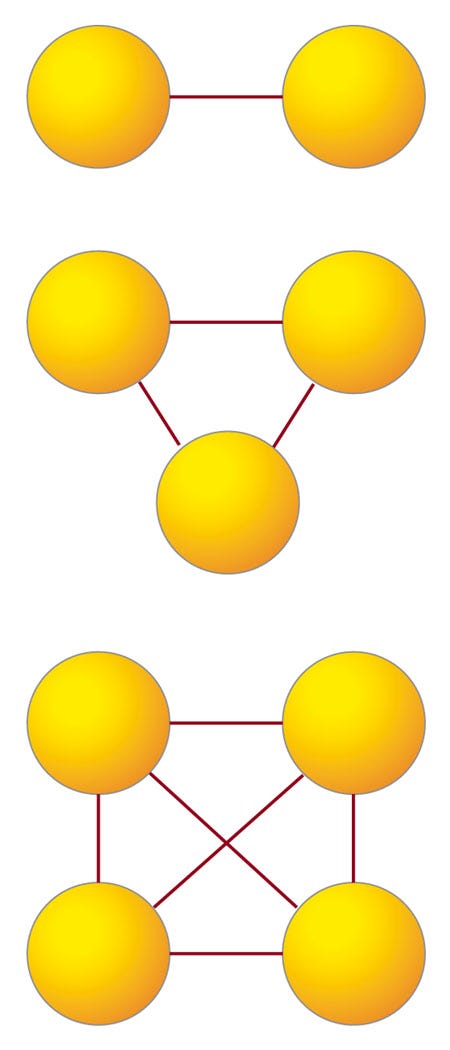

This is exactly what most multiplayer games you see today do--peer-to-peer direct transmission with perhaps a master player for occasional game-world state checks. This works fine for small groups. As Figure 2 shows, you just need one connection for head-to-head play, three connections for three people, and six connections for four people.

A moments introspection will show that for n people:

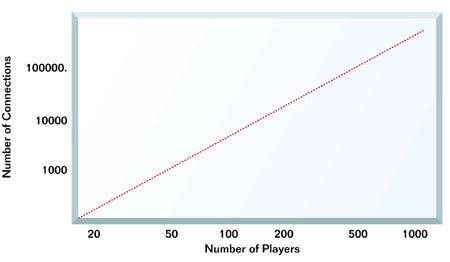

connections are required. For games supporting dozens or hundreds of players, this quickly becomes unmanageable, as the log vs. log plot of the function in Figure 3 shows.

To fully interconnect 1,000 players, 499,500 connections are required. Of course, each player only maintains a list of 999 connections, but the total would overwhelm any public network.

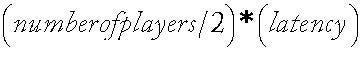

Once you get beyond a small group of players, you need to consider other network topologies and protocols. A ring network won't work, since the average transmission time is going to be equal to

The other obvious topology is client/server, where the server is directly connected to the network (not via dial-up). A client-server-client message is going to take more time than a direct client-client transmission, but it will take considerably less than client-master-player-client, especially if you do the obvious and connect your server directly to the network and co-locate it with the game network's hardware. In FIGHTING SAIL, we increased Gs to two fathoms and committed to the client/server architecture.

For VACUUM DIVER, things are more complicated. Although we know the game is inherently real time, increasing Gt to 150ms means trouble and increasing it to 200ms (or more) typical to a client/server architecture is devastating. The ratio between Gv and the necessary domain speeds becomes so great that it overwhelms any simple form of anticipatory movement.

In this situation, you can anticipate the need for hybrid network topologies, such as direct messaging between players proximate in the game universe, client/server messaging to maintain game state, and server-side filtering to determine the communication subnetworks. We had started designing a solution to this, but are now seriously considering the RTime API as the most likely solution to this problem.

It's possible to mathematically analyze networking protocols to reveal their failure characteristics and degradation under network load, but that's beyond the scope of this article. There is at least one vital fact to keep in mind: Different networking protocols have strengths at different network use levels. This is yet another reason why you should use dedicated gaming networks--even for games with relatively high Gt values. Your time isn't well spent reinventing real-time networking protocols.

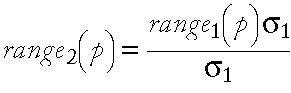

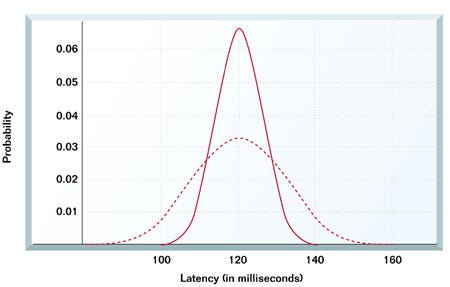

Game networks not only provide lower latency, they provide lower deviation in the arrival time of their packages. This is at least as important as the average arrival rate, and it's why the gaming networks should spend as much time talking about reliability as they do talking about lowering latency. The efficiency of your message handling is partly limited by the distribution in time of arriving streams of messages. For example, two game networks that have an identical average latency of 120ms have radically different arrival probabilities when one has a standard deviation of 12ms and the other 6ms, as shown in Figure 4.

For the network with a 6ms standard deviation (dotted line), 90% of the messages will have a latency between 110ms and 130ms. On the 12ms standard deviation network (solid line), 90% of the messages will have a latency between 100ms and 140ms--twice the gap. The ratio holds for any two standard deviations.

Where rangex(p) is the size of the gap for any given probability p, for a network x, with an arrival-time standard deviation of s. If you're developing a domain real-time game with anticipatory movement, uncertainties in arrival time cause great difficulties not just in game efficiency--uncertainties in game timing can be just as detrimental.

For example, imagine trying to predict movement within a game when latency is highly variable. Knowing a player's position vector is not enough--that vector must be assigned a time so the probabilistic calculations on movement can be made. If messaging is reliable enough, the receiver can calculate reasonably well when the vector was valid. If, on the other hand, messaging isn't reliable, every vector must chew up bandwidth with a time signature as well.

Figure 2. Peer-to-Peer

Figure 3. Players vs. Connections

Figure 4. Latency Comparisons

Bandwidth Implications

Content developers will probably be forced to create low- and middle-bandwidth games for the next seven or eight years before broadband technologies gain a sufficient installed base to entice developers. Over this period, and especially in the next two years, multiplayer game developers must be ingenious in squeezing data through the pipeline. With the kind of bandwidth being touted by cable modems, by contrast, it will be perfectly feasible to render the graphics for a client entirely at the server-side and push it through at 30 frames per second, which seems amazing until you realize that your television cable does basically the same thing.

The major implication of legacy bandwidth is that "instant-on" games with no installation phase will remain wishful thinking for the next several years. Any media-intensive game will require a client-side media cache. This cache may be downloadable, but will more likely be distributed and reside on a client-side CD-ROM. The CD-ROM itself may be purchased online, via traditional channels, or via the low-cost retail channel. (After all, one of the nicer business aspects of multiplayer games is that, with the proper planning and implementation, network games are unpirateable.)

The two things that are impossible to cache are the control events generated by the players and chat. Audio chat can be especially devastating to performance, since it requires either raw bandwidth consumption or CPU-intensive compression. To make things worse, chat is likely to increase at the most critical time--when the action is fast and furious. Finally, it's difficult, if not impossible, to prioritize and reschedule voice data the way you might with game data. (Don't look to DVSD modems for a magic solution, either. They just allocate the bandwidth between the two functions. A typical DVSD modem will allocate 19.2Kbps to data and 9.6Kbps to voice.)

A better way to implement audio chat is the use of "taunt-lines," with predetermined, cached audio streams that can be designated with only a few bytes of actual bandwidth consumption. Taunt lines can be used not only for questioning your opponent's ancestry, but with some minor modification, can be made flexible enough to handle typical in-game communication.

For instance, a player could combine phrases like "enemy at," "go to," "set target to," and so on, with positional statements such as "12 o'clock high," "north-north-west," or "enemy #3." Text chats are largely inappropriate for in-game communication, although they are attractive in terms of being both low-bandwidth and very flexible.

Your Crystal Ball |

|---|

Your game revenue:revenue per game=(total potential customers)*(customer percent)*(per unit purchase profit + average play in hours * hourly_revenue)Your potential customers:Total potential customers = 152 * 106 (estimated total worldwide Internet installed base in 2000)Your profit margin:Per unit purchase profit = $12 (assuming typical margins)Time spent by consumers playing your game:Average play in hours = 40 (average game time of top 10 PC computer games)Your hourly revenue:Hourly revenue = $.875 (based on estimated 25:75 revenue split with game network charging an average of $3.50 per hour) |

The Importance of Reliability

Finally, I want to revisit reliability. This is the most important difference between stand-alone games and multiplayer games. I've already talked about the importance of network reliability, but I want to finish with a quick rant on good old-fashioned software reliability. Stand-alone games typically have abysmal reliability compared to even shrink-wrapped business software (which is no great shakes).

Hard-core gamers aren't particularly surprised when they can't even get a game to run on their system, much less when a game crashes intermittently. For multiplayer games in general, especially for those that rely on subscription revenue and are persistent (that is, that continue from day-to-day and month-to-month), reliability jumps to the top of the list of concerns.

In a massively connected system, everything that can ever happen is happening, constantly. If your client-side game is up 99.8% of the time (corresponding to a crash every eight hours), a 10-person game is going to average a crash every 48 minutes. If you can't recover gracefully from that crash, you've alienated every player in the game as well. If you have a persistent game with a database that's 99.999% available, you're going to face a system crash every 10 weeks. You must be able to recover gracefully from both single-node crashes and systemic crashes, or your game will be too brittle to withstand the real-world buffeting that a successful online game generates.

It's not impossible to create high-reliability systems that can detect, withstand, and recover from crashes gracefully. However, such systems are the product of a development style that is much more disciplined than a typical multimedia project. Building highly reliable and predictable systems takes engineering. Building great games takes creativity, enthusiasm, and artistic ability. Building a successful multiplayer game, therefore, requires both types of people, taking the form of software engineers and creative artists.

Read more about:

FeaturesYou May Also Like