Using shaders effectively requires not only an understanding of the technology behind them but also tools to create and implement them. This article shows how to use ATI's free utility, RenderMonkey, for this purpose.

One of the largest problems with getting shaders into a game seems to be the learning curve associated with shaders. Simply stated, shaders are not something that your lead graphics programmer can implement over the weekend. There are two main issues with getting shaders implemented in your game:

1. Understanding what shaders can do and how they replace the existing graphics pipeline.

2. Getting the supporting code implemented into your game so that you can use shaders as a resource.

In this article we're going to continue the series of Gamasutra articles about shaders by examining how to make shaders work. The actual integration of shader support is material for a future article. (Note: You don't need a high-end video card to try your hand at writing shaders. All you need is the DirectX 9.0 SDK installed. With that you can select the reference device (REF). While this software driver will be slow, it'll still give you the same results as a DirectX 9 capable video card.) RenderMonkey works on any hardware that supports shaders, not just ATI's hardware.

If you have already read Wolfgang Engel's article, Mark Kilgard's and Randy Fernando's Cg article or you've perused the DirectX 9 SDK documentation, then you've got a fairly good idea of the capabilities of the High-Level Shader Language (HLSL) that's supported by DirectX 9. HLSL, Cg, and the forthcoming OpenGL shading language are all attempts to make it as easy to write shaders as possible. You no longer have to worry (as much) about allocating registers, using scratch variables, or learning a new form of assembly language. Instead, once you've set up your stream data format and associated your constant input registers with more user-friendly labels, using shaders in a program is no more difficult than using a texture.

Rather than go through the tedious setup on how to use shaders in your program, I'll refer you to the DirectX 9 documentation. Instead, I'm going to focus on a tool ATI created called RenderMonkey. While RenderMonkey currently works with the DirectX high-level and low-level shader languages, ATI and 3Dlabs are working to add support for OpenGL 2.0's shading language, which we should see in the next few months. The advantage of a tool like RenderMonkey is that it lets you focus on writing shaders instead of worrying about infrastructure. It has a nice hierarchical structure that lets you set up a default rendering environment and make changes at lower levels as necessary. Perhaps the biggest potential advantage of using RenderMonkey is that the RenderMonkey files are XML files. Thus by adding a RenderMonkey XML importer to your code, or an exporter plug-in to RenderMonkey, you can use RenderMonkey files in your rendering loop to set effects for individual passes. This gives RenderMonkey an advantage over DirectX's FX files because you can use RenderMonkey as an effects editor. RenderMonkey even supports an "artist's mode" where only selected items in a pass are editable.

Using HLSL

While HLSL is very C-like in its semantics, there is the challenge of relating the input and output of the shaders with what is provided and expected by the pipeline. While shaders can have constants set prior to their execution, when a primitive is rendered (i.e., when some form of a DrawPrimitive call is made) then the input for each vertex shader is the vertex values provided in the selected vertex streams. After each vertex shader call, the pipeline breaks that vertex call into individual pixel calls and uses the (typically) interpolated values as input to the pixel shader, which then calculates the resulting color(s) as output from the pixel shader. This is shown in Figure 1, where the path from application space, through vertex processing then finally to a rendered pixel is shown. The application space shows where shaders and constants are set in blue text. The blue boxes show where vertex and pixel shaders live in the pipeline.

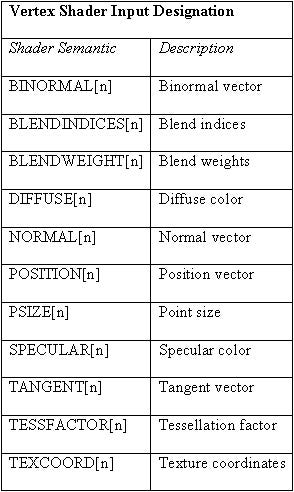

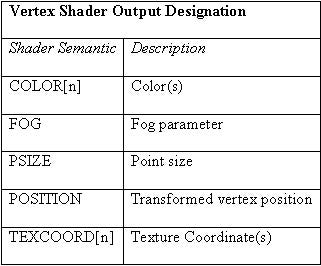

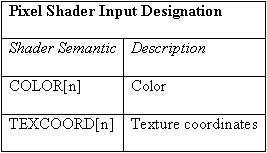

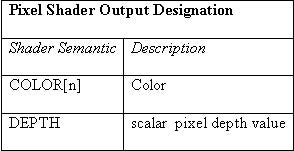

The inputs to the vertex shader function contain the things you'd expect like position, normals, colors, etc. HLSL can also use things like blend weights and indices (used for things like skinning), and tangents and binormals (used for various shading effects). The following tables show the inputs and output for vertex and pixel shaders. The [n] notation indicates an optional index.

The output of vertex shaders hasn't changed from the DirectX 8.1 days. You can have up to two output colors, eight output texture coordinates, the transformed vertex position, and a fog and point size value.

The output from the vertex shader is used to calculate the input for the pixel shaders. Note there is nothing preventing you from placing any kind of data into the vertex shader's color or texture coordinate output registers and using them for some other calculations in the pixel shader. Just keep in mind that the output registers might be clamped and range limited, particularly on hardware that doesn't support 2.0 shaders.

DirectX 8 pixel shaders supported only a single color register to specify the final color of a pixel. DirectX 9 has support for multiple render targets (for example, the back buffer and a texture surface simultaneously) and multi-element textures (typically used to generate intermediate textures used in a later pass). However you'll need to check the CAPS bits to see what's supported by your particular hardware. For more information, check the DirectX 9 documentation. While RenderMonkey supports rendering to a texture on one pass and reading it in another, I'm going to keep the pixel shader simple in the following examples.

Aside from the semantics of the input and output mapping, HLSL gives you a great deal of freedom to create shader code. In fact, HLSL looks a lot like a version of "C" written for graphics. (Which is why NVIDIA calls their "C" like shader language Cg, as in "C-for-Graphics"). If you're familiar with C (or pretty much any procedural programming language) you can pick up HLSL pretty quickly. What is a bit intimidating if you're not expecting it is the graphics traits of the language itself. Not only are there the expected variable types of boolean, integer and float, but there's also native support for vectors, matrices, and texture samplers, as well as swizzles and masks for floats, that allow you to selectively read, write, or replicate individual elements of vectors and matrices.

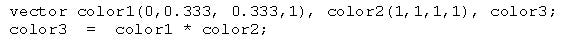

This is due to the single-instruction multiple-data (SIMD) nature of the graphics hardware. Multiplying two variables of type vector results in an element-by-element multiplication, since type vector is an array of four floats. It is the same as writing out four separate multiplications, one each for the x, y, z, and w elements, using the element selection swizzles and write masks to show the individual operations. Since the hardware is designed to operate on vectors, performing an operation on a vector is just as expensive as performing one on a single float. A ps_1_x pixel shader can actually perform one operation on the red-green-blue elements of a vector while simultaneously performing a different operation on the alpha element.
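The two forms of the multiplication described above might be sketched as follows. The original listings are not reproduced in this copy, so the function and variable names here are hypothetical and chosen only for illustration.

```hlsl
// Hypothetical helper: shows that a whole-vector multiply and four
// per-element multiplies produce the same result.
vector MultiplyBothWays(vector a, vector b)
{
   // Whole-vector form: one element-by-element multiply.
   vector c = a * b;

   // Per-element form: swizzles select the source elements on the right,
   // write masks select the destination element on the left.
   vector d;
   d.x = a.x * b.x;
   d.y = a.y * b.y;
   d.z = a.z * b.z;
   d.w = a.w * b.w;

   // c and d hold the same values, so the difference is zero.
   return c - d;
}
```

Here `vector` is used exactly as `float4` would be; the per-element version is shown only to make the semantics visible, not because it is how you would normally write the code.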

In addition to graphics-oriented data types there is also a collection of intrinsic functions that are oriented to graphics, such as dot product, cross product, vector length and normalization functions, etc. The language also supports things like multiplication of vectors by matrices and the like. Talking about it is one thing, but it's much easier to comprehend when you have an example in front of you, so let's start programming.

HLSL with RenderMonkey

When you first open RenderMonkey, you'll be greeted with a blank workspace. The first thing to do is create an Effect Group. To do this, right-click on the Effect Workspace item in the RenderMonkey Workspace view and select Add Effect Group. This will add a basic Effect Group that will contain editable effects elements. If you have the same capabilities as the default group (currently a RADEON 8500, GeForceFX or better) then you'll see a red teapot. If you're running on older hardware (like a GeForce3) then you'll have to edit the pixel shader version in the default effect from ps 1.4 to ps 1.1.

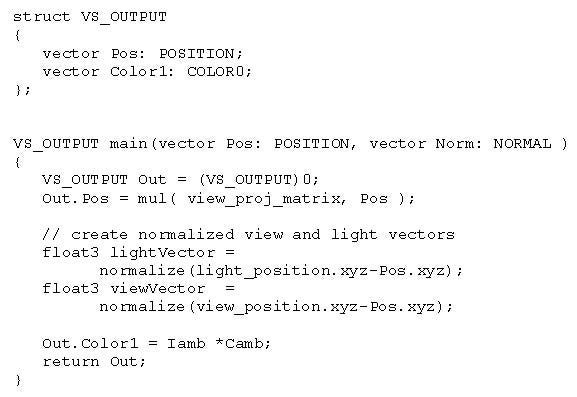

RenderMonkey automatically creates a vertex stream mapping for the positional data of the model, places the view/projection matrix in a shader constant for you, and creates the high-level vertex and pixel shaders for you. The default vertex shader is shown below.

Both the high-level vertex and pixel shader editor windows have three areas. The top area lets you manage the interface between "external" parameters (either RenderMonkey supplied or user-created variables) and the shader and lets you pick the target shader version. The middle area is a read-only area that shows the parameter declaration block used by the HLSL. When you add a parameter to an effect, it will become available as an external parameter, and the parameter declaration block lets you see the association between these parameters and the shader registers. The bottom area contains the actual shader code that you edit directly. In Figure 1, you can see that the RenderMonkey supplied view/projection matrix is mapped to shader constant c0 (c0 through c3 is implied by the float4x4 mapping), and this name is used in the actual vertex shader. These variables can be considered global declarations. The input variables from the vertex stream show up as the parameters to the entry point function, typically called main.

As you can see in Figure 1, RenderMonkey has provided the minimal shader as the default. The default vertex shader transforms the incoming vertex position by the view/projection matrix while the default pixel shader (not shown) sets the outgoing pixel color to red. You can edit the shader code in the lower window until you get the shader you want. To see what the shader looks like, click on the Commit Changes button on the main toolbar (or press F7) to internally save and compile the shader. If the shader has any errors, there will be an informative message displayed in the output pane at the bottom of the RenderMonkey window. If the shader compiled successfully, then you'll immediately see the shader results in the preview window.
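As a rough sketch of the default pair described above (a vertex shader that transforms the position by the view/projection matrix, and a pixel shader that outputs red), something like the following would do. The variable name `view_proj_matrix` and the structure name are assumptions, and the entry points are named `vs_main` and `ps_main` here only so both fit in one listing; in RenderMonkey each lives in its own editor and is typically called main.

```hlsl
// View/projection matrix supplied by the tool; a float4x4 bound to c0
// occupies constant registers c0 through c3.
float4x4 view_proj_matrix : register(c0);

struct VS_OUTPUT
{
   float4 Pos : POSITION;   // transformed vertex position
};

// Minimal vertex shader: transform the incoming position only.
VS_OUTPUT vs_main(float4 inPos : POSITION)
{
   VS_OUTPUT Out;
   Out.Pos = mul(view_proj_matrix, inPos);
   return Out;
}

// Minimal pixel shader: every pixel is opaque red.
float4 ps_main() : COLOR
{
   return float4(1.0, 0.0, 0.0, 1.0);
}
```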

And that's about all you need to know to edit shaders in RenderMonkey! The interface is very intuitive - just about everything can be activated or edited by double-clicking. You can insert nodes to add textures, set render state, or add additional passes with just a few clicks. The documentation for RenderMonkey comes with the RenderMonkey download and is also available on this page, along with a number of documents on using RenderMonkey.

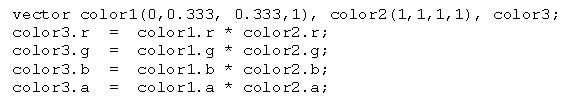

Finally, you'll need to know some internal variables that are available to RenderMonkey, shown in Figure 2. If you add the RenderMonkey names (case sensitive) as variables they'll be connected to the internal RenderMonkey variables. The time-based values are vectors, but all elements are the same value. You can use these to vary values programmatically instead of connecting a variable to a slider.
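To illustrate varying a value programmatically, here is a minimal sketch that pulses the vertex color over time. It assumes a time variable named `time_0_X` and a matrix named `view_proj_matrix`; check the exact (case-sensitive) names against Figure 2 or the RenderMonkey documentation for your version before relying on them.

```hlsl
float4x4 view_proj_matrix;   // assumed predefined name
float4   time_0_X;           // assumed predefined name; all elements hold the same value

struct VS_OUTPUT
{
   float4 Pos   : POSITION;
   float4 Color : COLOR0;
};

VS_OUTPUT vs_main(float4 inPos : POSITION)
{
   VS_OUTPUT Out;
   Out.Pos = mul(view_proj_matrix, inPos);

   // Map sin() from [-1,1] into [0,1] so it is a valid color intensity.
   float pulse = 0.5 + 0.5 * sin(time_0_X.x);
   Out.Color = float4(pulse, 0.0, 0.0, 1.0);
   return Out;
}
```

Because every element of the time vector carries the same value, reading `.x` is an arbitrary choice.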

Writing Modular Code in HLSL

If you've been writing low-level shader code, you probably haven't been thinking about writing modular code. It's tough to think modularly when you don't have any support in the language for any type of control statements. And surprisingly, there's still no actual support for modular code. A shader written in HLSL still compiles to a monolithic assembly shader. However, the HLSL compiler hides a lot of the details and lets you write as if you were writing a modular shader. I mention this because it's easy to get lulled into thinking that you're working with a mature language, not one that's less than a year old. You should be aware of these limitations. There's no support (yet) for recursion. All functions are inlined. Function parameters are passed by value. Statements are always evaluated entirely - there's no short-circuited evaluation as in a C program.

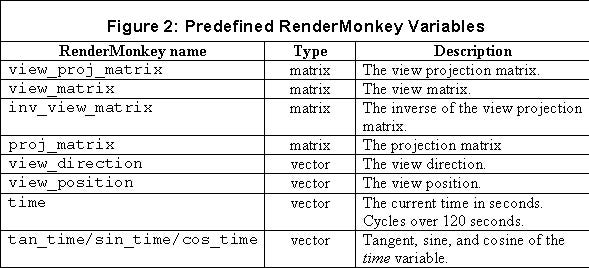

Even with those limitations, it's surprisingly easy to write modular code. In Wolfgang Engel's article, he discussed the lighting equation for computing the intensity of the light at a surface as the contribution of the ambient light, the diffuse light and the specular light.

I've made a slight change by adding in a term for the light color and intensity, which multiplies the contributions from the diffuse and specular terms and by using I for intensity and C for color. Note that the color values are RGBA vectors, so there are actually four color elements that will get computed by this equation. HLSL will automatically do the vector multiplication for us. Wolfgang also created a HLSL shader for this basic lighting equation, so if you're new to HLSL you might want to review what he wrote, since I'm going to build on his example.
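The equation itself does not survive in this copy. One way to write it that is consistent with the description above is given below; only the names Iamb and Camb come from the text, and the remaining subscripts, along with the specular exponent n, are assumed notation.

$$
I = I_{amb}\,C_{amb} + I_{light}\,C_{light}\left[\, I_{diff}\,C_{diff}\,(N \cdot L) + I_{spec}\,C_{spec}\,(R \cdot V)^{n} \,\right]
$$

Here N is the surface normal, L the direction to the light, R the reflection vector, and V the direction to the viewer, all normalized.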

Let's rewrite the basic shader, setting things up so that we can modularize our lighting functions. If I add a color element to the output structure (calling it Color1), we can edit the main function to add in the vertex normal as a parameter from the input stream and write the output color. Insert two variables in the RenderMonkey workspace: Iamb, a scalar for the ambient intensity, and Camb, a color for the ambient color (these correspond to the terms in the equation above). This will allow us to manipulate these variables from RenderMonkey's variable interface. RenderMonkey has a very nice interface that supports vectors, scalars, and colors quite intuitively. To implement the lighting equation we'll need to compute the lighting vector and the view vector, so I added these calculations for later use. The ambient lighting values and light properties (position and color) need to be provided to RenderMonkey by assigning them to variables. The basic vertex shader computes the output color as the product of the ambient intensity and the ambient color.

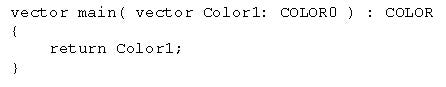

Note that vector is an HLSL native type for an array of four floats; it's the same as writing float4. Also note the use of swizzles when calculating the normalized vectors - this leaves the vector's w element out of the calculation. I also modified the default pixel shader so that it simply returns the (interpolated) color provided by the vertex shader.
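A minimal sketch of the ambient-only shader pair described above follows. Iamb, Camb and Color1 are names from the text; `view_proj_matrix`, `view_position`, `Light1_Position` and the entry point names are assumptions made for illustration.

```hlsl
float4x4 view_proj_matrix;   // assumed name for the view/projection matrix
float4   view_position;      // assumed name for the eye position
float4   Light1_Position;    // hypothetical light position variable
float    Iamb;               // ambient intensity (scalar)
float4   Camb;               // ambient color (RGBA)

struct VS_OUTPUT
{
   float4 Pos    : POSITION;
   float4 Color1 : COLOR0;
};

VS_OUTPUT vs_main(float4 inPos : POSITION, float3 inNormal : NORMAL)
{
   VS_OUTPUT Out;
   Out.Pos = mul(view_proj_matrix, inPos);

   // Computed for later use by the diffuse and specular terms.
   // The .xyz swizzles leave the w element out of the calculation.
   float3 N = normalize(inNormal);
   float3 L = normalize(Light1_Position.xyz - inPos.xyz);
   float3 V = normalize(view_position.xyz - inPos.xyz);

   // Ambient term only, for now.
   Out.Color1 = Iamb * Camb;
   return Out;
}

// Pass-through pixel shader: return the interpolated vertex color.
float4 ps_main(float4 inColor : COLOR0) : COLOR
{
   return inColor;
}
```

N, L and V are unused at this stage, so the compiler will drop them until later terms reference them.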

Functions in HLSL

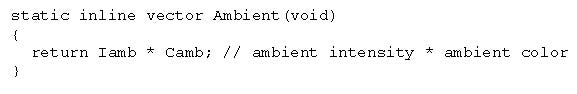

Let's start by making the ambient calculation a function, just to see how it's done in HLSL. It's pretty simple.

The static inline attributes are optional at this point, but I've placed them there to emphasize that currently all functions are inlined, so creating and using a function like this adds no overhead to the shader. This Ambient() function just computes the ambient color and returns it.
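A function of the kind described might look like the following sketch, assuming the Iamb and Camb workspace variables introduced earlier.

```hlsl
float  Iamb;   // ambient intensity
float4 Camb;   // ambient color

// static and inline are optional; they are written out only to stress
// that the compiler inlines every function anyway.
static inline float4 Ambient()
{
   return Iamb * Camb;
}
```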

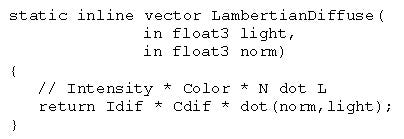

Creating the Diffuse function requires that we pass in the lighting vector and the normal vector. In addition to the argument type description you'd expect to see in a C program, HLSL allows you to specify if a value is strictly input, output or both through the in, out and inout attributes. A parameter that is specified as out or inout will be copied back to the calling code, allowing functions another way to return values. If not specified, in is assumed. Since this diffuse equation is an implementation of what's called a Lambertian diffuse, I've named it as such. The LambertianDiffuse() function looks like this.
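As a sketch of such a function, assuming hypothetical workspace variables `Idiff` and `Cdiff` for the diffuse intensity and color, and normalized input vectors:

```hlsl
float  Idiff;   // hypothetical: diffuse intensity
float4 Cdiff;   // hypothetical: diffuse (surface) color

// N is the surface normal, L the direction from the surface to the light.
// The "in" attribute is the default and is spelled out only for clarity.
static inline float4 LambertianDiffuse(in float3 N, in float3 L)
{
   // Clamp at zero so surfaces facing away from the light get no diffuse.
   float NdotL = max(0.0, dot(N, L));
   return Idiff * Cdiff * NdotL;
}
```

An `out` or `inout` parameter would be declared the same way, for example `out float NdotL`, and its final value is copied back to the caller when the function returns.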

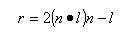

Note the use of the HLSL intrinsic dot product function. The specular equation is taken from Phong's lighting equation and requires calculation of the reflection vector. The reflection vector is calculated from the normalized normal and light vectors.

The dot product of the reflection vector and the view vector is raised to a power that is inversely proportional to the roughness of the surface. This is a more intuitive value than letting a user specify a specular power value. To limit the specular contribution to only the times when the angle between these vectors is less than 90 degrees, we limit the dot product to only positive values. The specular color contribution becomes:
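Written out, the reflection vector and the specular term described above take roughly the following form. The subscripted names and the symbol r for roughness are assumed notation, and the exponent is taken to be exactly 1/r, although the text only says it is inversely proportional to the roughness.

$$
R = 2\,(N \cdot L)\,N - L
$$

$$
\text{specular} = I_{spec}\,C_{spec}\,\bigl[\max(0,\; R \cdot V)\bigr]^{1/r}
$$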

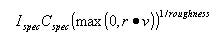

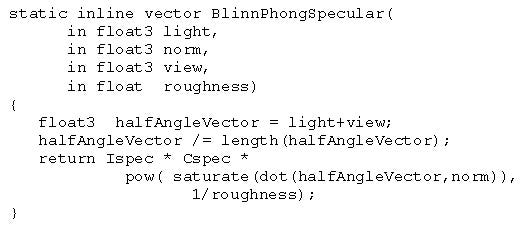

Implementing this in HLSL looks like the following:

Note the use of the intrinsic saturate function to limit the range from the dot product to [0,1]. Roughness is added to the RenderMonkey Effect Workspace and added in the shader editor as a parameter.
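A sketch of a Phong specular function along these lines is shown below. Roughness is the workspace parameter mentioned above; `Ispec`, `Cspec` and the function name are hypothetical, and the exponent is assumed to be simply 1/Roughness.

```hlsl
float  Ispec;       // hypothetical: specular intensity
float4 Cspec;       // hypothetical: specular color
float  Roughness;   // surface roughness; must be greater than zero

// N, L and V are assumed normalized; L and V point away from the surface.
static inline float4 PhongSpecular(in float3 N, in float3 L, in float3 V)
{
   // Reflect the light direction about the normal: R = 2(N.L)N - L
   float3 R = normalize(2.0 * dot(N, L) * N - L);

   // saturate() clamps to [0,1], discarding angles beyond 90 degrees.
   float RdotV = saturate(dot(R, V));

   // Rougher surfaces get a smaller exponent and a broader highlight.
   return Ispec * Cspec * pow(RdotV, 1.0 / Roughness);
}
```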

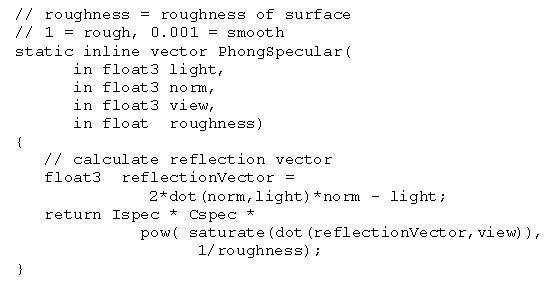

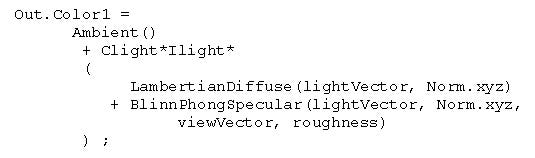

Using these functions we can now implement our main shader function as follows:

The three functions that we added are either placed above the main function or below, in which case you'd need to add a function prototype. As you can see, it's fairly easy to write functional modules in HLSL code.
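Putting the pieces together, a complete vertex shader might be sketched as below. Only Iamb, Camb, Color1 and Roughness are names taken from the text; every other variable and function name is an assumption made for illustration.

```hlsl
float4x4 view_proj_matrix;
float4   view_position;
float4   Light1_Position;
float    Ilight;  float4 Clight;   // light intensity and color
float    Iamb;    float4 Camb;     // ambient
float    Idiff;   float4 Cdiff;    // diffuse
float    Ispec;   float4 Cspec;    // specular
float    Roughness;

float4 Ambient()
{
   return Iamb * Camb;
}

float4 LambertianDiffuse(float3 N, float3 L)
{
   return Idiff * Cdiff * max(0.0, dot(N, L));
}

float4 PhongSpecular(float3 N, float3 L, float3 V)
{
   float3 R = normalize(2.0 * dot(N, L) * N - L);
   return Ispec * Cspec * pow(saturate(dot(R, V)), 1.0 / Roughness);
}

struct VS_OUTPUT
{
   float4 Pos    : POSITION;
   float4 Color1 : COLOR0;
};

VS_OUTPUT vs_main(float4 inPos : POSITION, float3 inNormal : NORMAL)
{
   VS_OUTPUT Out;
   Out.Pos = mul(view_proj_matrix, inPos);

   float3 N = normalize(inNormal);
   float3 L = normalize(Light1_Position.xyz - inPos.xyz);
   float3 V = normalize(view_position.xyz - inPos.xyz);

   // Ambient plus the light-scaled sum of diffuse and specular.
   Out.Color1 = Ambient()
              + Ilight * Clight * (LambertianDiffuse(N, L) + PhongSpecular(N, L, V));
   return Out;
}
```

The helper functions are defined above the entry point here, so no prototypes are needed.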

Finally, Modular Code

The real utility of this comes when we create modules that can replace other modules. For example, suppose that you wanted to duplicate the original functionality of the fixed-function-pipeline, which implemented a particular type of specular called Blinn-Phong. This particular specular lighting equation is similar to Phong's but uses something called the half-angle vector instead of the reflection vector. An implementation of it looks like this:

To change our shader to use Blinn-Phong, all we need to do is change the function we call in main.
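A Blinn-Phong replacement could be sketched as follows, together with a small hypothetical helper showing the changed color computation. Apart from Roughness, the variable and function names are assumptions, and the exponent is again taken to be 1/Roughness.

```hlsl
float  Ilight;  float4 Clight;   // light intensity and color
float  Ispec;   float4 Cspec;    // specular intensity and color
float  Roughness;                // must be greater than zero

// Blinn-Phong uses the half-angle vector H, halfway between the light
// and view directions, in place of the reflection vector.
static inline float4 BlinnPhongSpecular(in float3 N, in float3 L, in float3 V)
{
   float3 H = normalize(L + V);
   float NdotH = saturate(dot(N, H));
   return Ispec * Cspec * pow(NdotH, 1.0 / Roughness);
}

// Hypothetical helper: the same color computation as before, with only
// the specular function swapped. ambient and diffuse are the values
// already computed by the ambient and diffuse functions.
float4 ShadeBlinnPhong(float4 ambient, float4 diffuse,
                       float3 N, float3 L, float3 V)
{
   return ambient + Ilight * Clight * (diffuse + BlinnPhongSpecular(N, L, V));
}
```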

Since all of these functions are inlined, any unused code is optimized out from the shader. As long as there's no reference to a function from main or any of the functions that are called from main, then we can pick which implementation we want in our shader code simply by selecting the functions we want, and we don't have to worry about unused code since it's not included in the compiled shader.

As we get more real-time programmability it becomes easier to implement features that have been in the artist's domain for years. Suppose your art lead creates some really cool scenes that look great in Maya or 3DS Max, but don't look right because the Lambertian diffuse in your engine makes everything look like plastic? Why can't you just render with the same shading options that Maya has? Well, now you can! If your artist really has to have gentler diffuse tones provided by Oren-Nayar diffuse shading, then you can now implement it.

Oren-Nayar Diffuse Shading

One of the problems of the standard Lambertian model is that it considers the reflecting surface as a smooth diffuse surface. Surfaces that are really rough, like stone, dirt, and sandpaper exhibit much more of a backscattering effect, particularly when the light source and the view direction are in the same direction.

The classic example is the full moon, shown in Figure 3. If you look at the picture of the moon, it's pretty obvious that it doesn't follow the Lambertian distribution - if it did, the edges of the moon would be in near darkness. In fact the edges look as bright as the center of the moon. This is because the moon's surface is rough - it is a jumble of dust and rock with diffuse reflecting surfaces at all angles. The quantity of reflecting surfaces is therefore uniform no matter how the surface is oriented to the viewer, and the amount of light reflecting off any point on the surface is nearly the same.

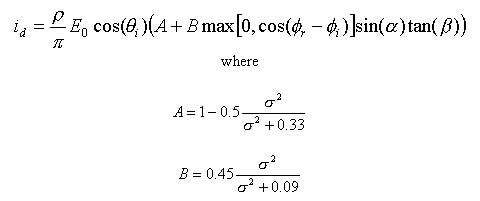

In an effort to better model rough surfaces, Oren and Nayar came up with a generalized version of a Lambertian diffuse shading model that tries to account for the roughness of the surface. They took a theoretical model and simplified it to the terms that had the most significant impact. The Oren-Nayar diffuse shading model looks like this:

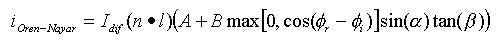

Now this may look daunting, but it can be simplified to something we can appreciate if we replace the original notation with the notation we've already been using. ρ is a surface reflectivity property, which we can replace with our surface color. E0 is a light input energy term, which we can replace with our light color. And the θi term is just our familiar angle between the vertex normal and the light direction. Making these exchanges gives us:

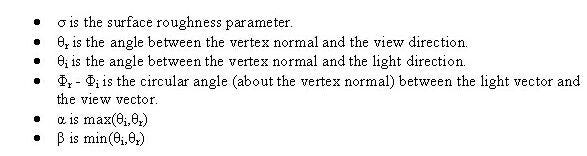

which looks a little easier to compute. There are still some parameters to explain.

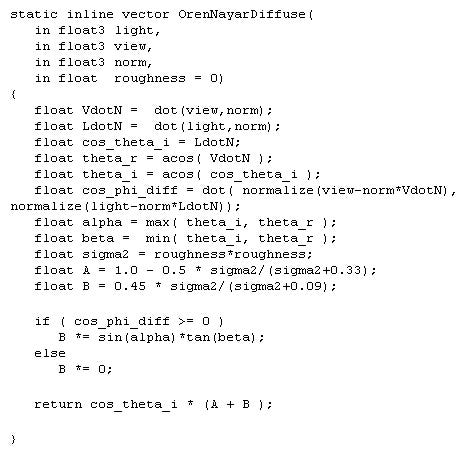
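For reference, the commonly published simplified form of the Oren-Nayar model is reproduced below in the notation of the original paper, since the equation images may not survive in every copy of this article. The symbol σ for the roughness parameter is assumed to correspond to the roughness value discussed here.

$$
L_r = \frac{\rho}{\pi}\,E_0\,\cos\theta_i\,\Bigl(A + B\,\max\bigl[0,\;\cos(\phi_r - \phi_i)\bigr]\,\sin\alpha\,\tan\beta\Bigr)
$$

$$
A = 1 - 0.5\,\frac{\sigma^2}{\sigma^2 + 0.33},\qquad
B = 0.45\,\frac{\sigma^2}{\sigma^2 + 0.09},\qquad
\alpha = \max(\theta_i, \theta_r),\qquad
\beta = \min(\theta_i, \theta_r)
$$

Here θi and θr are the angles the light and view directions make with the normal, and φr − φi is the azimuth angle between them measured around the normal. With σ = 0, A is 1 and B is 0, which leaves only the Lambertian cosine term.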

Note that if the roughness value is zero, the model is the same as the Lambertian diffuse model. Since this model gives a closer visual representation of rough surfaces such as sand, plaster, dirt, and unglazed clay than Lambertian shading, it's become a popular shading model in most 3D graphics modeling packages. With HLSL, it's fairly easy to write your own version of an Oren-Nayar diffuse shader. The shader code is based upon a RenderMan shader written by Larry Gritz. Using this function will probably make the entire shader so long that it requires hardware that supports 2.0 shaders, or that you run on the reference rasterizer.
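The author's listing is not reproduced in this copy. The following is a sketch of an Oren-Nayar diffuse function written directly from the simplified model, not a transcription of the original code. `Idiff`, `Cdiff` and the function name are hypothetical, and the 1/π factor is assumed to be folded into the intensity.

```hlsl
float  Idiff;       // hypothetical: diffuse intensity
float4 Cdiff;       // hypothetical: diffuse (surface) color
float  Roughness;   // sigma in the model; 0 gives plain Lambertian

// N, L and V are assumed normalized; L and V point away from the surface.
float4 OrenNayarDiffuse(in float3 N, in float3 L, in float3 V)
{
   float sigma2 = Roughness * Roughness;
   float A = 1.0 - 0.5  * sigma2 / (sigma2 + 0.33);
   float B =       0.45 * sigma2 / (sigma2 + 0.09);

   // Clamp before acos() to stay inside its valid domain.
   float NdotL = clamp(dot(N, L), -1.0, 1.0);
   float NdotV = clamp(dot(N, V), -1.0, 1.0);
   float thetaI = acos(NdotL);
   float thetaR = acos(NdotV);
   float alpha  = max(thetaI, thetaR);
   float beta   = min(thetaI, thetaR);

   // Project L and V onto the tangent plane; the cosine of the angle
   // between the projections is cos(phi_r - phi_i). Note the projection
   // degenerates when L or V is exactly parallel to N.
   float3 Lproj = normalize(L - N * NdotL);
   float3 Vproj = normalize(V - N * NdotV);
   float cosPhiDiff = max(0.0, dot(Vproj, Lproj));

   return Idiff * Cdiff * max(0.0, NdotL)
        * (A + B * cosPhiDiff * sin(alpha) * tan(beta));
}
```

It takes the same N and L arguments as the Lambertian sketch plus the view vector, so swapping it in is a one-line change in main.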

In most implementations this is paired up with a Phong or Blinn-Phong specular term.

I hope that you're getting the idea that it's pretty easy to write snippets of code for specific purposes and place them in a library. When I was writing my book on shaders I focused on building up a variety of shader subroutines rather than just a collection of stand-alone shaders. As you can see, this approach is very powerful and allows you to pick and choose the pieces that make up the shader to customize the overall effect you want to realize.

Like C, HLSL supports the #include preprocessor directive, but only when compiling from a file - currently RenderMonkey doesn't implement #include. The filename specified can be either an absolute or relative path. If it's a relative path then it's assumed to be relative to the directory of the file issuing the #include. Unlike C, there's no environment variable support, so the angle bracket include notation isn't supported, just the include file name in quotation marks. Once function overloading gets implemented, it will be quick to write shader code that's simple to customize. For now you can use the preprocessor and some #ifdef / #else / #endif directives to #define your own shading equations.
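As a sketch of the preprocessor approach, the following selects a specular model at compile time. The macro names, the function names, and the commented-out include file name are all hypothetical.

```hlsl
// When compiling from a file, shared functions could come from a library:
// #include "lighting_lib.hlsl"   // hypothetical file name, quotes only

// Comment this line out to fall back to Phong.
#define USE_BLINN_PHONG

float  Ispec;
float4 Cspec;
float  Roughness;   // must be greater than zero

float4 PhongSpecular(float3 N, float3 L, float3 V)
{
   float3 R = normalize(2.0 * dot(N, L) * N - L);
   return Ispec * Cspec * pow(saturate(dot(R, V)), 1.0 / Roughness);
}

float4 BlinnPhongSpecular(float3 N, float3 L, float3 V)
{
   float3 H = normalize(L + V);
   return Ispec * Cspec * pow(saturate(dot(N, H)), 1.0 / Roughness);
}

// Map a single name onto whichever implementation was selected.
#ifdef USE_BLINN_PHONG
#define SPECULAR(N, L, V) BlinnPhongSpecular(N, L, V)
#else
#define SPECULAR(N, L, V) PhongSpecular(N, L, V)
#endif

float4 SpecularTerm(float3 N, float3 L, float3 V)
{
   return SPECULAR(N, L, V);
}
```

Since unreferenced functions are optimized out, keeping both implementations in the source adds nothing to the compiled shader.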

Shading Outside the Box

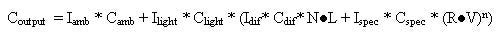

There's no reason to be stuck with the lighting equation that we've been working with. Shaders give you the ability to create whatever shading effect you want and I encourage you to try creating your own lighting equations, either by implementing academic models such as Oren-Nayar, or creating your own. Cel shading is a simple example of non-photo-realistic (NPR) rendering, but there are many, many artistic styles that are starting to show up in computer graphics; just check out the SIGGRAPH proceedings since 1999. You can also look to the real world for inspiration. There's a beautiful example of this type of shading done by ATI to demonstrate the Radeon 9700. In order to duplicate the deep, color-shifting hues seen on metallic paint jobs on cars, ATI created a demo that has (among other effects) a dual-specular highlight term. This creates a highlight of one color surrounded by a highlight of a different color as seen in a closeup of the car's side mirror in Figure 4.

The metallic flakes are from a noise map and the environment mapping finishes off the effect.

As shading hardware becomes more powerful and commonplace you'll start to see more and more creative shading show up in games and then in mainstream applications. The next release of the Windows OS is rumored to be designed to natively support graphics hardware acceleration for the entire desktop, and programmable shading is going to be a big part of that. With the prices of DirectX 9 (and OpenGL 2.0) capable hardware continually dropping, if your current project doesn't incorporate shaders, you haven't investigated HLSL, or the low-level shader language intimidated you, I hope this article has shown you that not only is writing HLSL easy, but with tools like RenderMonkey you can be writing shaders within minutes.

Article Reviewers

The author would like to thank the following individuals for reviewing this article prior to publication: Wolfgang Engel, Randy Fernando, Tadej Fius, Muhammad Haggag, Callan McInally, Jason Mitchell, Harald Nowak, Guimo Rodriguez, and Natasha Tatarchuk.

Resources

RenderMonkey

The executable and documentation for RenderMonkey can be found at www.ati.com/developer/sdk/radeonSDK/html/Tools/RenderMonkey.html

Cg

While not HLSL, it's pretty close. You can learn more about it at http://developer.nvidia.com/Cg, or www.cgshaders.org.

DirectX 9

The Microsoft DirectX 9 documentation is pretty sparse on HLSL, but it's there for you to puzzle out.

Shader Books

For DirectX shaders there's ShaderX by Engel and Real-Time Shader Programming by Fosner. There are two additional ShaderX2 books coming out soon as well. Cg is covered by The Cg Tutorial by Fernando and Kilgard. Real-Time Shading by Olano, et al. is more about current shader research, but it's a useful source of information if you're interested in delving further into the state-of-the-art.

Illumination Texts

Unfortunately most graphics texts gloss over all but the simplest shading models. Most of the older ones can be found in Computer Graphics by Foley, van Dam, et al., with the newer ones in Principles of Digital Image Synthesis, by Glassner. Quite a few of the original papers can be found online as well. The RenderMan Companion by Upstill and Advanced RenderMan by Apodaca and Gritz are really useful sources of inspiration.
