An in-depth technical article in which experienced game programmer Sergio Giucastro analyzes how to get the most accurate and least processor-intensive timing calls on the Android platform.

June 6, 2012

Author: by Sergio Giucastro

The passage of time in interactive software is a frantic phenomenon.

Let's think about an OpenGL ES game running at 60 frames per second (quite common on an average Android smartphone): we have only 16 milliseconds between two frames, which means that all the magic behind the rendering of a frame happens in this time slice.

Between two frames exists a different world, where the concept of time has a totally different meaning; in this world, the only acceptable units of measurement are micro or nanoseconds.

In this world, the developer cannot make any assumption; even the interval between two consecutive frames can vary at any moment -- for example, scenes with more objects will slow down the rendering process and the time interval between two consecutive frames will be longer.

It is important to measure this kind of variation, and the code should handle a variable frame rate in order to keep a constant game speed, so that the user experience stays the same if the frame rate drops from 60 FPS to 30 FPS.

Every game developer knows how important it is to measure time in applications, for example:

to benchmark a routine;

to calculate the FPS of interactive software, in an accurate and possibly efficient way;

to achieve constant game speed independent of variable FPS.
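
As a minimal sketch of the last point, movement can be scaled by the measured frame interval, so that the game speed does not depend on the frame rate. The names `FrameStep` and `advance` are hypothetical and chosen only for illustration; the only real API call is `System.nanoTime()`, and `Thread.sleep(16)` merely stands in for the rendering work of one frame.

```java
/** Hypothetical helper: scales movement by the elapsed time of a frame. */
final class FrameStep {
    private static final double NANOS_PER_SECOND = 1_000_000_000.0;

    /** Returns the new position after moving at speedPerSecond for deltaNanos. */
    static double advance(double position, double speedPerSecond, long deltaNanos) {
        return position + speedPerSecond * (deltaNanos / NANOS_PER_SECOND);
    }

    public static void main(String[] args) throws InterruptedException {
        double x = 0.0;
        long previous = System.nanoTime();
        for (int frame = 0; frame < 5; frame++) {
            Thread.sleep(16);                      // stand-in for rendering one frame
            long now = System.nanoTime();
            x = advance(x, 100.0, now - previous); // 100 units per second
            previous = now;
        }
        System.out.println("position after 5 frames: " + x);
    }
}
```

With this approach, two frames of about 16 ms move an object as far as one frame of about 33 ms, which is what keeps the perceived speed constant when the frame rate drops.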

Choosing the right tool for measuring time can be confusing. Hardware timers exist in every device (and differ from device to device), the Android operating system offers different timers, and finally Java itself offers more than one timer. Each solution has pros and cons, and in this article I will discuss the possible ways of measuring time on an Android device in an accurate and efficient way.

In this article, "measuring time" means interval timing; we are focused mainly on measuring elapsed time between two events.

Game developers have faced this problem since the '80s:

Using the 8253 timer chip (PIT): Michael Abrash, in his masterpiece Graphics Programming Black Book, explained how he could get a 1μs precision, more than 20 years ago!

RDTSC x86 instruction: starting with the Pentium processor, it was possible to get the 64-bit timestamp value using a single instruction that returns the number of clock cycles since the CPU was powered up.

WIN32 API: the good old GetTickCount() and later the high-resolution QueryPerformanceCounter() appeared on the Windows platform.

This is only an incomplete list with a few examples; the point here is that there are two possible ways of measuring time: directly asking the hardware (the old school, dangerous way) or invoking primitives offered by our OS kernel (the safe and responsible way).

Direct hardware access may look like an attractive idea to a hardcore developer, but nowadays, as we will see, there are so many different hardware timers, and sometimes more than one is present on the same device, that it is really difficult to handle them. It is funny that even on the Windows platform, where the developer can easily assume the presence of an x86-compatible CPU, Microsoft recommends using API functions for timing because the RDTSC instruction may no longer work as expected on multi-core systems.

Modern mobile platforms have extremely accurate hardware timers. For example, ARM CPUs like the Cortex A9 (Samsung Galaxy SII) have a 64-bit Global Timer Counter Register, a really accurate, monotonic, non-decreasing clock that is cheap to read. According to the Cortex A9 ARM Cycle Timing document, using the powerful LDM instruction takes only one CPU cycle!

On the Tegra platform you can use exactly the same concept, reading the memory-mapped RTC (Real Time Clock) registers; in the following example from the Tegra RTC driver, two memory read operations are enough to get the 64-bit tick value:

    u64 tegra_rtc_read_ms(void)
    {
        u32 ms = readl(rtc_base + RTC_MILLISECONDS);
        u32 s = readl(rtc_base + RTC_SHADOW_SECONDS);
        return (u64)s * MSEC_PER_SEC + ms;
    }

Timing using the Time Stamp Counter is really fast, but on some platforms it can be dangerous as well: on multi-core systems, or while the system is in a low-frequency/power-saving state, it may lead to errors. You should be aware of this, and on some systems calibrate it against the PIT.

The nightmare begins when you realize that different platforms have different kinds of RTC and that, besides this, a lot of different hardware clocks exist in modern platforms: Time Stamp Counter, Programmable Interval Timer, High Precision Event Timer, ACPI PMT.

Direct access to the hardware means writing primitives specific to a single platform; furthermore, developing ASM code takes more time, is not portable, and is difficult to read for most developers. Finally, the OS can raise an exception if the hardware resource you are trying to access requires supervisor/kernel mode.

In the next section, we will discuss high level, portable solutions offered by the kernel and how to access these from Java.

The clock_gettime() POSIX function provides access to several useful timers with a resolution of nanoseconds. clock_gettime() is the best way of getting an accurate timer in a portable way: it is implemented directly in the kernel and returns the best possible time measurement.

Investigating further, clock_gettime() is defined in the C standard library (libc); Android uses a custom version of this library, called Bionic, which is smaller and faster than GNU libc (glibc). The Bionic library makes a syscall to the kernel (using the syscall number __NR_clock_gettime defined in the syscall table), and the kernel returns the reading of the best timer available on the current hardware.

Android is based on the Linux kernel and, as happens in any system based on kernel 2.6, at boot time it initializes a linked list of the available clock sources; the list is sorted by rating, and the kernel chooses the best clock available. It is also possible to specify the clock source at build time.

You can find out which clock your system is using by reading the contents of the /sys/devices/system/clocksource/clocksource0/current_clocksource and /sys/devices/system/clocksource/clocksource0/available_clocksource files.
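
From Java, these files can be read like any other text file. The sketch below assumes the sysfs paths are readable by the calling process, which may not hold on every device or Android version; `ClockSourceInfo` and `readFirstLine` are hypothetical helper names, not part of any Android API.

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;

/** Hypothetical helper that reads the kernel clock source entries from sysfs. */
final class ClockSourceInfo {
    static final String DIR = "/sys/devices/system/clocksource/clocksource0/";

    /** Returns the trimmed first line of the file, or null if it cannot be read. */
    static String readFirstLine(String path) {
        try (BufferedReader reader = new BufferedReader(new FileReader(path))) {
            String line = reader.readLine();
            return line == null ? null : line.trim();
        } catch (IOException e) {
            return null; // missing file or insufficient permissions
        }
    }

    public static void main(String[] args) {
        System.out.println("current:   " + readFirstLine(DIR + "current_clocksource"));
        System.out.println("available: " + readFirstLine(DIR + "available_clocksource"));
    }
}
```

Returning null instead of throwing keeps the caller simple on devices where the files are missing or protected.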

On a Galaxy SII the clock source is mct-frc, the local timer included in the Exynos4210 system-on-a-chip (SoC); on an LG Optimus One the current timer is dg_timer, the debug timer (DGT) that, together with the general-purpose timer (GPT), is an ARM11 performance counter.

The following table lists the available and current clock sources on some Android phones:

| | Samsung Galaxy S 2 | | | | | |
|---|---|---|---|---|---|---|
| Available clocksource | mct-frc | dg_timer gp_timer | clock_source_systimer | gp_timer dg_timer | 32k_counter | dg_timer gp_timer |
| Current clocksource | mct-frc | dg_timer | clock_source_systimer | gp_timer | 32k_counter | dg_timer |

As you can see from the previous table, there are so many different hardware timers that it could be a good idea to use kernel primitives to access timing information!

A similar primitive is gettimeofday() (__NR_gettimeofday in the syscall table), which uses the same back end as clock_gettime() starting from kernel 2.6.18. POSIX.1-2008 marks gettimeofday() as obsolete, recommending the use of clock_gettime() instead.

Getting access to a hardware timer through a system call requires a switch from user to kernel/supervisor mode; this switch can be expensive, and its cost can vary from platform to platform.

For example, on SH or x86 CPUs, gettimeofday() and clock_gettime() are both implemented as vsyscalls, a mechanism that on modern Linux kernels avoids crossing the boundary between user mode and kernel mode.

In this case, the Bionic library will call kernel functions (gettimeofday() and clock_gettime()) that are mapped in a kernel page executable with userspace privileges; vsyscalls allow access to hardware timers without the overhead of a user-to-kernel mode switch.

So on systems with SH or x86 CPUs, the primitive clock_gettime() can be even faster than direct hardware access, and in all other cases (as ARM CPUs actually represent the bigger part of the market), for a little overhead we have a really portable and accurate solution.

In this article we will assume that clock_gettime() is the best possible primitive to get tick count in Android using native code.

Java does not offer the clock_gettime() function unless you develop native applications in C or use JNI to call native code. So what is the state of the art on Android for measuring time?

I am going to analyze and test five different solutions:

SystemClock.uptimeMillis()

System.currentTimeMillis()

SystemClock.currentThreadTimeMillis()

System.nanoTime()

SystemClock.elapsedRealtime()

We should consider, for each possible solution:

Resolution/Accuracy. It is obvious that we want to measure the time in the most accurate way.

Overhead. If we are simply benchmarking, we can always cut it out in production code, but if we need to measure the time for other purposes, for example to know the FPS rate of an interactive game, in order to make the animations on the screen FPS-independent, we really need to find a way to measure the time in an efficient manner.
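
For the FPS case, one low-overhead approach is to read the clock only once per frame and average over a time window. This is only a sketch: `FpsCounter` and `onFrame` are hypothetical names, and the timestamp is passed in by the caller, so any nanosecond clock (for example `System.nanoTime()`) can feed it.

```java
/** Hypothetical FPS counter: one clock read per frame, averaged over a window. */
final class FpsCounter {
    private final long windowNanos;
    private boolean started;
    private long windowStart;
    private int frames;
    private double fps;

    FpsCounter(long windowNanos) {
        this.windowNanos = windowNanos;
    }

    /** Call once per frame with a timestamp in nanoseconds; returns the latest estimate. */
    double onFrame(long nowNanos) {
        if (!started) {              // the first frame only opens the window
            started = true;
            windowStart = nowNanos;
            return fps;
        }
        frames++;
        long elapsed = nowNanos - windowStart;
        if (elapsed >= windowNanos) { // window complete: publish and restart
            fps = frames * 1_000_000_000.0 / elapsed;
            frames = 0;
            windowStart = nowNanos;
        }
        return fps;
    }
}
```

In a render loop this would be called as `counter.onFrame(System.nanoTime())`; the estimate stays at 0.0 until the first window has elapsed.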

In the following paragraphs we are going to test, benchmark, and discuss these functions, in hopes of finding the best solution.

The Android API documentation (for example, for uptimeMillis()) and the Cortex-A9 Technical Reference Manual (regarding the TSC counter) assure you that "this clock is guaranteed to be monotonic".

A monotonic clock is a clock that generates a monotonic sequence of numbers as a function of time; a sequence $(a_n)$ is monotonically increasing if $a_{n+1} \geq a_n$ for all $n \in \mathbb{N}$.

So a monotonic sequence looks like this: 1, 2, 2, 2, 3, 4, 5... That means that subsequent calls to a primitive like uptimeMillis() can return the same value several times.

So using a monotonic clock we can be really sure that no value will be smaller than the previous one; but in real hardware it is impossible to implement a perfect monotonic clock, and sooner or later the clock will be reset, or the sequence will wrap around. Even 64-bit variables have a limit! So uptimeMillis() will be reset after 292471209 years; nanoTime() will be reset after 292 years.
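
The two figures can be checked with a few lines of arithmetic. The sketch below assumes a signed 64-bit counter and a 365-day year; `WrapAround`, `yearsUntilOverflow` and `elapsed` are hypothetical names. It also shows why intervals should be computed as a difference (`end - start`) rather than by comparing raw values: with two's-complement arithmetic the subtraction stays correct even if the counter wraps between the two reads.

```java
/** Hypothetical helper for the 64-bit wrap-around arithmetic. */
final class WrapAround {
    /** Years until a signed 64-bit counter overflows at the given tick rate. */
    static double yearsUntilOverflow(double ticksPerSecond) {
        double secondsPerYear = 365.0 * 24 * 60 * 60; // assumes a 365-day year
        return Long.MAX_VALUE / ticksPerSecond / secondsPerYear;
    }

    /** Elapsed ticks; the subtraction stays correct across a single wrap. */
    static long elapsed(long start, long end) {
        return end - start;
    }

    public static void main(String[] args) {
        System.out.println("milliseconds: " + Math.round(yearsUntilOverflow(1e3)) + " years");
        System.out.println("nanoseconds:  " + (long) yearsUntilOverflow(1e9) + " years");
        // One read just before the wrap, one just after it: still 10 ticks apart.
        System.out.println(elapsed(Long.MAX_VALUE - 5, Long.MIN_VALUE + 4));
    }
}
```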

For practical purposes, we don't care about the reset problem, but we are really interested in the idea that more values can be equal to each other, for evaluating the timer granularity.

We can evaluate the clock granularity by measuring the difference between a value and the first different value in the sequence: for example, if the sequence is 1, 1, 1, 1, 50, 50, 50, the granularity will be 49. With the following code we can measure the granularity; we can reduce the error by repeating the test multiple times and taking the smallest value:

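    long start = System.nanoTime();
    long end;
    while (true) {
        end = System.nanoTime();
        if (end != start)   // first reading that differs from the starting one
            break;
    }
    long diff2 = end - start;   // observed granularity, in nanoseconds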
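
The loop above measures a single step. A sketch of the repeated version, which keeps the smallest observed step, could look like the following. `Granularity` and `measure` are hypothetical names, and the clock is passed in as a `LongSupplier` (an assumption that requires Java 8 or later), so the same loop can be pointed at `System.nanoTime()`, `System.currentTimeMillis()` or, on Android, the `SystemClock` methods.

```java
import java.util.function.LongSupplier;

/** Hypothetical helper: measures the granularity of an arbitrary clock. */
final class Granularity {
    /** Smallest observed step between two different consecutive readings. */
    static long measure(LongSupplier clock, int repetitions) {
        long smallest = Long.MAX_VALUE;
        for (int i = 0; i < repetitions; i++) {
            long start = clock.getAsLong();
            long end;
            do {
                end = clock.getAsLong();   // spin until the clock value changes
            } while (end == start);
            smallest = Math.min(smallest, end - start);
        }
        return smallest;
    }

    public static void main(String[] args) {
        System.out.println("nanoTime (ns): " + measure(System::nanoTime, 100));
        System.out.println("currentTimeMillis (ms): " + measure(System::currentTimeMillis, 100));
    }
}
```

Taking the minimum filters out runs in which the thread was preempted between two reads, which would otherwise inflate the result.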

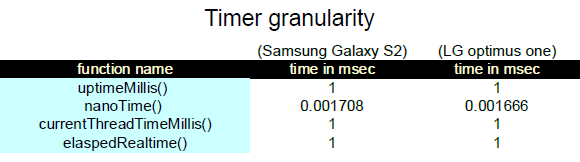

In the following table you can find the granularity measured on two completely different phones:

nanoTime() has a granularity that is several orders of magnitude better than the other timing functions; we can say it is 1000 times more accurate than all the other functions (ns vs. ms), and its resolution is 100 times higher.

Now we know how the different timing methods perform in terms of granularity and resolution. We should run some benchmarks in order to decide which one is the best possible timing primitive.

Looking at the Android source code, we know that three timing primitives (SystemClock.uptimeMillis(), SystemClock.currentThreadTimeMillis(), System.nanoTime()) rely on clock_gettime();

System.currentTimeMillis() invokes the gettimeofday() primitive, which shares the same back end as clock_gettime(). Shall we assume the same performance for all the different primitives?

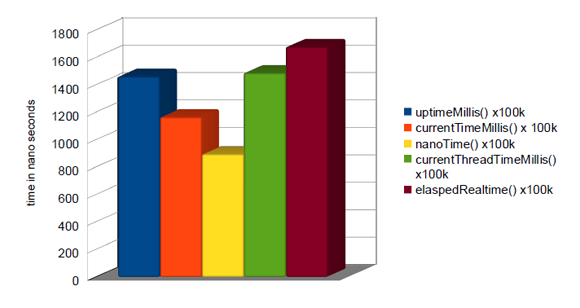

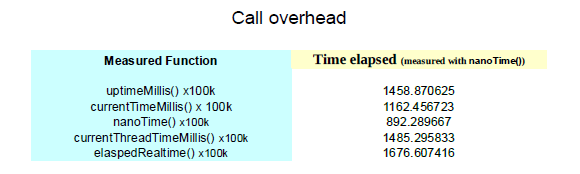

In the following graph you can see the results of some benchmarking; each method is called 100k times, and the elapsed time is measured using nanoTime().

Looking at the benchmarking results, it's clear that nanoTime() performs better.

This analysis is valid as a general rule of thumb; if timing performance on a specific platform really matters to you, please benchmark! A lot of variables are involved in reading the hardware clock through kernel primitives; never assume!
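
A benchmark of this kind can be sketched as follows; it is not the original test code. `TimerBenchmark` and `averageCostNanos` are hypothetical names, and the example only exercises the two clocks available on a plain JVM. On Android, `SystemClock::uptimeMillis` and the other `SystemClock` methods could be passed in the same way. Going through a `LongSupplier` adds a small constant cost to every call, so the numbers are best read relative to each other.

```java
import java.util.function.LongSupplier;

/** Hypothetical micro-benchmark for timing primitives. */
final class TimerBenchmark {
    private static volatile long sink; // keeps the JIT from discarding the calls

    /** Calls the clock `calls` times; returns the average cost per call in ns. */
    static double averageCostNanos(LongSupplier clock, int calls) {
        long sum = 0;
        long start = System.nanoTime();
        for (int i = 0; i < calls; i++) {
            sum += clock.getAsLong();
        }
        long elapsed = System.nanoTime() - start;
        sink = sum;
        return (double) elapsed / calls;
    }

    public static void main(String[] args) {
        int calls = 100_000; // same call count as in the article
        averageCostNanos(System::nanoTime, calls);           // warm-up run
        averageCostNanos(System::currentTimeMillis, calls);  // warm-up run
        System.out.println("nanoTime:          " + averageCostNanos(System::nanoTime, calls) + " ns/call");
        System.out.println("currentTimeMillis: " + averageCostNanos(System::currentTimeMillis, calls) + " ns/call");
    }
}
```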

We can definitely use nanoTime() for measuring elapsed time on Android devices. If we are developing native applications, clock_gettime() will give us the same results with less overhead. If we really want to reduce this overhead further, the two possible solutions are:

make direct system calls

directly access the hardware timer

In any case, please remember that after an uptime of 292 years, the user can be disappointed by the timer reset!
