
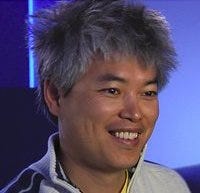

In this feature interview reprinted from the December issue of Game Developer magazine, editor-in-chief Brandon Sheffield speaks to Bungie senior graphics engineer Hao Chen about how Halo creator Bungie plans to solve problems for its new IP and the next generation.

Halo has been a defining series for the Xbox platforms, not only as the platform's first major system-seller but also as a series known for pushing the limits of what the console can do visually. Much of that visual punch comes from the graphics engineering side, subtly decoupled from the artists. Halo's look is unique and well-defined, and with each iteration the graphics team gives it greater depth and fidelity.

Hao Chen is Bungie's senior graphics engineer. As the team works on its newest project, the first to go multi-platform, it has encountered new challenges and confronted a whole host of older issues that many companies previously considered solved. As Bungie prepares not only for its leap onto non-Microsoft platforms but also for a transition into the next generation, we spoke in depth with Chen about the future of graphics on consoles.

Let's talk about the pros and cons of megatextures. Do you use them?

Hao Chen: We don't use them right now. There are two main interpretations of "megatexture." One is to store a giant texture, say 16k by 16k, and use a combination of hardware, OS, and client side streaming to allow you to map that big texture to your world.

This is cool, but we already have similar technology in places where that would be needed. For example, this is great for terrain, but we already have a very good terrain solution that uses a great LOD and paging scheme to allow us to do large and high fidelity terrain.

The other interpretation of megatexture, or the so-called sparse texture, is great for things that only have valid data in small parts of a texture. For example, if you paint a mask on terrain, then you will only have valid data where the paintbrushes touched, and everywhere else it is useless data.

Sparse textures allow us to represent these textures very efficiently without wasting precious storage and bandwidth on useless data. Another cool application of sparse texture that we are excited about is shadows. If we can render to sparse textures, then it's possible that we can render very high resolution shadows to places that need them and still be efficient.
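To make the sparse-texture idea concrete, here is a minimal CPU-side sketch, assuming a painted terrain mask split into fixed-size tiles with a page table that allocates a tile only when a brush first touches it. Real partially resident textures do this in GPU page tables; the tile size, the `SparseMask` class, and its methods are hypothetical illustrations, not Bungie's implementation.

```python
TILE_SIZE = 128  # texels per tile edge (hypothetical value)


class SparseMask:
    """8-bit single-channel mask stored as sparse tiles."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        # Page table: (tile_x, tile_y) -> tile texels; a missing key means "never painted".
        self.tiles = {}

    def _locate(self, x, y):
        key = (x // TILE_SIZE, y // TILE_SIZE)
        offset = (y % TILE_SIZE) * TILE_SIZE + (x % TILE_SIZE)
        return key, offset

    def write(self, x, y, value):
        """Write one texel (0-255), allocating its tile on first touch."""
        key, offset = self._locate(x, y)
        if key not in self.tiles:
            self.tiles[key] = bytearray(TILE_SIZE * TILE_SIZE)
        self.tiles[key][offset] = value

    def read(self, x, y):
        """Texels in unallocated tiles read back as 0 ("no paint here")."""
        key, offset = self._locate(x, y)
        tile = self.tiles.get(key)
        return 0 if tile is None else tile[offset]

    def resident_bytes(self):
        return len(self.tiles) * TILE_SIZE * TILE_SIZE

    def dense_bytes(self):
        return self.width * self.height


# A short brush stroke on a 16k x 16k terrain mask touches only four tiles.
mask = SparseMask(16384, 16384)
for x in range(1000, 1300):
    mask.write(x, 2000, 255)
print(mask.resident_bytes(), "bytes resident vs", mask.dense_bytes(), "dense")
```

The stroke costs 64 KiB of tile storage instead of the 256 MiB a dense 16k x 16k mask would need, which is the storage and bandwidth saving Chen describes.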

A disadvantage of megatextures is that on a console, we typically already know exactly what the hardware is doing, and we have very very tight control of where the resources go. So we could do a lot of things [that are similar to the benefits provided by] megatextures already on consoles, that you can't do on PC. So I guess there wouldn't really be a disadvantage, it's just that the coolness of having a texture that is automatically managed is less relevant in the console than it is on the PC.

I've certainly seen how even today people are having a lot of trouble rendering shadows without a lot of blockiness or dithering.

HC: That's kind of the problem with computer game graphics these days. A lot of things people consider solved problems are actually quite far from being solved, and shadows are one of them. After all these years we don't have a very satisfactory shadow solution. They're improving; every generation of games they're improving, but they're nowhere near the perfect solution that people thought we already had.

What do you think might be the answer? Your potential megatexture solution, or something else?

HC: We are still far from seeing perfect shadows. Shadows are a byproduct of lighting. All-frequency shadows (shadows that are hard and soft in all the right places) are a byproduct of global illumination, and these things are notoriously hard to do in real time.

There's just not enough machine power, even in today's generation or the next generation, to be able to capture that kind of fidelity. There are also inherent limitations to the current techniques, such as shadow maps, for example. When the light is near the glancing angle of a shadow receiver, then it is impossible to do the correct thing.
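One way to see the glancing-angle problem is to ask how much receiver surface a single shadow-map texel covers. The sketch below assumes a directional light (parallel rays) and measures the angle between the receiver's normal and the direction toward the light; the footprint grows as 1/cos(angle), so near grazing angles one texel smears across a huge stretch of surface. It is a simplified model of one source of shadow-map aliasing, and the function name is hypothetical.

```python
import math


def texel_footprint(texel_world_size, angle_deg):
    """Length of receiver surface covered by one shadow-map texel.

    Assumes a directional light; angle_deg is the angle between the
    receiver's normal and the direction toward the light.
    """
    cos_theta = math.cos(math.radians(angle_deg))
    if cos_theta <= 0.0:
        return math.inf  # surface faces away from the light: no usable samples
    return texel_world_size / cos_theta


# A 5 cm texel at increasingly glancing angles.
for angle in (0, 60, 85, 89.9):
    print(angle, round(texel_footprint(0.05, angle), 3))
```

Head-on, a 5 cm texel covers 5 cm; at 85 degrees it already covers more than half a metre, which no amount of filtering can turn into a crisp shadow edge.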

With the current state-of-the-art shadow techniques we can manage the resolution much better, and we can do high-quality filtering, but we still have a long way to go to get where we need to be, even if we just talk about hard shadows from direct illumination.

I think megatextures could help, but still fundamentally there are things you cannot solve with our current shadow meshes. And until the performance supports real-time ray tracing and global illumination, we're going to continue seeing hack after hack for rendering shadows. Every year we see a few new hacks of current techniques, and with each hack, we see a little bit of improvement on the quality.

How about the related issue of removing jaggies from edges? I've seen a lot of discussion recently about different methodology to remove some of the jagginess as we get into more high definition displays and things like that.

HC: I think that's very, very critical. It's one of the big emphases of our current graphics engine: removing what we call the "digital artifacts." Jaggies are definitely one of them, so we place a fairly high emphasis on removing this kind of aliasing, including temporal aliasing. And again, this is an area where we see a lot of recent research, and some of the new techniques look much better; we're definitely looking at a few of them right now.

What looks most interesting to you?

HC: I think all of the morphological anti-aliasing (MLAA) techniques, especially some of the latest variations of MLAA for deferred rendering engines. Some recent techniques are fast enough even for the current generation of consoles.
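Morphological AA works on the final image: it finds edges, classifies their shapes, and blends pixels along them according to estimated coverage. The sketch below shows only that first stage, edge detection on luma, as many GPU variants do; the threshold, the Rec. 601 weights, and the function names are assumptions, and the pattern-classification and blending passes are omitted.

```python
LUMA_THRESHOLD = 0.1  # assumed contrast threshold


def luma(rgb):
    """Perceptual brightness using Rec. 601 weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def detect_edges(image):
    """Return (left, top) boolean grids marking pixels whose luma differs
    sharply from the neighbour to the left / above."""
    h, w = len(image), len(image[0])
    left = [[False] * w for _ in range(h)]
    top = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            l = luma(image[y][x])
            if x > 0 and abs(l - luma(image[y][x - 1])) > LUMA_THRESHOLD:
                left[y][x] = True
            if y > 0 and abs(l - luma(image[y - 1][x])) > LUMA_THRESHOLD:
                top[y][x] = True
    return left, top


# 4x4 image: black in columns 0-1, white in columns 2-3.
BLACK, WHITE = (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)
image = [[BLACK, BLACK, WHITE, WHITE] for _ in range(4)]
left, top = detect_edges(image)
```

Because this pass needs only the final color buffer, it fits naturally with deferred renderers, where hardware MSAA is awkward and expensive.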

But there's also the notion of decoupling sampling from shading in some of the recent papers from ATI, NVIDIA, and others, which is also very interesting. That's about getting higher-quality AA without having to pay for the extra storage. As you know, storage and bandwidth on a console are always at a premium.

There are new techniques that allow us to achieve higher quality anti-aliasing, something like the equivalent of 8x or 16x MSAA, but which only requires 2x or 4x storage. There are also other things we can do to make the game look smoother, like motion blur, better filtering, and better lighting and material.
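A quick back-of-the-envelope calculation shows why storing every sample is so costly. This sketch assumes a 1280x720 render target where each sample stores 4 bytes of RGBA8 color and 4 bytes of depth/stencil; it ignores compression and the small cost of any extra coverage information, so the numbers are illustrative only.

```python
MIB = 1024 * 1024


def msaa_bytes(width, height, samples, bytes_per_sample=8):
    """Framebuffer size when every sample stores color + depth.
    Assumes 4-byte RGBA8 color + 4-byte depth/stencil per sample."""
    return width * height * samples * bytes_per_sample


for s in (1, 2, 4, 8, 16):
    print(f"{s:>2}x: {msaa_bytes(1280, 720, s) / MIB:6.1f} MiB")
```

Under these assumptions, 16x storage comes to about 112.5 MiB versus about 28.1 MiB at 4x, so a technique that delivers 16x-like edge quality at 4x storage saves roughly 84 MiB, a large slice of a console's memory.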

I also expect quality and performance improvements to continue, perhaps by taking advantage of GPGPU computing. Hopefully, with all this, jaggies will be reduced to the point where you have to look very hard to find them in the next generation of games.

How about that sort of texture pop effect that has continued to plague pretty much every game with more complicated normal maps as they're paged in from the disc? How are you dealing with that?

HC: Well, that's more or less a resource management issue. Again, it comes down to dealing with a limited amount of memory. There are ways to make it less noticeable, and they all have to do with cleverly blending the texture transition, or morphing into the new textures as they are paged in. Generally higher performance also allows you to hide the pops until objects are further away, or to better predict which textures will be needed. But the cold reality is that there's a limited amount of memory on the console, so we need to page things in and out.
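The blending approach can be sketched as a time-based crossfade: keep sampling the already-resident low-resolution mip, and when the high-resolution data arrives, ramp a blend weight from 0 to 1 over a short window instead of switching in one frame. The fade duration and the `StreamedTexture` class are hypothetical; an engine would do the blend in the shader, with a per-texture weight supplied by the streaming system.

```python
FADE_SECONDS = 0.5  # assumed crossfade duration


def lerp(a, b, t):
    return a * (1.0 - t) + b * t


class StreamedTexture:
    def __init__(self):
        self.high_res_arrival = None  # time the high-res mip became resident

    def on_high_res_loaded(self, now):
        self.high_res_arrival = now

    def blend_weight(self, now):
        """0.0 = only the low-res mip, 1.0 = only the high-res mip."""
        if self.high_res_arrival is None:
            return 0.0
        t = (now - self.high_res_arrival) / FADE_SECONDS
        return max(0.0, min(1.0, t))

    def sample(self, low_res_texel, high_res_texel, now):
        return lerp(low_res_texel, high_res_texel, self.blend_weight(now))


tex = StreamedTexture()
before = tex.sample(0.2, 0.8, now=1.0)  # high-res not loaded yet
tex.on_high_res_loaded(1.0)
midway = tex.blend_weight(1.25)         # halfway through the fade
after = tex.sample(0.2, 0.8, now=3.0)   # fade complete
```

The pop is still there in principle; it is just spread over enough frames that the eye reads it as a gradual sharpening.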

For a very large world, we also need level-of-detail management so we don't draw everything at the same fidelity everywhere; these are the main causes of "popping." But texture differences are typically only a small part of this: changes in the geometry silhouette and differences in lighting and shading between LODs often contribute the most jarring popping.

To combat this, we have a very clever LOD generation system that tries to preserve both the geometry silhouette and the material appearance. You might have seen our GDC presentation last year on our imposter system, which covered some of this, and we're still improving it. But still, when you want much larger worlds with much more content, you have to page things in. So for now it's just one hack that's probably going to be better than the other hack.
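A common companion to good LOD generation is selecting LODs with hysteresis, so an object hovering near a distance threshold does not flip back and forth and pop repeatedly. This is a generic technique, not a description of Bungie's imposter system; the thresholds, the band width, and the function names are assumptions. It does nothing about the silhouette and shading differences Chen mentions, it only keeps each switch from happening more often than necessary.

```python
LOD_DISTANCES = [20.0, 50.0, 120.0]  # beyond LOD_DISTANCES[i], use LOD i + 1 (assumed)
HYSTERESIS = 5.0                      # width of the dead band, in metres (assumed)


def lod_for(distance):
    """Ideal LOD index for a distance, ignoring history."""
    return sum(1 for d in LOD_DISTANCES if distance > d)


def select_lod(distance, current_lod):
    # Only coarsen once we are clearly past a threshold...
    coarser = lod_for(distance - HYSTERESIS)
    # ...and only refine once we are clearly back inside it.
    finer = lod_for(distance + HYSTERESIS)
    if coarser > current_lod:
        return coarser
    if finer < current_lod:
        return finer
    return current_lod  # inside the dead band: keep the current LOD
```

For example, an object at LOD 0 stays at LOD 0 at 22 m, switches to LOD 1 at 26 m, and then stays at LOD 1 until it comes back inside 15 m.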

How much time in your department do you have to spend being concerned about game performance? Can you push the boundaries as much as possible with your effects and then get reined back in, or do you have to be constantly on top of memory management and that sort of thing?

HC: You're talking about two things. One is performance, one is memory. But they kind of follow similar patterns. Our teams are typically very involved in both performance and memory, because we are the largest consumer of both.

Typically, the way that we handle performance is we want a game to be within [a performance] ballpark around milestones. So for each milestone we set a performance target and say by this milestone, we have to be within this ballpark of this performance number. And then at the end of the milestone we will have very formal performance reviews, where we go through each level and find the performance bottlenecks and then assign teams and individuals to track those performance bottlenecks and improve performance that way.

That's the typical process we go through. But obviously a lot of performance comes from how things are designed. If you design something to be low-performance, then typically you're going to be low-performance all the way until the end. A lot of our design decisions already factor in performance from very early on. We try to solve the performance issues more as a design problem, not as a hardware optimization problem.
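The milestone performance review described above can be pictured as a simple pass over per-level profiling data: total each level's frame time, flag levels outside the ballpark of the target, and name the most expensive system as the bottleneck to assign. The data layout, the 30 fps target, the tolerance parameter, and every number here are hypothetical, chosen only to show the shape of such a report.

```python
def review(levels, target_ms, tolerance_ms=0.0):
    """levels: {level_name: {system_name: milliseconds}}.
    Returns (level, total_ms, bottleneck_system) for each level over target."""
    findings = []
    for level, systems in sorted(levels.items()):
        total = sum(systems.values())
        if total > target_ms + tolerance_ms:
            bottleneck = max(systems, key=systems.get)
            findings.append((level, round(total, 2), bottleneck))
    return findings


TARGET_MS = 1000.0 / 30.0  # 33.3 ms per frame for a 30 fps game (assumed target)

measured = {
    "level_a": {"gpu_lighting": 14.0, "gpu_shadows": 9.5, "gpu_post": 6.0},
    "level_b": {"gpu_lighting": 12.0, "gpu_shadows": 15.5, "gpu_post": 7.5},
}
print(review(measured, TARGET_MS))
```

The `tolerance_ms` parameter stands in for the "ballpark": loosening it early in production lets a level slightly over budget pass without a formal finding.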

So in terms of memory, it follows a similar pattern. We typically have an agreed-upon budget very early in the game, and then we try to make the team live by that budget, especially the content people. And every time they make a level there's going to be a content report that tells us where they're using memory, and where they exceeded their memory budget. Then we take early enforcement action to make sure we're within ballpark.

But performance and memory are some of those things that depending on the stage of your game, you cannot be overly strict about them. At the earlier stage you want people to put more content in so they can get a feel for the game and explore the look of the game. As long as they're within ballpark I think we'll be okay. So we try not to be too draconian about performance and memory numbers too early.
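The per-level content report for memory follows the same pattern: compare each category's usage against the agreed budget and list the overages. The categories, budget sizes, and usage figures below are invented for illustration; a real report would be generated by the build tools from the level's actual assets.

```python
MIB = 1024 * 1024

# Agreed-upon per-level budget (hypothetical numbers).
BUDGET = {"textures": 180 * MIB, "geometry": 60 * MIB, "audio": 40 * MIB}


def content_report(usage, budget=BUDGET):
    """Return {category: bytes_over_budget} for every category that exceeds it."""
    over = {}
    for category, limit in budget.items():
        used = usage.get(category, 0)
        if used > limit:
            over[category] = used - limit
    return over


level_usage = {"textures": 195 * MIB, "geometry": 52 * MIB, "audio": 41 * MIB}
for category, excess in content_report(level_usage).items():
    print(f"{category}: {excess / MIB:.1f} MiB over budget")
```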

I don't know if you have been keeping up on advances in voxelization...

HC: Voxels are very, very interesting to us. For example, we voxelize our levels and then build the portalization and clustering of our spaces based on that voxelization. What voxelization does is hide all the small geometry details, and with a regular data structure it's much easier to reason about the space than when dealing with individual polygons.
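A toy version of the voxelize-then-cluster step is sketched below. To keep it short it assumes the level geometry is a list of axis-aligned boxes in voxel units (real levels would be triangle meshes voxelized by a conservative rasterizer), then labels 6-connected regions of empty voxels with a breadth-first flood fill. Each region is a candidate "space"; placing portals between spaces is not shown, and all names are hypothetical.

```python
from collections import deque

NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def voxelize_boxes(boxes, dims):
    """Mark voxels covered by axis-aligned boxes as solid.
    boxes: list of ((x0, y0, z0), (x1, y1, z1)) in voxel units, max exclusive."""
    nx, ny, nz = dims
    solid = [[[False] * nz for _ in range(ny)] for _ in range(nx)]
    for (x0, y0, z0), (x1, y1, z1) in boxes:
        for x in range(max(0, x0), min(nx, x1)):
            for y in range(max(0, y0), min(ny, y1)):
                for z in range(max(0, z0), min(nz, z1)):
                    solid[x][y][z] = True
    return solid


def cluster_spaces(solid):
    """Label 6-connected regions of empty voxels; return (labels, count)."""
    nx, ny, nz = len(solid), len(solid[0]), len(solid[0][0])
    labels = [[[-1] * nz for _ in range(ny)] for _ in range(nx)]
    count = 0
    for sx in range(nx):
        for sy in range(ny):
            for sz in range(nz):
                if solid[sx][sy][sz] or labels[sx][sy][sz] != -1:
                    continue
                labels[sx][sy][sz] = count
                queue = deque([(sx, sy, sz)])
                while queue:  # flood-fill this empty region
                    x, y, z = queue.popleft()
                    for dx, dy, dz in NEIGHBOURS:
                        a, b, c = x + dx, y + dy, z + dz
                        if (0 <= a < nx and 0 <= b < ny and 0 <= c < nz
                                and not solid[a][b][c] and labels[a][b][c] == -1):
                            labels[a][b][c] = count
                            queue.append((a, b, c))
                count += 1
    return labels, count


# Two rooms separated by a solid wall at x == 4 in an 8 x 4 x 4 grid.
solid = voxelize_boxes([((4, 0, 0), (5, 4, 4))], (8, 4, 4))
labels, rooms = cluster_spaces(solid)
print(rooms)
```

Any geometry detail smaller than a voxel simply disappears, which is exactly the simplification Chen is after: connectivity is decided by the grid, not by individual polygons.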

But besides this ability, there's also the very interesting possibility of using voxelization, or a voxelized scene, to do lighting and global illumination. We have some thoughts in that area that we might research in the future, but in general I think it's a very good direction for us to think about: using voxelization to hide all the details of the scene geometry and decouple the complexity of the scene from the complexity of lighting and visibility. In that way, everything becomes easier once the scene is voxelized.

But as far as disadvantages, once you've figured out all this connectivity within your space that's based on voxels, how do you then map that back to the original geometry? In terms of lighting for example, if you've figured out where each voxel should be lit, how do you take that information and bake it back to the original geometry? Because you're not drawing the voxels, you're drawing the original scene. And so that mapping is actually non-trivial. So there's a lot of research that is still needed in order for voxels to be used directly in-engine.
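The simplest way to map voxel lighting back onto the original geometry is to trilinearly sample the voxel grid at each vertex (or lightmap texel) position, as sketched below under the assumption of a scalar irradiance grid with voxel centres at integer coordinates. The function name is hypothetical. This naive mapping also illustrates why the problem is non-trivial: a vertex on a thin wall blends voxels from both sides, so light leaks through unless the mapping accounts for surface normals and occlusion.

```python
import math


def sample_trilinear(grid, p):
    """Trilinearly interpolate a scalar voxel grid at point p (voxel units,
    voxel centres at integer coordinates); points outside are clamped."""
    nx, ny, nz = len(grid), len(grid[0]), len(grid[0][0])

    def clamp(v, hi):
        return max(0.0, min(float(hi), v))

    def lerp(a, b, t):
        return a + (b - a) * t

    x, y, z = clamp(p[0], nx - 1), clamp(p[1], ny - 1), clamp(p[2], nz - 1)
    x0, y0, z0 = int(math.floor(x)), int(math.floor(y)), int(math.floor(z))
    x1, y1, z1 = min(x0 + 1, nx - 1), min(y0 + 1, ny - 1), min(z0 + 1, nz - 1)
    fx, fy, fz = x - x0, y - y0, z - z0
    # Interpolate along x, then y, then z.
    c00 = lerp(grid[x0][y0][z0], grid[x1][y0][z0], fx)
    c10 = lerp(grid[x0][y1][z0], grid[x1][y1][z0], fx)
    c01 = lerp(grid[x0][y0][z1], grid[x1][y0][z1], fx)
    c11 = lerp(grid[x0][y1][z1], grid[x1][y1][z1], fx)
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz)


# 2x2x2 grid whose irradiance increases along x; bake it onto three vertices.
grid = [[[float(x) for z in range(2)] for y in range(2)] for x in range(2)]
vertices = [(0.0, 0.0, 0.0), (0.25, 0.5, 0.5), (1.0, 1.0, 1.0)]
baked = [sample_trilinear(grid, v) for v in vertices]
print(baked)
```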

Any word on the new project?

HC: Our project right now is at a very exciting stage. For the first time we're now shipping on multiple platforms, and then the new consoles are at least not far on the horizon. We have a brand new game, a brand new IP. But all of these things actually present a lot of challenges. We already have a very strong graphics team, but because our ambition is so much bigger, we're also looking for very talented graphics people.

Where do you generally find your people?

HC: Most of the graphics people I have on our team [joined] because they heard our talks at SIGGRAPH or GDC. So people will come up after the talk and say, "Hey, that's a pretty cool place to work, because they push the envelope but still work on very interesting problems and come up with practical techniques."

We get approached by these people, and then the majority of the graphics people we have on our team are hired that way.

What for you have been the challenges on the graphics side, going multiplatform?

HC: We re-architected our graphics engine, and the primary reason was the need to go multiplatform and get ready for the next gen. The first challenge is to abstract out the platform differences while still being efficient on each platform.

The other challenge is to have a good architecture for multi-threaded, multi-core designs that allows us to distribute work across different hardware threads and have as many things execute in parallel as possible. We found out that even on the Xbox 360 we were grossly under-utilizing the CPU, mostly due to a multi-threading design that didn't allow us to spread the work out and execute it in parallel. So we redid the whole architecture in the new engine.
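The structural idea behind spreading work across hardware threads is to cut a big update into independent chunks, submit them as jobs to a pool of workers, and synchronize at a join point. The sketch below shows that shape with Python's `concurrent.futures.ThreadPoolExecutor`, assuming a trivial particle update; the chunk size and function names are hypothetical. In CPython the global interpreter lock prevents this pure-Python loop from running truly in parallel, so treat it as a picture of the job structure rather than a performance example; an engine would run native jobs on each core.

```python
from concurrent.futures import ThreadPoolExecutor


def update_particles(positions, velocities, dt, start, end):
    """Integrate one contiguous chunk. Chunks cover disjoint index ranges,
    so no two jobs ever write the same element."""
    for i in range(start, end):
        positions[i] += velocities[i] * dt


def parallel_update(positions, velocities, dt, workers=4, chunk=1024):
    n = len(positions)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(update_particles, positions, velocities, dt, s, min(s + chunk, n))
            for s in range(0, n, chunk)
        ]
        for f in futures:
            f.result()  # join point; re-raises any exception from a job


positions = [0.0] * 5000
velocities = [2.0] * 5000
parallel_update(positions, velocities, dt=0.5)
```

Because each job owns its own index range, no locking is needed; the only synchronization is waiting for all jobs before the next frame stage reads the results.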

An even bigger challenge is to future proof the engine so when the next generation of consoles is here, we are already pretty good at squeezing the performance out of it. For example, we want the ability to have a particle system to run on the GPU for the 360, SPU on the PS3, and compute shaders on the future hardware, and this requires good design up front.
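Designing the particle system up front for several execution targets usually means game code talks to a platform-neutral interface, with a backend chosen per platform. The sketch below assumes such an interface; the backend class names and the platform keys are hypothetical, and the GPU, SPU, and compute-shader backends are stubbed with a CPU reference implementation because their real code is platform-specific.

```python
from abc import ABC, abstractmethod


class ParticleBackend(ABC):
    """Platform-neutral interface the game code talks to."""

    @abstractmethod
    def simulate(self, positions, velocities, dt):
        """Return new positions after one time step."""


class CpuReferenceBackend(ParticleBackend):
    def simulate(self, positions, velocities, dt):
        return [p + v * dt for p, v in zip(positions, velocities)]


# Hypothetical platform backends. Real versions would dispatch the same work to
# GPU code on the 360, SPU jobs on the PS3, or compute shaders on future hardware;
# here they reuse the CPU reference so the sketch runs anywhere.
class Xbox360GpuBackend(CpuReferenceBackend):
    pass


class Ps3SpuBackend(CpuReferenceBackend):
    pass


class ComputeShaderBackend(CpuReferenceBackend):
    pass


BACKENDS = {
    "xbox360": Xbox360GpuBackend,
    "ps3": Ps3SpuBackend,
    "next_gen": ComputeShaderBackend,
}


def create_backend(platform):
    """Pick the backend at startup; fall back to the CPU reference."""
    return BACKENDS.get(platform, CpuReferenceBackend)()
```

Keeping the choice behind one factory also fits the idea of deferring decisions about unknown hardware: a new platform means a new backend, not changes to the game code.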

How much can you really know about the next platforms aside from the fact that they will generally be multi-threaded? Is it difficult to predict, or do you have some insight into it already?

HC: We can have some confidence based on where the hardware is trending. You make educated guesses on the things that you can, and then you have isolated, late-binding decisions on things where you have no idea what's going to happen.

The worst thing you can do is design something that prevents you from being efficient on the future platforms. So on the things where we have sketchy details, we'd rather leave that isolated decision open until later than make the wrong decision. But there are plenty of things we know already, so we try to design an engine that's very efficient based on our knowledge.

From your perspective as a graphics engineer, what part do you play in the look of Halo? Halo has a unique universe, and I'm wondering how much the art director is actually involved with the graphics programmers on your side.

HC: All graphics features in our game are the result of collaboration between engineers and artists. In the Halo engine, we place great importance on making things physically correct. For example, we used a photon mapping process to compute our global illumination, so in that sense, technology plays a critical role in the realism of our games.

On the other hand, our games are never just a re-creation of the physical world, and art direction plays the dominant role in the look of our games. For example, our worlds are more colorful than most FPS games; we have fantastic skies that you can't find in reality; the shapes of our structures and objects conform to a consistent visual language depending on which faction they belong to.

In the new engine, we place even more emphasis on giving artists direct control over how the game appears, and the majority of our R&D time was spent making technology that helps artists work more efficiently while lowering the technical requirements.

We play a collaborative role with the art director. Typically, any graphics feature combines the graphics engineer providing the possibilities of what can be done with the art director weighing those against their vision of what should be done. The combination of these two typically results in a graphics feature that gets made in our engine. So our role is not necessarily to be the visionary; we provide the possibilities and educated guesses of what can be done, and of how their vision is best translated into technology.

What do you see as the benefits of using your own engine, since you all still do that?

HC: First of all, it's a lot more fun to write your own engine than licensing someone else's, right? But that's more of a joke. The real benefit is the flexibility, because a lot of things as we develop the game will change in the course of the game. A lot of early design decisions will turn out not to be true. A lot of things we didn't anticipate before will become big problems.

So having our own engine, where we are intimately familiar with every aspect of that engine, allows us to have the flexibility to change, first of all. Second of all, nowadays we're also using a good mix of middleware to do a lot of the nitty gritty functionality that typically would take a lot of time. So it makes even more sense for us to focus on the ones we consider to be the bread and butter, and things that give us competitive advantage. And if you adopt an engine wholesale, you lose that competitive advantage.

Do you see much in the way of middleware that actually helps what you do on the graphics side?

HC: Absolutely. We see more and more roles played by middleware these days. We ended up using a few middleware packages ourselves, even though we wrote the rest of the engine. I think good use of middleware allows us to get a feature earlier than we could if we were to engineer it ourselves, and that means the content people will get mature technology and more time to produce content. So that longer iteration time will translate to higher quality that will offset whatever engineering advantage you would have by writing your own. So in a lot of these places where it makes sense, we use middleware.

My graphics programmer friends are curious to compare how you spend your day-to-day. Are you mostly writing shaders, or doing engine development, or do you develop tools for the artists?

HC: For a typical graphics engineer [at Bungie], we have about 65 or 70 percent of the time devoted to coding. The rest of the 30 to 35 percent is spent talking to the artist, educating the content people how to properly use the tools, and gathering feedback into what needs to be changed.

So we spend about 30 percent of our time on these tasks. The rest depends on the phase of the project we're in. In the beginning it's very much just core development of the technology itself, but as a feature comes to maturity, part of that coding time is devoted to bug-fixing, optimization, and tools development.

Do you work on more tools development on the front end to get people implementing stuff faster?

HC: Well, we'd like to. The ideal is before you develop a feature, you figure out exactly how the content people will use it. That's the most ideal case, because then the content people have the maximum amount of time they can spend to get familiar with the tools and give feedback.

But it's quite often not that ideal, because we don't know what kind of feature we need to expose until we have enough of the technology to give them a taste of what this feature is about. Then they will say, "Hey, I really want this and that," and that will factor into the tools.

So in the beginning we typically come up with very straightforward, programmer-ish tools, and we just give them to one or two technical art leads to try it out. But before that feature goes to primetime, that's where we spend a lot of time making a very professional tools interface, so the bulk of the artists will then be able to use them.

This is a broad question, but what do you think are the big problems to solve in the coming years from your perspective, graphics-wise?

HC: That definitely is a big question! I will tell you what our emphasis is for what we think is important for our graphics and our engine. Number one is removing digital artifacts. You mentioned this already... removing all the jaggies, having very clean foliage edges, and awesome looking hair with no artifacts. Removing these digital artifacts that remind people you are staring at a computer screen is one of our top priorities.

The other challenge is selling a dynamic world. In terms of what we think is important, we will even lower some of the quality in order for us to have a more dynamic world. This means dynamic time of day, lots of things that move in the wind, lots of things reacting to players moving through them, and when you walk on soft surfaces like sand and mud, you leave footprints. So basically everything we do to sell that this world is moving and dynamic is important to us.

And then perhaps the last one is believable characters. That's still an area where we place a huge amount of emphasis: animation, the rendering of character faces, facial animation, and characters in general. In the end, what we're trying to do is deliver fidelity where it really matters to the end user.

All of the things I just mentioned matter because they are what keeps the player from believing they're in this game. If players see jaggies, if they see things that should be moving but are static, if they see a character's face and don't believe it's a real face, then the illusion is broken. So we want to spend all of this energy removing these things and delivering fidelity where it really matters.

How far away are we from having realistic hair? I know that's one of the big reasons that we have so many bald space marines in games right now.

HC: Hair is interesting, because it's actually kind of easy to do very long, natural, flowing hair, thanks to tessellation. We can do lots and lots of strands of straight hair, and with a good material map and a good lighting map it looks very real. It also moves very realistically, because we have plenty of computing power for the simulation part. The problem is stylized hair: making many different styles, from a buzz cut to choppy hair to curly hair, is still very difficult, and making them look real will continue to be a difficult problem, I think.
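The "simulation part" for long strands is often done with Verlet integration plus distance constraints: each strand is a chain of particles, the root is pinned to the scalp, and a few relaxation passes per frame restore each segment to its rest length. The sketch below is a generic version of that approach, not a description of Bungie's hair system; gravity, the iteration count, segment length, and the `step_strand` name are assumptions. Stylized cuts are hard precisely because they need far more than this, such as shape-preserving constraints and strand-to-strand interaction.

```python
GRAVITY = (0.0, -9.8, 0.0)  # m/s^2 (assumed)


def step_strand(pos, prev, root, seg_len, dt, iterations=10):
    """Advance one strand by dt. pos/prev: lists of [x, y, z]; updated in place."""
    n = len(pos)
    # Verlet integration for every particle except the pinned root.
    for i in range(1, n):
        for k in range(3):
            cur = pos[i][k]
            pos[i][k] = cur + (cur - prev[i][k]) + GRAVITY[k] * dt * dt
            prev[i][k] = cur
    pos[0] = list(root)
    prev[0] = list(root)
    # Relax distance constraints so each segment returns to its rest length.
    for _ in range(iterations):
        for i in range(n - 1):
            a, b = pos[i], pos[i + 1]
            d = [b[k] - a[k] for k in range(3)]
            dist = sum(c * c for c in d) ** 0.5
            if dist == 0.0:
                continue
            diff = (dist - seg_len) / dist
            if i == 0:
                for k in range(3):  # root is pinned: move only its child
                    b[k] -= d[k] * diff
            else:
                for k in range(3):  # split the correction between both ends
                    a[k] += d[k] * diff * 0.5
                    b[k] -= d[k] * diff * 0.5


SEG = 0.1
root = (0.0, 0.0, 0.0)
# A five-particle strand hanging straight down, simulated for two seconds at 60 Hz.
pos = [[0.0, -SEG * i, 0.0] for i in range(5)]
prev = [p[:] for p in pos]
for _ in range(120):
    step_strand(pos, prev, root, SEG, dt=1 / 60)
```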
