
In this technical feature, Chinese casual games programmer Zhaolin Feng gives us his tutorial on creating a fluid 2D render base, using a technique inspired by Andrei Alexandrescu's Modern C++ Design.

November 1, 2006

Author: by Zhaolin Feng

When developing casual games, a problem we often face is creating a pure 2D render base that works without 3D acceleration yet produces exactly the same result as the 3D path. But before diving into the solution, I think it’s important to explain a bit more about why we need a 2D fallback in the first place.

3D acceleration is a powerful tool. If we draw the 2D elements using 3D triangles, the scene is probably like the one shown in Image 1 (below). But really, they are just a bunch of triangles which are deliberately aligned on a plane parallel with the camera.

This is a neat, uniform solution, and it is what the hardcore 3D titles do. But if you are making a casual game like the ones on PopCap or Real Arcade, you can’t assume everyone has hardware with 3D acceleration. And even if they do, they may not have the driver installed properly, because the target audience of casual games is people like our mums and dads.

So in order to support these machines, we’ll need a pure 2D fallback. The main job of a 2D render base is moving pixels. I mean something like the Win32 API functions BitBlt() and StretchBlt(), which transfer pixels from a specified source rectangle to a specified destination rectangle, altering the pixels according to the selected raster operation. You may feel confused by now. What’s the fuss about BitBlt? Everyone knows how to use it. I can even use DirectDraw if I like! But this is never an easy task if you think about it seriously. The culprit this time is combinatorial explosion. Let me explain.

Image 1: 2D elements are rendered as 3D triangles using hardware acceleration

You're probably familiar with the concept of BitBlt with color key or alpha blending. But really, they are just two examples of raster operations. It's easy to enumerate more operations such as additive, subtractive, color fill, color-keyed color fill, alpha blending with color mask, red channel only and copy with format conversion.

And that's not all. As for pixel formats, we have R5G5B5, R5G6B5, X8R8G8B8 and B8G8R8. Why so many formats? Because using the same surface format as the user's display setting eliminates the need to convert pixel formats at runtime, which gives the best performance. It’s also because the API you choose will demand certain pixel formats. For example, if you use GDI, the 32-bit pixel is represented as X8B8G8R8. But if you use DirectDraw, it’s represented as X8R8G8B8 instead.

Finally, for sampling methods, we have versions without stretching, with stretching and with rotation. So if we are going to make a fully functional 2D render base compatible with the 3D version, we will have to write a hell of a lot of functions:

The number of functions = number of raster operations * number of sampling methods * number of pixel formats

It doesn’t seem so easy now, right?

I’d like to review another solution before presenting mine. Someone once told me never to attack a combinatorial problem with brute force, but that advice doesn’t always hold. You can download the SexyEngine and see how it does it. It is published by PopCap with full source code and it’s free to use.

SexyEngine supports 3D acceleration. You can have a peek at the code in D3DInterface.h and D3DInterface.cpp. It isn’t much code. But their 2D counterpart has tons of code. I will show you some examples.

DDI_ASM_CopyPixels16.cpp and DDI_ASM_CopyPixels32.cpp deal with simple pixel copy for two different surface formats. DDI_Additive.inc and DDI_AlphaBlt.inc deal with additive and alpha blend raster operations. And the files DDI_FastStretch.cpp, DDI_FastStretch_Additive.cpp, DDI_BltRotated.cpp and DDI_BltRotated_Additive.cpp, as their names suggest, deal with stretching and rotating. You can find a lot of other files with similar names.

I really admire the PopCap group for the work they’ve done. It’s really a highly optimized 2D engine. But it can’t fit my needs completely. First, it doesn't seem that PopCap has plans to support Chinese or Japanese character sets. Second, it doesn’t have all the raster operations I need. And it’s definitely not trivial work to add even a single raster operation, because you will have to write a full bundle of functions to support rotation, stretching and different pixel formats.

The recipe for this problem is policy based design, as described in the book 'Modern C++ Design: Generic Programming and Design Patterns Applied' by Andrei Alexandrescu. I'm going to offer a C++ file only several hundred lines long that is functionally complete and whose efficiency can compete with handcrafted code. The biggest winning point of this design is that it allows you to extend the code base to support new raster operations or new pixel formats with only a few dozen lines of code!

I’m no expert to explain extensively what policy based design is, so please just buy the book I referred to. But for the ones who want a quick reference, I shall explain it a little bit.

In my understanding, policy based design is a technique to combine and generate code. It’s a bit like compiler magic and relies heavily on templates and occasionally on multiple inheritance.

In policy based design, the decisions to complete a task are abstracted into orthogonal functional parts, called policies. And these policies can be assembled and complete a task together with no runtime cost at all! For example, to implement a smart pointer, you may have a lot of choices over whether to use reference count or linked list, or whether to make it thread safe. The former decision is called storage policy and the latter one is called thread checking policy, and a host class can take them as template parameters and use them in the following form.

typedef SmartPointer ObjectPointer;

This is a reference counted smart pointer without thread safety checking. You can easily assemble more smart pointers with different behaviors. You can also write more implementations of the policies and add them to the system. This kind of design is called policy based design. It’s the WMD to fight combinatorial explosion.
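To make the idea concrete, here is a minimal sketch of what such a host class and its policies could look like. It is not the code from the book or from any library: the names RefCounted, NoThreadChecking, useCount() and IntPointer are made up for illustration, and assignment is left out to keep it short.

```cpp
#include <cassert>

// Hypothetical ownership policy: owners share one heap-allocated counter.
class RefCounted {
protected:
    RefCounted() : m_count(new int(1)) {}
    RefCounted(const RefCounted& other) : m_count(other.m_count) { ++*m_count; }

    // Drops one reference; returns true when the last owner is gone.
    bool release() {
        if (--*m_count > 0) return false;
        delete m_count;
        m_count = nullptr;
        return true;
    }

public:
    int useCount() const { return *m_count; }

private:
    RefCounted& operator=(const RefCounted&) = delete;
    int* m_count;
};

// Hypothetical threading policy: the lock is an empty object, so the
// compiler can remove it entirely. A locking policy would expose the
// same nested Lock type and take a mutex in its constructor.
struct NoThreadChecking {
    struct Lock {
        explicit Lock(const void*) {}
    };
};

// Host class: behavior is assembled from the two policies at compile time.
template <typename T, typename OwnershipPolicy, typename ThreadPolicy>
class SmartPointer : public OwnershipPolicy {
public:
    explicit SmartPointer(T* p) : m_ptr(p) { assert(p != nullptr); }
    SmartPointer(const SmartPointer& other)
        : OwnershipPolicy(other), m_ptr(other.m_ptr) {}
    ~SmartPointer() {
        typename ThreadPolicy::Lock guard(this);
        if (this->release()) delete m_ptr;
    }
    SmartPointer& operator=(const SmartPointer&) = delete;

    T& operator*() const { return *m_ptr; }
    T* operator->() const { return m_ptr; }

private:
    T* m_ptr;
};

// One concrete combination; swapping a policy gives a different pointer type.
typedef SmartPointer<int, RefCounted, NoThreadChecking> IntPointer;
```

Copying an IntPointer raises the shared count and destroying a copy lowers it again; the object is deleted when the last copy goes away. Nothing in SmartPointer itself knows how ownership is tracked, which is the point of the technique.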

You may feel puzzled at what policy based design looks like in code level. Just read on, because I’m going to show you a live example.

So far, you’ve learned all the basics. I think you may already have a clue of the structure of the 2D render base I shall present. In my design, there are two policies, pixel policy and raster operation policy. The former one defines the storage of one pixel and the operations on it. The latter one defines how a single pixel in the source surface and a pixel in the target surface are blended together.

All the following code can be found in the sample application. You may want to check them out when reading the article. They are compiled in VS.NET 2005. Please note that my code relies heavily on compiler optimization. So make sure you build them in release mode.

First, let’s take a look at an implementation of the pixel policy:

struct X8R8G8B8

{

typedef DWORD PixelType;

typedef DWORD* PixelPtr;

static const PixelType ms_colorWhite = 0x00ffffff;

static const PixelType ms_colorBlack = 0;

static PixelType getR(PixelType o) {

return (o & 0x00ff0000) >> 16;

}

static PixelType getG(PixelType o) {

return (o & 0x0000ff00) >> 8;

}

static PixelType getB(PixelType o) {

return o & 0x000000ff;

}

static PixelType assemblePixel(PixelType r, PixelType g, PixelType b) {

return (PixelType(r) << 16) | (PixelType(g) << 8) | PixelType(b);

}

};

This policy defines two data types, two constants and four functions. PixelType and PixelPtr define the storage of a pixel. ms_colorWhite and ms_colorBlack are there just for convenience. getR(), getG() and getB() extract color components from the pixel. assemblePixel() is the reverse: it assembles a pixel from color components. Along with X8R8G8B8, I also defined R5G6B5. You can easily add more pixel formats as you like.
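As an illustration of how little code a new format needs, here is a sketch of a 16-bit policy with the same interface. It is written from the description above, not copied from the sample application, and it uses std::uint16_t in place of the Win32 WORD type so that it compiles anywhere.

```cpp
#include <cassert>   // only needed if you add checks like the ones described below
#include <cstdint>

// Sketch of a 16-bit pixel policy: 5 bits red, 6 bits green, 5 bits blue.
struct R5G6B5 {
    typedef std::uint16_t PixelType;
    typedef std::uint16_t* PixelPtr;

    static const PixelType ms_colorWhite = 0xffff;
    static const PixelType ms_colorBlack = 0;

    // Components come back in their native width: 0..31, 0..63 and 0..31.
    static PixelType getR(PixelType o) { return (o & 0xf800) >> 11; }
    static PixelType getG(PixelType o) { return (o & 0x07e0) >> 5; }
    static PixelType getB(PixelType o) { return o & 0x001f; }

    static PixelType assemblePixel(PixelType r, PixelType g, PixelType b) {
        return static_cast<PixelType>((r << 11) | (g << 5) | b);
    }
};
```

Because every blender only talks to the pixel through getR(), getG(), getB() and assemblePixel(), a policy like this can be dropped into the same blenders without touching them.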

With pixel defined, we need a class to represent surface, or a 2D array of pixels. Fundamentally, 2D rendering is all about transferring pixels between two surfaces. The code is well documented, so all I need to explain here are the data members, because I’ll need to use them in the following design.

template <typename T>

class SurfaceT

{

public:

int width;

int height;

int pitchInPixel;

int alphaPitchInPixel;

typename T::PixelType* rgb; // rgb buffer

UCHAR* alpha; // alpha buffer

public:

void create(int width_, int height_, bool createAlphaChannel);

void dispose();

};

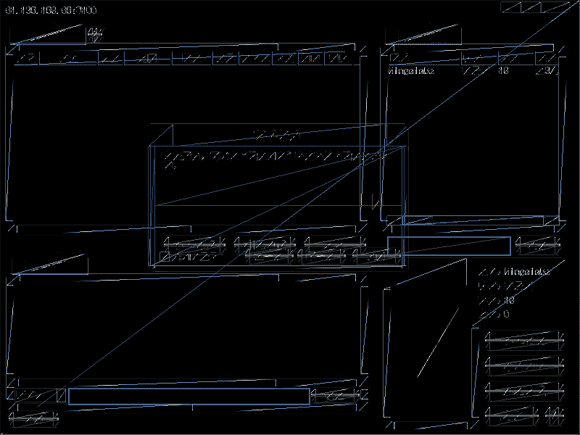

pitchInPixel and alphaPitchInPixel do not necessarily equal the width, because of memory alignment. Image 2 shows the difference. The pitch is expressed in pixels instead of the usual bytes because the buffer pointer will be moved pixel by pixel, not byte by byte, as you will see later.

Image 2: Pitch >= width

In order to simplify the code and make it faster, I choose to store alpha channel as an independent buffer. The valid range of alpha value is [0, 128], not the usual [0, 255]. Because 255 is not a power of 2, dividing a number by 255 consumes more CPU power than dividing a number by 128, which can be done using bit shift operation. You’ll see more of it later.

number / 128 == number >> 7

With the code above, now you can define your own pixel and surface type:

typedef X8R8G8B8 Pixel;

typedef SurfaceT<X8R8G8B8> Surface;

A raster operation defines how the pixels on the source surface and the pixels on the target surface are blended, so I often refer to them as blenders. The simplest implementation is to replace the target pixel with the source pixel.

template <typename T>

class SimpleCopyBlender

{

public:

static void blend(typename T::PixelType* bkg, UCHAR* destAlpha, typename T::PixelType* fore, UCHAR* srcAlpha) {

*bkg = *fore; // simply overwrite the target pixel

}

};

The blend() function has four parameters for two pixels. bkg points to a pixel in the target surface. destAlpha points to the alpha component of pixel in the target surface. fore and srcAlpha point to the pixel in the source surface. *bkg = *fore is all it does, copying color from source pixel to target pixel. And hence it’s named SimpleCopyBlender.

The next blender is somewhat special. It fills the target pixels with a constant color.

template <typename T>

class ConstColorBlender

{

protected:

typename T::PixelType m_color;

public:

void blend(typename T::PixelType* bkg, UCHAR* destAlpha, typename T::PixelType* fore, UCHAR* srcAlpha) {

*bkg = m_color;

}

};

The first thing you’ll notice is that it has an additional data member m_color. And the blend() function is a little different too. It’s not static anymore, because it needs to access the nonstatic member m_color. Despite the differences, it works just as well. This is because a policy only defines the name and the parameters of a function. It doesn’t impose any extra constraints as long as the compiler says it’s OK.

The color-keyed version demands no more explanation. Just see it yourself.
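For readers without the sample code at hand, a color-keyed blender could look roughly like the sketch below. The member name m_colorKey and the setter setColorKey() are assumptions made for this example, and the pixel policy is cut down to the one typedef the blender needs.

```cpp
#include <cassert>
#include <cstdint>

typedef unsigned char UCHAR;   // stand-in for the Win32 typedef

// Minimal stand-in for the pixel policy; only PixelType is used here.
struct X8R8G8B8 {
    typedef std::uint32_t PixelType;
};

// Sketch of a color-keyed blender: source pixels that match the key are
// treated as transparent and leave the target untouched.
template <typename T>
class ColorKeyBlender {
protected:
    typename T::PixelType m_colorKey;   // assumed member name

public:
    ColorKeyBlender() : m_colorKey(0) {}

    // Hypothetical setter, added so the example can be exercised.
    void setColorKey(typename T::PixelType key) { m_colorKey = key; }

    void blend(typename T::PixelType* bkg, UCHAR* /*destAlpha*/,
               typename T::PixelType* fore, UCHAR* /*srcAlpha*/) {
        if (*fore != m_colorKey)
            *bkg = *fore;
    }
};
```

Like ConstColorBlender, it carries state, so blend() is a non-static member. The alpha pointers are accepted only to satisfy the policy's signature and are never read.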

The next one is the alpha blended version.

template <typename T>

class AlphaBlender

{

public:

static void blend(typename T::PixelType* bkg, UCHAR* destAlpha, typename T::PixelType* fore, UCHAR* srcAlpha) {

typename T::PixelType r = T::getR(*bkg);

typename T::PixelType g = T::getG(*bkg);

typename T::PixelType b = T::getB(*bkg);

typename T::PixelType r2 = T::getR(*fore);

typename T::PixelType g2 = T::getG(*fore);

typename T::PixelType b2 = T::getB(*fore);

*bkg = T::assemblePixel(r + (((r2 - r) * *srcAlpha) >> 7), g + (((g2 - g) * *srcAlpha) >> 7), b + (((b2 - b) * *srcAlpha) >> 7));

}

};

The operation for each color component is:

finalColor = destColor * (128 - alpha) / 128 + srcColor * alpha / 128

= destColor + (srcColor - destColor) * alpha / 128

= destColor + (((srcColor - destColor) * alpha) >> 7)

By now you should understand why I choose to use an independent alpha channel and [0,128] as its range. An independent alpha channel may seem to be a waste of memory for a pixel format such as X8R8G8B8, but it unified the storage of alpha channels and eliminated the need to extract alpha components from the pixel. And using 128 instead of 255 saved us from one expensive divide operation.
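The arithmetic can be checked in isolation with a small sketch. blendChannel() below uses the first form of the formula so that every intermediate value stays non-negative; alphaFrom255() is a hypothetical helper, not part of the sample code, showing one way to map a conventional 0..255 alpha into the [0, 128] range.

```cpp
#include <cassert>

// Blend one color component. alpha must be in [0, 128]:
// 0 keeps the target color, 128 replaces it with the source color.
inline int blendChannel(int dest, int src, int alpha) {
    assert(alpha >= 0 && alpha <= 128);
    // dest * (128 - alpha) / 128 + src * alpha / 128, with the two
    // divisions folded into a single shift by 7.
    return (dest * (128 - alpha) + src * alpha) >> 7;
}

// Hypothetical helper: squeeze a 0..255 alpha into 0..128 so that a fully
// opaque 255 becomes exactly 128.
inline int alphaFrom255(int a) {
    return (a + (a >> 7)) >> 1;
}
```

For example, blending a source value of 100 over a target value of 200 with alpha 64 gives 150, exactly halfway, and alphaFrom255(255) gives 128.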

I think you already get the idea, so I won’t waste time on more raster examples.

Finally, it’s time to bring the pieces together. The class to aggregate policies is called a host class. So far, I haven’t talked about how to transfer pixels. In fact, there are many ways to do it, with or without stretching and rotation. At first, I wished I could find way to abstract them into another policy, but they seem too distinctive to be abstracted. So I finally settled down on three different classes. I will begin with the simplest one. It takes a source rectangle and copies it to a target rectangle of the same size.

template <typename T, template <typename> class BlendPolicy>

class SurfaceRectToXY : public BlendPolicy<T>

{

public:

void copyRect(SurfaceT<T>* destSurface, int x, int y, const SurfaceT<T>* srcSurface, const RECT* srcRct)

{

// moving pointers

typename T::PixelPtr readPointer = srcSurface->rgb + srcRct->top * srcSurface->pitchInPixel + srcRct->left;

UCHAR* alphaPointer = srcSurface->alpha + srcRct->top * srcSurface->alphaPitchInPixel + srcRct->left;

typename T::PixelPtr writePointer = destSurface->rgb + y * destSurface->pitchInPixel + x;

UCHAR* writeAlphaPointer = destSurface->alpha + y * destSurface->alphaPitchInPixel + x;

// line jumps

int writeLineJump = destSurface->pitchInPixel - srcRct->right + srcRct->left;

int writeAlphaLineJump = destSurface->alphaPitchInPixel - srcRct->right + srcRct->left;

int rgbLineJump = srcSurface->pitchInPixel - srcRct->right + srcRct->left;

int alphaJump = srcSurface->alphaPitchInPixel - srcRct->right + srcRct->left;

// calculate end position

typename T::PixelPtr destEnd = destSurface->rgb + (y + srcRct->bottom - srcRct->top - 1) * destSurface->pitchInPixel + x + srcRct->right - srcRct->left;

// start transferring

typename T::PixelPtr writeLineEnd;

int lineWidthInPixel = (srcRct->right - srcRct->left);

while(writePointer < destEnd)

{

// calculate the end pixel of a new line

writeLineEnd = writePointer + lineWidthInPixel;

// copy one line

while( writePointer < writeLineEnd )

{

blend(writePointer, writeAlphaPointer, readPointer, alphaPointer);

writePointer++;

readPointer++;

alphaPointer++;

writeAlphaPointer++;

}

// move to next line

writePointer += writeLineJump;

readPointer += rgbLineJump;

alphaPointer += alphaJump;

writeAlphaPointer += writeAlphaLineJump;

}

}

};

There are four buffers involved in this operation: the source color buffer, the source alpha buffer, the target color buffer and the target alpha buffer. The pointers readPointer, alphaPointer, writePointer and writeAlphaPointer point to the first pixel to be processed in each of the four buffers respectively. The next four variables are line jumps. A line jump is the distance from the end of one scanline to the start of the next one.

line jump = pitch – scanline length

The next variable destEnd marks the end of the operation. With all the local variables prepared, we can start transferring the pixels now, line by line, until we reach destEnd. This is what the layered while loops do.

To use it, we can write some code like this. It defines a utility class for simple pixel copy.

SurfaceRectToXY<Pixel, SimpleCopyBlender> o;

o.copyRect(&surface2, 0, 0, &surface1, &rct);

That’s all we need to know so far. I can’t wait to test it myself. So I wrote a simple framework, threw all the code into it and compiled it in release mode. Guess what I get? The exact assembly I’d expected. I’ll examine a piece of the assembly with you:

// copy one line

while( writePointer < writeLineEnd )

00401786 cmp eax,esi

00401788 jae Unicorn::SurfaceRectToXY::copyRect+8Eh (40179Eh)

0040178A lea ebx,[ebx]

{

blend(writePointer, writeAlphaPointer, readPointer, alphaPointer);

00401790 mov edi,dword ptr [edx]

00401792 mov dword ptr [eax],edi

writePointer++;

00401794 add eax,4

readPointer++;

00401797 add edx,4

0040179A cmp eax,esi

0040179C jb Unicorn::SurfaceRectToXY::copyRect+80h (401790h)

alphaPointer++;

writeAlphaPointer++;

}

First, you can see that the function blend() is inlined, so there is no function call overhead. Second, the code related to the alpha channels is not there at all! It’s only writePointer and readPointer that are moving. You can try ColorKeyBlender and AlphaBlender and see the assembly yourself. There is no redundant assembly code. They are really comparable to the handcrafted versions.

For those familiar with assembly, you may have noticed that SurfaceRectToXY< Pixel, SimpleCopyBlender> isn’t fast enough, because it transfers data pixel by pixel. But there is a faster asm instruction rep movs which allows you to transfer a whole line of pixels. This time, the compiler failed us. Fortunately, there is a template mechanism called partial template specialization. It can solve this problem easily. All we need is to write a special version of SurfaceRectToXY for SimpleCopyBlender.

template <typename T>

class SurfaceRectToXY<T, SimpleCopyBlender> : public SimpleCopyBlender<T>

{

public:

void copyRect(SurfaceT<T>* destSurface, int x, int y, const SurfaceT<T>* srcSurface, const RECT* srcRct)

{

// calculate end position

typename T::PixelPtr destEnd = destSurface->rgb + (y + srcRct->bottom - srcRct->top - 1) * destSurface->pitchInPixel + x + srcRct->right - srcRct->left;

// initialize pointers

typename T::PixelPtr readPointer = srcSurface->rgb + srcRct->top * srcSurface->pitchInPixel + srcRct->left;

typename T::PixelPtr writePointer = destSurface->rgb + y * destSurface->pitchInPixel + x;

int lineWidthInByte = (srcRct->right - srcRct->left) * sizeof(typename T::PixelType);

while(writePointer < destEnd)

{

// copy one line

memcpy(writePointer, readPointer, lineWidthInByte);

// move to next line

writePointer += destSurface->pitchInPixel;

readPointer += srcSurface->pitchInPixel;

}

}

};

See? There is only one line changed. memcpy() is used to copy a whole line of pixels. I don’t assume memcpy() to be the fastest way to copy memory, but I know almost nothing about assembly so I’d have to trust memcpy(). Believe me, making memory copy really fast isn’t an easy task. For memory of different sizes, you should choose different methods to do it. If you don’t believe me, just try memcpy() with memory of different sizes and follow the execution of assembly. You can also Google the keyword “Fast Memory Copy”; you will be amazed to see how much discussion is there.

The point I want to make is: whenever you think there is a need for optimization, use template specialization or partial template specialization.

I used two other classes to support stretching and rotation, but they are too long to be listed. So I’ll just introduce them to you.

The class SurfaceRectToRect transfers pixels from a source rectangle to a target rectangle, so the image will be stretched if the sizes of the two rectangles are not the same. In the last example, SurfaceRectToXY, the read pointers and write pointers move in step, one pixel at a time. But in SurfaceRectToRect, the read pointers move more than one pixel or a fraction of a pixel each time, depending on the scaling factors.

What demands your attention in this code is that float variable operations should be avoided at all cost. I used bit shift by 16 to achieve this goal. Theoretically, it’s called fixed point math. But for those not familiar with this concept, I’ll make it easy for you.

When you want to use a floating-point variable, for heaven’s sake, don’t do it. Multiply it by 2^16 and store it in an integer variable instead. This integer is in fact a fractional number with fixed precision, so we call it a fixed point number. If you want to add/subtract two fixed point numbers, or multiply a fixed point number by an integer, just use integer operations. But if you want to multiply two such numbers using integer operations, don’t forget to divide the result by 2^16.
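Here is a small sketch of these rules in code, followed by a nearest-neighbour stretch of a single scanline that steps through the source with a 16.16 fixed point position. All names (Fixed, toFixed, ratioToFixed, fixedMul, stretchLine) are invented for this example; the stretching code in the sample application is more elaborate.

```cpp
#include <cassert>
#include <cstdint>

typedef std::int32_t Fixed;   // 16 integer bits, 16 fractional bits

inline Fixed toFixed(int n) { return n * 65536; }
inline int fixedToInt(Fixed f) { return f >> 16; }   // intended for f >= 0

// num / den as a fixed point number, computed without floating point.
inline Fixed ratioToFixed(int num, int den) {
    assert(den != 0);
    return static_cast<Fixed>((static_cast<std::int64_t>(num) * 65536) / den);
}

// Multiplying two fixed point numbers yields 32 fractional bits, so the
// product is divided by 2^16. A 64-bit intermediate avoids overflow.
inline Fixed fixedMul(Fixed a, Fixed b) {
    return static_cast<Fixed>((static_cast<std::int64_t>(a) * b) / 65536);
}

// Stretch one scanline: the write position advances by one pixel while the
// read position advances by srcWidth / destWidth pixels each step.
inline void stretchLine(const std::uint32_t* src, int srcWidth,
                        std::uint32_t* dest, int destWidth) {
    const Fixed step = ratioToFixed(srcWidth, destWidth);
    Fixed pos = 0;
    for (int x = 0; x < destWidth; ++x) {
        dest[x] = src[pos >> 16];   // integer part selects the source pixel
        pos += step;                // plain integer addition
    }
}
```

Stretching a 4-pixel line to 8 pixels uses a step of 0.5 (32768 in fixed point), so each source pixel is read twice. Shrinking it to 2 pixels uses a step of 2.0 and reads every other pixel.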

The class SurfaceRectTransform supports scaling/rotation/translation in 2D space. I included some matrix/vector operations in the code so that you don’t need to include a 3rd party library. And these operations are also done in fixed point numbers! For those who are not familiar with matrix operations, I suggest reading the related chapters in any computer graphics book, or the article in the DirectX SDK. It’s in DirectX Graphics -> Direct3D 9 -> Programming Guide -> Getting Started -> Transforms. They are introduced in 3D space there, so you should apply and simplify them on the XY plane only. The demo program with this article shows how to use this class, so I won’t explain it here.

In an early version, I wrote a specialized version of SurfaceRectToRect for ConstColorBlender, because this blender doesn’t need to sample the source surface at all. And I don’t trust the compiler for eliminating all the redundant code for stretching the source surface. But after examining the generated assembly, I found that the VC8 compiler is such a marvel, and I really made a fool of myself by writing that specialization.

What’s pixel format conversion? Why do we need it? As I said earlier, the pixel format of your back buffer and all surfaces should match the pixel format of the user’s desktop in order to achieve maximum speed. So when loading an image file, you should convert it to the format you need. But what if a crazy user changes the desktop resolution and the bit depth of the desktop while the game is running in windowed mode? In this case, you’d have to convert the pixel format of all the surfaces to match it again.

To do this, we need to add something to the pixel policy.

struct X8R8G8B8

{

static DWORD ms_typeid;

static DWORD toX8R8G8B8(PixelType o);

static PixelType fromX8R8G8B8(DWORD o);

……

};

ms_typeid is used to uniquely identify the format. We will need it later. The following two functions actually build a bridge between two alien pixel formats with an intermediate format X8R8G8B8.
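For a 16-bit format, the two bridge functions could be sketched as follows. This is an assumption about how they might be written, not the sample application's code; DWORD is declared locally as a stand-in for the Win32 type, and the rest of the policy is omitted.

```cpp
#include <cassert>
#include <cstdint>

typedef std::uint32_t DWORD;   // stand-in for the Win32 typedef

// Only the conversion part of a hypothetical R5G6B5 pixel policy.
struct R5G6B5 {
    typedef std::uint16_t PixelType;

    // Widen each channel to 8 bits by shifting it into the top of its byte.
    // The low bits stay zero, so white becomes 0x00f8fcf8, not 0x00ffffff.
    static DWORD toX8R8G8B8(PixelType o) {
        DWORD r = (o & 0xf800u) >> 11;
        DWORD g = (o & 0x07e0u) >> 5;
        DWORD b = o & 0x001fu;
        return (r << 19) | (g << 10) | (b << 3);
    }

    // Keep the most significant 5, 6 and 5 bits of the 8-bit channels.
    static PixelType fromX8R8G8B8(DWORD o) {
        return static_cast<PixelType>(((o & 0x00f80000u) >> 8) |
                                      ((o & 0x0000fc00u) >> 5) |
                                      ((o & 0x000000f8u) >> 3));
    }
};
```

With one such pair per format, any format can be converted to any other through the intermediate format, so N formats need 2N functions instead of N * N. If full-range white matters, the low bits in toX8R8G8B8() can be filled by replicating the top bits of each channel instead of leaving them zero.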

With this addition, we can write two special raster operations like this:

template <typename T1, typename T2>

class ConvertWithoutAlphaBlender

{

public:

static void blend(typename T1::PixelType* bkg, UCHAR* destAlpha, typename T2::PixelType* fore, UCHAR* srcAlpha) {

STATIC_ASSERT(T1::ms_typeid != T2::ms_typeid, There_Is_No_Need_To_Convert);

*bkg = T1::fromX8R8G8B8(T2::toX8R8G8B8(*fore));

}

};

template <typename T1, typename T2>

class ConvertWithAlphaBlender

{

public:

static void blend(typename T1::PixelType* bkg, UCHAR* destAlpha, typename T2::PixelType* fore, UCHAR* srcAlpha) {

STATIC_ASSERT(T1::ms_typeid != T2::ms_typeid, There_Is_No_Need_To_Convert);

*bkg = T1::fromX8R8G8B8(T2::toX8R8G8B8(*fore));

*destAlpha = *srcAlpha;

}

};

STATIC_ASSERT is a compile time assertion to make sure the pixel formats of the source and target surfaces are not the same, or else we don’t need to convert them in the first place. STATIC_ASSERT is also introduced in the book 'Modern C++ Design.'

These raster operations are slightly different from other operations we defined before. They accept two template parameters instead of one, so we can hardly say that they belong to the same policy anymore. And the host classes, SurfaceRectToXY, SurfaceRectToRect and SurfaceRectTransform, can’t work with them either.

So from here, we have two choices. We can either add another template parameter to all of the other raster classes and the three host classes. Or we can add three new host classes to take the converters and keep the older system intact.

With the first solution, we will have something like this:

template <typename T1, typename T2>

class SimpleCopyBlender

{

public:

static void blend(typename T1::PixelType* bkg, UCHAR* destAlpha, typename T2::PixelType* fore, UCHAR* srcAlpha) {

STATIC_ASSERT(T1::ms_typeid == T2::ms_typeid, Source_And_Target_Format_Mismatch);

*bkg = *fore;

}

};

template <typename T1, typename T2, template <typename, typename> class BlendPolicy>

class SurfaceRectToXY : public BlendPolicy<T1, T2>

After these modifications are applied, the three host classes will be able to work with all the blenders, including the two converters.

Surface surface1, surface2, surface3;

SurfaceT<R5G6B5> surface4;

// copy without conversion

SurfaceRectToXY<Pixel, Pixel, SimpleCopyBlender> o;

o.copyRect(&surface1, 0, 0, &surface2, &rct);

// copy with conversion

SurfaceRectToXY<R5G6B5, Pixel, ConvertWithoutAlphaBlender> o2;

o2.copyRect(&surface4, 0, 0, &surface3, &rct);

If we use the second solution, we will end up with two distinct systems. The older system remains unchanged. The new system consists of two converters, each with two template parameters, and three new host classes which accept them. But in the real world, who needs to convert pixel formats and stretch/rotate them at the same time? So we will only need to write a new version of SurfaceRectToXY. You may call it SurfaceRectToXYWithConvertion.

To compare the two solutions, the first one has a unified interface for all operations at the expense of messy parameters. The second one doesn’t have all these messy parameters at the expense of a new class. I can’t say which one is better. I will leave the decision to you. In the sample code, I used the second solution.

That pretty much wraps up what I have to say about the 2D render base I presented here. Before moving on to the next topic, I’d like to enumerate some commonly used raster operations.

Table 1: More raster operations

Blender Name | Description |

CopyWithConstAlphaBlender | Copy all pixels to the target surface using a constant alpha value. It’s used to fade in/ fade out a picture. Trgb = Trgb * (1 - Ca) + Srgb * Ca |

AlphaBlenderWithColorMask | The 4 channels (ARGB) are filtered by a constant mask before blending into the background. Trgb = Trgb * ( 1 – Sa * Ca) + Srgb * Crgb * Sa * Ca |

AdditiveBlender | The RGB components of the source pixel are added into those of the target pixel. The values are truncated to maximum value. Trgb = Trgb + Srgb |

SubtractiveBlender | The RGB components of the source pixel are subtracted from those of the target pixel. The values are truncated to 0. Trgb = Trgb – Srgb |

AdditiveBlenderWithColorMask | The source color is filtered by a constant value before adding into the target color. Trgb = Trgb + Srgb * Crgb |
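As an example of how one of these rows turns into code, here is a sketch of AdditiveBlender. It assumes 8-bit color components, so it saturates at 0xff; a version for R5G6B5 would need per-channel maximums. The pixel policy is repeated in reduced form so the example stands on its own.

```cpp
#include <cassert>
#include <cstdint>

typedef unsigned char UCHAR;   // stand-in for the Win32 typedef

// Reduced copy of the X8R8G8B8 pixel policy.
struct X8R8G8B8 {
    typedef std::uint32_t PixelType;
    static PixelType getR(PixelType o) { return (o & 0x00ff0000) >> 16; }
    static PixelType getG(PixelType o) { return (o & 0x0000ff00) >> 8; }
    static PixelType getB(PixelType o) { return o & 0x000000ff; }
    static PixelType assemblePixel(PixelType r, PixelType g, PixelType b) {
        return (r << 16) | (g << 8) | b;
    }
};

// Trgb = Trgb + Srgb, with each component clamped to its maximum.
template <typename T>
class AdditiveBlender {
public:
    static void blend(typename T::PixelType* bkg, UCHAR* /*destAlpha*/,
                      typename T::PixelType* fore, UCHAR* /*srcAlpha*/) {
        *bkg = T::assemblePixel(saturate(T::getR(*bkg) + T::getR(*fore)),
                                saturate(T::getG(*bkg) + T::getG(*fore)),
                                saturate(T::getB(*bkg) + T::getB(*fore)));
    }

private:
    // Assumption: components are 8 bits wide.
    static typename T::PixelType saturate(typename T::PixelType v) {
        return v > 0xff ? 0xff : v;
    }
};
```

Adding 0x00201030 onto 0x00f01020 gives 0x00ff2050: the red channel overflows and is clamped, while green and blue are plain sums.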

If you are as curious as me, you must be very interested in the performance. Well, I don’t expect it to be as fast as code written in ASM, but I can say that it’s fast enough for my game. I made some tests with it and here are the results.

Table 2 shows the operations I added in the main loop. They are executed in one frame. The fill rate is chosen so that it’s close to a real game. I ran 4 tests on 2 machines with different bit depth. The results are shown in Table 3.

Table 2: The operations executed in a benchmark program

Operation | Number | Fill Rate | Description |

800* 600 simple copy | 1 | 100% | A background image |

100 * 60 copy with color key | 100 | 125% | Most of the sprites |

100 * 60 alpha blend | 30 | 37.5% | Some sprites need alpha blending |

Table 3: Test result of the benchmark program

CPU | Bit Depth | FPS |

Pentium M 1.4G running at 583MHz | 16 | 62 |

Pentium M 1.4G running at 583MHz | 32 | 56 |

Pentium D 2.8G | 16 | 202 |

And I ran another 4 tests in a real game, with music, sound, network and logic enabled. I’m not very careful with the performance in this game. It uses a lot of real time rotation and some real time scaling, all with alpha blending. Image 3 is a screen shot of the game. The test results are shown in Table 4.

Image 3: Screen shot of a real game test.

Table 4: The result of real game tests

CPU | Bit Depth | FPS |

Pentium M 1.4G running at 583MHz | 16 | 41 |

Pentium M 1.4G running at 583MHz | 32 | 40 |

Pentium D 2.8G | 16 | 120 |

You may have noticed that I omitted some important error checks in the code. For example, I didn’t check whether the source rectangle and the target rectangle exceed the boundary of the surfaces except in SurfaceRectTransform. I didn’t even check whether the alpha channel of a surface existed before using it. I don’t do this because I want to leave these checks to a higher level of code so that the core code is small and clean.

In this topic and the next one, I will discuss some optimizations beyond the code level.

Pixel fill rate is the most wanted FPS killer. So rule number one, design the game to minimize fill rate. And you should not make the mistake of drawing something that only gets covered later. I’ll give you an example. In Image 3, the main game area covers a great part of the background. So I made sure not to paint that part of the background.

I’m sure you won’t expect a game with a lot of real time rotation and stretching to be fast. So rule number two, try your best to avoid real time rotation and scaling. You can always pre-rotate images at loading time, if the extra memory consumption is acceptable.

Rule number three, always use the degraded version of raster operations whenever possible. The background should be rendered without color keys or alpha channels. And use color-keyed images whenever possible instead of the ones with alpha channels. In fact, a lot of games from Korea use color-keyed images only. And the sharp edge even seems more charming than the blurred edge of an alpha image. The artists in Korea are really good at it. Even semi-transparent effects are created with dot grid of color key.

Dirty region is a technique to minimize fill rate by only painting the parts of the screen that differ from the last frame. If back buffer scrolling is supported with it, it can easily cut the fill rate of a game by 50% or even 80%. For those who still remember, the fact that Starcraft runs comfortably on a Pentium 133 owes a great deal to the use of dirty regions.

I don’t have time to use dirty region yet. But I do think about how to do it. I think I can slice the screen into small pieces. And use the steps described below to detect which part has changed:

Pseudo-paint the scene and record all the operations happening in every piece of the screen. The operations are not really executed yet. After this, each piece will have an operation list. By list, I mean the operations should be executed one after another. If an operation extends to more than one piece, it will be refined into smaller operations.

For each piece, compare the operation list with the one of the last frame. If they are the same, then ignore this piece. If they are not the same, execute the operation list.
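The two steps above can be sketched as follows. This is only one possible reading of the idea, untested in a real game: the class name DirtyTileTracker, the fields of TileOp and the integer ids for blenders and surfaces are all invented for the example, and coordinates are assumed to be non-negative.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// One recorded operation, already clipped to a single tile.
struct TileOp {
    int blenderId, surfaceId;          // which raster operation and source
    int srcX, srcY;                    // top-left of the source fragment
    int destX, destY, width, height;   // clipped target rectangle

    bool operator==(const TileOp& o) const {
        return blenderId == o.blenderId && surfaceId == o.surfaceId &&
               srcX == o.srcX && srcY == o.srcY &&
               destX == o.destX && destY == o.destY &&
               width == o.width && height == o.height;
    }
};

typedef std::vector<TileOp> OpList;

class DirtyTileTracker {
public:
    DirtyTileTracker(int screenW, int screenH, int tileSize)
        : m_tile(tileSize),
          m_cols((screenW + tileSize - 1) / tileSize),
          m_rows((screenH + tileSize - 1) / tileSize),
          m_current(static_cast<std::size_t>(m_cols * m_rows)),
          m_previous(static_cast<std::size_t>(m_cols * m_rows)) {}

    // Step 1: pseudo-paint. Nothing is drawn; the operation is split along
    // tile borders and each fragment is appended to its tile's list.
    void record(int blenderId, int surfaceId, int srcX, int srcY,
                int destX, int destY, int width, int height) {
        for (int ty = destY / m_tile; ty <= (destY + height - 1) / m_tile; ++ty) {
            for (int tx = destX / m_tile; tx <= (destX + width - 1) / m_tile; ++tx) {
                if (tx < 0 || ty < 0 || tx >= m_cols || ty >= m_rows) continue;
                int left = std::max(destX, tx * m_tile);
                int top = std::max(destY, ty * m_tile);
                int right = std::min(destX + width, (tx + 1) * m_tile);
                int bottom = std::min(destY + height, (ty + 1) * m_tile);
                TileOp op = { blenderId, surfaceId,
                              srcX + (left - destX), srcY + (top - destY),
                              left, top, right - left, bottom - top };
                m_current[static_cast<std::size_t>(ty * m_cols + tx)].push_back(op);
            }
        }
    }

    // Step 2: a tile is dirty when its list differs from last frame's list.
    // Returns the dirty tile indices and starts recording the next frame.
    std::vector<int> finishFrame() {
        std::vector<int> dirty;
        for (std::size_t i = 0; i < m_current.size(); ++i)
            if (m_current[i] != m_previous[i]) dirty.push_back(static_cast<int>(i));
        m_previous.swap(m_current);
        for (std::size_t i = 0; i < m_current.size(); ++i) m_current[i].clear();
        return dirty;
    }

    // Operations to execute for a tile reported by the last finishFrame().
    const OpList& ops(int tile) const {
        return m_previous[static_cast<std::size_t>(tile)];
    }

private:
    int m_tile, m_cols, m_rows;
    std::vector<OpList> m_current, m_previous;
};
```

Each frame, the game would call record() for every draw, then finishFrame(), and finally execute ops(tile) for the returned tiles only. One thing the comparison cannot see is a change in the pixels of a source surface itself, so animated or re-rendered surfaces would need a new id (or a version field) to be detected.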

It’s quite straightforward, and I have confidence that it will work. If you have other ideas about how to do it, please don’t hesitate to discuss with me by email: [email protected].

I think optimization like this is more reliable than assembly level optimization, because they don’t rely on any special instruction set. So before diving into assembly level, please make sure you’ve done everything in a higher level.

Download source code here (*.rar format).
