Blitz Games Studios studio development director Chris Viggers explains how user testing revealed flaws in player interaction with two of the studio's Kinect titles -- and how the flaws challenged the assumptions of the development team.

It's popular, it's a buzzword, it's a growth industry and many developers are afraid of it, but what exactly is usability testing and how can it be used to create ever more compelling entertainment?

From a developer's perspective, at its core, usability testing is something that tells designers, artists, coders, animators and managers what they are doing wrong. It is human nature to try to avoid any sort of pain (mental or physical) and many of us struggle to accept criticism, even when that feedback could lead to a better end result.

In addition to the problems of essential human nature, the games industry is changing even more rapidly than before and our creations are being experienced by more and more people, and ever more diverse demographics, each one with their own expectations of how they want to be engaged while spending their precious time on our games.

As any producers reading this will testify, during development you are always acutely aware that you never have enough time, enough money, or enough resources to achieve what you really want to achieve with that game.

Adding yet another process into your production pipeline is going to be a difficult sell both to the team and to upper management, especially when that process is highly likely to result in rework to fix the problems that the players are flagging up.

However, the reality is that your game is going to be tested at the end whether you like it or not, and that testing will come in the form of a review or Metacritic score. If you ignore end user feedback and usability results during development, you run the very real risk of getting poor reviews and feedback that will publicly damage your game and reputation. Facing up to this fact and integrating this with your development pipeline is key to success.

Our studio design director, John Nash, has already talked here about how input devices for gaming have changed massively over the years and are continuing to do so. This, combined with the huge variety of new target audiences, means that we need to not only embrace player input at a much earlier stage in development, but also learn to adapt our work flow to accommodate this input.

In this article I will outline some practical examples of how we did this at Blitz Games Studios, in this case focusing more on boxed product development rather than direct-to-consumer development as the challenges are very different.

Usability testing is firmly rooted in scientific methodologies and relies on generating data from the user experience of the game that is independent of what the player actually thinks about the game (or rather, what they think they think about the game). It is about hard metrics, unarguable data and demonstrating how the player is actually playing the game.

It is not only the collection of data that is important; understanding its value is critical to maximizing its effectiveness in development. The first thing to look at is how the data you have collected stacks up against your game's experience and the core pillars of your vision.

For example: was the user frustrated in a certain area, only to be elated when they finally solved the puzzle, and is that something that you actually want to maximize? Or were they scared during certain areas and moving slowly through the environment due to their anxiety, rather than just because they're bored or lost? I'm looking at you, Limbo and Dead Space!

To provide a concrete example of how specific user testing helped on one of our projects (Yoostar 2) last year, over six sessions of testing we found that more than 40 individual items required further attention to ensure that we maximized accessibility and experience for the player.

40 individual items identified and resolved for Yoostar 2

Even with all this data collected, the team did not just jump on each issue and attempt to fix it. Each area was assessed against the core pillars of the vision and what we wanted the player to feel during the experience.

Would this change result in the game being more accessible or more complicated, would any change in terminology be understood worldwide, or do any of these changes affect core aspects such as the timing of the game loop or the user's ability to get right to the action? These are all questions you need to ask yourself before amending any parts of the game, even with the most 'expert' feedback in front of you.

It is also worth remembering that although the data you get back is important, some of the results you get will point to the need for more generic solutions; in other words, some of the problems are caused by developing innovative features with which people are not already familiar. Assessing people's expectations is great, but surprising them with unique and innovative ideas is also something we should be striving to achieve.

Accord the usability results all due respect, but do not treat them as gospel: trust your instincts and your vision as well.

When we embarked on the Yoostar 2 project, we knew that we needed far more information before we wrote a single line of code.

We knew this because it was a game that not only utilized brand new hardware that no one at the time had yet experienced (Kinect), but the game also gave players the ability to directly star as themselves in movie scenes -- something fresh and new to the audience.

The first questions we asked ourselves were "Is the core concept of the game going to appeal to the chosen demographic, and what do they expect from the concept?" and "How do people expect to interact with Kinect?"

An additional and important issue was to capture feedback on the visual styling of the game. There was only one way to get answers to these questions, and that was to ask the people at whom the game was targeted.

With regard to actually navigating and playing the game experience, we immediately contacted Vertical Slice, a company who specialize in user experience and usability testing. We sent them a PowerPoint presentation of what we thought the game flow and menus might look like -- no code, just plain slides.

We also took our ideal target audience and got them to "play" the PowerPoint, standing up, with no controller, using gestures. The results were stark. An overview of players' expectations is shown below.

An internal focus test for expected and reliable gestures

From our initial focus test on the game features themselves, a number of interesting facts became immediately apparent:

The Career mode was unanimously viewed as a highly motivating reason to play the single player game.

Players wanted the option to both copy a scene accurately and to do a creative, interpretative performance, but their expectations of the results varied. Players who wanted to copy accurately wanted a score, while those who wanted to ad-lib were motivated simply by creating humorous pastiches.

Scores were also reported to be important for replay value; the players wanted to know that they were improving if in single player mode, or that they were beating their friends in multiplayer.

The possibility of DLC for further film clips was well-received.

The players expected Hollywood style imagery to be part of the visual style.

With regard specifically to people's expectations of how to use Kinect, a selection of the findings were:

People found controlling a cursor to be much slower than traditional pad navigation.

People expected a few simple options on screen rather than many different choices.

We took these results back to the team and then started work in earnest on the game design and game flow with this information in mind. Again, this information seemed like common sense in hindsight, but at the time these pointers were valuable in creating something to which the target audience would really relate.

I always joke that game developers are the worst people in the world to make games. We are so close to the process and so passionate about what we do that making objective judgments can be difficult, if not impossible, when we are inside our bubble. We have found usability testing to be a near-perfect way to address this all-important balance of passion with calm judgment. A simple example of this would be when we decided that to indicate the direction of one of the Kinect 'rail' UI elements in Yoostar 2, we would use a simple arrow:

Original start button concept

That seems perfectly simple, doesn't it? We thought so too, until we tested the UI.

90 percent of users wanted to push the button, as it looked exactly like a play button on a VCR. The first button in the game and nine out of ten people could not get past the first screen: we had a problem.

Although this was in the game for a number of weeks, not one person in the studio had questioned it. We all knew how Kinect worked and thought "Ah, this must be a rail so you swipe your hand to the right". All that detailed technical knowledge of Kinect and how to make games actually worked against us in this instance.

The final solution, to include arrow movement and effects for user feedback

This is a simple example and thankfully something that was incredibly easy to fix with little impact on either the team or the project schedule, but it beautifully illustrates the dangers of game developer thinking against a real user's perspective.

A really important element of usability testing and one that many people might overlook is the need to prepare your team, especially your game designers, for getting external feedback on a regular basis. By ensuring that our team cultures support open communication and feedback, an open mind and a professional attitude, we could encourage people to accept the feedback and learn to use the data in conjunction with the established game vision, even to welcome it, rather than seeing it as a list of extra tasks dictated to them by a faceless external third party.

Including as many team members as possible in the usability sessions themselves is an excellent way of encouraging buy-in, and it also creates advocates and ambassadors who will enthuse about the process back in the studio.

Testing areas of the game depending on difficulty curve can raise some delicate issues as well. It is important to test further along in the game flow where you would expect real players to have built up game skills, so trust your difficulty matrix and make sure you distinguish between a real usability issue and a difficulty issue.

Training your designers to recognize these is crucial to ensure that your game avoids becoming generic with regard to its challenge levels.

We all know the value of polish to the final game assets, but it is also important to note that when you are testing functionality, the value of polish to the area you are testing is key to the success of the feature.

This is of critical importance with motion controllers such as Kinect. It is all too easy to get a feature to a functional pass where technically you can test it, but if the feature lacks basic polish such as sound effects, graphical effects, some control polish and music, the user will most likely reject or have problems with that feature.

This then gives you data showing the feature has "failed", and the team then either spins its wheels coming up with (usually) more complicated solutions to "fix" the feature or abandons it altogether, whereas in reality a little polish before testing would have made the results more meaningful and saved wasted effort. (Again, this comes down to the sometimes risky dichotomy between the developer mindset and player expectation.)

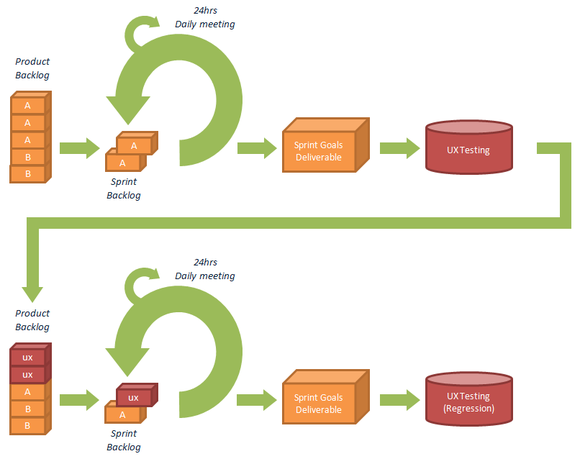

If you are using an Agile development methodology, integrating this into your process can be fairly simple, as long as it is thoroughly thought through up front. It is relatively easy to ensure that as soon as a product feature is complete (Feature A), you enter (at least) that feature into test; once the feature is tested, you take the findings and add these directly into your product backlog for the project, then deal with the relevant items during the next sprint.

Integrating user testing feedback into your sprint planning

The traditional bottleneck in this method comes when the team wants to move on to the next stage of the same feature (further iteration), but has not yet received the report from the user testing phase. A simple way to mitigate this in most instances is to have the sprint team move to a new product feature (Feature B) while the Feature A user testing results are being recorded and discussed, and then move back to Feature A for the final sprint, incorporating the user testing findings directly into your backlog for that sprint.
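As a rough illustration of the scheduling idea above, the following Python sketch interleaves features so that each one is built, sent to test, and only iterated on once its findings could have come back. The names `Finding`, `Backlog` and `plan_sprints` are hypothetical and invented for this example; they do not describe the tooling used at Blitz.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    feature: str       # feature the finding was recorded against
    description: str   # what the user testing session observed


@dataclass
class Backlog:
    items: list = field(default_factory=list)

    def add_findings(self, findings):
        """Add user testing findings directly to the product backlog."""
        self.items.extend(findings)

    def for_feature(self, feature):
        """Return the findings to deal with when iterating on one feature."""
        return [f for f in self.items if f.feature == feature]


def plan_sprints(features):
    """Build every feature first, then iterate on each in the same order.

    While a later feature is being built, the earlier one is in user
    testing, so its report has time to arrive before its iteration sprint.
    """
    build = [("build", name) for name in features]
    iterate = [("iterate", name) for name in features]
    return build + iterate


backlog = Backlog()
plan = plan_sprints(["Feature A", "Feature B"])

# Hypothetical finding, standing in for a line from the test report.
backlog.add_findings([Finding("Feature A", "placeholder finding from session 1")])
```

With two features the plan is build A, build B, iterate A, iterate B, so Feature A's report arrives while the team is busy with Feature B. With a single feature the iteration sprint follows the build immediately, which is exactly the bottleneck described above.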

Holding individual user testing sessions is useful, but ensuring that you follow up with regression sessions specifically dedicated to testing out the changes that you make is essential.

It is very easy to fall into the trap of making a change based on limited feedback and assuming that this is the "correct" fix for that issue, only to find that you have actually made it worse. Again, this can be due to the amount of polish on a particular feature rather than the feature itself, so that possibility should also be addressed.

One example of this was with our Fantastic Pets Kinect game, where we had reports from the first test that many of our younger users were having trouble throwing the ball to the pet; specifically, the problem lay in picking up the ball and also the ball leaving the hand at the expected time (sticky ball syndrome).

As it can be very tricky to get gesture recognition on Kinect right for all users, whether young or older, we took another look at the code and systems we were using.

Having made some tweaks to the systems and tested them internally with the game team, we saw some improvements, especially with launching the ball. We therefore believed that we had improved this aspect ready to go into the next test session.

In the second test session we were horrified to see that players still had trouble picking up the ball. After further investigation, we realized that the problem actually lay in the player's expectation that they should be able to throw one ball directly after another, rather than wait for the pet to bring the ball back to them.

The players thought this because the camera was in a fixed position while the dog went to fetch the ball, so they just expected to be able to throw another one. A simple fix to add cinematic cameras that followed the dog during the retrieval of the ball resulted in the players understanding that they needed to wait until the view returned to the standard camera before they could grab the ball and throw it again.

Without this regression session, we would have assumed that we had fixed the issue with the code changes that we made and could have shipped the game without addressing the real root cause.
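One way to make that discipline explicit is to treat an issue as unresolved until a regression session has confirmed the fix with real players. The sketch below is a minimal, hypothetical model of that rule; the `Status` and `Issue` names and the status values are assumptions made for illustration, not a description of the tracking system we used.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    REPORTED = "reported"        # seen in a user testing session
    FIX_APPLIED = "fix_applied"  # team changed something, not yet re-tested
    VERIFIED = "verified"        # regression session confirmed the fix
    REOPENED = "reopened"        # regression session showed the problem persists


@dataclass
class Issue:
    description: str
    status: Status = Status.REPORTED
    fixes: list = field(default_factory=list)

    def apply_fix(self, note):
        """Record a change; the issue is not resolved until re-tested."""
        self.fixes.append(note)
        self.status = Status.FIX_APPLIED

    def record_regression(self, players_still_struggling):
        """Update the status from what the regression session showed."""
        if players_still_struggling:
            self.status = Status.REOPENED
        else:
            self.status = Status.VERIFIED

    @property
    def safe_to_ship(self):
        # Passing an internal test alone never sets VERIFIED.
        return self.status is Status.VERIFIED


# Replaying the Fantastic Pets ball-throwing example in this model.
issue = Issue("Younger players have trouble throwing the ball to the pet")
issue.apply_fix("Tweaked gesture recognition systems")
issue.record_regression(players_still_struggling=True)
reopened_after_first_fix = issue.status is Status.REOPENED

issue.apply_fix("Added cinematic camera that follows the pet")
issue.record_regression(players_still_struggling=False)
```

The key design choice is that `apply_fix` can never mark an issue as verified on its own; only the outcome of a regression session can.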

At Blitz, we all read and practice Agile methodologies for game development; integrating usability testing at regular intervals into our Agile process was therefore a relatively easy step forward for us, and something that we will definitely continue to maximize for the future. While usability testing is a relatively new science with regard to its role within game development, we believe that it offers unmatched potential in creating successful entertainment for an ever-growing and broadening audience.

Does factoring usability testing into your pipeline mean more work? Yes. Does it add cost to your project? Definitely. Are the end results worth it to your company and your teams? Without a shadow of a doubt. Our passion at Blitz Games Studios is to create critically and commercially successful games that everyone can enjoy, and testing our games with the people who matter is key to achieving this into the future.

Many thanks to Struan Robertson and Steve Stopps for their help with this article.
