Could games like Civilization benefit from putting their interfaces on a diet? Can a player control too many objects at once in a strategy game? Are the thinking man's war simulations bowing down to energetic arcade controls? Find out inside!

Computer games traditionally have a player control one or more units on the screen. In early games, each player controlled one unit. As CPU power grew, players controlled more and more units. Today, a player might have hundreds of units, each of which must be controlled individually. The unit-based user interface (UI) is no longer sufficient. This article will suggest a different way of thinking about UIs, and will discuss how to compare one UI to another, or one UI to the theoretical maximally efficient UI, to tell if your game can be improved. I’ll use examples primarily from strategy games, but the approach applies to UIs for programs of all kinds.

My favorite game of all time is Civilization, in all its incarnations. When I introduce friends to the game, they’re enthusiastic for one or two thousand years – which, in Civ, means about a hundred turns. By then the cities, the units, and the waiting have multiplied so much that it becomes, for the novice, a chore more than a game.

Even for a Civ addict like me, the game isn’t much fun after about 1800. Too many clicks. I counted the clicks, mouse movements, and keystrokes that it took me to get through one turn of Civilization III in the year 1848. Many hours later, when that turn was done, I’d counted 422 mouse clicks, 352 mouse movements, 290 key presses, 23 wheel scrolls, and 18 screen pans. This was making full use of all the Civ shortcuts, automation, and group movements. I probably would have made twice as many movements if I hadn’t been counting.

You may wonder why I’m talking about Civ III, when Civ IV has been out for months. I never bought Civ IV. I’d been waiting and hoping for a more playable Civ. What finally arrived was a Civ that takes just as many clicks, but with a new animated 3D UI.

Don’t get me wrong – Civ IV has important new gameplay aspects. Firaxis did far better than companies who create some new units, artwork, and cut scenes, and call it a new version. But I didn’t stop playing Civ III because I was tired of the game, or because it wasn’t pretty enough. I stopped because it takes too long to play a game. Civ didn’t need a prettier interface – it needed a more efficient one.

Overclick isn’t limited to Civilization. Real-time strategy games will leave you with even worse carpal tunnel. That’s why I don’t play Warcraft or its descendants online. In terms of clicking skills, I’m over the hill. Strategy is irrelevant in today’s real-time strategy games when you’re playing against a fourteen-year-old who can click twice as fast as you.

The RTS user interface hasn’t improved since Total Annihilation (1997), which had more useful unit automation than many current games. Meanwhile, the number of objects our computers can control and animate has increased, and continues to increase, exponentially. The old UI model isn’t at the breaking point – it’s broken.

This article is about how to design a UI that lets players communicate their intent with fewer clicks. I’m not going to address UI ergonomics (physical ease of use) or cognitive ergonomics (issues such as eyestrain and human memory and processing requirements). The energetic reader should incorporate those as well into their UI evaluation, but it’s too complex for this short article.

Strategy games typically place the user in the role of something like a battalion commander. But think about the job of a real battalion commander. He doesn’t sit watching a map and barking orders to each tank on the battlefield. In combat, he has a small number of people he gives direct orders to. A squad leader in the US Army leads about nine soldiers, with the help of two fire team leaders. A platoon leader typically commands 4 squad leaders plus 3-4 other staff. A company commander typically gives orders to four platoon leaders plus staff; a lieutenant colonel might command a battalion of four companies plus staff. And so on, up the chain of command.

This is no accident. It is a fundamental principle of military organization doctrine that a commander can effectively manage only a limited number of subordinates. The number of directly-reporting subordinates that a commander has is known as his span of control. The most efficient span of control is believed to be the same for a platoon leader as for a theatre commander. 19th-century European armies settled on seven as the maximum span of control, and that number hasn’t changed much since.

The rule of thumb in the US military today is that span of control should be from 5 to 7. A supervisor in FEMA is supposed to oversee no more than seven subordinates during a disaster-relief effort. According to US House Report 104-631, the average span of control across the entire US government bureaucracy in 1997 was seven. Not coincidentally, seven is also the number of items that the average person can keep in memory at the same time (Miller 1956). This is why phone numbers within an area code are seven digits.

This rule should apply to strategy game design as well. A player who is controlling more than seven entities can’t effectively supervise any one of them. (A corollary is that, if a turn takes a minute, and a player makes a move more than about once every 10 seconds, that player probably isn’t focused and isn’t getting an opportunity for the kind of deep, strategic thought that is supposed to be the source of enjoyment in a strategy game. Games that require a click per second are arcade games, regardless of their complexity.)

The seven subordinates that a field commander controls often include one or two who don’t actually act in combat, but who merely relay information to the commander. This means that different information displays (such as the city screen and the technology screen in Civ) count towards the seven.

There are complications to this rule. Take chess – each player controls 16 pieces, and must be aware of 16 enemy pieces. That’s why chess is so frustrating to a beginner. But chess experts can do it. Are they breaking the rule of seven?

Well, yes and no. Gaining expertise in chess doesn’t consist of learning to keep track of more and more pieces in your head. It involves learning to break board positions down into separate, familiar structures – a pawn structure, a castled king behind three pawns and a knight, a set of pieces exerting influence on the center squares – in order to bring the number of concepts down to a manageable number (say, oh, seven).

The span of control in the US military is intended to be such that a commander can command and supervise one level down (his subordinates), and keep track of everything happening two levels down (his subordinates’ subordinates). The chess player is responsible for two levels of control: first, choosing the subgoal for each of these structures (e.g., “control the center” or “break up his defensive pawn structure”); second, choosing a move to implement each subgoal, and choosing just one of those moves. Thus, it isn’t keeping track of all the chess pieces, but having to choose every move, that violates the rule of seven. If you want your game to play faster than chess, and still involve strategy, you must observe the rule of seven.

A game designer might think she can have the best of both worlds by making a game in which the player can control every unit, but doesn’t have to. This, unfortunately, is not so. There’s a rule in economics called Gresham’s Law of Money: Bad money drives out good money. Gresham explained why, when a country tries to use both metal coins that have real inherent value, and paper bills that don’t, the paper money drives the coins out of the marketplace, until everyone is using only paper money.

In gaming, bad players drive out good players. In roleplaying games, the bad roleplayers, who emphasize accumulating wealth and power over playing a role well, advance faster and eventually drive out the good roleplayers. In a game which allows control of individual units, adrenaline-filled 14-year-olds who can make three clicks a second will beat more thoughtful players who rely on the computer to implement their plans, because we’re still a long way from the day when a computer can control units better than a player.

There is a player demographic that enjoys click-fests and micromanagement, and it may be the same 14-year-old males that the game industry’s magazines, advertisements, and distribution channels are aimed at. Trying to step outside that familiar demographic is always hazardous. (I believe games won’t be mainstream until they’re sold at Borders; however, that’s a separate rant.) No producer would want to lose this market share, so it might be good to have individual unit control available as an option.

However, the market of players who do not enjoy carpal tunnel, which I suspect is much larger than the market of 14-year-old males, is not just underserved by today’s games; it is completely unserved. If the game is to also allow control of individual units, it must be a separate game option, and players should be able to set up multi-player games that disallow individual unit control.

By now, you’re probably questioning my sanity and the wisdom of the Gamasutra editors. Am I saying that strategy games should only allow a player to build seven units? Not at all. I am saying that the player shouldn’t control them all directly. We need to conceptualize an intervening level of control. It isn’t hard to do, but is hindered by a common misconception about object-oriented programming.

Smalltalk users called objects “objects”, and, what’s worse, they called methods “verbs”. Ever since, many object-oriented programmers have interpreted the word “object” as something like “noun”. I had arguments with other adventure programmers in the 1980s who insisted that a game wasn’t object-oriented unless the physical objects in the game were OO objects in the code. When I suggested organizing the code so that verbs in the game were objects in the code, thus enforcing a consistent physics on the game, they said, “Objects are objects; verbs are verbs.” To this day, we organize our game code, and the user interface, around the physical objects in the game.

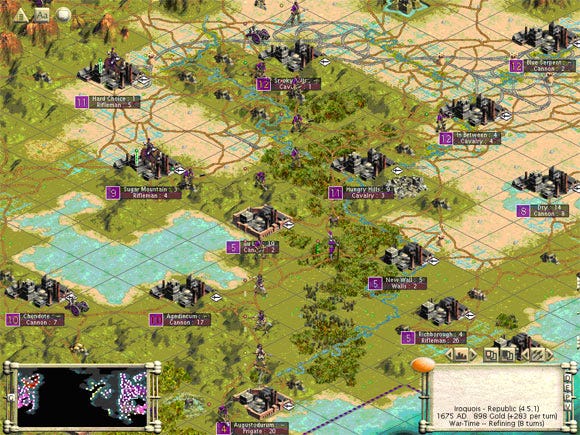

There’s no need to do so. Objects, in the OO sense, can be any abstraction you choose. In the case of Civ III, an object could be a military action or an engineering project. Consider Figure 1. In this figure, my civilization had recently developed the technology for railroads. I was attempting to construct a railway line connecting the north end to the south end of my civilization.

Figure 1

With the unit-centered interface of Civ III, this requires clicking on individual worker units and assigning them to individual sections of the railway line. Each worker must be assigned to a short section of the line, because if you start one unit from up north toward the south, and another unit from the south toward the north, they compute the path they will follow at the beginning of their assignment, and don’t adjust it to account for the work done in the meantime by other units – leading to a multitude of non-overlapping parallel railway lines, and armies that can’t get to your borders before you’re overrun by Roman legions.

I needed to assign about a hundred workers to building the railway line in order to get it built before being overrun. For each worker, I had to click on it once to bring it into focus; then type ‘g’ to begin a movement, scroll to its starting point on the railway line, and click again. Later, when it reached that point, I would have to type “ctrl-r” to build a railroad, scroll to the end of that unit’s portion of the railway, and click again. That’s three mouse movements, three keystrokes, and three mouse clicks per unit. I tried to keep the workers in groups of three, although this was possible only about half the time. So it probably took me 600 clicks, keystrokes, and scrolls to build that railway.
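The figure of 600 can be reproduced with a back-of-envelope calculation. The sketch below assumes exactly 100 workers, nine UI events per command sequence (three mouse movements, three keystrokes, three clicks), and that half of the workers were commanded in groups of three while the rest were commanded one at a time. That reading of "about half the time" is an assumption, and the function name is purely illustrative.

```python
# Back-of-envelope estimate of the UI events needed to build the railway.
# Assumed: 100 workers, 9 events per command sequence, and half of the
# workers moved in groups of three (one command sequence per group).
WORKERS = 100
EVENTS_PER_SEQUENCE = 3 + 3 + 3  # mouse movements + keystrokes + clicks


def railway_events(workers=WORKERS, grouped_fraction=0.5, group_size=3):
    """Total UI events when a fraction of the workers is commanded in groups."""
    grouped = workers * grouped_fraction
    solo = workers - grouped
    sequences = solo + grouped / group_size
    return sequences * EVENTS_PER_SEQUENCE


print(round(railway_events()))                     # 600
print(round(railway_events(grouped_fraction=0)))   # 900 with no grouping at all
```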

Imagine if I’d been able to say that I wanted to build a railroad, click on its start, and click on its end. The computer would then have directed workers, as they became available, to work on sections of the railway. The entire railroad could have been constructed with the same amount of supervision that it took me to direct one worker.

The railway was needed to move troops to my borders to defend against the Romans. Again, each new unit built had to be individually routed to some point along the border to defend. Imagine how much less pain my wrists would be in if I could simply define the border, cities, or points to defend, to which the computer would direct surplus troops as they were built. But to implement this cleanly, the programmers would have to have conceived of railroads and borders as first-class objects.
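To make "railroads as first-class objects" concrete, here is a minimal Python sketch of a project object that owns the route and hands out tiles to workers as they become idle. The class name, its methods, and the one-tile-per-turn completion rule are all hypothetical; nothing here reflects how Civ III is actually coded.

```python
from dataclasses import dataclass, field


@dataclass
class RailroadProject:
    """A player-level order: 'build a railroad along this path'."""
    path: list                                      # ordered, distinct tiles
    built: set = field(default_factory=set)
    claimed: dict = field(default_factory=dict)     # tile -> worker name

    def next_tile(self):
        """First tile on the path that is neither built nor claimed."""
        for tile in self.path:
            if tile not in self.built and tile not in self.claimed:
                return tile
        return None

    def assign(self, worker):
        """Give an idle worker the next open tile; return it, or None."""
        tile = self.next_tile()
        if tile is not None:
            self.claimed[tile] = worker
        return tile

    def complete(self, tile):
        """Called by the game when a worker finishes a tile."""
        self.claimed.pop(tile, None)
        self.built.add(tile)

    @property
    def done(self):
        return len(self.built) == len(self.path)


# The player issues one order (start and end); the game loop does the rest.
project = RailroadProject(path=[(0, y) for y in range(6)])
idle_workers = ["w1", "w2", "w3"]
while not project.done:
    for worker in idle_workers:          # no two workers get the same tile
        project.assign(worker)
    for tile in list(project.claimed):   # assume each tile takes one turn
        project.complete(tile)
print(sorted(project.built))
```

Because the project, not the worker, decides which tile comes next, work already done by other units is always taken into account, which is exactly what the per-unit pathing described above fails to do.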

Part of the reason that a commander can get by with commanding only seven subordinates is prior preparation. He has drawn up scenarios in advance of any action, and can cause a quick and dramatic change in his battalion’s actions by ordering a switch from one scenario to another. His service branch has a standard library of tactics, from the squad level on up, which he can use during an action to explain his intent to his subordinates. His subordinates have rules of engagement to help them decide how to respond to a wide variety of enemy and non-combatant actions without his intervention. He can add to these rules prior to entering combat. He has many field exercises, and after each one, he tells his subordinates what they did right and wrong, and his superior tells him what he did right and wrong. This reduces the amount of direct supervision needed in combat.

If you design your game AI using a uniform formalism, such as a rule-based system or finite-state automata, you can open it up to your players as another way of directing their units. Creating rules of engagement for semi-autonomous units is considered necessary in real military wargame simulators such as JSAF or OneSAF. SAF, in fact, stands for “semi-automated forces,” which are units that can be given fairly sophisticated missions and rules of engagement, so that an operator can supervise many of them and intervene as little as possible, while still providing a realistic training environment for the real humans controlling each of the opposing units.

Some games, like Quake, allow players access to the AI to program enemies; others, descended from Robotwar, give players units that must be completely programmed and that cannot be directed during gameplay. None provide an interface for semi-automated forces. Providing two user interfaces – one to be used off-line to provide rules of engagement, and another to be used on-line – could reduce the stress of handling individual units.
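As a rough illustration of the off-line interface, rules of engagement can be represented as an ordered list of condition/action pairs that each unit consults on its own during play. The sketch below is a toy rule-based system in Python; the `Situation` fields, the rule set, and the action names are invented for the example and are not taken from JSAF, OneSAF, or any shipped game.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Situation:
    """What a unit can observe; the fields are illustrative."""
    enemy_in_range: bool
    enemy_strength: int
    own_strength: int
    health: float          # 0.0 (dead) to 1.0 (undamaged)


@dataclass
class Rule:
    name: str
    condition: Callable[[Situation], bool]
    action: str


# Written by the player off-line, in priority order (first match wins).
RULES_OF_ENGAGEMENT = [
    Rule("retreat when badly hurt", lambda s: s.health < 0.3, "retreat"),
    Rule("avoid stronger enemies",
         lambda s: s.enemy_in_range and s.enemy_strength > s.own_strength,
         "fall_back"),
    Rule("engage weaker enemies", lambda s: s.enemy_in_range, "attack"),
]


def decide(situation, rules=RULES_OF_ENGAGEMENT, default="hold_position"):
    """Return the action of the first rule whose condition matches."""
    for rule in rules:
        if rule.condition(situation):
            return rule.action
    return default


print(decide(Situation(enemy_in_range=True, enemy_strength=2,
                       own_strength=5, health=0.9)))   # attack
```

The on-line interface then only needs to handle the exceptions: the player intervenes when the rules produce something other than what was intended.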

To detect areas where your user interface is inefficient, you can play-test your game with a user-interface profiler. A UI profiler is like a code profiler, but instead of reporting CPU cycles, it reports user interface events. It should show you exactly how many clicks, keypresses, and mouse moves the player made within each part of your code. User interface profilers can present more sophisticated information, such as graphs showing the sequences of actions users took (Ivory & Hearst 2001).

You may be able to use your IDE’s profiler to count I/O events or function calls, but this won’t usually tell you what the player spends most of her time doing. You can get more information if you roll your own UI profiler by having your code call a routine to report UI events.
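A home-made UI profiler can be very small. The following Python sketch assumes your input handler can call a `report` routine for every event and that your game code can mark which part of the game is active; the class, method, and context names are hypothetical.

```python
from collections import Counter
from contextlib import contextmanager


class UIProfiler:
    """Counts UI events per named game context (e.g. 'negotiation')."""

    def __init__(self):
        self.counts = Counter()      # (context, event_type) -> count
        self._stack = ["global"]

    @contextmanager
    def context(self, name):
        """Attribute events reported inside the block to `name`."""
        self._stack.append(name)
        try:
            yield
        finally:
            self._stack.pop()

    def report(self, event_type):
        """Call from the input handler for every click, keypress, etc."""
        self.counts[(self._stack[-1], event_type)] += 1

    def totals_by_context(self):
        """Sum all event types within each context."""
        totals = Counter()
        for (ctx, _event), n in self.counts.items():
            totals[ctx] += n
        return totals


profiler = UIProfiler()
with profiler.context("negotiation"):
    for _ in range(5):
        profiler.report("click")
    profiler.report("keypress")
with profiler.context("city_screen"):
    profiler.report("click")

print(profiler.totals_by_context().most_common())
# [('negotiation', 6), ('city_screen', 1)]
```

Sorting contexts by total events is the UI equivalent of sorting functions by CPU time: the top of the list is where the player's effort is going.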

If the developers of Civ III had used a UI profiler, they would have found an inordinate number of clicks and keystrokes being used in the negotiation portion of the game. This would have revealed a bug in the game – a missing scrollbar in the city-selection menu – as well as the need for the game to recommend a minimally acceptable number of gold pieces to offer in trade, rather than making the user conduct a binary search to find it. These two tasks accounted for over a hundred of the UI events in my count.

To compare different potential UIs, you need a way of keeping score. Going back to my Civ III UI counts, I can come up with a total UI score by assigning a point value to each type of UI action. How you assign points depends on what you want to measure. For an arcade game, speed might be the primary criterion, and so you might count a mouse click as being nearly as fast as a keystroke – faster, if the user is already using the mouse anyway.

For a game such as Civ, which takes hours for a single game, you should weigh wear and tear on the player more heavily. Clicking a mouse button takes more than an order of magnitude more force than pressing a key, and uses the same finger each time, so that it causes a great deal more stress to ligaments in the wrist. (Pain that people blame on typing is usually caused by mouse use.) So I’ll count each mouse movement and keystroke as 1 UI point; each mouse click as 3 UI points; each wheel scroll as 6 UI points; and each mouse pan (scrolling the map) as 9 UI points. This gives a total of 2208 UI points for the turn. Different UIs can then be compared by score.
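The scoring is a plain weighted sum. This short Python sketch applies the weights above to the counts from the 1848 turn; only the dictionary keys and the function name are made up.

```python
# UI points per event type, as assigned in the text.
WEIGHTS = {
    "mouse_move": 1,
    "key_press": 1,
    "mouse_click": 3,
    "wheel_scroll": 6,
    "screen_pan": 9,
}

# Event counts from the single Civ III turn counted earlier.
COUNTS = {
    "mouse_click": 422,
    "mouse_move": 352,
    "key_press": 290,
    "wheel_scroll": 23,
    "screen_pan": 18,
}


def ui_score(counts, weights):
    """Weighted sum of UI events; lower is better."""
    return sum(weights[event] * n for event, n in counts.items())


print(ui_score(COUNTS, WEIGHTS))   # 2208
```

Swapping in a different weight table (for example, one tuned for speed rather than wear and tear) changes the score without touching the counts.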

A UI profiler can be used to evaluate and refine a UI that’s already been built. With a little math, though, you can evaluate a UI before writing any code. I’ll touch briefly on that next.

You can compute the work W that a player must do to specify a move using your user interface. If you measure W in terms of game variables, such as the size of the gameplay area and the number of player units, you can then compare different possible user interfaces, even if you can only estimate W.

For an example, consider a UI for sculpting virtual clay. You have a voxel array of size $n \times n \times n$, and each voxel can be on or off. We’ll consider four possible user interfaces.

In the first interface, the user types in the coordinates of each voxel that she wants turned on. The number of keystrokes it takes to specify a number from 1 to $n$ is proportional to $\log_2 n$, so this takes work proportional to $3\log_2 n$ to specify each on-voxel. (From now on, I’ll drop the phrase “proportional to” and write $W = f(x)$, with the understanding that it means $W = O(f(x))$.) If we suppose the artist will efficiently specify only a surface, and not fill in the entire inside of the sculpture, then the total work will be $W = 3n^2\log_2 n$. (The size of the surface is proportional to $n^2$.)

In the second user interface, the user chooses a point in three-space with a three-dimensional mouse (such as 3DConnexion’s Spaceball), and clicks the mouse to toggle its on/off state. The work needed to go to a particular point in 3-space is then the combination of 3 movements; each movement is on an axis ranging from 1 to $n$, with (let’s suppose) an average value of $n/2$. This seems as if it would then take work $W = n^5/8$ to create a sculpture (that is, $(n/2)^3$ for each of the $n^2$ surface voxels). However, let’s suppose that after turning on one voxel, the user moves to one of the 26 neighboring voxels. We will say that this takes work proportional to the information needed to specify one choice out of 26, which is $\log_2 26$. We’ll approximate it as $\log_2 27 = \log_2 3^3 = 3\log_2 3$, because the constant 3 in both this and in our previous value for $W$ comes from the three-dimensional nature of the sculpture. The total work is then $W = 3n^2\log_2 3$. This interface looks like an improvement over the first one.

In our third interface, we’ll start the user off with a sphere of clay of about the same volume as the desired sculpture, and the user will use an ordinary 2D mouse to move a cursor around on the surface of the clay, clicking the left button to push the voxel under the cursor down (perpendicular to the surface) and the right button to pull it up. We’ll use an accelerating push/pull interface in which the number of voxels pushed or pulled doubles when the clay is moved in the same direction as the last click, and halves when it is moved in the opposite direction, so that the proper position can be found with a binary search taking time proportional to $\log_2 n$. Suppose again the user only needs to work each point on the surface once. The work needed to move to the next voxel is $2\log_2 3$, because this movement is in 2 dimensions. The total work is then $W = n^2 \times 2\log_2 3 \times \log_2 n = 2n^2\log_2 n\,\log_2 3$. This is worse than the previous UI, even though we’re restricting movement to be on a surface, because of the number of clicks that it takes to push and pull the virtual clay.

In our fourth interface, the user will move around the surface with a 2D mouse, and push and pull points in and out as before. However, the surface of the clay will have tension, so that pushing a point in or out will drag all the neighboring voxels along. The result is that a surface can be sculpted in a way similar to the way you can define a curve using control points and a spline. Then $W = 2c^2\log_2 n\,\log_2 3$, where $c$, the number of control points, is now a function of the irregularity of the sculpture, not of the number of voxels. For very high-resolution modeling, $n \gg c$, and $W = O(\log_2 n)$. This is a vastly superior user interface.
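Plugging numbers into the four estimates makes the comparison easier to see. The Python sketch below evaluates each expression for a sample resolution; it reads the fourth formula as using c squared by analogy with the n squared surface term, and the values n = 256 and c = 16 are arbitrary choices for illustration. Since each W is only an order-of-growth estimate, the absolute numbers mean little; the ranking and the scaling are the point.

```python
from math import log2


def w_typed(n):
    """Interface 1: type three coordinates for every surface voxel."""
    return 3 * n**2 * log2(n)


def w_3d_mouse(n):
    """Interface 2: step to one of ~27 neighbours with a 3D mouse."""
    return 3 * n**2 * log2(3)


def w_push_pull(n):
    """Interface 3: 2D surface cursor plus binary-search push/pull."""
    return 2 * n**2 * log2(n) * log2(3)


def w_tension(n, c):
    """Interface 4: push/pull on c control points (c assumed squared)."""
    return 2 * c**2 * log2(n) * log2(3)


n, c = 256, 16   # arbitrary sample values
for name, w in [("typed coordinates", w_typed(n)),
                ("3D mouse", w_3d_mouse(n)),
                ("push/pull", w_push_pull(n)),
                ("surface tension", w_tension(n, c))]:
    print(f"{name:18s} {w:12.0f}")
```

Doubling n roughly quadruples the first three estimates but adds only one more step of the binary search to the fourth.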

To incorporate cognitive ergonomics into W, you would also count the amount of memory the player needs to remember the meaning of each keyboard shortcut, clickable icon, etc., and incorporate a measure of the work done to convert displayed information into relevant usable information (this is the tricky part). You could also add a term for memory retrieval time. Retrieval time estimates for different types of memories are given in (Anderson 1974); for remembering one of a list of options (say, possible commands for a unit), the time is $K + an$, where $n$ is the number of options and $K$ and $a$ are constants. Cognitive terms may be summed separately if you don’t know how to scale them so as to be comparable with the non-cognitive terms.

It is possible, although usually difficult, to compute how efficient a user interface is relative to its theoretical optimum. Information theory shows how to compute how much information is present in a series of numbers or other symbols, given the current situation, the history, and knowledge of what symbols are likely. If you can estimate the probabilities of all of the possible player moves, you can estimate the information I that is present, according to information theory, in a move. The best your UI can possibly do is for W to be of the same order of computational complexity as I, meaning that O(W) = O(I).

I/W is a measure of the efficiency of your interface; it can be at most O(1). For every variable in the expression for I, it will have the same or a larger exponent in W, so it is easier to think in terms of W/I, which is a measure of how badly you abuse your players. Estimating I is much harder than estimating W; you can often obtain only an upper bound on I. In order for W/I to be meaningful, then, it should be a lower bound.
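For a feel of the quantities involved, the information in a move can be estimated with Shannon's entropy formula once you have probabilities for the possible moves. The Python sketch below uses a made-up distribution over four possible orders for a unit and a made-up cost of nine UI events per order; it compares a measured event count with bits, in the spirit of W/I, rather than comparing orders of growth.

```python
from math import log2


def information_bits(probabilities):
    """Shannon entropy: expected information in one move, in bits."""
    return -sum(p * log2(p) for p in probabilities if p > 0)


def abuse_ratio(work, probabilities):
    """W/I: UI events spent per bit of player intent actually conveyed."""
    info = information_bits(probabilities)
    return float("inf") if info == 0 else work / info


# Hypothetical unit with four possible orders. It nearly always does what
# its neighbours do, so its order is highly predictable.
move_probs = [0.85, 0.05, 0.05, 0.05]
events_per_order = 9          # assumed cost in UI events of issuing one order

print(f"I   = {information_bits(move_probs):.2f} bits")
print(f"W/I = {abuse_ratio(events_per_order, move_probs):.1f} events per bit")
```

If all four orders were equally likely, the move would carry two full bits; the more predictable the move, the less information it contains and the worse a fixed-cost interface looks.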

What you find, in a game with many units, is that for unit-based user interfaces, your lower bound on W grows much faster with the number of units than your upper bound on I does. This is because, most of the time, a lot of the units do pretty much the same thing, and knowing what a small fraction of a player’s units are doing would enable a skilled player to predict with good accuracy what the rest of their units are doing. This is exactly analogous to our user interface for sculpting clay: Most of the points on the surface of the sculpture have a surface tangent almost the same as do the points near them. An interface that requires you to move every unit individually is the equivalent of a sculpting interface that makes you move every voxel. The use of information theory allows you to calculate I, and learn the true dimensionality required of your UI, even when you don’t have a simple way to visualize the connection between the units in play.

To explain how to compute W and I in general would take another article. You can figure it out from a book on probability and information theory. A good primer is the book version of the 1949 paper that defined information theory, The Mathematical Theory of Communication, reprinted in 1998 by the University of Illinois Press. In difficult cases, the information I can be estimated by a combination of theory and statistics gathered during playtesting.

Computers can now animate more units than any player could reasonably want to control, and the number will continue to increase exponentially. This leads to player frustration rather than fun. In a good user interface design, no player should control more than seven game entities. To enable this, the UI may let the player control something more abstract than an on-screen unit. This requires object-oriented developers to think of code objects as abstractions beyond the mere units on the screen. The UI may also give the player a chance to specify behaviors off-line in order to reduce the amount of on-line supervision needed.

Game developers can evaluate their user interfaces using a user-interface profiling tool, and by computing the work involved in different interfaces. They can even estimate their theoretical efficiencies, to know for sure whether there’s room for improvement. The ultimate goal of game design is to increase the game’s FPS - fun per second. The easiest way to do that is to pack the same action into fewer seconds, and the easiest way to do that is usually to improve the user interface.

John R. Anderson (1974). Retrieval of propositional information from long-term memory. Cognitive Psychology 6: 451-474.

Brig. General Huba Wass de Czege & Major Jacob Biever (1998). Optimizing future battle command technologies. Military Review, Mar/Apr.

Melody Ivory & Marti Hearst (2001). The state of the art in automating usability evaluation of user interfaces. ACM Computing Surveys 33(4): 470-516.

Kenneth Meier & John Bohte (2000). Ode to Luther Gulick: Span of control and organizational performance. Administration & Society, 32(2): 115-137.

G. A. Miller (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63(2): 81-97.

Claude Shannon & Warren Weaver (1949). The Mathematical Theory of Communication. Reprinted in 1998 by the University of Illinois Press.
