Naughty Dog combat designer Benson Russell walks us through the decisions made when revamping Uncharted's combat AI for the award-winning sequel, complete with scripting techniques and nitty-gritty details.

[In the latest installment of his series on the combat design of Uncharted 2, Naughty Dog combat designer Benson Russell delves into the techniques the studio devised for its combat AI, and how those evolved from the original game in the series. Part I discusses how the team evolved the combat design from the original Uncharted, and Part II takes a look at combat encounter design.]

I wanted to share some of the nitty gritty technical details of our AI systems to give insight as to how we tackled some of the common AI issues we all face as developers. Most games have some form of AI and regardless of the genre of game, there are fairly common requirements that have to be met. So I think you should be able to relate a lot of this to the systems you're working with, and perhaps spark an idea or two on new things you could try.

Our AI comprises a bunch of very modular systems that handle the different aspects of what we need them to do. In high-level terms:

- There are designer interface components that allow us to define the roles and behaviors for gameplay.

- There are aesthetic components that handle how they look and animate.

- There are decision-making components that pick where to go, how to get there, and what to do along the way and once there.

We try to keep the systems as modular as possible as it allows us to rapidly experiment and prototype with new ideas and gameplay mechanics. It can cause some issues when the systems need to cross-communicate, but the benefits far outweigh the negatives.

For the purposes of this article, I want to stick to what we mostly deal with from a designer's perspective, so this is what I'll be giving the most attention.

The way we define an AI type is by creating a set of default data for all of the various AI systems. It's very open-ended, to allow us the ability to create almost any kind of behaviors we want without having to hardcode them into the engine via programming support (although we can go that route for special cases). We use our custom scripting language as the primary interface for setting these parameters, as well as interfacing with them on the fly during gameplay. Let's take a closer look at how we set up an AI:

So at the highest level of defining these settings we have what is called the Archetype. This forms the starting point for the default set of data to be used when this particular type of AI is spawned in the game. We create the Archetype and then assign it to an AI game object that is placed in a level. For example, here's the Archetype that defines a light pistol soldier from Uncharted 2 (the guys with a light grey uniform wielding a Defender pistol, to be specific):

    (define-ai-archetype light-pistol
      :skills (ai-archetype-skill-mask
                script
                hunting
                cover
                open-combat
                alert
                patrol
                idle
                turret
                cap
                grenade-avoidance
                dodge
                startle
                player-melee-new
              )
      :health 100
      :character-class 'light
      :damage-receiver-class 'light-class
      :weapon-skill-id (id 'pistol-basic-light)
      :targeting-params-id (id 'default)
    )

It's a simple structure that contains all the data needed for an AI to know what their abilities will be. There are more options that can be included than what this example shows, but these are the basic meat and potatoes of what will make an AI tick.

You can see one of the first pieces of data (and probably one of the most important) is the Skills list. This is the heart of our AI in terms of what abilities they can have. It's designed to be modular so skills can be added and removed with simplicity. Here are some descriptions of the skills used in this example:

- Script: This allows the AI to be taken over via our scripting language. It basically turns their brain off and allows them to respond to script commands. We use this in cases where we need the AI to play a particular animation, or we want to order them to move to specific points. This is needed as without it they would still be thinking and trying to act on their own, thus they would end up fighting against the commands issued to them.

- Cover: This gives them the understanding of cover and how to use it (I'll be going into details of our cover system later on). For characters where we don't ever want them to take cover, we can simply remove this skill.

- Open-Combat: This tells the AI how to fight out in the open outside of cover.

- Turret: This allows the AI to use mounted turrets, both in terms of gameplay and technically (i.e. what animations to play).

- Idle: This gives them an understanding of idle behavior (i.e. when there's no enemy in the area).

- Patrol: This allows the AI to understand how to use patrol paths that we lay out in our custom editor called Charter.

- CAP: This stands for Cinematic Action Pack. Action Packs (AP) in general are how we get the AI to perform certain animations for specific circumstances (such as vaulting over, or jumping up a wall). The CAP is a way to have an AI execute a desired performance (like smoking a cigarette) but still have their brain on so they can respond to stimulus. The CAP would define all of the needed animations, as well as transitory animations to get the AI into and out of the different states.

There are many more skills than the ones I went over here, and each is designed as a modular nugget to tell the AI how to react for various gameplay purposes. This makes it very easy to add features to the AI as the programmers can just write a new skill that plugs into the list. We also have the option to dynamically add and remove skills on the fly during gameplay through our scripting language.
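As a rough illustration of how a modular skill list might behave, here is a minimal Python sketch. The `Archetype` class and its methods are hypothetical stand-ins, not the engine's actual structures; only the skill names and the health value come from the light-pistol example above.

```python
from dataclasses import dataclass, field


@dataclass
class Archetype:
    """Hypothetical stand-in for an AI archetype's default data."""
    name: str
    health: int = 100
    skills: set = field(default_factory=set)

    def add_skill(self, skill: str) -> None:
        # Adding a skill gives the AI a new ability at run time.
        self.skills.add(skill)

    def remove_skill(self, skill: str) -> None:
        # discard() is a no-op if the skill was never present.
        self.skills.discard(skill)

    def can(self, skill: str) -> bool:
        return skill in self.skills


light_pistol = Archetype(
    name="light-pistol",
    skills={"script", "hunting", "cover", "open-combat", "alert", "patrol",
            "idle", "turret", "cap", "grenade-avoidance", "dodge", "startle",
            "player-melee-new"},
)

# A character that should never take cover simply loses the skill.
light_pistol.remove_skill("cover")
```

Because each ability is just an entry in a set, removing `cover` leaves every other behavior untouched, which is the property the skill list is meant to provide.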

The Health parameter should be self-explanatory. For Uncharted 2 we specifically switched from the floating point value used in Uncharted 1 to an integer value. The reason is that we were getting some unpredictable behavior out of the floating point system (e.g. checking for a value of 1.0 when it's coming in as 0.999999).

The main end result to the player was that sometimes enemies would take an extra bullet to go down. Also to keep things simple we try to give everything a base of 100 Health (AI and player). There are a few exceptions when we want a really powerful enemy (such as the heavy chain-gunners), but for the most part we want the consistency so it's easy to balance (a little more on this further down).
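The floating point problem is easy to reproduce. The following Python sketch uses made-up numbers (ten hits that each remove a tenth of the health) and double precision rather than whatever the engine used, so treat it as an illustration of the class of bug rather than the exact case we hit.

```python
# Hypothetical numbers: ten hits that each remove a tenth of the health.
HITS = 10

# Floating point version: health normalized to 1.0, damage of 0.1 per hit.
float_damage_taken = 0.0
for _ in range(HITS):
    float_damage_taken += 0.1
float_dead = float_damage_taken >= 1.0   # False: the sum is 0.9999999999999999

# Integer version: 100 health, 10 damage per hit.
int_damage_taken = 0
for _ in range(HITS):
    int_damage_taken += 10
int_dead = int_damage_taken >= 100       # True: the sum is exactly 100
```

In the floating point version the enemy survives the tenth hit and needs an extra bullet, while the integer version behaves as the designer intended.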

The Character Class sets the look of the character in terms of their animation sets. The classes are defined under the hood between the programmers and animators. For Uncharted 2 we had Light, Medium, Heavy, Armored, Shield, Villager, and SLA classes (as an interesting side-note, SLA stands for ShangriLa Army, which were the people mutated by the sap from the Tree Of Life that Drake fought at the end of the game).

The Damage Receiver Class is the main way we balance the game in terms of how much damage the enemies can take from the different player weapons. This is an open-ended system in that the designers have the power to set up everything however they choose without having to rely on the programmers.

We implemented this system in Uncharted 2 because we kept running into issues where we wanted to tune the different weapons against different types of enemies.

Originally for Uncharted 1 (as with most games I've worked on) weapons did a set amount of damage against everything, which you tuned by setting the health of everything. This tends to cause many headaches when it comes to balancing.

For example, if you decide to change the damage of a weapon, it would have a spiraling effect on having to re-balance almost everything because you would have to re-adjust the health of all the enemies, which in turn means changing the damage of other weapons, etc...

It also causes a lot of confusion in that enemies would have seemingly random amounts of health upon inspection (i.e. enemy A has 90 health yet enemy B has 400?!)

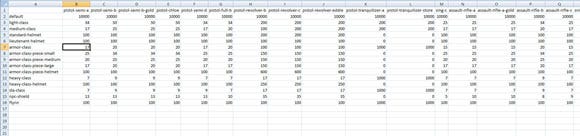

So to solve this, we created the Damage Receiver Class system. It starts with a simple spreadsheet that designers can set up, and then we run a Python script to convert it into our game script. It looks like this:

The columns represent the weapon names (these are under-the-hood names that designers have set up elsewhere), and the rows represent the different Damage Receiver Classes. The intersection of each row and column represents how much damage a particular weapon will do against a particular Damage Receiver Class.

So looking at the spreadsheet for example, "pistol-semi-a" (which is the Defender pistol) will do 34 damage against anything that has a Damage Receiver Class of "light-class," and 17 damage against anything with "medium-class." We can create as many Damage Receiver Classes as we need and assign them accordingly (and not just to AI). This makes balancing the game very straightforward and simple.
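To make the lookup concrete, here is a small Python sketch of reading such a table and working out how many hits an enemy can take. The CSV layout and the function names are assumptions made for illustration (rows are Damage Receiver Classes, columns are weapons, as in the spreadsheet described above); only the two `pistol-semi-a` values are taken from the text.

```python
import csv
import io
import math

# Only the two pistol-semi-a values are quoted in the text; the layout of
# this CSV is an assumption made for illustration.
SHEET = """class,pistol-semi-a
light-class,34
medium-class,17
"""


def load_damage_table(text: str) -> dict:
    """Return {receiver_class: {weapon: damage}} from spreadsheet-style CSV."""
    table = {}
    for row in csv.DictReader(io.StringIO(text)):
        receiver = row.pop("class")
        table[receiver] = {weapon: int(dmg) for weapon, dmg in row.items()}
    return table


def shots_to_kill(table: dict, weapon: str, receiver: str, health: int = 100) -> int:
    """Number of hits needed to deplete `health` for this pairing."""
    return math.ceil(health / table[receiver][weapon])


table = load_damage_table(SHEET)
print(shots_to_kill(table, "pistol-semi-a", "light-class"))   # 3
print(shots_to_kill(table, "pistol-semi-a", "medium-class"))  # 6
```

With everything at a base of 100 health, the table cell alone determines the number of hits, so retuning one weapon against one class does not ripple into any other pairing.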

The Weapon Skill (referenced by weapon-skill-id in the Archetype) is how the AI understand the weapon they've been given and how it's supposed to operate. It's a simple structure that defines how the weapon will behave in terms of accuracy, damage, and firing pattern. We purposefully keep these parameters separate from the player's version so we can customize how effective the enemies will be with any particular weapon. Here's what one would look like:

    (new ai-weapon-skill
      :weapon 'assault-rifle-b
      :type (ai-weapon-anim-type machine-gun)

      ;; damage parms
      :character-shot-damage 5
      :object-shot-damage 120

      ;; rate of fire parms
      :initial-sequence-delay (rangeval 0.0 0.0)
      :num-bursts-per-sequence (rangeval-int 10000 10000)
      :auto-burst-delay (rangeval 0.4 0.8)
      :auto-burst-shot-count (rangeval-int 3 5)
      :single-burst-chance 0.33
      :single-burst-delay (rangeval 0.4 0.8)
      :single-burst-shot-count (rangeval-int 1 3)
      :single-burst-fire-rate (rangeval 0.16 0.20)

      ;; accuracy parms
      :accuracy-curve *accuracy-assault-rifle-upgrade*
      :time-to-accurate-cover 3.5
      :accuracy-cover-best 1.0
      :accuracy-cover-worst 0.0
      :accuracy-running 0.9
      :accuracy-rolling 0.5
      :accuracy-standing 1.0
      :master-accuracy-multiplier 1.0
      :master-accuracy-additive 0.1
    )

At the top there's some key information regarding the visuals. The Weapon parameter references the internal game name of the weapon so it knows what art, FX, and sounds to bring in. The Type parameter sets the animations to be used (either pistol, machine-gun, or shotgun). The Damage section I think is self explanatory, and again we use a base health of 100 for the player.

The Rate Of Fire parameters define how the AI will use the weapon, and it is here that we set up the firing patterns. There are several key issues we have to keep in mind while setting these up. The first is the actual volume of bullets, as that affects how much the player can be damaged. The second is the actual behavior of the weapon (i.e. making sure a fully automatic weapon behaves as such).

The third is how the audio will be perceived in combat. This is an issue that we're constantly at odds with trying to balance. We want the AI to fire the weapon in a realistic manner as to what a normal person would do, but we also don't want every AI in a combat space firing fully automatic weapons without any pauses.

It not only doesn't feel right and breaks the immersion, it just sounds plain horrible! The cacophony of that many weapons going off at once overloads the system and instead of an intense firefight you get distorted noise. It also makes it more difficult for the player to be able to identify what weapon an enemy is using.

The way the Rate Of Fire parameters work is that there's a firing sequence with an optional delay between each sequence (in this case it's not being used). A sequence consists of a number of bursts (in this case set to 10000), and each burst can either be fully automatic (in which case it uses the fire-rate set by the weapon) or a set of single shots with a manually entered fire-rate.

So in the above case each burst has a 33 percent chance of being a set of 1 - 3 single shots, fired at a rate of 0.16 - 0.2 seconds between each shot, with a delay of 0.4 - 0.8 seconds before the next burst fires. Otherwise it will be a fully automatic burst consisting of 3 - 5 bullets, with a delay of 0.4 - 0.8 seconds before the next burst.
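The burst logic described above can be sketched in a few lines of Python. The constants are copied from the `assault-rifle-b` weapon skill; the function name, the returned dictionary, and the assumption that a range value is sampled uniformly are all illustrative choices, not the engine's implementation.

```python
import random

# Values copied from the assault-rifle-b weapon skill above.
SINGLE_BURST_CHANCE = 0.33
SINGLE_BURST_SHOTS = (1, 3)
SINGLE_BURST_FIRE_RATE = (0.16, 0.20)   # seconds between single shots
SINGLE_BURST_DELAY = (0.4, 0.8)         # seconds before the next burst
AUTO_BURST_SHOTS = (3, 5)
AUTO_BURST_DELAY = (0.4, 0.8)


def next_burst(rng: random.Random) -> dict:
    """Roll one burst. Uniform sampling of each range is an assumption."""
    if rng.random() < SINGLE_BURST_CHANCE:
        return {
            "kind": "single",
            "shots": rng.randint(*SINGLE_BURST_SHOTS),
            "shot_interval": rng.uniform(*SINGLE_BURST_FIRE_RATE),
            "delay_after": rng.uniform(*SINGLE_BURST_DELAY),
        }
    return {
        "kind": "auto",
        "shots": rng.randint(*AUTO_BURST_SHOTS),
        "shot_interval": None,  # fully automatic: the weapon's own fire rate
        "delay_after": rng.uniform(*AUTO_BURST_DELAY),
    }


rng = random.Random(2)
bursts = [next_burst(rng) for _ in range(1000)]
```

Over many bursts roughly a third come out as single-shot sets, which is what breaks up the wall of automatic fire both for damage volume and for audio.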

The accuracy curve points to a pre-defined structure that looks like this:

    (define *accuracy-assault-rifle-upgrade*
      (make-ai-accuracy-curve
        ([meters 60.0] [chance-to-hit 0.0])
        ([meters 30.0] [chance-to-hit 0.3])
        ([meters 20.0] [chance-to-hit 0.4])
        ([meters 12.0] [chance-to-hit 0.4])
        ([meters 8.0] [chance-to-hit 0.5])
        ([meters 4.0] [chance-to-hit 0.9])
      )
    )

The way this reads is that it's a set of points on a graph that define the accuracy of the weapon at a specified distance, with a linear ramp between each point. So in this case, from 0 - 4 meters there is a 90 percent chance of hitting, which then falls off to 8 meters where there is a 50 percent chance of hitting, which then falls off to 12 meters where there is a 40 percent chance of hitting, etc... We purposefully set these accuracy curves up separately so we can easily re-use them in any number of weapon skills that we choose.
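Reading a value off this curve is a piecewise-linear interpolation. Here is a minimal Python sketch using the points from `*accuracy-assault-rifle-upgrade*`; the function name is hypothetical, and clamping to the last point beyond 60 meters is an assumption.

```python
# (meters, chance-to-hit) pairs from *accuracy-assault-rifle-upgrade*,
# sorted by increasing distance.
CURVE = [(4.0, 0.9), (8.0, 0.5), (12.0, 0.4), (20.0, 0.4), (30.0, 0.3), (60.0, 0.0)]


def chance_to_hit(distance: float, curve=CURVE) -> float:
    """Piecewise-linear lookup; values are clamped outside the curve's range."""
    if distance <= curve[0][0]:
        return curve[0][1]
    if distance >= curve[-1][0]:
        return curve[-1][1]
    for (d0, c0), (d1, c1) in zip(curve, curve[1:]):
        if d0 <= distance <= d1:
            t = (distance - d0) / (d1 - d0)
            return c0 + t * (c1 - c0)
    raise ValueError("curve must be sorted by distance")
```

For example, a target 6 meters away sits halfway between the 4 meter and 8 meter points, so the chance to hit is halfway between 90 and 50 percent, or 70 percent.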

The rest of the parameters are modifiers that affect accuracy and should be mostly self-explanatory. The one I'm sure you're wondering about is the Time To Accurate Cover section. What this does is allow us to give the player a small window of opportunity when they pop out of cover to take a shot. It will lower the current accuracy of the AI to the percentage listed in Accuracy Cover Worst (in this case 0 percent), and then over the duration set in Time To Accurate Cover (in this case 3.5 seconds) it will ramp the accuracy up to Accuracy Cover Best (in this case 100 percent).

So the net effect for the player in this case is when the player pops out of cover to take a shot they have 3.5 seconds where the AI's accuracy will go from 0 percent back up to whatever it should be based on conditions.
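The ramp can be written as a single scale factor applied to whatever accuracy the AI would otherwise have. This Python sketch uses the three values from the weapon skill above; the function name is hypothetical and the ramp is assumed to be linear.

```python
TIME_TO_ACCURATE_COVER = 3.5   # seconds
ACCURACY_COVER_WORST = 0.0
ACCURACY_COVER_BEST = 1.0


def cover_accuracy_scale(seconds_exposed: float) -> float:
    """Scale applied to the AI's accuracy after the target leaves cover.

    A linear ramp is assumed; only the end points and duration are given.
    """
    t = min(max(seconds_exposed / TIME_TO_ACCURATE_COVER, 0.0), 1.0)
    return ACCURACY_COVER_WORST + t * (ACCURACY_COVER_BEST - ACCURACY_COVER_WORST)
```

Under that assumption, a player who has been out of cover for 1.75 seconds faces enemies at half of their normal accuracy.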

By using these weapon skill definitions, we are able to make the different types and classes of AI more or less challenging. As an example, for Light class soldiers overall we gave them slightly slower fire rates and a longer Time To Accurate Cover to make them easier.

With the Medium class of soldiers we would increase their fire rates, boost their accuracy by 10 percent with the Master Accuracy Additive, and reduce their Time To Accurate Cover to make them tougher. We would also try to tailor the fire rates of different weapons to make them sound a bit more unique so as to be more easily identified by the player (i.e. a FAL would have a different pattern than the AK and the M4).

The AI has a weighting system to determine how to pick the best available target. The weights are set up in a structure in one of our script files. In the game all potential targets are evaluated according to these weights, and the target with the highest weight will be chosen. Here's what the default settings look like, with some comments on the basic parameters and what they mean:

    (new ai-targeting-params
      :min-distance 0.0 ;; minimum distance to look for a target
      :max-distance 200.0 ;; maximum distance to look for a target
      :distance-weight 50.0 ;; weight to apply, scaled according to distance away
      :target-eval-rate 1.0 ;; how often to evaluate targets, in seconds
      :visibility-weight 10.0 ;; weight applied if the target is visible
      :sticky-factor 10.0 ;; weight applied to the existing target
      :player-weight 10.0 ;; weight applied if the target is the player
      :last-shot-me-weight 20.0 ;; weight applied if the target shot me
      :targeting-me-weight 0.0 ;; weight applied if the target is targeting me
      :close-range-distance 11.0 ;; distance within which the close-range bonus applies
      :close-range-bonus 0.0 ;; weight applied if target is within close-range-distance
      :relative-distance-weight 10.0 ;; explained later
      :relative-search-radius 100.0
      :relative-dog-pile-weight -1.0 ;; explained later

      ;; weights for specific vulnerability states, explained later
      :vuln-weight-cover 0.0
      :vuln-weight-peek 0.0
      :vuln-weight-blind-fire-cover 0.0
      :vuln-weight-roll 0.0
      :vuln-weight-run 10.0
      :vuln-weight-aim-move 20.0
      :vuln-weight-standing 20.0

      :in-melee-bonus 0.0 ;; weight applied if target is in melee
      :preferred-target-weight 100.0 ;; explained later
    )

The majority of the AI in the game use this default set of targeting parameters. What these settings translate to in simple terms would be as such:

They will look for targets up to 200 meters out, preferring targets that are closer. They will also prefer targets that they can see (visibility-weight), are shooting at them (last-shot-me-weight), and are the player (player-weight). They will also favor a target that they were already targeting (sticky-factor), and will re-evaluate if they should pick a different target every second (target-eval-rate).

There are a few parameters that we can use to help distribute targeting of multiple enemies. As an example, when a larger battle is going on between multiple factions, we don't want everybody targeting the same guy.

The relative-dog-pile-weight is applied based on how many other AI on the same team are targeting a particular enemy (hence why this is a negative value). The value specified is multiplied per each team member that is currently targeting that enemy for the total weight to be applied (so the more team members targeting that one enemy, the stronger the overall weight).

The relative-distance-weight is used to help prioritize based on distance to the chosen target. It searches for fellow AI team members that are targeting the same target within the specified relative-search-radius, and scales the relative-distance-weight based on who is closest to that target. This helps to solve the problem of AI on the same team picking targets that they are furthest from, yet other team members are closer.

The vulnerability weights are used to help boost targeting based on certain conditions. So in this case, there are weighting bonuses for when the target is running out in the open, aim-moving out in the open (this only affects the player), and standing out in the open.

The preferred-target-weight is a way for designers to specify specific targets. The way it works is we have the option at any time to specify something as a preferred target to any specific AI. This means that when that AI runs a target evaluation, anything marked as a preferred target for that AI will get this extra weight applied as a part of the evaluation.

So as you can see we have a lot of options to influence how the AI will select their targets. We can set up as many sets of targeting parameters as we like and assign them to various archetypes, or even on specific AI placed in levels. Also a lot of these weights can be set to negative values, thus giving us more flexibility based on the context of the scenario.
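To show how a handful of these weights could combine into a target choice, here is a simplified Python sketch. The weight values are from the default parameters above, but the `Candidate` fields, the additive combination, and the linear distance falloff are assumptions made for illustration; the relative-distance and vulnerability weights are left out for brevity.

```python
from dataclasses import dataclass

# Subset of the default ai-targeting-params shown above.
PARAMS = {
    "max-distance": 200.0,
    "distance-weight": 50.0,
    "visibility-weight": 10.0,
    "sticky-factor": 10.0,
    "player-weight": 10.0,
    "last-shot-me-weight": 20.0,
    "relative-dog-pile-weight": -1.0,
    "preferred-target-weight": 100.0,
}


@dataclass
class Candidate:
    name: str
    distance: float
    visible: bool = False
    is_player: bool = False
    is_current_target: bool = False
    last_shot_me: bool = False
    teammates_targeting: int = 0
    preferred: bool = False


def score(c: Candidate, p=PARAMS) -> float:
    """Sum the weights that apply; additive combination is an assumption."""
    if c.distance > p["max-distance"]:
        return float("-inf")  # out of range: never chosen
    # Assumed linear falloff: full distance weight at 0 m, none at max range.
    total = p["distance-weight"] * (1.0 - c.distance / p["max-distance"])
    total += p["visibility-weight"] if c.visible else 0.0
    total += p["player-weight"] if c.is_player else 0.0
    total += p["sticky-factor"] if c.is_current_target else 0.0
    total += p["last-shot-me-weight"] if c.last_shot_me else 0.0
    total += p["relative-dog-pile-weight"] * c.teammates_targeting
    total += p["preferred-target-weight"] if c.preferred else 0.0
    return total


def pick_target(candidates):
    """The candidate with the highest total weight wins."""
    return max(candidates, key=score)


player = Candidate("player", distance=40.0, visible=True, is_player=True,
                   teammates_targeting=4)
ally = Candidate("ally", distance=20.0, visible=True, last_shot_me=True)
```

In this made-up situation the player scores 56 and the ally who just shot the AI scores 75, so the ally is chosen. Marking the player as a preferred target adds 100 and flips the decision, which is the kind of designer override the preferred-target-weight provides.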

And all of that makes up an AI archetype! There are other parameters that aren't covered in this article (such as testing- or prototype-specific ones), but these are the bulk of what's used. By creating combinations of these parameters we can create AI types that behave differently as well as rapidly prototype new ideas. Most of the power is also in the designer's hands and only requires programmer intervention when we need a new skill or specific behavior (which is also quick to prototype thanks to the skill list). So let's move on to how we set up the remaining parts.

When it comes to setting the look of the AI (both visually and with animation), again we have a great amount of flexibility. For the visuals, we have a parts based "looks" system that allows us to compartmentalize the characters however we wish.

For example with the light and medium class enemies we had a basic body (without hands or head), various heads (with hands included to keep the skin-tone matched), and a collection of gear we could attach however we wished. In a script file we would define a "look" as a specific collection of these items and then assign them to an archetype.

Also you can create a collection, and then attach that entire collection to another collection. We used this feature extensively to create head varieties that we could swap out randomly. So as an example, we had several classes of guys that had specific gear attached to the head (like a specific type of hat or mask). We would create several collections just for the heads with their gear, and then at run time when the AI spawns it would pick one of these collections at random to use for the head.
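A nested collection with a random pick at spawn time could look something like the following Python sketch. Every part name here is invented, and representing "pick one of these collections" as a list of lists is purely an illustrative encoding, not how the looks script is actually written.

```python
import random

# Hypothetical part and collection names, for illustration only.
HEAD_VARIANTS = [
    ["head-a", "beanie"],
    ["head-b", "gas-mask"],
    ["head-c", "cap"],
]
LIGHT_SOLDIER_LOOK = ["body-light", "holster", HEAD_VARIANTS]


def resolve_look(look, rng):
    """Flatten a look into parts. A list of lists is treated as 'pick one'."""
    parts = []
    for item in look:
        if isinstance(item, str):
            parts.append(item)
        else:
            # Nested group of collections: choose one at spawn time.
            parts.extend(resolve_look(rng.choice(item), rng))
    return parts


spawned_parts = resolve_look(LIGHT_SOLDIER_LOOK, random.Random(0))
```

Each spawn keeps the shared body and gear but may come out with a different head collection, which is how a small set of parts yields visible variety.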

For animations we have a system that allows us to remap any animation at any time so we can mix and match whatever we need. What this means is that when the AI system calls for certain actions under the hood, we can map any action to any animation we choose.

This is very powerful and flexible in that you don't have to create a whole animation set for each character. Instead you can just remap specific actions to new animations.

As an example, in Uncharted 2 we had differences between light and medium soldiers in how they would fire from cover in that the light guys would expose themselves more, and medium guys would not. We just used a remap so that when the AI system issued a command to fire from cover, it would use different animations for light and medium soldiers.

Other examples of where we used this system are the sequence of escorting the wounded cameraman Jeff through the alleyways, and when Drake was walking around holding a flaming torch in the old temple in the swamps.
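The remapping idea boils down to a lookup with a fallback. In this Python sketch the action and animation names are invented for illustration; only the light versus medium fire-from-cover distinction comes from the example above.

```python
# Hypothetical action and animation names.
DEFAULT_ANIMS = {
    "fire-from-cover": "cover-fire-default",
    "reload": "reload-default",
}

REMAPS = {
    "light": {"fire-from-cover": "cover-fire-exposed"},
    "medium": {"fire-from-cover": "cover-fire-tight"},
}


def animation_for(action: str, character_class: str) -> str:
    """Use the class-specific remap if one exists, else the shared default."""
    return REMAPS.get(character_class, {}).get(action, DEFAULT_ANIMS[action])
```

Only the actions that differ need an entry in the remap table; everything else falls through to the shared set, so a new character variant does not require a full animation set of its own.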

So let's take a look at how the decision-making processes work and how we as designers interact with them to get the behaviors we want.

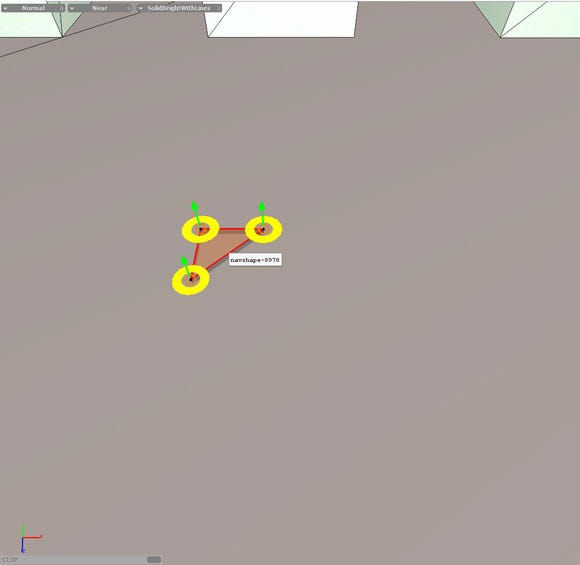

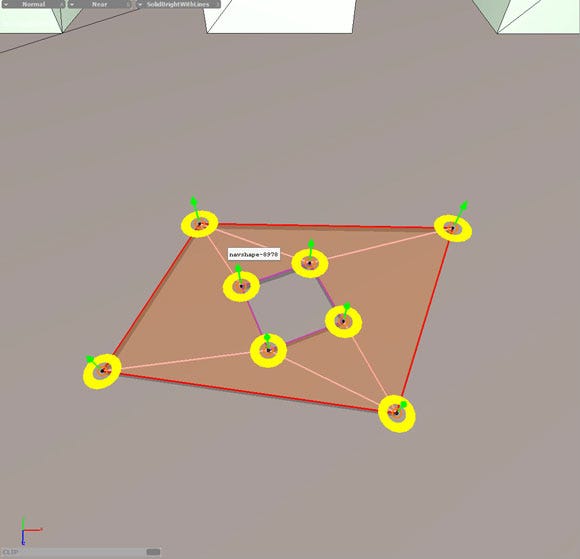

The AI has to understand the environment in order for them to move around and do cool stuff. To do this, we create what we call a navigation mesh (navmesh for short) which defines the boundaries of the world for the AI. It's a polygonal mesh created in Charter using a special set of tools to make it fast and intuitive.

[You can find out more about Charter in the previous article.]

This is the start of a basic navmesh when we initially place one inside of Charter. We have a special editing mode for navmeshes where you see the yellow discs on each of the vertices.

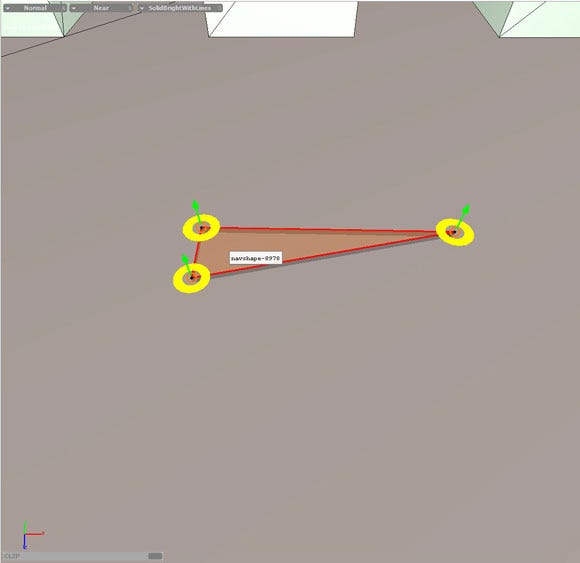

These discs allow us to just drag a vertex around in the horizontal plane while maintaining its height relationship to the ground. The green arrow on each vertex allows us to move it vertically if we need to adjust the height.

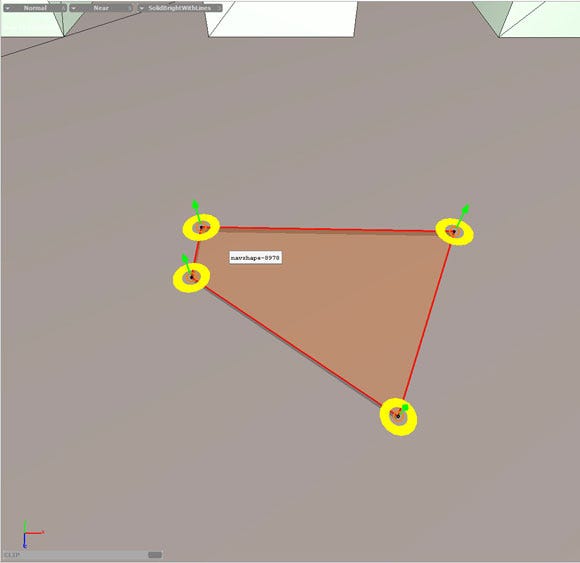

We can create new vertices by clicking on the red lines that connect the existing vertices. We can also delete a vertex by control clicking in the orange circle at the center of the yellow disc.

We can cut out a hole by control clicking and dragging in the middle of the navmesh. The shape of the hole can then be modified by dragging its vertices, and adding or removing vertices as normal.

Using these tools we can create and manipulate navmeshes to conform to the area that we want the AI to navigate. We can also link multiple navmeshes together by snapping their vertices to one another, and when it compiles it will combine them all into a single navmesh.

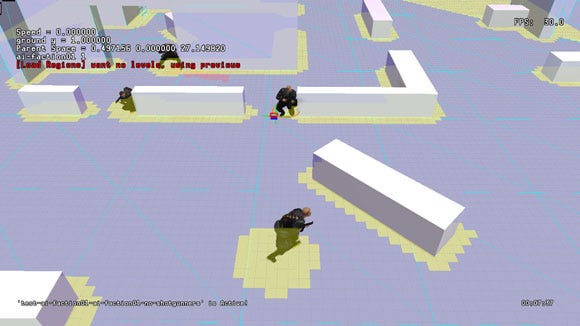

While the navmesh defines the boundaries of the navigable space, the AI rely on a second system that works in conjunction with this called the navmap. The navmap is a 2D grid projection on the horizontal axis around the AI. It takes into account the navmesh as well as dynamic objects.

In this image you can see the navmap of the selected AI (the one near the center with the circle around him). You can see there's a grid overlaid onto the world, and that some of the grid squares are colored in yellow. The yellow represents space that is non-navigable. It uses the navmesh to find the world boundaries (such as the area around the physical structures), plus the navblockers of dynamic objects such as other AI.

A navblocker is something the navmap specifically reads in and can be dynamically altered. It's usually derived from the collision boundaries of objects, but can also be placed manually in Charter, as well as generated with specific settings in code (such as with other AI). This gives designers the flexibility to dynamically block off areas for gameplay purposes. Also by using this kind of system we save on computational expenses as the AI does not have to continually probe the environment for dynamic obstacles.
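As a simplified picture of what a navmap holds, the Python sketch below builds a small grid around an AI and marks each cell as blocked if it is off the navmesh or inside a navblocker. The function, the circular blockers, and the `on_navmesh` callback are all assumptions for illustration, not the engine's data structures.

```python
import math


def build_navmap(center, half_cells, cell_size, on_navmesh, blockers):
    """Return a square grid of booleans; True means the cell is blocked.

    center:     (x, z) world position of the AI
    on_navmesh: callable (x, z) -> bool, a stand-in for a navmesh query
    blockers:   list of (x, z, radius) circles, a simplification of navblockers
    """
    size = 2 * half_cells + 1
    grid = [[False] * size for _ in range(size)]
    for row in range(size):
        for col in range(size):
            x = center[0] + (col - half_cells) * cell_size
            z = center[1] + (row - half_cells) * cell_size
            blocked = not on_navmesh(x, z)
            for bx, bz, radius in blockers:
                if math.hypot(x - bx, z - bz) <= radius:
                    blocked = True
            grid[row][col] = blocked
    return grid


def walkable(x, z):
    # A 10 m x 10 m walkable square centered on the origin.
    return abs(x) <= 5.0 and abs(z) <= 5.0


# One other character standing 2 m to the side acts as a navblocker.
navmap = build_navmap(center=(0.0, 0.0), half_cells=6, cell_size=1.0,
                      on_navmesh=walkable, blockers=[(2.0, 0.0, 0.5)])
```

Once the grid is filled in, path queries only need to read cells rather than probe the world for obstacles, which is where the computational saving comes from.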

This system handles most of the heavy lifting of allowing the AI to properly navigate through the environment, but what to do when you want the AI to be able to follow the player over more complex landscapes? For this we have what are called Traversal Action Packs (TAPs). We create animations that meet the demands of the environment (i.e. scaling walls, jumping over gaps, climbing ladders, etc...), which we then plug into the TAPs system and place them in Charter. The AI can then use these to navigate from navmesh to navmesh, being able to better follow the player.

While the navmesh and navmap give the AI an understanding of the environment, they need a way to evaluate the space and pick specific locations that are best suited for the situation. For this we use our Combat Behavior system. This is a weighted influence map of positions where the best rated point is chosen, evaluated at a rate of once per second per AI. The overall set of points that the system considers is based on a collection of several things:

- A fixed grid of points that applies itself over the world and is filtered by the navmesh

- Cover points

- Zone markers place a point at their center (I'll cover these a bit later)

How the weighting of these points is determined is based on parameters we set up in a script. Here's an example of one from Uncharted 2 with comments on what the parameters do:

    (new ai-combat-behavior
      :dist-target-attract 15.0 ;; stay this close to your target
      :dist-enemy-repel 5.0 ;; do not get closer than this to your target
      :dist-friend-repel 2.0 ;; do not get this close to your friends
      :cover-weight 10.0 ;; prefer points that are cover
      :cover-move-range 15.0 ;; how far you'll move to get to cover
      :cover-target-exclude-radius 8.0 ;; ignore covers this close to your target
      :cover-sticky-factor 1.0 ;; prefer a cover you're already in
      :flank-target-front 0.0 ;; prefer to be in front of your target
      :flank-target-side 0.0 ;; prefer to be on the side of your target
      :flank-target-rear 0.0 ;; prefer to be at the rear of your target
      :target-visibility-weight 5.0 ;; prefer points that can see your target
    )

While these are not all of the values at our disposal, they're some of the most used. This is a trimmed down example of our mid range Combat Behavior. To break this down, the AI using this will want to stay between 5 and 15 meters away from their target, while trying to stay 2 meters away from their friends. They prefer to be in cover and will move up to 15 meters to get to cover, but they do not want to pick a cover spot that's within 8 meters from their target. They will also prefer a cover they've already been in, as well as picking points that can see their target.
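The breakdown above can be turned into a small point-rating sketch in Python. The parameter values come from the mid-range behavior, but the point representation is invented, and treating the distance limits as hard rejections (rather than soft influences) is a simplifying assumption.

```python
import math

# Values from the trimmed mid-range ai-combat-behavior above.
B = {
    "dist-target-attract": 15.0,
    "dist-enemy-repel": 5.0,
    "dist-friend-repel": 2.0,
    "cover-weight": 10.0,
    "cover-move-range": 15.0,
    "cover-target-exclude-radius": 8.0,
    "cover-sticky-factor": 1.0,
    "target-visibility-weight": 5.0,
}


def rate_point(point, me, target, friends, b=B):
    """Score one candidate point, or return None if it is rejected.

    `point` is a dict with 'pos', 'is_cover', 'sees_target' and
    'is_current_cover' (a hypothetical layout).
    """
    to_target = math.dist(point["pos"], target)
    if not b["dist-enemy-repel"] <= to_target <= b["dist-target-attract"]:
        return None
    if any(math.dist(point["pos"], f) < b["dist-friend-repel"] for f in friends):
        return None
    score = 0.0
    if point["is_cover"]:
        if to_target < b["cover-target-exclude-radius"]:
            return None
        if math.dist(point["pos"], me) > b["cover-move-range"]:
            return None
        score += b["cover-weight"]
        if point["is_current_cover"]:
            score += b["cover-sticky-factor"]
    if point["sees_target"]:
        score += b["target-visibility-weight"]
    return score


def best_point(points, me, target, friends):
    """Return the highest rated point that was not rejected, if any."""
    rated = [(rate_point(p, me, target, friends), p) for p in points]
    rated = [(s, p) for s, p in rated if s is not None]
    return max(rated, key=lambda sp: sp[0])[1] if rated else None


me, target, friends = (0.0, 12.0), (0.0, 0.0), [(10.0, 10.0)]
points = [
    {"pos": (0.0, 10.0), "is_cover": False, "sees_target": True, "is_current_cover": False},
    {"pos": (3.0, 9.0), "is_cover": True, "sees_target": True, "is_current_cover": False},
    {"pos": (0.0, 6.0), "is_cover": True, "sees_target": True, "is_current_cover": False},
]
chosen = best_point(points, me, target, friends)
```

Here the cover at (3, 9) wins with a score of 15: the open point only earns the visibility weight, and the cover at (0, 6) is thrown out for being within 8 meters of the target.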

Combat Behaviors allow us a lot of flexibility in how we want the AI to behave. By creating a library and switching them as needed we can account for most any type of scenario we need to set up. They're also very handy when we have large combat spaces where the player can take multiple routes as they're not reliant on the player taking a linear path since they're always evaluating the situation and picking the best spot according to the set parameters. But there are some downsides to be aware of that we've had to solve.

Because this is a fuzzy system, errant readings can occur that you have to be careful to figure out. For example, based on conditions the system might pick a different point for one evaluation, and then go back to the previous point the next evaluation. Some filtering has to be done to get the AI to respond accordingly else they can end up bouncing around needlessly. There're some additional parameters we can put into the behavior to help increase the stability and help reject this kind of behavior.
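One common way to damp this kind of flip-flopping is hysteresis: only switch when the new point beats the current one by some margin. The Python sketch below shows the idea; the function and its `margin` value are hypothetical and are not the actual stability parameters in the behavior.

```python
def choose_with_hysteresis(current, current_score, best, best_score, margin=2.0):
    """Keep the current point unless the challenger wins by `margin`.

    `margin` is a hypothetical tuning value, not an engine parameter.
    """
    if current is None:
        return best
    if best_score - current_score > margin:
        return best
    return current
```

With a margin of 2, a point rated 11 will not pull the AI away from a point rated 10, but one rated 13 will.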

Another issue that's very important to keep in mind is that the path finding system is completely separate from the Combat Behavior system. The behavior only picks the destination, while the path finding figures out how to get there.

For example, if the behavior has an enemy repel distance of 5 meters, the AI could still travel within 5 meters of their target to get to their location. Also the system could potentially pick points that require an outlandish path to get to (i.e. the point is just on the opposite side of a wall that requires the AI to have to run a long, winding distance to get to).

While we've come up with some methods to solve these kinds of cases, it's still an issue we try to make better. This is an example of a downside of having separate, discrete systems where we have to make them share data better.

Another tool we have at our disposal that works in conjunction with the Combat Behaviors are zones. A Zone is specified by a specific point in the level called a Zone Marker, and it contains a radius and height value to define the boundaries of the zone. Any AI that has a zone set will only consider Combat Behavior positions that land within the zone. Zones can be set dynamically at any time, including being set onto objects (such as the player). This allows us to focus the AI to specific locations and objects as needed based on combat conditions.
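Restricting candidates to a zone is a simple containment test. This Python sketch assumes the zone is a vertical cylinder whose base sits at the Zone Marker, with y as the up axis; how the height is actually measured relative to the marker is an assumption, as are the function names.

```python
import math


def in_zone(pos, marker, radius, height):
    """True if `pos` lies inside the zone around `marker`.

    Positions are (x, y, z) with y up. The zone is assumed to be a cylinder
    whose base sits at the marker.
    """
    dx, dz = pos[0] - marker[0], pos[2] - marker[2]
    within_radius = math.hypot(dx, dz) <= radius
    within_height = 0.0 <= pos[1] - marker[1] <= height
    return within_radius and within_height


def filter_points(points, marker, radius, height):
    """Keep only the candidate positions that land inside the zone."""
    return [p for p in points if in_zone(p, marker, radius, height)]
```

Because the marker is just a position, passing the player's current position as `marker` each evaluation gives a zone that follows the player around.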

The last system the AI use to formulate their decisions is their sensory systems (vision and hearing). One of the things we wanted to change from Uncharted 1 was to keep the AI from always knowing where their target is. We needed this to introduce the new stealth gameplay mechanics, as well as to make the AI seem less omniscient during combat.

The vision system we came up with is based on a set of cones and timers. There's an outer peripheral cone and an inner direct cone. Each cone is defined by four parameters: the vertical angle, the horizontal angle, the range, and the acquire time.

The angle values determine the shape of the cone, while the range determines how far out it extends (beyond which the AI cannot see). The acquire time determines how long an enemy has to be within the vision cone to trip its timer. This value is also scaled along the range of the cone, so the outermost edge of the range will be the full value, and it linearly becomes shorter the closer it gets.

The two cones and their timers work together when it comes to spotting their targets. When an enemy enters the peripheral cone, the timer starts to count down (and remember this is scaled based on distance). If the timer expires, this signals the AI that they think they might have seen something. They then turn towards the disturbance and try to get the location of the disturbance within their direct vision cone. If the enemy target enters the direct cone, its timer will start to count down. If the direct cone's timer expires, then this identifies the target to the AI.
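The two-stage acquisition can be sketched as a pair of timers. In this Python sketch the angle tests are reduced to an `in_cone` flag, the timer counts up rather than down, the numbers are made up, and resetting the timer when the target leaves the cone is an assumption; only the distance scaling and the peripheral-then-direct order follow the description above.

```python
from dataclasses import dataclass


@dataclass
class Cone:
    range_m: float        # how far the cone extends
    acquire_time: float   # seconds needed at the outer edge of the range
    timer: float = 0.0    # time the target has spent inside the cone

    def scaled_acquire_time(self, distance: float) -> float:
        # Full value at the edge of the range, linearly shorter when closer.
        return self.acquire_time * min(distance / self.range_m, 1.0)

    def update(self, in_cone: bool, distance: float, dt: float) -> bool:
        """Advance the timer; return True once the cone has tripped."""
        if not in_cone or distance > self.range_m:
            self.timer = 0.0   # assumed: leaving the cone resets the timer
            return False
        self.timer += dt
        return self.timer >= self.scaled_acquire_time(distance)


# Hypothetical numbers, not the shipped tuning.
peripheral = Cone(range_m=40.0, acquire_time=2.0)
direct = Cone(range_m=40.0, acquire_time=1.0)

state = "unaware"
for _ in range(40):  # simulate 4 seconds at 10 Hz, target 20 m away
    if state == "unaware" and peripheral.update(True, 20.0, 0.1):
        state = "suspicious"   # turn toward the disturbance
    elif state == "suspicious" and direct.update(True, 20.0, 0.1):
        state = "identified"
```

At half the cone's range the acquire times are halved, so in this run the AI becomes suspicious after about a second and identifies the target roughly half a second later. A target that slips out of the cone before either timer trips is never acquired.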

This system allows the AI the ability to lose sight of their contacts and allows the player the ability to be more strategic when fighting them. It also adds to the feeling of them behaving more naturally like a human would. A great example of this is when the player enters a long piece of low cover at one end when the AI is attacking them.

While in cover the player then travels to the opposite end so they're not seen while doing this. The AI will still be shooting at where they last saw the player, hence allowing the player some extra time to get their shots off when they pop out of cover as the AI will have to re-acquire and turn towards them.

A single vision definition for the AI comprises 3 sets of cones, with each set being used for different contextual circumstances during gameplay. The first set is used for ambient situations, the second set is for a special condition called preoccupied, and the third set is used during combat.

Ambient situations are when the AI has no knowledge of an enemy in the area. This is primarily the state the AI is in when starting a stealth encounter. Ambient is set up to have a smaller direct cone and longer acquire times to allow the player the ability to sneak around the environment. Preoccupied is a special case that can be used during Ambient states.

The idea is that if the AI is doing something that they would be focusing intently on, then they're not going to be paying as much attention to what's going on around them. These are set up to be pretty short in distance and long in acquire times. Combat is the set used when the AI is engaged with their targets. It is set up so that the acquire times are much shorter, and the direct cone is much larger to account for a heightened awareness.

So there's a very in-depth technical look at how we use a bunch of our AI systems, which is the end of this installment. The next installment will be dealing with gameplay techniques and philosophies we used, as well as some lessons learned. So until next time (which hopefully won't be as long!)
