How do you stop racing game AI seeming unfair, but heighten competition? Black Rock's Jimenez goes in-depth to reveal the company's AI tactics for the critically acclaimed Pure.

This article offers an alternative rubber band method to balance the AI behavior in racing games. It presents the concept in a chronological manner, demonstrating its evolution throughout the development of Pure, our recently released trick-based racing game.

Initially we will cover the three main systems behind the concept: skills, dynamic competition balancing and the "race script". We then move onto explaining its implementation in Pure and how we used the previously mentioned toolset to try and give the player the desired experience. Finally we will offer conclusions and suggest possible alternative uses for the system.

When developing the AI in Pure, we wanted to create a system which always provided convincing, fair and interesting races for the player. We wanted to challenge the player by spreading out the field, while keeping rivals close but not punishing them too much for making mistakes. Races in video games need to be managed well to make them exciting; otherwise the player will almost always stay ahead or fall behind the pack and stay there.

Rubber banding is a system that tries to maintain the tension and thrill of the race by keeping the AI characters around the player. It does so by drastically reducing the velocity, cornering skills, obstacle avoidance, etc., of the AI characters in front of the player, and increasing just as drastically the skills of the ones behind.

Rubber band methods usually rely predominantly on speed changes, and so are often criticized because it's obvious when AI riders are going superhumanly fast or brain-dead slow.

This method is very effective, as it keeps players surrounded for the whole race. It has an important downside: it's not fair, and that unfairness is easy to spot. It can easily break the illusion of fairness in the race. No matter how well a player does during the first 75% of the race, everything is decided by how they perform at the end. A single mistake in the last section can cost the player the whole race.

On the other hand, no matter how many mistakes the player makes at the beginning, there is still a chance of winning the race. The result: players can get frustrated and feel the competition is not fair. Given all this, we rejected rubber banding.

When we originally started the project we wanted to base the performance of the AI mainly on the concept of skill. Every AI character's performance was originally based exclusively on a unique set of skills. Different aspects of the behavior of the AI will do better or worse depending on the associated skill for each ability.

For instance, the "tricks performance" skill governs how well the character performs tricks, while how often he fails them is dictated by what we called the "jump effectiveness" skill.

The skills are represented as a real number within the range [0..1], where 0 is the worst the character can perform in the associated category and 1 is the best.

Besides performance, skills can also be used to represent personality. For instance, the character's aggressiveness (controlling how much a character will try to take you off the track) or the probability for him to oversteer/understeer the corners. Thus, you can have skills that won't noticeably modify the performance of the AI character but nonetheless change its behavior. In this article, we will only discuss the skills that affect performance.

We initially thought the game difficulty could be balanced by determining a skill range for the AI. For instance, we thought a range of [0.4..0.6] would be fine for normal difficulty, with the best character in the race having 0.6 in all his skills and the worst character 0.4. This approach presented some problems:

The difficulty level was usually either too hard or too easy. It was very difficult to exactly match the player's skill, and therefore the game always seemed to have the wrong difficulty setting.

Lonely racing. This is a side effect of the previous point: since the difficulty level was wrong, the player spent way too much time racing alone. This is not fun.

We soon realized this was far too inflexible and put us even further from achieving a fair but challenging race. Therefore we decided to call these values "initial skills" and have another set that would be the actual skills to be applied, which would be based on the initial ones.

These new sets of "actual skills" (we will refer to them simply as "skills" from now on) were calculated by adding an offset (either positive or negative) to the initial skills. This offset depends on the state of the race. We called this system of modifying the skills dynamically during the race "Dynamic Competition Balancing" (we will refer to it as DCB in this article).

You could argue that this first iteration of the DCB was merely a rubber band method with some variations. We used a different name anyway because of the bad reputation of the phrase "rubber banding". We tried to (internally) avoid the prejudices associated with that term.

We initially based the DCB values on the leaderboard position of the character. Every AI character was aiming to be in the position of their index -- that is, player 0 (human player) gets the first position, player 1 the second, etc.

This system alleviated the original problems a bit, but was far from solving them. It was still too unresponsive and it didn't work; the AI characters finished in a random order. Most importantly, the human player was still racing alone a lot of the time.

We also wanted the characters to form three groups that would be distributed over the race so we decided to add a "group" concept to the DCB. That meant that we had "group leaders" that were the ones trying to finish in a fixed position in the leaderboard and the rest of their group was trying to beat the next group's leader.

We ended up with a DCB system that was far too complex and didn't work very well either. The groups usually weren't compact and, more often than not, the leader and the group were distributed randomly in the leaderboard.

We could have gone on with this concept and perhaps achieved acceptable results. Instead we came up with a different and innovative concept, explained later on, that worked really well.

There were some issues with the skills that appeared when we implemented the DCB.

The first thing that came up was what to do when a skill goes out of the range [0..1]. We had defined that the skill to be applied must be in the range [0..1], but the result sometimes fell outside that range after applying the DCB modifier to the initial value.

To solve this, we just clamped it to the valid range, because values outside it make no sense. We really couldn't have the AI performing at -20% or at 110%; if we could make them perform better or worse, we would simply reassign both ends of the range to match the best and worst performance we could (and wanted to) allow.
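As a minimal sketch of the rule described above (in Python, chosen only for illustration; the function and parameter names are hypothetical and not taken from Pure's code), the applied skill is the initial skill plus the DCB offset, clamped to the valid range:

```python
def apply_dcb(initial_skill: float, dcb_offset: float) -> float:
    """Return the skill actually applied to the AI character.

    The DCB offset may be positive or negative; the result is clamped
    to [0, 1] because values outside that range have no meaning.
    """
    return max(0.0, min(1.0, initial_skill + dcb_offset))


print(apply_dcb(0.5, 0.2))   # inside the range, offset applied as-is
print(apply_dcb(0.9, 0.2))   # would be 1.1, clamped to the top of the range
print(apply_dcb(0.1, -0.3))  # would be -0.2, clamped to the bottom
```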

We found out that an important part of the problem we had balancing the AI and trying to keep them close to the player had to do with the skills themselves and the way they were implemented. We ended up identifying properties the skills had to have to be suitable for the job:

Broad Range. We needed the skills to determine the AI character's behavior, so we had to allow a lot of variation in every skill -- so the 0 value truly means the AI will behave incredibly badly and the 1 value means the AI will behave very well indeed. If the range of the skill is not wide enough then we will find ourselves without enough coverage to match the variety of players' skill levels.

Responsiveness. It's not enough to have a wide skill representation if it's distributed in big discrete steps. For instance, if the tricks skill is distributed so that any value under 0.5 means that the AI character will fail every trick and every value over 0.5 means he will successfully land every trick, you may not have enough dynamic range in the skill.

The 0 skill character will perform very badly and a 1 skill character will perform as well as possible. But if a character has skill 0.3, his performance won't vary when upgrading to 0.4 or 0.45, nor will it change when reducing to 0.2 or 0.1. This makes the skill quite useless since it's not responsive enough to changes.

Since most changes will be small (probably below 0.2) we need a very responsive skill representation, continuous if possible. If the steps are discrete there should be plenty of them and they should be evenly distributed.

The progression should also be linear. It's not very useful if 90% of the behavior change correlates to a 10% change in value. The behavior changes should match the value changes as much as possible.
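To illustrate the difference between an unresponsive and a responsive skill, here is a small sketch comparing the step-like tricks skill from the example above with a linear mapping. The `worst` and `best` success probabilities are assumed values for illustration only, not figures from Pure.

```python
def trick_success_step(skill: float) -> float:
    """Unresponsive mapping: every trick fails below 0.5, every trick lands above."""
    return 1.0 if skill >= 0.5 else 0.0


def trick_success_linear(skill: float, worst: float = 0.2, best: float = 0.95) -> float:
    """Responsive mapping: success probability grows linearly with skill.

    `worst` and `best` are hypothetical probabilities at skill 0 and skill 1.
    """
    skill = max(0.0, min(1.0, skill))
    return worst + (best - worst) * skill


for skill in (0.3, 0.4, 0.45):
    print(skill, trick_success_step(skill), round(trick_success_linear(skill), 3))
```

With the step mapping, moving from 0.3 to 0.45 changes nothing, while the linear mapping changes by the same amount for every equal change in skill.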

The AI still wasn't performing as well as you would expect for a next-gen AAA racing game, not to mention how well we wanted it to perform. It wasn't challenging, it wasn't fun, it wasn't respecting the groups we tried to keep together, and you still had this lonely racing sensation during most of the race.

Definition

We had a meeting to brainstorm around this subject, and that is where the "race script" concept came up. It was proposed that the designers write a "script" for how they wanted the race to develop. It had to cover all possible scenarios, such as when the player's skill is greater than the best AI, when it is lower than the worst AI, and at specific points somewhere in between.

In other words, races don't always follow the ideal script, as they depend on the player's performance. The script forced the designers to think about that fact and how the game should behave on those occasions. The script is only a guideline, then, not a strict, linear sequence to be followed.

An example of what this script would look like could be:

If the player is as skilled as the top AI characters, he will be at the front of the back group of AI competitors soon after the start of the race. Then he will climb positions throughout the first 70-80% of the race. When he is progressing to the next group, some AI characters will jump with him to try to avoid him feeling alone in the race. He should be fighting for the first position for the last 20-30% of the race.

The riders in the first group will make it a little easier for the human player to get into first place by being more forgiving of mistakes, especially in the very last part of the race. This way it will seem like he has worked his way through the race, and even if he makes a mistake in the last part he won't be punished too much for it. This is the main script for the race.

How could a typical race scenario really play out, based on the player's skill level, without a race script?

If the player is more skilled than the AI, the player will get in the first position soon after the start of the race and will keep adding distance to the AI every lap.

If the player is less skilled than the AI, the player will start and will very soon end up in last place, staying there for the rest of the race while the AI will be adding distance every lap.

If the player is more skilled than the lesser AI characters, but not as good as the ones in the first group (minus the maximum skill reduction), he will spend the race progressing only to the second group or just struggling against the AI characters in the last group.

Using this, the programmer can focus on the standard case: the ideal situation. In the previous example, this would be the first point.

Implementation

We then had to find a way to implement the script. We came up with a method that worked very well in our case: we decided to have every AI character aiming for a point a number of meters ahead or behind the human player. If aiming ahead, this value will be positive; if behind, it will be negative.

Then, each AI character's skills were set up to change dynamically depending on the AI character's distance to their assigned point, not in relation to the player (although this target point depends directly on the player's position).

Thus, an AI character could be shifting up its skills to try to get to its target point, even though it is still ahead of the human player. The same could happen the other way around: the AI character lowering his skills to get to the point even though he is already behind the player.

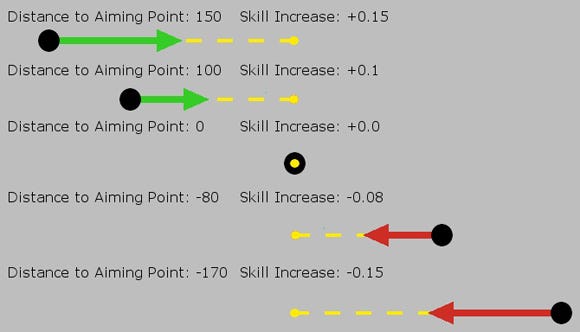

The previous image shows how the skills shift according to the distance to the target point. The skill increase is proportional to the distance to the target point. There's a maximum distance (150 meters in the figure) after which the skill increase gets limited (both positively and negatively).
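The relationship described above can be sketched as follows. This is an illustrative Python sketch, not Pure's implementation: the function names and the `max_offset` parameter are assumptions, and only the 150-meter limit comes from the figure.

```python
def distance_to_target(ai_pos_m: float, player_pos_m: float, target_offset_m: float) -> float:
    """Signed distance from the AI character to its target point.

    The target point sits target_offset_m ahead of (positive) or behind
    (negative) the player. A positive result means the AI is behind its
    target and should raise its skills.
    """
    return (player_pos_m + target_offset_m) - ai_pos_m


def skill_offset(distance_m: float, max_offset: float, max_distance_m: float = 150.0) -> float:
    """Skill offset proportional to the distance, limited beyond max_distance_m."""
    ratio = max(-1.0, min(1.0, distance_m / max_distance_m))
    return max_offset * ratio


# An AI rider 100 m ahead of the player but aiming 250 m ahead of him is
# still 150 m short of its target, so it raises its skills even though
# it is already in front.
gap = distance_to_target(ai_pos_m=1100.0, player_pos_m=1000.0, target_offset_m=250.0)
print(gap, skill_offset(gap, max_offset=0.2))
```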

Since we are aiming at a point relative to the human player, we realized we could dynamically modify this point during the race. This is a key concept, as it allowed us far more control over the behavior of the AI.

Since now we are aiming at a point in the track relative to the player we can modify this point to simulate the ideal script we had written. The best way to illustrate this is using an example:

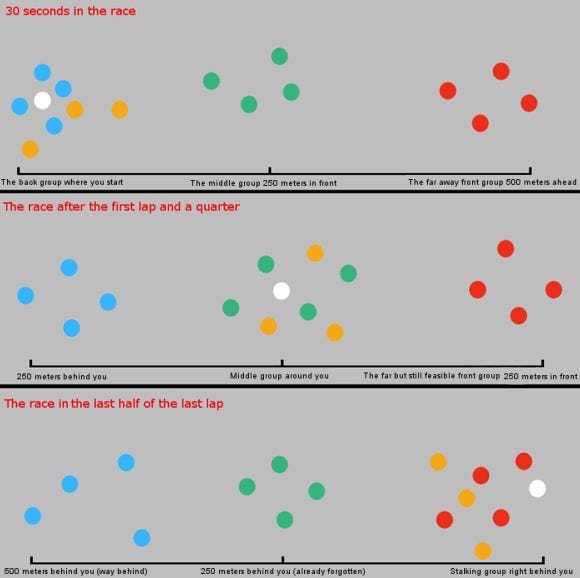

We had three groups of AI characters corresponding to the advanced, middle, and back groups described. Each of those groups had four AI characters. That accounted for 12 AI characters; alongside them and the human player, there were three more characters not assigned to any of these groups.

Those three remaining characters were assigned to another group we called the "close group". This group always aimed to be with the human player (0 meters ahead of him) to try and simulate the characters that progress from one group to the other with the player. This group ensured some AI characters were near the player all the time, unless the player was outside the skill margins of the AI.

The "back group" was aiming to be with the human player (+0 meters) at the beginning of the race and 500 meters behind (-500 meters) at the end. This group was crowding the player's first meters, but was easily left behind soon after the race start.

The "middle group" was aiming 250 meters ahead of the player (+250 meters) at the beginning of the race and 250 meters behind (-250 meters) at the end. This group passes the player at the beginning, getting some advantage over him, and should progressively lose that lead. From the player's perspective he was gaining the positions slowly, and eventually left the group behind because of his skills. It doesn't feel like the group is letting you pass, because it's progressing slowly enough that it feels like you deserve to beat them.

Finally the "advanced group" was aiming 500 meters ahead of the player at the beginning of the race (+500 meters) and to be with the player (+0 meters) at the end. Thus at the beginning of the race this group tends to leave the player behind easily, but after the player passes the middle group, they would seem accessible.

Then, he could eventually catch them and fight for the first position right at the end of the race. Players feel rewarded as they work their way through the groups to finally join the advanced group (the one they lost sight of at the beginning) and even beat them in the final meters. It should be rewarding and exciting, keeping the thrill of the race right until the end.

The previous image shows how a race would typically evolve. The white dot is the player, while the blue dots are the back group, the green ones the middle group, the red ones the advanced group and the orange ones the close group.
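The group targets described above can be written down as a small table of start and end offsets. The sketch below is illustrative Python with hypothetical names; the offsets come from the text, but the linear interpolation between start and end is an assumption, since the article does not say how the point moves in between.

```python
# (offset at race start, offset at race end), in meters relative to the player.
GROUP_TARGETS_M = {
    "close": (0.0, 0.0),
    "back": (0.0, -500.0),
    "middle": (250.0, -250.0),
    "advanced": (500.0, 0.0),
}


def target_offset(group: str, progress: float) -> float:
    """Target offset for a group at a given race progress in [0, 1].

    Assumes the target point moves linearly from its start to its end value.
    """
    start, end = GROUP_TARGETS_M[group]
    progress = max(0.0, min(1.0, progress))
    return start + (end - start) * progress


for group in GROUP_TARGETS_M:
    print(group, [target_offset(group, p) for p in (0.0, 0.5, 1.0)])
```

Under this assumption the middle group's target crosses the player's position halfway through the race.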

There are a number of subtle extra mechanisms used to try to adapt to the script, but the one described above is key to the whole system. Every AI character may be set up to follow a different progression in relation to the human player, but they are also constrained by their skill limits. They will only increase or decrease their initial skills so much, so if this isn't enough to get to their position they will fail, performing better or worse than "expected".

It's important to note that we don't allow the AI to increase or decrease their ability as much as they may want, but instead limit their improvement to a range determined by the difficulty level. This helps to preserve the illusion of fairness in the game.

We really wanted the player to feel they have earned their place in the race. If they have a very bad race, they will see themselves punished for that (not getting to the first group at the end of the race). They will also be rewarded (by adding some distance to the next AI character) if they have a great race.

Extra Mechanisms used in Pure

As mentioned previously, we developed a few extra mechanisms to have the AI perform as expected:

At the very start of the race it's really important for the AI to overtake the player as then they become an obstacle the player has to avoid to progress. The group structure is also harder to form correctly if the player gets ahead.

Thus the AI will have the top skill in every category for the first seconds of the race (10-20 seconds). Then the AI characters interpolate to the final skills for another few seconds (~5 seconds). This usually helps form the groups. It doesn't work so well for very high difficulty levels, since in that case their skills are already close to the 1 value.
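A sketch of this start-of-race boost, in illustrative Python with hypothetical names. The 15-second boost is an assumed value inside the 10-20 second window mentioned above, and the linear blend is also an assumption.

```python
def start_phase_skill(final_skill: float, elapsed_s: float,
                      boost_s: float = 15.0, blend_s: float = 5.0) -> float:
    """Skill applied near the race start.

    For the first boost_s seconds the AI uses the top skill (1.0); it then
    blends linearly down to final_skill over the next blend_s seconds.
    """
    if elapsed_s <= boost_s:
        return 1.0
    if elapsed_s >= boost_s + blend_s:
        return final_skill
    t = (elapsed_s - boost_s) / blend_s
    return 1.0 + (final_skill - 1.0) * t


for seconds in (5.0, 17.5, 30.0):
    print(seconds, start_phase_skill(0.6, seconds))
```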

We found out people sometimes found the initial part of the race a bit easy. It didn't happen for everybody, but some people seemed to have it happen more often than others. We decided to include an additional modifier that decreased each lap until it was 0 in the final lap. For example, in a three lap race we would have all the AI characters with a +0.05 skill bonus during the first lap, then a +0.025 skill bonus the second lap and no bonus the last lap.
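The three-lap example above is consistent with a bonus that shrinks linearly per lap. The sketch below generalizes it to other lap counts, which is an assumption; the names are hypothetical.

```python
def lap_bonus(lap: int, total_laps: int, first_lap_bonus: float = 0.05) -> float:
    """Skill bonus for a 1-based lap number, reaching 0 on the final lap.

    Assumes a linear decrease from first_lap_bonus on lap 1.
    """
    if total_laps <= 1:
        return 0.0  # a single lap is also the final lap
    lap = max(1, min(total_laps, lap))
    return first_lap_bonus * (total_laps - lap) / (total_laps - 1)


print([lap_bonus(lap, 3) for lap in (1, 2, 3)])
```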

The script says that the player should be fighting for the first position during the last 20-25% of the race. If the "advanced group" is aiming for +0 meters at the end of the race then the player is only fighting with these riders for the very last meters (if any at all).

We decided to apply the moving target point mechanism only during the first 80% of the race. Thus the human player should be surrounded by the advanced group for the last 20-25% of the race and it should make it easier for him to achieve a first place. Note that when we talk of percentages of the race, we are referring to having covered that percentage of the total race length.
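One way to express this, sketched in Python under the assumption that the target point moves linearly, is to rescale race progress so that the script completes at 80% of the race length. The names are hypothetical; the 500-meter starting offset for the advanced group comes from the text above.

```python
def script_progress(race_progress: float, moving_fraction: float = 0.8) -> float:
    """Map race progress in [0, 1] to script progress in [0, 1].

    The target points only move during the first moving_fraction of the
    race; after that they stay at their final values.
    """
    race_progress = max(0.0, min(1.0, race_progress))
    return min(1.0, race_progress / moving_fraction)


def advanced_target_offset(race_progress: float) -> float:
    """Advanced group target: +500 m at the start, +0 m from 80% onward."""
    return 500.0 * (1.0 - script_progress(race_progress))


for progress in (0.0, 0.4, 0.8, 0.95):
    print(progress, advanced_target_offset(progress))
```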

Even with the previous measure it may still be hard for the player to achieve first place in the race, and it won't be very forgiving. If you make a mistake in the last meters you will probably suffer the consequences. We decided to stop the AI characters from improving their initial skill in the last meters of the race.

We actually decrease the maximum skill modifier in that last 20% of the race so that it is 0 at the end. That makes it harder for the AI to recover from their mistakes, giving the human player some room to maneuver. They still decrease their skill if the human player gets caught behind. This usually allows the player a bit of room in the final meters so that even if he makes a mistake at the very end the loss is minimal.
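A sketch of this end-of-race limit, in illustrative Python. The names are hypothetical, `base_max` stands for the difficulty-dependent maximum modifier, and the linear taper is an assumption. Only the positive side is reduced, so the AI can still lower its skills.

```python
def max_positive_offset(base_max: float, race_progress: float,
                        taper_start: float = 0.8) -> float:
    """Largest skill increase allowed at a given race progress in [0, 1].

    Constant until taper_start, then decreasing linearly to 0 at the finish.
    """
    p = max(0.0, min(1.0, race_progress))
    if p <= taper_start:
        return base_max
    return base_max * (1.0 - p) / (1.0 - taper_start)


def bounded_offset(desired: float, base_max: float, race_progress: float) -> float:
    """Clamp a desired skill offset: tapered upper limit, fixed lower limit."""
    upper = max_positive_offset(base_max, race_progress)
    return max(-base_max, min(upper, desired))


print(bounded_offset(0.3, 0.2, 0.5))   # mid-race: limited to the normal maximum
print(bounded_offset(0.3, 0.2, 1.0))   # at the finish: no improvement allowed
print(bounded_offset(-0.3, 0.2, 1.0))  # reductions are still allowed
```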

The algorithm presented here offers a solution to "configure" or "script" your race in a fixed, predefined way, but allows a dynamic evolution of the race that tries to match the human player's performance -- but in a subtle way, and with bounds, so that it doesn't break the sensation of fairness.

We hope that the system explained here, which is the underlying AI behaviour in Pure, was never obvious to the player and preserved the rewarding sensation of working their way through the race positions.

We think this method fits pretty well for our game since the genre is arcade racing. We wanted to present the player with exciting races where they are almost always surrounded by other guys and where they could easily progress, gaining positions, and overtaking opponents.

We wanted the top positions accessible but they had to fight for those positions at the end of the race, so that it's as thrilling and as exciting as possible. So we found this method fits our Hollywood-like goals very well.

We also think the algorithms described here may be used in other kinds of racing games (such as simulators) and should be easy to implement.

We also think it could be used for other kinds of games like FPSs, RTSs, RPGs, etc. For instance, in a shooter you may want to have the player reaching certain strategic points with a certain amount of energy (assuming you don't have auto-regeneration).

If the player is over that energy, then you know your AI guys must press harder, but if the player is below that zone your guys should leave him some room to recover.

Then you can put some bounds to that, so that your guys never stop fighting and never have a lucky 100% headshot rate, and you set these bounds based on the difficulty level.

On easy levels they shouldn't press very hard, no matter what the human player's energy is, and the strategic points should have higher reference energy values. It should be the opposite on the hardest difficulty level.
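The shooter idea could be sketched along the same lines. Everything in this Python sketch is hypothetical (the pressure scale, the bounds per difficulty, and the `gain` parameter); it only illustrates pressing harder when the player is above the reference energy, easing off below it, and bounding the result by difficulty.

```python
# Hypothetical (min, max) AI pressure per difficulty. The minimum is above 0
# so the AI never stops fighting; the maximum is below 1 so it is never perfect.
DIFFICULTY_BOUNDS = {"easy": (0.1, 0.4), "hard": (0.5, 0.9)}


def ai_pressure(player_energy: float, reference_energy: float,
                difficulty: str, gain: float = 1.0) -> float:
    """AI pressure in [0, 1] for energies expressed in [0, 1].

    Pressure rises when the player has more energy than the reference for
    the current strategic point and falls when he has less.
    """
    low, high = DIFFICULTY_BOUNDS[difficulty]
    neutral = (low + high) / 2.0
    pressure = neutral + gain * (player_energy - reference_energy)
    return max(low, min(high, pressure))


print(ai_pressure(0.9, 0.5, "easy"))  # healthy player, still capped on easy
print(ai_pressure(0.1, 0.5, "hard"))  # weak player, the AI eases off to its floor
```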

In short, this methodology presents a set of tools or values (the skills) that determine the way the AI characters behave. Then it uses those tools to try to match the player's experience to a predefined script, dynamically modifying the values of the skills based on the player's performance.

I would like to credit the people who worked shoulder to shoulder with me developing the Pure AI and whose ideas you may find in this article. It was thanks to working together, a lot of iteration, and sharing our ideas that we ended up with the successful AI system we finally had.

The article presented here is the result of one year of hard work iterating over the initial ideas and doing lots of tests to improve and evolve the existing systems. The focus tests that we had were especially important, and helped us realize what the problems behind the AI were.

I would like to thank, in no particular order, Jason Avent, Michael Benfield, Jim Callin, Iain Gilfeather, and Tom Williams for their input as well as all of the people who helped us blind-test the product throughout development, without whom we couldn't have achieved the quality level we have.
