The new grammar of television (and games): Lessons from an avocado

Designer and academic Dan Hill takes an in-depth look at a game about an avocado, drawing fascinating insight about interactivity in both television and video games.

Part three of a four-part series, in which a new game about an avocado and a young inventor sketches out new ideas for both television and video games.

Attention-seeking cameras

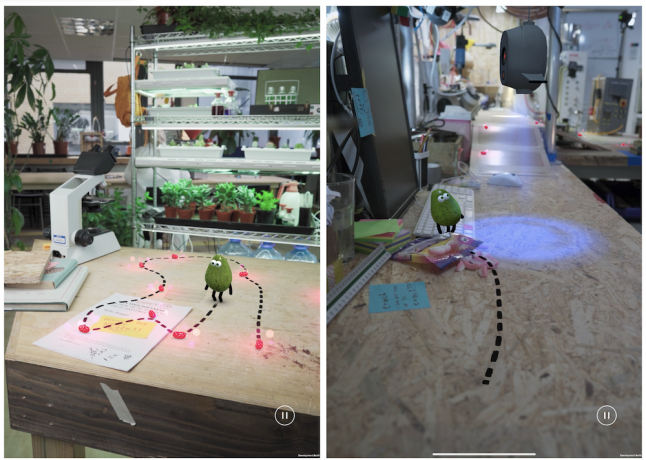

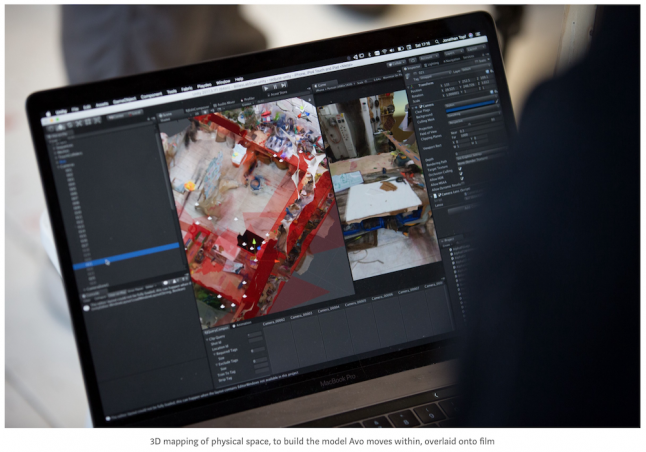

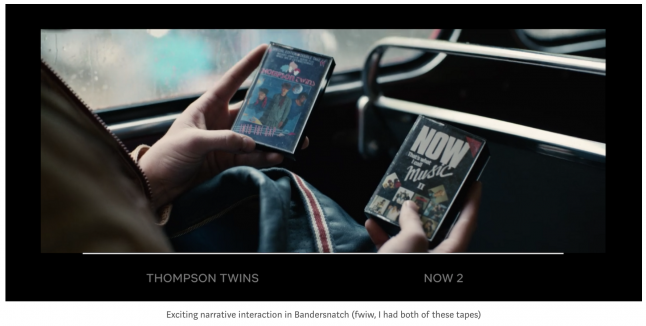

So what’s going on within Avo? The direct manipulation mentioned earlier, and the sense that this lends of “touching TV itself”, is in part enabled by a game engine (presumably Unity) and its ability to drop interactions on top of 3D environments mapped onto pre-filmed sequences. In other words, you control the Avo in Avo by moving a real-time animation over a set of filmed physical backdrops and scenes, of Billie’s studio, or of other locations. By apparently moving Avo freely in that world, you lean-in to the point of being-in. Avo exists on a separate layer super-imposed onto the set, yet thanks to real-time lighting effects and 3D scanning the bumpy green protagonist feels situated within, rather than upon. And so do you. This apparent freedom, albeit necessarily limited, moves Avo beyond Bandersnatch, with the latter really only a stack of pre-filmed elements piled up in a somewhat editable sequence. One ends up feeling rather disengaged, particularly when the experience is overlaid with the cynicism drenching the narrative, and thus it is essentially lean-out.

In this balance of lean-in, lean-out, it is a quite different experience to traditional console games, like Call of Duty or NBA 2K, say, which stress the lean-in of engagement with sometimes overly long lean-back moments in the extravagant cut-scenes: exposition and spectacle in Call of Duty; half-time commentary in NBA 2K. (I’d love to see the analytics on how often such cut scenes are skipped.) With Avo, this is a more rat-a-tat relationship, with shorter cut scenes dropped almost seamlessly in and out of lean-in gameplay elements, and thus a more engaged rhythm of lean-in, lean-out.

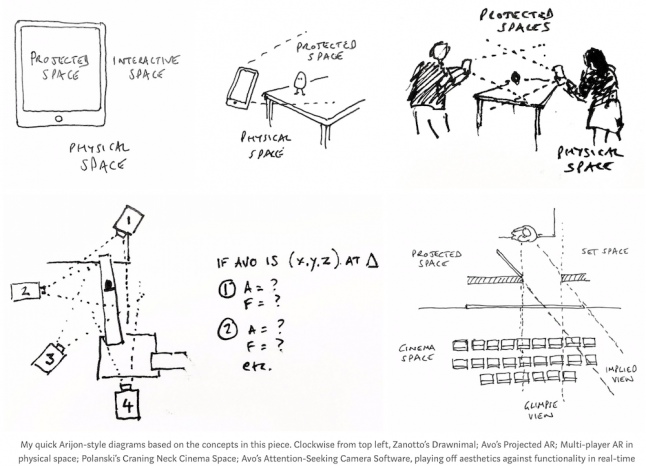

This dynamic capability is underpinned by a fascinating approach to camerawork. In gameplay, the backdrops are filmed sequences, without Avo but with the young inventor Billie and other active elements. These are necessarily shot beforehand. Yet due to Avo’s real-time freedom of movement—the thing is under your finger, after all—which could involve numerous camera angles, Playdeo have created software that essentially picks the best camera to present in real-time.

If Avo is walking along a long planar space, like a propped-up plank of wood between two tables, this might be best viewed side-on—a sort of sawn-off epic view that David Lean might tend towards, were he given a shed and an avocado rather than a desert and Peter O’Toole. Yet as you reach a decision-point, you, the player, need to see what’s ahead, so the optimum camera position there might switch to overhead, at an angle, to enable a glimpse of what’s around the corner. Having drawn a dotted line in that direction, for Avo to follow, the best camera might switch to one behind Avo, watching the fruit totter off. (The sound design, cleverly, does the same trick.)

Because Avo has CGI-level freedom of movement, any of these events could happen next—or you could turn around and head back up the plank to some beans you thought might be in another direction—hence a more distant view from behind, along the length of the plank, entirely perpendicular to the original David Lean shot. And all that could happen in a few seconds. Or not.

Playdeo’s software understands each of these cameras, their various viewpoints, their efficacy in terms of game elements (beans to collect, or objects important to the plot), or their sheer aesthetic qualities—does it look good, essentially. At any one time, each of the cameras is assigned a value based on a rapid assessment of these facets, trading off function against aesthetics. And the winning camera gets the edit. Again, in real-time, and in response to the subtle nudges your fingers are making.

The software suspends a kind of net around you and Avo, a space of possibility defined by the accumulated views from these attention-seeking cameras, and allocates camera time based on the best possible decision at that point.
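The valuation described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Playdeo's code: the `CameraView` fields, the 0 to 1 score ranges and the single `function_weight` knob are all assumptions made for the sake of the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CameraView:
    """One pre-filmed camera position (hypothetical structure)."""
    name: str
    function_score: float   # 0..1: how well it shows beans, plot objects, the way ahead
    aesthetic_score: float  # 0..1: how good the framing looks


def camera_value(view: CameraView, function_weight: float = 0.6) -> float:
    """Trade function off against aesthetics as a simple weighted sum."""
    return (function_weight * view.function_score
            + (1.0 - function_weight) * view.aesthetic_score)


def pick_camera(views: list[CameraView], function_weight: float = 0.6) -> CameraView:
    """The camera with the highest value 'gets the edit'."""
    return max(views, key=lambda v: camera_value(v, function_weight))


# Illustrative scores for the plank scene; the numbers are invented.
views = [
    CameraView("side_on", function_score=0.3, aesthetic_score=0.9),
    CameraView("overhead", function_score=0.9, aesthetic_score=0.4),
    CameraView("behind", function_score=0.6, aesthetic_score=0.6),
]

at_decision_point = pick_camera(views)                     # function matters most
on_open_plank = pick_camera(views, function_weight=0.2)    # aesthetics matter most
```

With these invented numbers, the overhead view wins when function is weighted heavily, and the side-on "David Lean" view wins when aesthetics dominate. A real system would presumably recompute the scores every frame from Avo's position rather than hold them fixed.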

Playdeo’s Ruby Bell:

“As a player, you’re dynamically editing between cameras. We’ve had to really consider the language of film, and the language of editing.”

The traditional movement of the virtual cameras in games has complete fluidity, as it is moving around in a space generated by CGI. Yet that freedom of movement, and potential edit, usually conforms to a series of typical positions, to simplify things: third person shooter; first person shooter; panning panorama, as in sports games NHL or NBA, and so on. But Avo’s approach, based on hybridizing a small fruit-shaped CGI element into 3D mapped CGI spaces, overlaid onto pre-shot backdrop sequences or views, is somehow more filmic, more TV.

As such, it sits between interactive and non-interactive entertainment, and creates a new space; integrated, and not opposed, as is usually assumed (as, say, in this piece at Gamasutra) or executed, flitting between interactive spaces and cut-scene sequences. Avo tries to do both, simultaneously. It asks questions about a real-time cinematography, and the notion of an algorithmic director/editor making decisions in real-time. You can find numerous research papers studying the idea of interactive cinematography, yet not many examples of genuine combinations of interactive and cinematic cameras as with Avo. Aalborg University’s Paolo Burelli writes, in ‘Game Cinematography: From Camera Control to Player Emotions’ (2016):

“With few exceptions, there is a strict dichotomy between interactive cameras and cinematic cameras in which the first one is used during the gameplay, while the second one is used for storytelling during cut-scenes.”

The popular hack and slash title Devil May Cry suggests this possibility, and in a very different and demanding genre. At moments, it moves its relatively traditional form of ‘third-person shooter’ camera control scheme into another mode, which Haigh-Hutchinson, in his paper Real-Time Cameras (2009), calls ‘pre-determined’: multiple cameras are placed around the environment, and the game switches between their views in real-time for both cinematic and functional effect, as per Avo.

Most of Devil May Cry is typical virtual camera movement; unlike Avo it is entirely CGI. Yet there is a suggestion of this switching between multiple pre-determined views. It will be interesting to see if the fast-paced demands of action games can explore this mode too. (You can sense it in this strangely appealing and esoteric fan video of the ‘back-end’ of the game engine.)

The question for the director/editor/game-designer here concerns this balancing act between creating a convincing universe, telling a compelling story, and providing enough functional hooks to create a game. Burelli again:

“(When) interactive narrative is analyzed as a component of a medium such as computer games, the focus of the cinematography and the purpose of the camera shifts away from solely supporting narration.”

In other words, film in this new hybrid format is not simply about narration, but interaction, function. This, again, pushes it close to design practice, as well as being a filmic and narrative concern, and draws on design’s perennial challenge to find a compromise between function and aesthetics.

Playdeo’s Nick Ludlam says that the ‘look-ahead’ of the line drawing helps the code “create a set of camera cuts that will frame you in the most interesting way at that moment.”

But what is “most interesting” is, well, an interesting question.
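One way to picture the look-ahead Ludlam describes is as a small planning step over the line the player has drawn. The sketch below is a guess at the shape of such a step, not Playdeo's method: it assumes each camera has a hypothetical "sweet spot" on the floor plan where it frames Avo best, and it stands in "nearest sweet spot" for "most interesting".

```python
from __future__ import annotations

import math

Point = tuple[float, float]


def plan_cuts(path: list[Point], sweet_spots: dict[str, Point]) -> list[tuple[int, str]]:
    """Walk the drawn path and record a cut wherever the best camera changes.

    Returns (waypoint_index, camera_name) pairs. 'Best' here simply means the
    camera whose sweet spot is closest to the waypoint, which is an assumption.
    """
    cuts: list[tuple[int, str]] = []
    for index, point in enumerate(path):
        best = min(sweet_spots, key=lambda name: math.dist(point, sweet_spots[name]))
        if not cuts or cuts[-1][1] != best:
            cuts.append((index, best))
    return cuts


# Hypothetical camera sweet spots and a dotted line drawn by the player.
sweet_spots = {"side_on": (0.0, 0.0), "overhead": (5.0, 0.0), "behind": (5.0, 5.0)}
drawn_path = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.0), (5.0, 1.0), (5.0, 4.0)]

cuts = plan_cuts(drawn_path, sweet_spots)
# cuts == [(0, "side_on"), (2, "overhead"), (4, "behind")]
```

Because the whole line is known before Avo sets off, the cuts can be planned in advance rather than chosen frame by frame. Swapping the distance measure for a richer score is where the question of what counts as “most interesting” would have to be answered.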

Eliminating anything that interferes

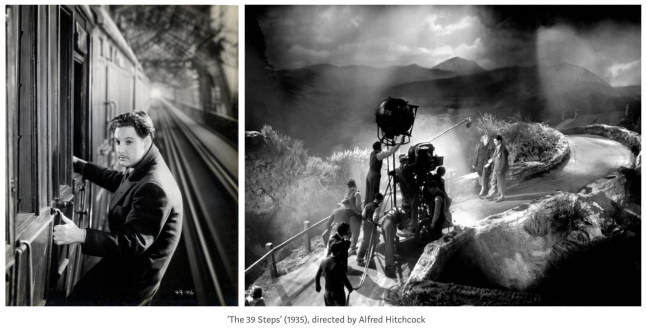

Lest this seem a little overly algorithmic, it’s worth noting Alfred Hitchcock’s belief, in framing and editing, that everything is subservient to the narrative, to the emotion. In his famous rolling conversations with François Truffaut, he outlined his own methods and, yes, rules.

Hitchcock had little time for this apparent compromise between function and aesthetics. He repeatedly suggested there was one primary intent behind his editing and framing: the story.

Hardly the most reliable of narrators, Hitchcock also relentlessly invented and reinvented cinema’s aesthetic. Yet in the Truffaut conversations he repeatedly comes back to the primacy of storytelling, and for visual invention to get us there.

For Hitchcock, and arguably most videogames, the story unfolds visually, through action rather than prose. For Hitchcock, this is not a novel, after all, and the constraints of the theatre stage need not apply either.

“In many of the films now being made, there is very little cinema: they are mostly what I call ‘photographs of people talking.’ When we tell a story in cinema we should resort to dialogue only when it’s impossible to do otherwise. I always try to tell a story in the cinematic way, through a succession of shots and bits of film in between.”

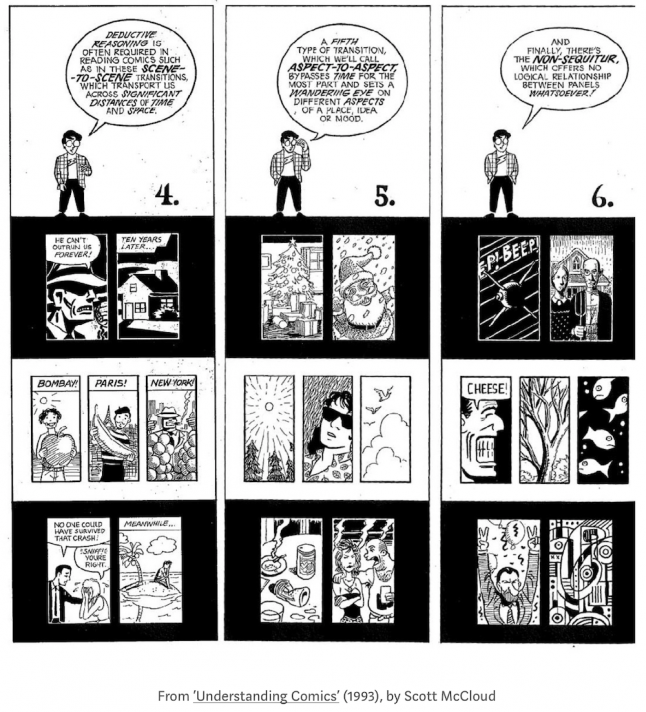

In Scott McCloud’s Understanding Comics—a key text for interaction designers, as it happens—McCloud outlines a vocabulary for such transitions. Transitions are key to games, film and comics, obviously, but the key issue of closure—the viewer/reader closing the gap between the frames as their own invention—also suggests a deeper level of agency in viewer and reader (a concept common to literary theory and practice concerning the postmodern short story, whose brevity and mode necessarily draws the reader in to complete the text, after Angela Carter, Marina Warner and Julia Kristeva. A different kind of lean-in, or be-in.)

So transitions themselves enable a key form of agency, even in ostensibly non-interactive forms like comics and novels, never mind high-agency lean-in media like videogames. McCloud’s typology is useful here. His ‘Aspect-to-Aspect’ transitions best describe what’s going on with these multiple pre-determined real-time edits in Avo. Interestingly, he notes these are more commonly seen in Japanese comics than in American or European.

McCloud suggests this form of transition is about ‘being here’ rather than ‘getting there’, a different balance of the journey and the destination. It’s an unusual mode for goal-oriented media like video games or traditional linear narrative TV or film. Some game formats, like the ‘open world’ games of No Man’s Sky or Red Dead Redemption for instance, can emphasize the ‘being here’ aspect. But Avo is trying to strike a balance between freedom of movement, the heightened agency involved in the closure required of comics, and the goal-oriented mode of plotline development and unfolding narrative.

Hitchcock told Truffaut he delighted in developing the “swift transitions” he needed for The 39 Steps (1935):

“The rapidity of those transitions heightens the excitement. It takes a lot of work to get that kind of effect, but it’s well worth the effort. You use one idea after another and eliminate anything that interferes with the swift pace.”

Playdeo’s balance of code, film and interaction has created a generative space which has to walk through these possibilities, automatically (or algorithmically), and in real-time. The attention-seeking cameras have to automatically do what Hitchcock was doing, not in a Hitchcock style (although it might be interesting to provide training data for the cameras based on different directors! Activate Godard-mode! Activate Bruckheimer-mode!) but in terms of “eliminating anything that interferes.”

Hitchcock said he reduced everything to this purpose:

“I’m not concerned with plausibility; that’s the easiest part of it, so why bother? … I am against virtuosity for its own sake. Technique should enrich the action. One doesn’t set the camera at a certain angle just because the cameraman happens to be enthusiastic about that spot. The only thing that matters is whether the installation of the camera at a given angle is going to give the scene its maximum impact. The beauty of image and movement, the rhythm and the effects—everything must be subordinated to the purpose.”

Making a hybrid of game and TV programme is extraordinarily complex, yet that complexity has been neatly “subordinated to the purpose” by Playdeo. Its image and movement, rhythm and effects are being calibrated by code in real-time, subordinate to this balance of player’s agency and plot development.

Truffaut later points out to Hitchcock that “in your technique everything is subordinated to the dramatic impact; the camera, in fact, accompanies the characters like an escort.” This is entirely how the virtual cameras hover around Avo, and your interactions with the character, just as Hitchcock would never show a train speeding through the countryside from a distance (“giving us the viewpoint of a cow”, he snorted) but his cameras would stay on the train, with the characters. Hitchcock had his rules, even about how, as Truffaut put it, the cameras should not “precede the action instead of accompanying it … the camera should never anticipate what’s about to follow.” Hitchcock added, “If a character moves around and you want to retain the emotion on his face, the only way to do that is to travel the close up.”

In Avo, the cameras swing back for aesthetic effect only, when Avo has to travel a distance, or there’s a chance of a wide. When conveying emotion, to Hitchcock’s point, the camera is tight on Avo. One can see how rules could be developed, to enable this array of virtual cameras for CGI and attention-seeking cameras for predetermined elements. (Playdeo’s founders almost exemplify these various inputs themselves: Timo Arnall’s ability to fluidly synthesize high-craft film with design; Nick Ludlam’s code creating a vocabulary for this new dialogue, for the off-screen chatter between those cameras and the CGI; Jack Schulze’s capability with interactive product design, as well as character and narrative.)
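The kind of rule imagined above, tight for emotion and wide only when there is distance to cover, could be written down very simply. The following is a hypothetical sketch: the function name, the framing labels and the `wide_threshold` value are invented for illustration and do not describe how Avo is actually built.

```python
def choose_framing(conveying_emotion: bool,
                   travel_distance: float,
                   wide_threshold: float = 3.0) -> str:
    """Pick a framing for the next shot using two Hitchcock-flavoured rules.

    Rule 1: emotion always wins, so stay tight on the character.
    Rule 2: otherwise, pull back only when there is real distance to travel.
    """
    if conveying_emotion:
        return "close_up"
    if travel_distance >= wide_threshold:
        return "wide"
    return "medium"


framing_for_long_walk = choose_framing(conveying_emotion=False, travel_distance=6.0)
framing_for_sad_avo = choose_framing(conveying_emotion=True, travel_distance=6.0)
```

Ordering the checks this way encodes Hitchcock's priority: even on a long walk, a moment of emotion keeps the camera close.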

New Grammar of the Film Language

So does this suggest a new rule-set, a new grammar?

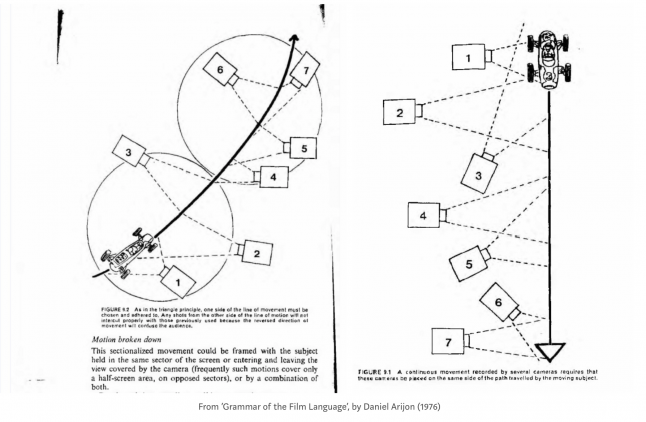

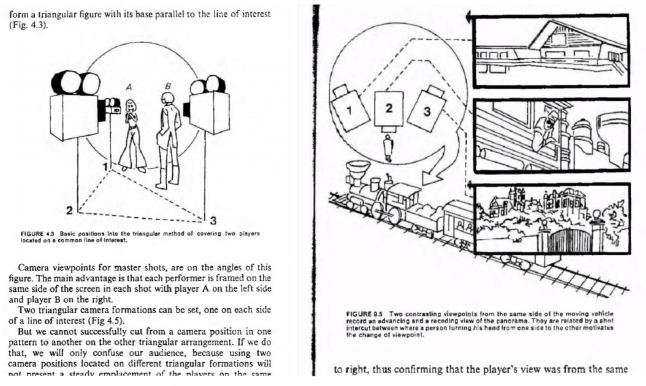

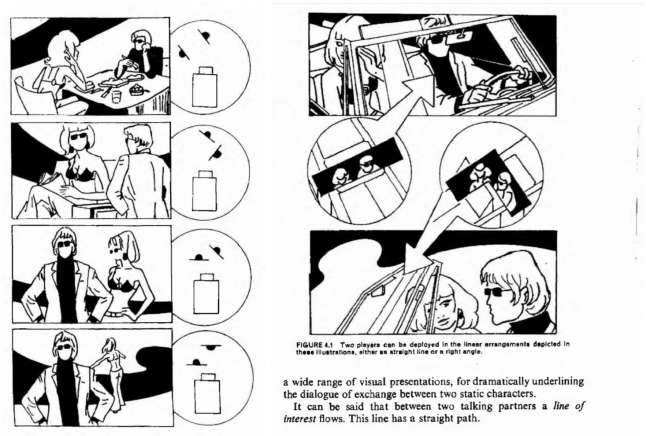

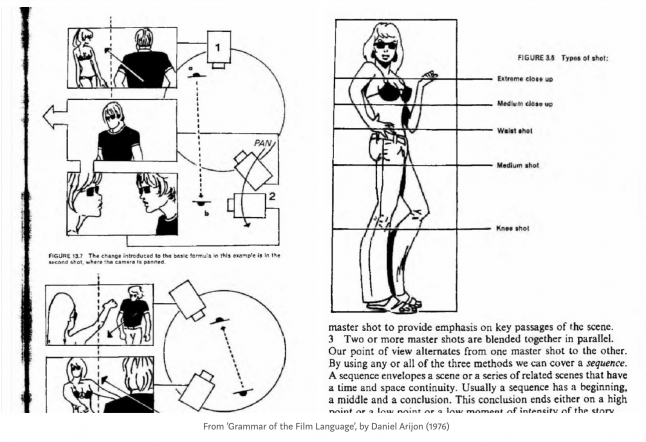

Daniel Arijon’s 1976 book, Grammar of the Film Language, became the de facto guide to filmmaking, in terms of framing, editing and shooting for films and TV. Its focus is on the visual grammar itself: how to shoot a narrative sequence, essentially. It provides a vocabulary, and visual language, for making film and TV. Across 600 incredibly detailed and illustrated pages, Arijon describes the way cameras ought to be positioned or move, the way that cuts should happen, the way scenes should be framed, the entire grammar of filmmaking, shot by shot, scene by scene, story by story.

Yet in those 600 pages, there is, of course, little or no mention of audience or viewers, or their context. The grammar is constrained to the frame of the film, and the depth within that frame. The output device is assumed to be a cinema, perhaps a TV in a living room, and so the interaction is necessarily lean-back, and the spatial environment perhaps assumed to be an armchair, or row of armchairs.

Yet looking at the rather lovely cartoony diagrams in Arijon’s book, and then mentally panning across to Avo, or Monument Valley, Dance Dance Revolution, Drawnimal, two-player NBA, multi-living room Fortnite or Apex Legends, outdoor Pokemon Go, and yes, Bandersnatch, a different set of diagrams begins to be imagined, Arijon’s originals augmented in multiple dimensions. (Warning: this being a book by a man in the 1970s, it is apparently necessary for all women to be portrayed in a bikini.)

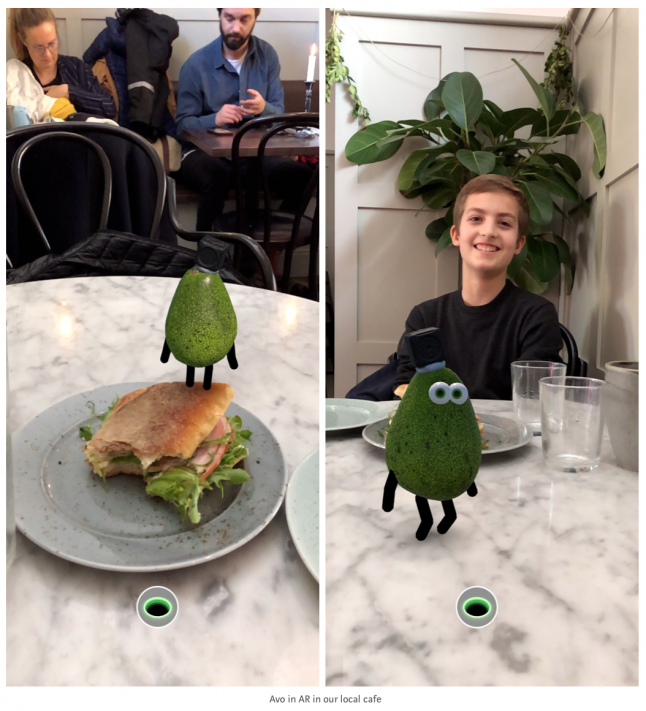

The frame is particularly broken into another dimension by Avo’s subtle deployment of true augmented reality, for one thing. The viewing or playing context also involves a further dimension, of the space you are in as well as the space implied on-screen. It is spatial, physical, mobile, distributed across multiple spaces, and lean-in as well as lean-out. The interaction is embodied, and social.

Arijon could hardly have been writing with videogames in mind, yet his grammar is narrow, even given the context of the time. It doesn’t take account of the impact on the audience’s space that an inventive director could have. Playing with the space of the cinema, rather than simply the frame, is not unknown in film, including the movies Arijon was writing around and about.

Famously, in Rosemary’s Baby (1968), director Roman Polanski and cinematographer William Fraker staged a scene in the Woodhouse apartment set, wherein satanic collaborator Minnie Castevet is told that Rosemary is pregnant, and she leaves the living room and enters a bedroom to call the other coven members with ‘the good news’. To represent Rosemary’s point-of-view, Polanski insisted that the shot should be framed such that the audience could only see a portion of the actor, and not the phone at all.

Fraker recalls:

“I didn’t get it until we were in dailies and I saw everyone leaning in their seats, trying to look around the edge of the door frame to see what was happening.”

It’s this understanding that the spatial context of the screen can be part of the experience of framing that is now emerging most clearly in videogames, which can take advantage of the more engaged lean-in dynamic of players, the social interaction around multiplayer games in multiple spaces, as well as beginning to augment the environment through AR and other techniques.

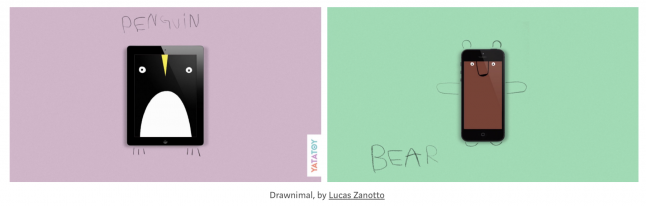

Thinking of this space around the app, I’m also struck by another designer’s work with games: Lucas Zanotto, and particularly his apps (or are they toys?) Drawnimal, Bandimal, Loopimal etc.

With his early Drawnimal, designer Lucas Zanotto’s clever use of the space around the device implies a different kind of augmented reality altogether, with pen, paper, and screen. Either way, we are encouraged to envisage the halo around the device as part of the experience.

Thanks to the size and resolution of contemporary devices, and their balance of individual attention and social interaction, I’ve played/watched Avo with my kids, with all of us pointing, drawing, watching. This belies the notion that the phone is necessarily a strictly individuating device, just as watching any kids crowding around a shared screen on one of their phones will do likewise. Yet unless we design with purpose, the phone is indeed a largely individuating device. So reaching into the space around the device is interesting, and the ability to use the immediate environment even more so.

Watch the reaction of the students in this project by Ericsson ONE for UN HABITAT (my former team and I worked on the early stages of this project). This combines the mobile’s social function with its ability to ‘draw’ on the environment around, in this case using Minecraft as the pen and the city itself as the paper.

Avo, when it breaks out into AR mode, has just this delightful effect. Clearly Playdeo have more in mind in terms of AR, and Avo is a nod in this direction. But meaningful AR is hard.

As Shigeru Miyamoto, who knows a few things about games, said:

“You can use a lot of different technologies to create something that doesn’t really have a lot of value.”

Avo has judged that its current use of AR is just about enough. It’s there, but not yet everywhere. Avo hints at the potential rather than doubling down on it just yet, for all the effort it takes just to make the hint.

One could see the potential trajectory in games like the lovely Unravel 2, by Swedish developers Coldwood. Its protagonist is similar in size and character to Avo, and we can also imagine it in AR, parkour-ing off the living room furniture around you, yet based on multiplayer collaboration.

We’re not there yet, technically, but not so far off that we can’t see it.

Setting the invention in Avo (the AR, the attention-seeking cameras, the lean-in, lean-out rhythms, and more besides) against Netflix’s Bandersnatch, we can see the latter suffers in comparison, despite it garnering many more column inches when it launched last year. Bandersnatch, as with almost all Black Mirror episodes, is beautifully crafted, sharply scripted, well-acted, and essentially compelling viewing, in a ‘rubber-necking contemporary culture’ mode. But in the end, it is neither as inventive nor as optimistic as Playdeo’s work.

Whilst it is a technically impressive feat to deliver multiple video files within the Netflix platform with little or no latency, the viewer is still essentially choosing between stacks of predetermined media files, and that’s it. There is the most basic lean in, and the most basic interaction, pecking at one predetermined narrative fork over another, before the lean out which characterizes most of the show.

Bandersnatch’s footage is ‘hardcoded’, incapable of truly changing, as opposed to the game-like infinite possibilities of Avo’s attention-seeking cameras and the interactive CGI overlay itself. In Bandersnatch, the viewer’s interaction is reduced to simplistic choice: jabbing this phrase as opposed to that, at predetermined points. That may be a sharp critique of contemporary culture, but technically, formally, Bandersnatch is really just serving up media files. It’s clever, but ultimately a little empty.
