Game Design Deep Dive: Using vision as cursor in Tethered

"VR offers new ways of interacting that allow us to get much closer to that mouse and pointer functionality by turning your face into a mouse pointer," says Ian Moran, technical director of Tethered.

Deep Dive is an ongoing Gamasutra series with the goal of shedding light on specific design, art, or technical features within a video game, in order to show how seemingly simple, fundamental design decisions aren't really that simple at all.

Check out earlier installments, including using a real human skull for the audio of Inside, the challenge of creating a VR FPS in Space Pirate Trainer, and The Tomorrow Children's voxel-based lighting system.

Who: Ian Moran, Technical Director and Programmer at Secret Sorcery

I'm technical director at Secret Sorcery. Previously, I was a founder at Rage Software, and I’ve held programming positions at THQ and Evolution Studios. Titles I have worked on include Striker, Incoming, the Juiced series, MotorStorm RC, and Driveclub. Most of my recent time has been spent prototyping and programming Tethered, our debut VR game at Secret Sorcery.

Having worked with several generations of new hardware since the ’80s, I’ve seen each wave bring step changes in capability that allowed increasingly sophisticated content to be viewed flat on a screen. The latest wave of hardware allows something truly special: a paradigm shift that lets players be fully immersed in a virtual reality. This is more than a qualitative improvement; it is a change of medium.

Secret Sorcery was formed at the confluence of opportunity and technology, with the explicit mission of producing games for this new medium. Virtual reality is finally here, and it brings with it some exciting and tricky challenges for developers to overcome.

What: Tethered's use of vision as cursor

After developing various VR prototypes, we realized that we wanted to move beyond great 10-minute VR experiences and produce immersive games that players would want to play for extended sessions. We settled on a god game, which felt like a great fit for VR.

In a short experience, it seems to matter less if the player is affected by fear or nausea, but in a game likely to be played for extended periods, comfort is paramount to enjoyment.

One fundamental challenge was arriving at a view-based selection system, which depended on developing comfortable target reach and intuitive selection.

You progress in Tethered by accumulating ‘Spirit Energy’. Along the way you will be giving purpose to your ‘Peeps’, solving environmental puzzles, building infrastructure and wielding your power over the elements such as the sun, the wind, the rain, and lightning.

All of this is possible using the simple tethering mechanic. You begin by selecting a Peep or weather cloud and dragging a ‘tether’ to another game object, which offers contextual possibilities; dropping the ‘tether’ then binds that fate between the two objects.

Why?

The best god game interfaces have traditionally found traction on PC, with mouse-and-pointer object selection. These tools let the player select and organize game objects with precise point and click. While some interesting designs have attempted to mitigate the limitations of pad-based selection, none have done it justice. Virtual reality, on the other hand, offers new ways of interacting that let us get much closer to mouse-and-pointer functionality, by turning your face into a mouse pointer.

We had anticipated needing additional methods of object selection, as picking objects with gaze seemed likely to be very tiring over extended play sessions. It turns out, however, that we are naturally well exercised in holding our heads up! It is one of the few things that even a programmer like myself, who tends to lead a somewhat sedentary existence behind a computer screen, does for most of the waking day. With careful application of some simple methods, we were able to make an intuitive selection system that is comfortable during substantial play sessions.

Comfort is key

We had already implemented a vantage-point view system specifically designed to avoid motion sickness by avoiding autonomous camera movement and accelerations, and our godly scale helps avoid vertigo, despite you literally having your head in the clouds.

To let the player pick and interact with targets intuitively, we then needed to address two things: comfortable viewing of targets, and evaluation of the most likely target of interest.

Cone of comfort

In the real world, when we pivot our head we generally adjust our bodies before the point that the head turn becomes uncomfortable. But for longer, seated play sessions this is not practical.

In seated virtual reality, rotational reach is most comfortable when looking directly ahead, and comfort drops off as the angle to the target increases. Although comfort varies from player to player, we tried to determine a notional average comfortable rotational reach.

We extend comfortable reach by mirroring the tendency of the eyes to lead when targeting. When the player turns to focus on objects away from dead ahead, their eyes tend to lead the head, which we account for by adding a small fractional offset to the reticule. Used very subtly, this effectively extends the comfortable rotational reach. We apply it around both the vertical and horizontal axes.

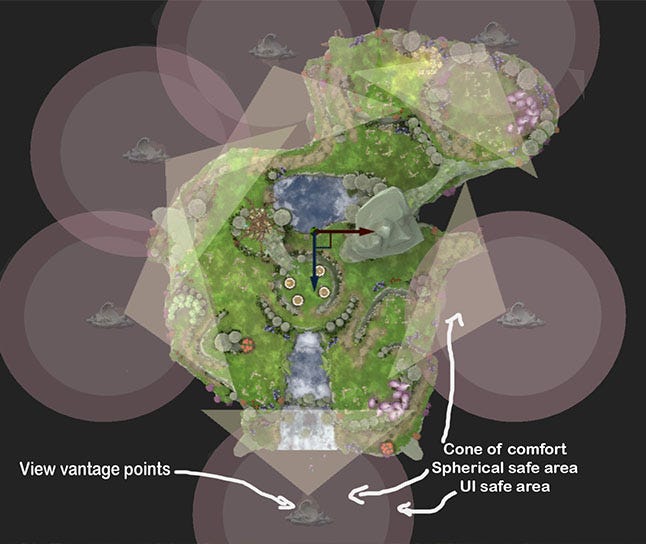
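The eye-lead offset can be sketched as scaling the head's yaw and pitch by a small factor before building the reticule direction. This is a minimal illustration, not Tethered's code: `EYE_LEAD_FRACTION` and both function names are hypothetical, and the value 0.1 is an assumption because the article gives no figure.

```python
import math

# Hypothetical tuning value: the article only says the offset is "fractional"
# and used "very subtly"; it gives no number.
EYE_LEAD_FRACTION = 0.1


def reticule_angles(head_yaw: float, head_pitch: float,
                    lead: float = EYE_LEAD_FRACTION) -> tuple[float, float]:
    """Return the reticule's (yaw, pitch) in radians.

    Angles are measured from the vantage point's forward direction. The
    reticule leads the head by a small fraction of its rotation on each
    axis, approximating the eyes leading a head turn.
    """
    return head_yaw * (1.0 + lead), head_pitch * (1.0 + lead)


def direction_from_angles(yaw: float, pitch: float) -> tuple[float, float, float]:
    """Unit direction vector for yaw/pitch (x right, y up, z forward)."""
    cos_pitch = math.cos(pitch)
    return (math.sin(yaw) * cos_pitch, math.sin(pitch), math.cos(yaw) * cos_pitch)
```

With a 10% lead, a 40° head turn places the reticule at 44°, so a target at 44° is reachable for the cost of a 40° turn. Keeping the fraction small limits how far the reticule can drift from where the player is actually looking.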

To ensure comfort, we then have a level design rule that all targets must be within our comfortable rotational reach. We enforce it by defining a cone of comfort that describes acceptable positions, relative to the vantage points the player plays from, for objects the player might want to address.
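A cone-of-comfort rule lends itself to an automated level check: measure the angle between a vantage point's forward axis and each addressable object, and flag anything beyond the cone's half-angle. The sketch below assumes a single circular cone; the 60° half-angle and all names are hypothetical, since the article does not publish its notional average reach.

```python
import math

Vec3 = tuple[float, float, float]

# Hypothetical half-angle; the article describes a notional average reach
# but does not publish the figure.
COMFORT_HALF_ANGLE_DEG = 60.0


def angle_to_target(vantage: Vec3, forward: Vec3, target: Vec3) -> float:
    """Angle in radians between the vantage point's forward axis and a target."""
    to_target = [t - v for t, v in zip(target, vantage)]
    dot = sum(a * b for a, b in zip(to_target, forward))
    norms = math.hypot(*to_target) * math.hypot(*forward)
    if norms == 0.0:
        return 0.0  # target at the vantage point itself: treat as dead ahead
    return math.acos(max(-1.0, min(1.0, dot / norms)))


def targets_outside_cone(vantage: Vec3, forward: Vec3, targets: dict[str, Vec3],
                         half_angle_deg: float = COMFORT_HALF_ANGLE_DEG) -> list[str]:
    """Level-design check: names of targets that fall outside the comfort cone."""
    limit = math.radians(half_angle_deg)
    return [name for name, pos in targets.items()
            if angle_to_target(vantage, forward, pos) > limit]
```

Run per vantage point at build time, this turns the design rule into a list of offending objects for a designer to move.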

Spherical safe area. Free from world geometry

It’s a spectacular thing in VR to have elements close to view. Maybe I’m showing my age here, but think along the lines of a spike poking out of the screen in old 3D movies. This is dramatically effective, but uncomfortable after even a short time.

Close geometry is also likely to be intersected by a moving viewpoint, in other words the player’s head. Having your head in anything other than clear air collapses the suspension of disbelief and interferes with gameplay.

To maintain immersion and comfort, we reserve the closest areas for temporary effects only, such as particles and our voxel effects. Around each vantage point we define a spherical “safe area”, sized from how far a seated player can comfortably move their head, and we keep gameplay geometry out of it. We can visualize this sphere during level construction and use it as a level design rule that completely prevents gameplay geometry from intersecting the player’s view.
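The safe-area rule can likewise be checked automatically with a sphere-versus-box overlap test per piece of gameplay geometry. This sketch assumes geometry is approximated by axis-aligned bounding boxes and that the radius comes from a seated-reach estimate; `Box`, `safe_area_violations`, and the example values are hypothetical.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounds of one piece of gameplay geometry (an assumption)."""
    name: str
    lo: Vec3
    hi: Vec3


def sphere_touches_box(center: Vec3, radius: float, box: Box) -> bool:
    """True if the sphere and the box overlap (closest-point test)."""
    dist_sq = 0.0
    for c, lo, hi in zip(center, box.lo, box.hi):
        nearest = min(max(c, lo), hi)  # clamp the sphere center onto the box
        dist_sq += (c - nearest) ** 2
    return dist_sq <= radius * radius


def safe_area_violations(vantage: Vec3, radius: float, geometry: list[Box]) -> list[str]:
    """Names of gameplay geometry inside a vantage point's safe sphere.

    Temporary effects such as particles are deliberately not passed in:
    only gameplay geometry must stay out of the safe area.
    """
    return [box.name for box in geometry if sphere_touches_box(vantage, radius, box)]
```

The closest-point test is exact for boxes; tighter collision shapes would need their own overlap tests.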

Picking objects out. Ray cast, view value and snap

To evaluate which object the player wants to interact with, we cast a ray from the player’s view. As described above, this ray includes the fractional offsets that aid comfort, which also normally gives a more likely target direction than straight ahead in the headset.

The fractional offset helps in most general scenarios, but like any extrapolation technique, when its prediction runs against what the player actually intends, it increases error rather than correcting it. We keep the fraction marginal to reduce the scope for pathologically counterintuitive results.

The result from this ray cast can identify the likely object of interest, for example a Peep, a building, a resource or what have you.
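The picking ray cast can be sketched as a nearest-hit test against each target's bounding sphere, using the offset reticule direction rather than the raw headset forward vector. An engine would normally use its physics ray cast against colliders instead; the sphere approximation, `Target`, and `pick_by_ray` are assumptions made to keep the example self-contained.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Target:
    name: str
    center: Vec3
    radius: float  # bounding-sphere approximation of the target (an assumption)


def ray_sphere_distance(origin: Vec3, direction: Vec3, target: Target) -> Optional[float]:
    """Distance along a unit-length ray to the target's sphere, or None on a miss."""
    oc = [o - c for o, c in zip(origin, target.center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - target.radius ** 2
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    near, far = -b - root, -b + root
    if near >= 0.0:
        return near
    return far if far >= 0.0 else None  # origin inside the sphere, or sphere behind


def pick_by_ray(origin: Vec3, direction: Vec3, targets: Sequence[Target]) -> Optional[Target]:
    """Closest target hit by the view ray, or None if the ray hits nothing."""
    best, best_dist = None, math.inf
    for target in targets:
        dist = ray_sphere_distance(origin, direction, target)
        if dist is not None and dist < best_dist:
            best, best_dist = target, dist
    return best
```

Returning `None` on a miss leaves room for a fallback pick when the ray finds nothing.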

The ray cast works well when targets are of an easily targetable size, but it is not without its problems, especially at distance. Not all objects are the same shape, which meant we needed to augment selection with something more. When a ray cast fails to return a contact, we go on to give each target a view value based on the distance and angle to its selectable point. We also snap to the most likely candidate to make selection feel more positive, and add a small degree of stickiness to reduce the tendency to lose a target when tracking it or when the view wavers.
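The fallback pick might look something like the sketch below. The view value is expressed here as a cost (lower is better) combining angle and distance to each selectable point, candidates outside a small snap cone are ignored, and the currently held target gets a bonus plus a slightly wider cone to provide stickiness. The weighting formula and all constants are hypothetical; the article names the ingredients but not how they are combined.

```python
import math
from typing import Optional

Vec3 = tuple[float, float, float]

# Hypothetical tuning constants; the article names the ideas but not the values.
SNAP_CONE = math.radians(8.0)     # candidates further off-axis are ignored
DISTANCE_WEIGHT = 0.002           # cost added per metre of distance (radians/m)
STICKINESS = math.radians(1.5)    # bonus for the target already being tracked


def _angle_between(u: Vec3, v: Vec3) -> float:
    norms = math.hypot(*u) * math.hypot(*v)
    if norms == 0.0:
        return math.pi
    dot = sum(a * b for a, b in zip(u, v))
    return math.acos(max(-1.0, min(1.0, dot / norms)))


def snap_target(origin: Vec3, view_dir: Vec3, selectable_points: dict[str, Vec3],
                current: Optional[str]) -> Optional[str]:
    """Fallback pick for when the view ray hits nothing.

    Each candidate's cost (lower is better) combines the angle between the view
    direction and its selectable point with a small distance penalty. The
    currently held target gets a cost bonus and a slightly wider cone, so
    small view wobbles do not drop it.
    """
    best, best_cost = None, math.inf
    for name, point in selectable_points.items():
        to_point = tuple(p - o for p, o in zip(point, origin))
        angle = _angle_between(view_dir, to_point)
        cost = angle + DISTANCE_WEIGHT * math.hypot(*to_point)
        cone = SNAP_CONE
        if name == current:
            cost -= STICKINESS
            cone += STICKINESS
        if angle <= cone and cost < best_cost:
            best, best_cost = name, cost
    return best
```

Giving the held target a wider cone than new candidates is a simple form of hysteresis: the selection does not flicker when the view hovers near the boundary between two targets.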

UI Spherical safe area

We also use target picking with UI dialogs. Our initial goal had been to avoid, or at least minimize, UI elements, favoring interpretation and the use of audio to give the player key gameplay indicators, but we found that in-world layered UI elements can look interesting and are clearly well understood in practice.

Informational UI elements can be kept at the same depth as the object of interest; for example, the reticule always surrounds a plausible target when the player looks at something like a Peep. For more substantial menus and dialogs, such as build menus, we found we could avoid visibility and intersection issues by reserving an area at the extent of the previously defined spherical “safe area”. With that area reserved for UI elements, we could show any UI dialogs we wanted at a readable distance and keep them safe from intersecting both world geometry and the player’s view.
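One way to place a dialog at the extent of the safe area is to push it along the view direction until its corners touch the sphere, then confirm it still sits beyond the head's reach. This is a geometric sketch under assumptions: the dialog is a flat rectangle facing the vantage point, and `place_dialog`, `head_reach`, and the example dimensions are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def place_dialog(vantage: Vec3, view_dir: Vec3, safe_radius: float,
                 half_width: float, half_height: float,
                 head_reach: float) -> tuple[Vec3, Vec3]:
    """Place a flat dialog at the edge of the safe sphere along the view direction.

    The dialog center is pushed out as far as possible while its corners stay
    inside the sphere (which level design keeps free of world geometry), and it
    must stay beyond the head's reach. Returns (center, facing), with the
    facing vector pointing back towards the vantage point.
    """
    length = math.hypot(*view_dir)
    unit = tuple(c / length for c in view_dir)
    half_diag_sq = half_width ** 2 + half_height ** 2
    if half_diag_sq >= safe_radius ** 2:
        raise ValueError("dialog is too large for the safe area")
    # A corner at perpendicular offset h from the center lies at sqrt(d^2 + h^2)
    # from the vantage point, so d = sqrt(R^2 - h^2) puts the corners on the sphere.
    dist = math.sqrt(safe_radius ** 2 - half_diag_sq)
    if dist <= head_reach:
        raise ValueError("dialog would fall within the player's head reach")
    center = tuple(v + u * dist for v, u in zip(vantage, unit))
    facing = tuple(-u for u in unit)
    return center, facing
```

Because the sphere is convex, a flat dialog whose corners lie on it stays entirely inside, so world geometry kept out of the sphere can never cut through the dialog.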

Function reticule

We wanted to minimize UI elements that tend to break immersion (we shelved target outlining, for example), but we do have in-world info cards and dialogs and, possibly most importantly, a reticule that helps with targeting and function.

To highlight a target, we display the reticule full time, which helps confirm the direction of view and the likely target of interest. When the reticule could interact with a target in some way, we expand it and instantly show the possible contextual function as an icon within the reticule.

The contextual tether function(s) shown let the player see that binding a tether will, say, farm a field rather than strike it with lightning. We find this lets players identify functions and apply instructions quickly and simply.

Some targets have a secondary option; for example, you can farm a field or upgrade it. Options like these are needed less often, and although multifunction popups or menus were a possibility, we implemented a simple multifunction reticule that switches to the less frequent instruction when the player dwells on the target for a short time. This keeps the primary function instantly accessible and the secondary function accessible without any additional UI.
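The multifunction reticule behaves like a small state machine: it expands with the primary icon as soon as an interactable target is picked, and switches to the secondary icon once the same target has been held for a dwell time. The sketch assumes the timer restarts whenever the target changes and that the secondary option stays shown while dwelling continues, details the article does not specify. `Interactable`, `Reticule`, and the 0.5 s dwell are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical dwell time; the article only says "a short time".
DWELL_SECONDS = 0.5


@dataclass(frozen=True)
class Interactable:
    name: str
    primary: Optional[str]            # e.g. "farm"; None if not interactable
    secondary: Optional[str] = None   # e.g. "upgrade"; less frequently needed


class Reticule:
    """Multifunction reticule: primary icon at once, secondary after a dwell."""

    def __init__(self, dwell: float = DWELL_SECONDS) -> None:
        self.dwell = dwell
        self._target: Optional[str] = None
        self._held = 0.0

    def update(self, target: Optional[Interactable], dt: float) -> tuple[bool, Optional[str]]:
        """Advance by dt seconds; return (expanded, icon to show)."""
        name = target.name if target is not None else None
        if name != self._target:
            self._target, self._held = name, 0.0  # new target restarts the dwell timer
        else:
            self._held += dt
        if target is None or target.primary is None:
            return False, None  # plain reticule, no function icon
        if target.secondary is not None and self._held >= self.dwell:
            return True, target.secondary
        return True, target.primary
```

Called once per frame with the picked target, this keeps the common action one glance away while the rarer one needs only a brief pause.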

Reinforcement of interaction

We reinforce interactions with rumble and sound cues, as you would expect. We were also fortunate to have the inspirational Kenny Young working with us after his sterling work on LittleBigPlanet and Tearaway. He provided a sophisticated audio system that weaves a web of contextual musical stings, giving rich contextual reinforcement through musical accompaniment that complements gameplay.

It’s also worth mentioning that directional audio events are particularly effective in VR at drawing the player’s attention to game events that demand it. This helps us avoid cluttering the play space with UI while keeping the player well informed.

Result

Virtual reality is still in its infancy, with plenty of possibilities still to be discovered, but we think we have put together a straightforward and comfortable system of interaction that goes beyond aping previous flat, indirect interfaces, using a more direct and natural view to guide intuitive actions that the godly player can take quickly in virtual reality.

We’ve been taken aback by the level of positive response to Tethered, and we are looking forward to exploring further exciting possibilities in VR.
