Game Design Deep Dive: Dynamic audio in destructible levels in Rainbow Six: Siege

"By using a series of strategically placed points in the map, called Propagation Nodes, we are able to calculate the lower cost paths of a sound between the listener and the source." - Louis Philippe Dion, Audio Director on Rainbow Six: Siege

Deep Dive is an ongoing Gamasutra series with the goal of shedding light on specific design, art, or technical features within a video game, in order to show how seemingly simple, fundamental design decisions aren't really that simple at all.

Check out earlier installments, including using a real human skull for the audio of Inside, the challenge of creating a VR FPS in Space Pirate Trainer, and creating believable crowds in Planet Coaster.

Who: Louis Philippe Dion, Audio Director at Ubisoft Montreal

I’m Audio Director for Rainbow Six: Siege and have been working at Ubisoft for seven years. Prior to Siege I worked as an Audio Artist on titles like Prince of Persia and Splinter Cell. I have also worked as product manager for Ubisoft’s internal audio engine solution.

Before working in the game industry, I worked as a sound editor for several television series and films. As a hobby, I have been making music for as long as I can remember, and I nurture my addiction to synths, guitars and basically anything that can produce sound as much as I can.

Having a strong interest in the technical aspects of sound, I was excited to join the game industry. I felt that compared to the well-established industry of film and TV, games offered much more opportunity for innovation and technical breakthroughs. We are only starting to tap into the potential of interactive sound, real-time mixing and new positioning algorithms, and I can’t wait to see what the future holds for us.

What: Dynamic sound propagation in a destructible environment

There are basically three main concepts in the physics of sound propagation: Reflection, which is when a sound bounces off surfaces; Absorption, which is when a sound passes through a wall that absorbs certain frequencies along the way; and Diffraction, which is when sound travels around objects. You can hear these phenomena in everyday sounds. Many other real-life factors come into play when localizing sound, but I will focus only on the propagation side of the physics and how we managed to simulate it.

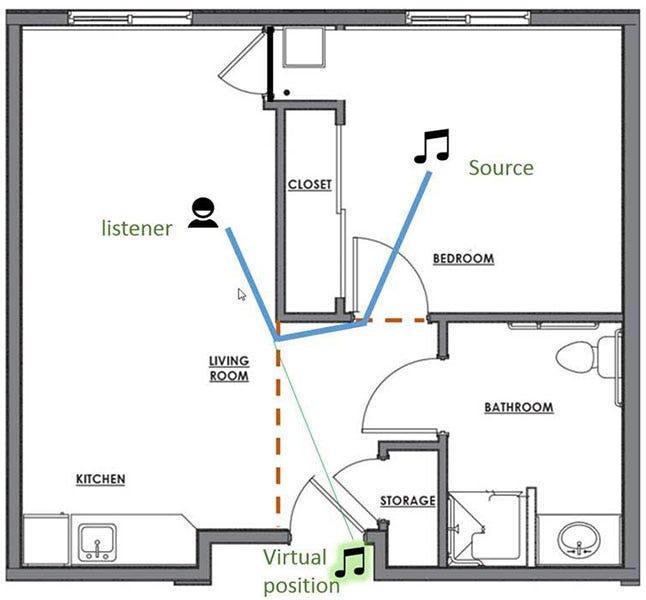

The main innovation on Siege was the extended use of diffraction, which we call Obstruction. By using a series of strategically placed points in the map, called Propagation Nodes, we are able to calculate the lower cost paths of a sound between the listener and the source. The cost of a propagation path depends on multiple factors, namely the path’s length, its cumulative angles, and the penalty assigned to the destruction level of the specific Propagation Nodes impacted.
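To make the cost idea concrete, here is a minimal sketch of a cheapest-path search over a handful of nodes. It is not the Siege implementation: the Dijkstra search, the 2D coordinates, the `angle_weight` parameter, and all names and penalty values are assumptions chosen for illustration. The cost of a path is its length, plus a weighted sum of the turn angles along it, plus a penalty for each node it enters.

```python
import heapq
import itertools
import math

def turn_angle(a, b, c):
    """Angle in radians by which a path bends at b when going a -> b -> c."""
    v1 = (b[0] - a[0], b[1] - a[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def cheapest_path(pos, edges, penalty, source, listener, angle_weight=1.0):
    """Dijkstra over (node, previous node) states so that turns can be charged."""
    tie = itertools.count()  # tie-breaker so the heap never compares node names
    start = (source, None)
    best = {start: 0.0}
    parent = {start: None}
    heap = [(0.0, next(tie), source, None)]
    while heap:
        cost, _, node, prev = heapq.heappop(heap)
        if cost > best.get((node, prev), math.inf):
            continue  # stale heap entry
        if node == listener:
            path, state = [], (node, prev)
            while state is not None:
                path.append(state[0])
                state = parent[state]
            return cost, path[::-1]
        for nxt in edges[node]:
            # Segment length plus the penalty of the node being entered.
            step = math.dist(pos[node], pos[nxt]) + penalty.get(nxt, 0.0)
            if prev is not None:
                step += angle_weight * turn_angle(pos[prev], pos[node], pos[nxt])
            new_cost = cost + step
            if math.isinf(new_cost):
                continue  # closed node: unavailable to the search
            state = (nxt, node)
            if new_cost < best.get(state, math.inf):
                best[state] = new_cost
                parent[state] = (node, prev)
                heapq.heappush(heap, (new_cost, next(tie), nxt, node))
    return math.inf, []

# Hypothetical layout: a wall node directly between source and listener,
# and a doorway off to the side.
pos = {"source": (0, 0), "wall": (5, 0), "door": (5, 5), "listener": (10, 0)}
edges = {"source": ["wall", "door"], "wall": ["listener"],
         "door": ["listener"], "listener": []}

intact = cheapest_path(pos, edges, {"wall": math.inf}, "source", "listener")
breached = cheapest_path(pos, edges, {"wall": 2.0}, "source", "listener")
```

With the wall node closed, the search is forced around through the doorway; once the wall node carries only a finite penalty, the straight path through it becomes the cheaper one.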

For example, if a wall is intact, the Propagation Nodes inside the wall are unavailable to the algorithm (infinite penalty). If a hole is created, however, the closest Nodes will be exposed to the Propagation Path selection and will potentially let sound pass through depending on the area impacted. Then, we virtually reposition the sound to reflect the direction of the paths, instead of the actual position of the sound source, which ultimately simulates diffraction.
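The virtual repositioning step can be sketched as follows. This is an illustrative assumption rather than the game's actual method: the sound is placed in the direction of the last point on the path before the listener, at a distance equal to the full path length, so that it appears to arrive from the opening it travelled through. The function name and the 2D coordinates are hypothetical.

```python
import math

def virtual_source_position(path):
    """path: (x, y) points from the real source to the listener, inclusive.

    Returns the position at which the sound would be rendered.
    """
    listener = path[-1]
    last_hop = path[-2]  # last point the sound passes before reaching the listener
    # Total distance travelled along the propagation path.
    total = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    dx, dy = last_hop[0] - listener[0], last_hop[1] - listener[1]
    d = math.hypot(dx, dy)
    # Same direction as the last hop, pushed out to the full path length.
    return (listener[0] + dx / d * total, listener[1] + dy / d * total)

# Sound travels from (0, 0) around a corner at (5, 5) to a listener at (10, 0).
virtual = virtual_source_position([(0, 0), (5, 5), (10, 0)])
```

In this example the listener hears the sound from the direction of the corner rather than from the true source position behind the wall.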

We also use several strategies to simulate Absorption, which we call Occlusion. Depending on the source, we will either play a pre-rendered simulation of the obstructed sound (e.g. footsteps on the ceiling) or play the same source in a direct path along with real-time filtering. Since the latter is more CPU intensive, it was mostly reserved for guns. Just as in real life, where it is possible to hear the occluded and obstructed versions of a sound at once, we combined both phenomena to give more information about the source location.
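As a rough illustration of what real-time filtering of an occluded sound can look like, the sketch below runs samples through a one-pole low-pass filter, which keeps low frequencies and attenuates high ones, much like a wall muffling a sound. The choice of filter, the cutoff, and the function name are assumptions; the text does not say which filter the game uses.

```python
import math

def occlusion_lowpass(samples, cutoff_hz, sample_rate=48000):
    """One-pole low-pass filter applied to a list of float samples."""
    # Smoothing coefficient derived from the cutoff frequency.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)  # move a fraction of the way toward the input
        out.append(y)
    return out

# A signal alternating every sample (the highest representable frequency)
# is strongly attenuated, while a constant signal passes through unchanged.
high = occlusion_lowpass([1.0, -1.0] * 1000, cutoff_hz=500)
low = occlusion_lowpass([1.0] * 2000, cutoff_hz=500)
```

Lowering `cutoff_hz` makes the result sound more muffled, which is one plausible way to map a heavier occluder to a stronger effect.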

Finally, for Reflection, which is essentially Reverberation in game terms, we opted for the use of an Impulse Response Reverb Processor. This specific type of reverb “samples” the acoustics of a real room, and then plays our game sounds through it. This method is, in my opinion, light years ahead of Traditional Parametric Reverb, at least for simulation purposes. The only drawback is that due to CPU constraints we could not use it in many instances. To counter this constraint, we relied on “baking” the Reverberation on guns and playing it back at the position of the gun. This allowed the player to benefit from a positioned reverb on weapons, which provided better positional information.
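Playing a sound "through" a sampled room amounts to convolving the dry signal with the room's impulse response. The sketch below shows direct convolution on tiny made-up sample lists; production engines typically use faster FFT-based methods, and the function name and data here are purely illustrative.

```python
def convolution_reverb(dry, impulse_response):
    """Convolve a dry signal with a room impulse response (direct form)."""
    wet = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, x in enumerate(dry):
        for j, h in enumerate(impulse_response):
            # Each input sample triggers a scaled copy of the room's response.
            wet[i + j] += x * h
    return wet

# Toy data: a three-sample "gunshot" and a three-sample decaying room response.
gunshot = [1.0, 0.0, 0.5]
room = [1.0, 0.5, 0.25]

# "Baking" in this sketch means running the convolution once, offline,
# and storing the result so that runtime only has to play it back.
baked_gunshot = convolution_reverb(gunshot, room)
# baked_gunshot == [1.0, 0.5, 0.75, 0.25, 0.125]
```

The nested loop makes the CPU cost visible: the work grows with the length of the sound multiplied by the length of the impulse response, which is why precomputing the result is attractive for frequently played sounds.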

Why?

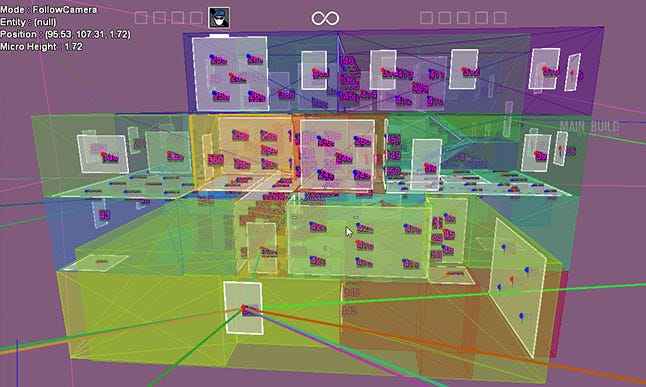

Destructible environments were one of the great challenges of Sound Propagation during production. It’s one thing to propagate sounds through shortest paths, but to have the level modify itself during gameplay was something that we had never done before. It was quite challenging, not only from a rendering quality perspective but also from a CPU performance perspective. We put several nodes on all “breachable” surfaces, and these nodes stayed closed until destruction occurred. We went through many iterations on the needed granularity to find a sweet spot between precision and performance constraints.

Another interesting factor is that Sound Propagation modifications are not one way: the nodes can go from closed to open, but also from open to closed. With barricades and wall reinforcements, players can modify the potential sound paths, and the algorithm will recalculate the new propagation path solutions in real time. These Occluders (e.g., barricades, wall reinforcements, etc.) don’t necessarily have to close those propagation nodes completely; depending on their material properties (e.g., wood, glass, concrete, etc.) they can add a certain amount of penalty for the sound to pass through them. For example, wooden barricades and metal barricades each have their own obstruction settings, so we can muffle the sounds more or less depending on the materials used.
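One way to picture a node that can open, close, and carry a material-dependent penalty is the small data structure below. The class, field names, and numeric penalties are all hypothetical; in particular, treating a wall reinforcement as fully closing a node is an assumption made for this sketch.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical penalties per occluder material (larger means more muffled).
MATERIAL_PENALTY = {"wood": 3.0, "metal": 8.0, "reinforcement": math.inf}

@dataclass
class PropagationNode:
    is_open: bool = False           # nodes on breachable surfaces start closed
    occluder: Optional[str] = None  # material placed over the opening, if any

    def penalty(self) -> float:
        """Cost added to any path that passes through this node."""
        if not self.is_open:
            return math.inf  # intact surface: unavailable to path selection
        if self.occluder is None:
            return 0.0       # unobstructed hole
        return MATERIAL_PENALTY[self.occluder]

node = PropagationNode()
closed = node.penalty()      # intact wall
node.is_open = True
breached = node.penalty()    # hole created by destruction
node.occluder = "wood"
barricaded = node.penalty()  # players put a wooden barricade over it
```

Because the penalty is read at the moment a path is evaluated, any change to `is_open` or `occluder` is reflected the next time the paths are recalculated.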

Additionally, with a high level of destruction and bullet penetration in Siege, it would have been disastrous if we had relied only on Occlusion without the presence of Obstruction. Occlusion would have been a major wall hack. For instance, as a Defender, all you would need to do is reinforce as many walls as you can, then wait to hear Attackers walk by non-reinforced walls and shoot away; the Attackers would never know what hit them. We try to be as accurate as we can, but the simulation of “real life physics” adds a certain guessing game that levels the playing field. Granted, there are some situations when it can be downright frustrating, but that’s kind of how real life is, too.

This is not to say that we are not continuously trying to improve our algorithm. We love to read posts on Reddit from players who have taken the time to explain situations where they feel Sound Propagation was unfair; this is pure gold to us, and we will definitely take it into account in future improvements.

Rainbow Six: Siege's Hereford map.

Listening as player action

Quiet and inaction are such a core part of the game, even with the relatively short round timers of 3 minutes, that the primary action of the player is listening. When we started the development process, we actually thought that, from an audio perspective, the map ambiences would be a bit of a bore. Waiting inside of a suburban bedroom is not like being in the middle of a battlefield or in space, right?

At that time not all gadgets, navigation, and gun sounds were plugged in, and Sound Propagation was still in its early stages. But as the pieces of the puzzle started to fall into place, we realized that we had something way better than “faked tension.” The threat you hear is real, and it’s coming for you. The restraint in using heavy ambience layers helped us add tension and left much more space to offer accurate information to the players.

The sound propagation for the Hereford map.

Special attention was paid to the realism and amount of detail in our Navigation Sounds to help the player gain more information simply by listening to others navigating through the map: the weight, armor, and speed of Operators can all be determined by listening to cues in the Navigation Sounds.

Gadget deployments, such as breach charges, barricades, and other devices, also received particular attention to make sure we gave good cues to players relying on sound to get Intel.

First Person Navigation Sounds are also mixed quite loud for two important reasons: first, it informs the player that they are producing quite an amount of noise that reveals their position; second, it tells the player that they need to slow down if they want to hear the others. This is the basis of Siege’s sound design; if you go slowly and listen to your environment, you can gather more Intel and perform better.

A close up of propagation nodes.

Result

From the beginning of the project the desired emotion was tension. At some point we were adding a bunch of music and artifacts to infuse more tension, but as stated earlier, the best element that we had was the sound of the other players who you could not see. So we removed all “imposed” emotion-giving sounds to focus on what really mattered: the sound created by players.

In retrospect this sounds obvious, but I find that not many games refrain from using any classic tension sounds during gameplay. Keeping the experience void of artifacts, to me, gives Siege a sound print that is not only fun to listen to but also greatly influences the game.
