Game Design Deep Dive: Bringing the FPS to VR in Space Pirate Trainer

"Best practices and established mechanics were useless or sometimes actively misleading, so we had to search for an optimal way to transfer the FPS experience into VR." -Dirk Van Welden, project lead on Space Pirate Trainer

Game Design Deep Dive is an ongoing Gamasutra series with the goal of shedding light on specific design features or mechanics within a video game, in order to show how seemingly simple, fundamental design decisions aren't really that simple at all.

Check out earlier installments, including the action-based RPG battles in Undertale, using a real human skull for the audio of Inside, and the realistic chat system of Mr. Robot:1.51exfiltrati0n.

Who: Dirk Van Welden - Gameplay designer & programmer

Hi, I’m Dirk Van Welden, founder of I-Illusions and project lead for Space Pirate Trainer, the arcade shoot ‘em up for the HTC Vive (and Oculus Touch in the near future). Since we’re a small team, I was involved in all aspects of the creation of Space Pirate Trainer, but I probably spent most of my time programming gameplay and technical stuff.

I’ve been programming games for quite a while now, but I started taking it seriously about 10 years ago, when I got my hands on the first version of Unity. I-Illusions did a lot of freelance work in its first years of existence to fund the creation of Element4l, our first Steam release in 2013.

As an early backer of the first Rift and a VR fan, I received my first headset pretty early. After trying it, I immediately thought I would never create a VR game, since I’m too susceptible to motion sickness.

Receiving a Vive devkit from Valve in 2015 with some cool but very basic demos made me realize we could actually pull off a VR shooter without being motion sick, so we dropped all our projects, and started working on SPT.

What: Exploring the arcade FPS in room-scale VR

As we started to implement FPS mechanics into the VR environment, we came across unique issues that were not present in traditional FPS. Best practices and established mechanics were useless or sometimes actively misleading, so we had to search for an optimal way to transfer the FPS arcade experience into virtual reality.

Why?

So, we’ve got 3x4 meter max that we can walk around in, sub-millimeter tracking on the controllers, and a blank page on first-person-shooter-in-VR design… Where do we start?

1. Laser guns

First of all, I wanted some laser guns, not just because I loved shooting with them, but also because I wanted to know if the accuracy was good enough for shooting targets at a distance.

In our first designs, everything was pretty straightforward and pure, and it actually worked pretty well out-of-the-box.

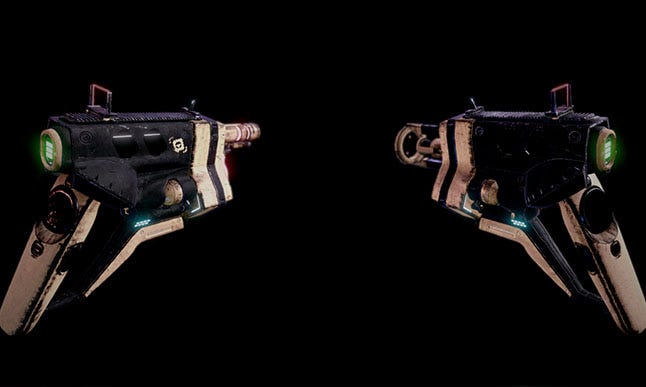

Space Pirate Trainer “Boltons”, with the Vive model as a base for the handle.

To improve the overall feel, we integrated the Vive handle into the gun model, to make it feel just that bit more realistic when holding the controller. Once we started experimenting with new guns, like the railgun, we felt that accuracy was becoming a problem. Not because of the technology, but because of the light weight of the controllers.

When you release the trigger, that tiny action actually rotates your gun by a tiny bit, sometimes just enough to miss the target. To solve this, we added some sort of “locking” system. If your aim is on target, the target is locked for 0.06 seconds. If after that time, your aim is still within 10 degrees of the target, it’s a guaranteed hit.
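A minimal sketch of how such a lock could be checked, assuming the game records the moment the aim first touches the target. Only the 0.06 seconds and the 10 degrees come from the text; the function names and the vector representation are hypothetical, and this is not the actual Space Pirate Trainer code.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

LOCK_SECONDS = 0.06        # lock duration quoted in the text
TOLERANCE_DEGREES = 10.0   # aim tolerance quoted in the text


def angle_between_degrees(a: Vec3, b: Vec3) -> float:
    """Angle between two non-zero 3D vectors, in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(x * x for x in b))
    # Clamp to guard against rounding pushing the cosine outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norms))
    return math.degrees(math.acos(cosine))


def is_guaranteed_hit(locked_at: Optional[float], now: float,
                      aim_dir: Vec3, to_target: Vec3) -> bool:
    """Hypothetical check: True once the lock time has passed and the
    aim is still within the tolerance cone around the target."""
    if locked_at is None:
        return False  # the aim never touched the target
    if now - locked_at < LOCK_SECONDS:
        return False  # lock window still running, nothing decided yet
    return angle_between_degrees(aim_dir, to_target) <= TOLERANCE_DEGREES
```

With a lock acquired at `0.0` and checked at `0.1`, an aim that has drifted 5 degrees off the target still counts as a hit, while one that has drifted 15 degrees does not.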

2. Aiming

So, as everyone can imagine, aiming is quite different from a normal FPS. One of the main reasons, next to using your hands instead of a mouse/controller, is that your guns are not aligned to your viewing direction. This causes several issues.

First of all, people were asking for decent gun-sights. If you have never seen one in real life, you probably don’t know how it actually works… In a traditional FPS, the red-dot sight is always steady in the middle of your sights because of the alignment. This is not the case in reality and VR.

Technically, it involves some shader magic to make it work (in short, and possibly not 100% correct: it’s a dot projected at a finite distance, with parallax relative to your eye movement). For those interested, there is a kit available on the asset store that can give you a head start for creating your own reflex sight.

Secondly, people started to complain that the barrel-to-handle ratio was not correct. The problem with this is that there is no perfect angle between the barrel and the handle. Typical guns have an angle between 105 and 112 degrees, while the Vive ring is around 120 degrees. Again, this is never an issue with traditional control schemes, but in VR… it is.

Since we were using a gun model based on the Vive handle, we just split up the handle and the barrel, and made the barrel angle selectable between 105 and 120 degrees. Selecting a different angle also changes the visible angle in-game. As you can imagine, this can be a problem when you are using models that have a fixed barrel angle. You would have to rotate the whole model relative to the controller.
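As a rough illustration of rotating a fixed-angle model relative to the controller, the sketch below turns a selected barrel angle into a pitch offset. The 105 to 120 degree range and the roughly 120 degree Vive angle come from the text; the function names, the axis layout (y up, z forward), and the sign of the rotation are assumptions.

```python
import math
from typing import Tuple

VIVE_HANDLE_ANGLE = 120.0   # approximate Vive angle from the text
MIN_BARREL_ANGLE = 105.0    # lower bound of the selectable range
MAX_BARREL_ANGLE = 120.0    # upper bound of the selectable range


def barrel_pitch_offset(selected_angle: float) -> float:
    """Degrees to pitch the barrel relative to the controller,
    after clamping the selection to the allowed range."""
    clamped = max(MIN_BARREL_ANGLE, min(MAX_BARREL_ANGLE, selected_angle))
    return VIVE_HANDLE_ANGLE - clamped


def pitch_down(forward: Tuple[float, float, float],
               degrees: float) -> Tuple[float, float, float]:
    """Rotate a direction about the x axis so that a positive angle
    tips a forward (+z) vector toward -y (assumed convention)."""
    x, y, z = forward
    r = math.radians(degrees)
    return (x,
            y * math.cos(r) - z * math.sin(r),
            y * math.sin(r) + z * math.cos(r))
```

Selecting 120 degrees gives an offset of 0, so the barrel follows the controller as-is. Selecting 105 degrees gives an offset of 15, which is how far the displayed gun would differ from the angle at which the controller is held.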

This introduces a weird side-effect: you are holding the gun at a different angle than displayed. Adding some sort of visual representation (a hologram, for example) of the default controller is a possible solution. Some other VR games that use realistic guns are already successfully implementing this.

H3VR, implementing controller holograms as a reference

3. Droids

For the droids, we have this central manager, called the DroidManager. It tells the droids where to go, and maybe even more important, where not to go. It’s sort of the central intelligence of the droids.

We chose droids because they are an easy way to make target practice more diverse. If all of your targets are on a plane, gameplay tends to get more boring. You can actually make clever use of level design to get around this, but with droids, we were able to let them move to any position or formation.

Because of that freedom we actually had to limit the gameplay area. We tried experimenting with full 360-degree gameplay, but it was actually disorientating. Also, you felt like you were missing the most important events, like in a badly directed 360 movie. We needed so many cues that it became overwhelming. In most cases you didn’t even know which droid shot you, which is one of the reasons we implemented the so-called “hit-line”, which shows you the path of the laser that hit you.

Even now, in a gameplay field of roughly 220 degrees, we use quite a few cues. There are visual cues like droids preparing to shoot (lens flares), subtle flashes on the edge of your HMD when a droid is shooting lasers at you from outside of your field-of-view, and so on. Audio cues include charging-up sounds, pitch change (bullet time) when lasers are close, 3D positional audio for those cues + droid engines, etc.

Left red flash indicates a droid firing at you from the far left side, outside your peripheral vision
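One way to decide when and where to show such an edge flash is to compare the direction to the droid with the direction the head is facing. The sketch below works in a top-down plane (x to the right, z forward). The 55 degree half field-of-view is an assumed placeholder, and the function name is hypothetical.

```python
import math
from typing import Optional, Tuple

Vec2 = Tuple[float, float]  # (x, z) in a top-down view


def edge_flash_side(head_pos: Vec2, head_forward: Vec2, droid_pos: Vec2,
                    half_fov_degrees: float = 55.0) -> Optional[str]:
    """Return "left" or "right" when the droid is outside the assumed
    field of view, or None when it should already be visible."""
    to_x = droid_pos[0] - head_pos[0]
    to_z = droid_pos[1] - head_pos[1]
    fx, fz = head_forward
    # Signed angle from the view direction to the droid;
    # positive means the droid is to the right.
    cross = fz * to_x - fx * to_z
    dot = fx * to_x + fz * to_z
    angle = math.degrees(math.atan2(cross, dot))
    if abs(angle) <= half_fov_degrees:
        return None
    return "right" if angle > 0 else "left"
```

A droid far to the left of a player looking straight ahead returns `"left"`, which could drive a red flash on the left edge of the view.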

For the 3D audio we actually had to boost the volume of some audio cues. A droid charging up or shooting at you from a certain distance is very important, more important than the default (realistic) volume would suggest at a certain distance.
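A simple way to picture this boost is a volume floor for important cues on top of a distance rolloff. The sketch below assumes a plain inverse-distance rolloff and a made-up minimum gain of 0.4; neither value nor the function names come from the game.

```python
def realistic_gain(distance: float, reference_distance: float = 1.0) -> float:
    """Inverse-distance rolloff: full volume up to the reference
    distance, then falling off proportionally to 1 / distance."""
    return reference_distance / max(distance, reference_distance)


def cue_gain(distance: float, important: bool, min_gain: float = 0.4) -> float:
    """Gain for a sound; important cues never drop below min_gain
    (an assumed floor), other sounds follow the realistic rolloff."""
    gain = realistic_gain(distance)
    return max(gain, min_gain) if important else gain
```

At 20 meters an ordinary sound would play at a gain of 0.05, while a charging-up cue marked as important would stay at 0.4.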

4. Dodging

Early on in the process of creating SPT we knew that dodging was going to be a core mechanic. We implemented the bullet-time effect to make the possible impact more dramatic and to force people to dodge. It’s hard to make people dodge in VR for the first time, but if there’s a giant glowing laser coming towards your chest and bullet time gives you an opportunity to dodge it, people tend to do so. I say chest because incoming shots are much easier to notice if they are coming towards your chest instead of your head.

Our main concern was that the space on the platform was rather large in contrast to people’s living rooms. And if you are moving fast the chaperone (virtual walls) might kick in a little late as well. That’s why we added a preview of your available space on the ground; it’s always visible and a good way to estimate how far you can dodge.

And since some of us don’t even have the space to dodge a few feet, we included the shield, which became much more important gameplay-wise than we first anticipated.

One more thing about dodging: if players do get hit they need to feel it, otherwise they won’t dodge next time. This is quite hard since outside of the haptic feedback through the controllers, you can only do this with sound or visuals. Whenever you need to simulate an unavailable sense, overdo the other senses, and your brain will fill in the gap.

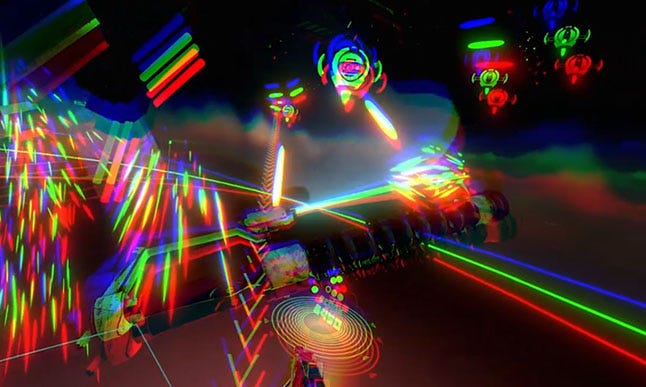

RGB split when hit

5. Difficulty

Ramping up difficulty in a room-scale VR game is also quite interesting. At first glance you could just add more droids to amp up difficulty, but there’s more to it. In fact, three droids could be much more dangerous than six droids, depending on some settings in the droid manager.

A few examples: the allowed space for the droids is increased in every wave. The gameplay area actually opens up gradually from around 60 degrees to 220 degrees. You’ll never see a droid to your far left or right in the first few waves. The “bullet-time” effect actually wears off gradually. It starts at 0.2 (5 times slower), and becomes realtime at 66.
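These ramps can be sketched as simple interpolations over the wave number. The 60 and 220 degree bounds, the 0.2 starting time scale, and the figure 66 come from the text. Everything else is a guess: the text gives 66 without a unit, so the sketch treats it as a wave number; it assumes a linear ramp; and the wave at which the full arc is reached (`full_arc_wave`) is a made-up parameter.

```python
def ramp(start: float, end: float, wave: int, end_wave: int) -> float:
    """Linear ramp from start at wave 1 to end at end_wave (end_wave > 1),
    held constant afterwards."""
    t = (wave - 1) / (end_wave - 1)
    t = max(0.0, min(1.0, t))
    return start * (1.0 - t) + end * t


def bullet_time_scale(wave: int, realtime_wave: int = 66) -> float:
    """Time scale during bullet time: 0.2 (5 times slower) at first,
    1.0 (realtime) from realtime_wave onwards. Treating 66 as a wave
    number is an assumption."""
    return ramp(0.2, 1.0, wave, realtime_wave)


def play_arc_degrees(wave: int, full_arc_wave: int = 20) -> float:
    """Arc in which droids may position themselves: about 60 degrees
    at first, 220 degrees from the hypothetical full_arc_wave onwards."""
    return ramp(60.0, 220.0, wave, full_arc_wave)
```

Under these assumptions, wave 1 gives a 60 degree arc with bullet time at 0.2, and both values then grow with each wave until they reach 220 degrees and realtime.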

One problem we are still facing is the relation between difficulty and play-area. It’s just easier to survive if you can jump around in a large play-area. We tried solving this with some new droids that have a larger attack area and take your position and velocity into account, but in the end, running around like a ninja will probably result in higher scores.

There were no rules for a VR FPS, so next to just trying out new stuff, the whole process is about creative solutions and trial-and-error. Everyone is still in a learning process here, so either coming up with new approaches or inventing new stuff is beneficial for the whole community.

Result

I can only imagine that if we come back to SPT in a few years, we will see that a lot of VR best practices are missing. What’s the best way to implement motion, emotion, reaction, etc.? We’ll only know if we try every approach, even if it’s totally outside of the box. Embrace the limitations and work around them, or even make a feature out of them. Last but not least: share your experiences, even if they don’t work.
