
In this analytical article, EA veteran and Emergent VP Gregory looks at the problems of iterating game concepts and assets quickly with a large development team, suggesting possible roadblocks and solutions.

You often hear from people who build games for a living that making a game is unlike building any other piece of software. Why is this?

Building interactive fun is a very different problem from building any other type of software, because "fun" can't be planned: you can't schedule a project using a waterfall process, execute the schedule perfectly, and expect to end up with a fun game 100% of the time.

There are usually lots of go-backs and retries as you bring together the elements of the game (the code, content, scripts, and so on) and see whether the result is entertaining. In the worst cases, teams don't realize the game isn't fun until it's so late that they can't fix it.

In the best cases, teams prototype their game in "sketch" form during pre-production, and find the fun before they spend the bulk of their time in production filling in content and polishing the gameplay.

So how do you steer your team toward the best-case scenario? It's generally accepted in the industry that iterating quickly and trying different things is the best way to make incremental progress toward the final goal of a fun game. It's less risky, and you find the core of your game (its look, its mechanics, etc.) much more quickly.

Developers are now dealing with more -- more of everything. For instance, these elements have all increased:

People on a game team

Specialization of talent because of complexity of technology or tools

People necessary to iterate on a single game element

Number of team members distributed across multiple geographies

Time it takes to compile and link code, due to larger code bases

Amount of custom tool code

Amount of content needed to satisfy today's players

Complexity of content creation and transformation

Number of tools to create and transform content

Amount of time it takes to transform content

Number of platforms a single team ships on simultaneously (PC, Xbox 360, PS3, Wii, etc.)

Infrastructure (code & content repositories, automated build farms, automated test farms, metrics & analysis web sites, offsite development infrastructure [VPN, proxy servers], game and tool build distribution, etc.)

Rate of change of content, code, tools, pipeline, infrastructure, etc.

Build stability problems because of all of the factors stated above

Dollars at stake when a mistake hurts productivity, because of the number of people left idle and unable to work

Management focus on risk because of all the factors stated above

That last one (increased management focus on risk) has created a feedback loop that drives some of these items up even further, particularly infrastructure. Increased focus on risk brings with it the wish to control the chaos and to implement systems that provide visibility into, and predictability for, that chaos.

Game team management usually doesn't have enough information to know where the problems are. Getting the information in place requires new systems, and hooks into existing systems, which increases the rate of change of code, the amount of code, and the number of systems to be maintained.

Build systems are usually put in place to make the delivery of new builds to the team more predictable. When those systems aren't stable, more work goes into making them reliable. Everything done here adds to the volume of work and the complexity of the project.

As game development has grown more complex, projects have become unwieldy, and for many teams progress has slowed. This has given developers a focus they never needed before: optimize not only the game, but also the tools and processes used to build it. It's a huge shift in mindset.

Which of the items above are most responsible for impeding an individual developer's progress these days? Generally, it's the tools involved in the daily workflow: compilers, debuggers, digital content creation tools, source code and content control systems, custom tools, and the game runtime itself.

Each component in the tool chain adds time to every iteration. When you make a change, how long does it take to see its effect in the most appropriate environment (game, viewer, or whatever fits)? The closer that is to instantaneous, the better you are doing.

A change made locally doesn't really exist until everyone on the team can see it. (Is it in the build yet?)

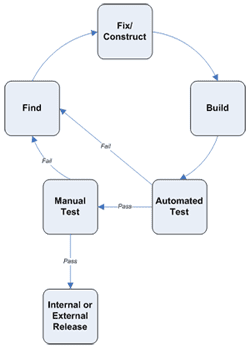

This is usually all about getting your change submitted to a repository, and getting it successfully through a build cycle. Here's a build cycle diagram that will be useful in discussion.

Because project leaders and studio executives have trouble judging how far along a game development project is, they implement metrics and automated processes to gain some predictability.

They stand up automated builds to bring order to a very complex, error-prone process.

In addition, to stabilize the build and protect the ongoing investment in the game, "gates" are placed in front of every check-in to the game. Sample "gates" include:

Get your change integrated into your local build

If it's a code change, either:

Compile a single configuration on your main development platform.

Compile all configurations (debug, release, shipping) on your main development platform.

Compile all configurations on all platforms.

If it's a content change, either:

Transform data on your main development platform.

Transform data on all platforms.

Test your change by either:

Running manual tests on all platforms, performing sufficient tests to verify that your change worked as expected and didn't break anything.

Running an automated test on all platforms, in addition to the above.

Running the entire automated test suite on all platforms, in addition to the above.

Wow. I'm forced to do that to check in my change? No wonder things are slow.
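The arithmetic behind that reaction is easy to see: each stricter code gate multiplies the number of compiles by the number of configurations, and then by the number of platforms. The sketch below counts compile jobs using the three configurations and four platforms this article names; the gate names, `MAIN_PLATFORM`, the choice of "debug" as the single configuration, and the assumption that compiles run one after another are all hypothetical.

```python
from itertools import product

# Configurations and platforms named in this article; substitute your own.
CONFIGURATIONS = ["debug", "release", "shipping"]
PLATFORMS = ["PC", "Xbox 360", "PS3", "Wii"]
MAIN_PLATFORM = "PC"  # assumption: the team's main development platform


def compile_jobs(gate: str) -> list[tuple[str, str]]:
    """Return the (configuration, platform) pairs a code-change gate requires."""
    if gate == "single_config_main":
        # Assumption: "a single configuration" means the debug build.
        return [(CONFIGURATIONS[0], MAIN_PLATFORM)]
    if gate == "all_configs_main":
        return [(config, MAIN_PLATFORM) for config in CONFIGURATIONS]
    if gate == "all_configs_all_platforms":
        return list(product(CONFIGURATIONS, PLATFORMS))
    raise ValueError(f"unknown gate: {gate}")


def gate_minutes(gate: str, minutes_per_compile: float) -> float:
    """Estimated wall-clock minutes, assuming compiles run sequentially."""
    return len(compile_jobs(gate)) * minutes_per_compile


for gate in ("single_config_main", "all_configs_main", "all_configs_all_platforms"):
    print(f"{gate}: {len(compile_jobs(gate))} compile(s)")
```

With all three configurations on all four platforms, a single code change needs twelve compiles before any testing starts.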

The best approach is to treat this situation like an engineering optimization problem. You have to measure the process to find the bottlenecks, pick some low-hanging fruit to go after, and then tackle the tougher problems. You should start with each individual's process, and then look at the team as a whole. Never change one without considering the impact on the other.

One place to start is the artists' tools. These days there are more artists and more tools, so fixes there will have a big impact.

Some content transformations are more expensive than others. "Expense" is defined by the time elapsed before the change can be seen in the appropriate medium, usually a game engine or an engine-derived viewer.

Factors contributing to this elapsed time are the transformation itself, hard drive read/write bandwidth, processing power, memory size, memory bandwidth, and network bandwidth. The key to rapid iteration is reducing this expense.
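One way to reason about these factors is to split a transformation's elapsed time into one term per factor. The sketch below is a rough model under stated assumptions: the steps run back to back with no overlap, and processing power, memory size, and memory bandwidth are folded into a single measured `compute_seconds`. `TransformCost` and its fields are hypothetical names, and the inputs should be measured sizes and bandwidths, not guesses.

```python
from dataclasses import dataclass


@dataclass
class TransformCost:
    """Measured inputs for one content transformation (bytes, seconds, bytes/second)."""

    input_bytes: float
    output_bytes: float
    transfer_bytes: float   # data sent over the network, e.g. to a shared location
    compute_seconds: float  # time spent in the transformation itself
    disk_read_bps: float
    disk_write_bps: float
    network_bps: float


def breakdown(cost: TransformCost) -> dict[str, float]:
    """Split elapsed time into per-factor estimates, in seconds."""
    return {
        "disk read": cost.input_bytes / cost.disk_read_bps,
        "transform": cost.compute_seconds,
        "disk write": cost.output_bytes / cost.disk_write_bps,
        "network": cost.transfer_bytes / cost.network_bps,
    }


def estimated_elapsed_seconds(cost: TransformCost) -> float:
    # Assumes no overlap between steps; overlapped I/O makes this an upper bound.
    return sum(breakdown(cost).values())


def dominant_factor(cost: TransformCost) -> str:
    parts = breakdown(cost)
    return max(parts, key=lambda name: parts[name])
```

The term that dominates is the one worth attacking first: if network transfer dominates, a faster transformation step won't shorten the wait much.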

People say this, then forget it, so it's worth mentioning again: Measure first, then optimize. Don't guess at where your bottleneck is.
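A minimal way to follow that advice is to time every step of the loop from "change saved" to "change visible" and let the numbers pick the target. The sketch below assumes each step can be called from a script; `StageProfiler` and the stage names are hypothetical, and `time.sleep` stands in for real work.

```python
import time
from contextlib import contextmanager
from typing import Iterator


class StageProfiler:
    """Records wall-clock time spent in each named step of an iteration loop."""

    def __init__(self) -> None:
        self.durations: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Accumulate, so a step that runs several times is counted in full.
            elapsed = time.perf_counter() - start
            self.durations[name] = self.durations.get(name, 0.0) + elapsed

    def total(self) -> float:
        return sum(self.durations.values())

    def bottleneck(self) -> str:
        # The step with the largest accumulated time is the first candidate to optimize.
        return max(self.durations, key=lambda name: self.durations[name])

    def report(self) -> str:
        total = self.total() or 1.0  # avoid dividing by zero before anything is timed
        rows = sorted(self.durations.items(), key=lambda item: item[1], reverse=True)
        return "\n".join(f"{name:<10} {secs:7.3f}s {secs / total:6.1%}" for name, secs in rows)


# Usage: wrap each real step; time.sleep stands in for the actual work here.
profiler = StageProfiler()
with profiler.stage("export"):
    time.sleep(0.01)
with profiler.stage("transform"):
    time.sleep(0.05)
with profiler.stage("load"):
    time.sleep(0.02)
print(profiler.report())
print("bottleneck:", profiler.bottleneck())
```

Timing individual steps, rather than only the end-to-end loop, is what tells you which step to optimize first.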

As part of looking at your iteration bottlenecks, you should try to classify the different types of data transformations you have in your pipeline. This can help you understand what's taking the time, and what changes are possible -- and safe -- for you to make.

Generic mesh to tri strip

3D math, vector math, animation curves, shadow maps, baked lighting in textures, image compositing

Image format changes (bit depth, alpha, mip maps, HDR)

No expense other than copying the data from one file to another, or from one place in memory to another.

Putting the same object in multiple streams or sectors of the world to speed up runtime loading.

DXT texture compression. There are cheaper compression algorithms, but you usually trade increased compression time in the pipeline for reduced load/decompression time in the runtime. DXT texture decompression is hardware assisted, so it's very cheap in the runtime.

Much of the data coming from art packages is stored in a compressed form; TIFF texture files, for example, are sometimes compressed.

Stripping/adding data. The server doesn't need graphical data, but does need collision data. Clients may need several levels of detail.

Converting from human-readable text to some "quicker to load and execute" form.

Some intensive process is required to lay out the data into a file or set of files. Arranging files to optimize seek times based on world layout (streaming) can be very expensive, especially relative to the size of the actual change.
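The "Stripping/adding data" entry is the easiest of these to picture in code. The sketch below assumes a hypothetical asset stored as a mapping from component name to bytes, plus a hypothetical per-target set of components to keep; a real pipeline would use its own asset format and rules, and whether the client keeps collision data is an assumption here.

```python
# Hypothetical asset representation: component name -> raw bytes.
Asset = dict[str, bytes]

# Components each build target keeps. These names are illustrative assumptions;
# the client set (including collision) is a guess, not a rule.
TARGET_COMPONENTS: dict[str, set[str]] = {
    "server": {"collision"},  # no graphical data, but collision is required
    "client": {"render_mesh", "lod1", "lod2", "textures", "collision"},
}


def filter_for_target(asset: Asset, target: str) -> Asset:
    """Return only the components the given target needs; everything else is stripped."""
    keep = TARGET_COMPONENTS[target]
    return {name: data for name, data in asset.items() if name in keep}


crate = {"render_mesh": b"...", "lod1": b"..", "collision": b".", "editor_notes": b"...."}
print(sorted(filter_for_target(crate, "server")))  # ['collision']
print(sorted(filter_for_target(crate, "client")))  # ['collision', 'lod1', 'render_mesh']
```

Because the filter only copies selected components, its cost scales with the size of the asset, which puts it among the cheaper transformations in the list.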

For some content transformations, the "Expense" is reasonably proportional to the size of the change.

Developers are usually most upset when they perceive they've made a small change to source content, and see a disproportionate expense. They'd be even more upset if they additionally found out that only a few bits of game-ready data changed after all that time.
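To find out whether you're in that situation, compare the game-ready output before and after a transformation. The sketch below is a minimal position-by-position comparison; `changed_bytes` and `change_report` are hypothetical helpers, and reading the two versions of the output file is left to your pipeline.

```python
def changed_bytes(old: bytes, new: bytes) -> int:
    """Count byte positions that differ; bytes added or removed at the end also count."""
    differing = sum(a != b for a, b in zip(old, new))
    return differing + abs(len(old) - len(new))


def change_report(old: bytes, new: bytes, elapsed_seconds: float) -> str:
    """Summarize how much game-ready output changed relative to the time spent producing it."""
    changed = changed_bytes(old, new)
    size = max(len(old), len(new), 1)  # avoid dividing by zero for empty outputs
    return f"{changed} of {size} bytes changed ({changed / size:.2%}) after {elapsed_seconds:.1f}s"


print(change_report(b"abcd", b"abxd", 30.0))  # 1 of 4 bytes changed (25.00%) after 30.0s
```

A position-by-position comparison overstates the change when data is inserted and everything after it shifts; a real diff or a per-chunk hash comparison gives a truer picture.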

At the other end of the scale, even when the size of the change is small, some types of transformations take an incredible amount of time and require nearly the entire game world to do their job, such as stream packing steps, global illumination, or light baking builds. These need to be planned so that they have minimal impact.

If you take a methodical and holistic approach to optimizing your pipeline for rapid iteration, you'll see great results at the individual and team levels.

Coming soon:

Part 2: Some Patterns to Follow and Pitfalls to Avoid
