How do you organize and structure the creation of dialogue for video games, "perhaps the single most important aspect of video game audio"? Game audio veteran Bridgett (Prototype, Scarface) examines the key issues and possible solutions.

Dialogue production for a large-budget, cinematic video game can be an intense and often brutally challenging process. Getting an actor into the booth to read a script is in itself a monumental achievement that requires solid tools, pipelines, and communication.

While there are a great many articles written about the voice actor's process and performance, there is a dearth of information about the technical process and steps that are taken prior to and after the recording session, and it is these processes, planning, and techniques "behind the scenes" on which this feature will concentrate.

There is a wide spectrum of different approaches to dialogue tools and production process throughout the industry. It is fair to say in fact that almost every developer has a totally different way of working, and there is certainly no rulebook -- as long as the job gets done to the desired quality.

However, working on an integrated dialogue database solution from beginning to end of production can speed up the process, reduce organisation and administration time both in and out of recording sessions, and remove a whole slew of duplicated work along with the mess of multiple scripts held by various members of the dialogue production team.

The desire and benefits are clearly there for a tightening up of the production process and integration of dialogue through a single master database. Sadly dialogue is one of the areas that audio directors and audio designers can be less passionate about, and the lack of investment in solid tools, process and pipelines is probably due in some part to this.

Dialogue, it can be argued, is perhaps the single most important aspect of video game audio, in that it is often the only element of the audio that a reviewer will mention, and poorly implemented and badly directed dialogue can completely ruin an entire game.

Dialogue production also has very deep dependencies stemming from mission design and story architecture, and it is anchored at the heart of cinematic production. To this end it needs to be one of the tightest, most organized and "locked-down" elements of audio production, yet remain completely fluid and open to change all the way along the chain.

To further understand the bigger picture of game dialogue production, it is helpful to look at the broad stages from the beginning of production to the end.

Design (characters / AI categories / reactions, naming conventions and folder structure etc)

Content Creation (Writer(s) fill out pre-assigned dialogue categories, or create story scenes and dialogue)

Casting (describing character to casting agents and potential actors with sample lines)

Recording (requires export of character script for actor to read, notation of required takes, changes to lines due to improvised performance etc)

Editing (editors cut the required takes from the recording)

Implementation (files are placed in relevant pipeline path to be built into game)

Tuning (in-game tuning of frequency of playback, volume of playback, ducking mixes etc)

Iteration (critical in adapting the performance and script to changing game design and story changes and often loops production back to the 'Content Creation' stage)

Quality Assurance (all lines are tested in the game)

Localization (various language files are made available in pipeline so language can be switched)

Mastering (all dialogue files are mastered, given same overall level, then replaced in pipeline)

Mix (dialogue is mixed at a consistent and clearly audible level in final mix of game content along with music and fx, dialogue ducking is implemented and tuned)

Because the stages of dialogue production are somewhat linear in nature, it can be envisaged that a single database can be created and maintained from day one, right through all stages of production. Such a database can be updated at every stage of production and can export the exact required information for each of the various 'clients' along the way. A further breakdown of each broad element of production will help define what is required and by whom at each stage.

Prior to assigning content creators (writers), the different forms of spoken dialogue need to be clearly understood and planned from a game design requirements perspective. Usually this will occur in the form of various demo levels or a vertical slice of gameplay, whereby various dialogue types are tried out in-game using scratch dialogue recorded either by actors (if going for a 'high quality impress-the-suits' demo) or by keen members of the development team. A 'full game script' usually breaks down into some or all of these three main component areas...

Story (Usually in the form of scripted scenes, cut-scenes, NIS, FMV etc)

In-Game Dialogue (main character, enemy, and pedestrian character reactions etc)

Scripted Mission Dialogue (main and ancillary mission information)

Content creation is perhaps one of the most challenging stages of dialogue production, yet it can also be the most often overlooked. An enormous number of dependencies hang on having a script and a story fleshed out early, such as cinematics production, character creation, world building and scoping -- as well as pure mission design and breakdown.

Once the three areas of dialogue (as above) have been defined, it is time to consider who will be responsible for the writing of the actual content and who, on the developer's end, will manage its quality and integration. Video game writers are usually (this is of course a very broad generalization) brought in to work with the developers on the overarching story, characters and major story events, worked in among the gameplay mechanics and the location and art direction of the world. All these elements are tremendously influential on one another and it is essential to get these collaborators working early on the story.

Much has been written about story process and game development (particularly of note are the recent story process notes from Valve writers Marc Laidlaw and Erik Wolpaw at GDC Austin), so it is not an area that I want to touch on in any more detail here. Suffice it to say that story in games development is an on-going collaborative process that will demand changes throughout production and much flexibility from the dialogue production process, implementation, and tools.

What I do want to touch on are the other two areas of dialogue content creation: the in-game character reaction dialogue and the mission dialogue, areas not traditionally (at least not yet anyway) taken care of by third party writers, or at least not by the same writers as were responsible for the story.

In-game dialogue is a huge area of content that should not be overlooked. Depending on how many characters there are, and the AI behaviors the characters have, there could be as many as 10,000 to 15,000 individual contextual reactions that will need to be written. There are third party writing houses that cater to these kinds of in-game dialogue -- however, they are few and far between, as the in-game reactions of tertiary characters are often not really considered as part of the story.

I will say that it requires a special skill and understanding of how these lines and variants of these lines work in games to be able to do this job well; generating non-repetitive variants for the same event, over multiple characters, can quickly run a writer's reserves dry. It is also worth noting that it is these lines that potentially will get the most airplay during actual gameplay, whereas mission and story dialogue lines play far less frequently.

Mission dialogue generally comes in the form of short informational and instructional lines of dialogue relevant to very specific objectives of very specific missions. This dialogue is generally created and defined by the level designers and mission scripters on the development team, as they are closest to the moment-to-moment requirements of their missions. Once this content has received a pass by the designers, it makes the most sense to get a beauty pass done by a contracted writer, preferably the story writer, so they can interweave it into the story arc and motivations of the characters as well as tidying up how the lines scan.

The mission lines and in-game lines need to be just as flexible and adaptable as the story, because as the game and story change throughout development, these secondary dialogue areas will also need to bend to the will of the overall game design.

One further note on content creation at the writing stage is to always read the lines out loud before signing off on the work. Many writers write for the page very well, however when these lines are read out loud by an actor, they often sound clunky and wordy -- perhaps with repeated words, or repeated character names. An editorial pass is almost as important as the actual content creation itself in getting you to a final, actor-ready script that can be convincingly performed, and that feels more naturalistic.

Getting all these different types of content into a central database can be a challenge, however if a database can export templates for in-game writers and can quickly create events for story and mission dialogue moments, then the interchange of data as well as the management of the different forms of content can be more easily maintained.

Casting has client requirements from the game developer in the form of casting sheets. These sheets should typically contain concept art or image reference art of the character in question, a description of the character's age, race and temperament, and perhaps even some background bio information.

The sheet should also come with an additional sheet of audition lines, both in-game and story (if both are required), and a brief synopsis of the game story and the type of game that is being developed. The process usually involves a casting agent (or yourself, if you are a pro-active audio director) sending out the audition sheets to agencies and receiving back auditions, through which the team will sift to pick the best sounding voices for the roles.

This process is made all the more effective by putting as much detail and preparation as possible into the casting sheets; the better presented they are, the higher the quality, commitment and enthusiasm you will get from the casting agents and from the voice actors themselves. Again, having a tool that can handle this kind of casting information and character images, as well as export the actor's audition sheets, is another point at which a database can not only speed up the process but also improve its quality.

It's essential to print out the script or agree with the recording studio on a format with which the actor can view the lines of the script on screen. Often there will be guidelines recommended for font point sizes for paper scripts or for onscreen displays, depending on how far away the actor will be from the screen in the booth. Preparation here before getting into the studio is again a huge factor in the smooth running of any voice recording session.

Particular attention must be paid, if using paper scripts, to how the lines and any additional info fit onto the page; the commonly used Microsoft Excel is notorious for printing out poorly over several pages if the cells have not been formatted correctly. Always do a few test printouts to find a good format that fits in with the suggested font point size.

Also essential for paper scripts are page numbers at the bottom or top of each sheet so that you can refer to and direct the actor quickly back to re-do a take. All scripts must be thoroughly proofread for spelling mistakes and grammatical errors, as these often trip up actors who have not seen the lines before the session and can waste a huge amount of time while the real meaning of the line is communicated to the actor.

Further preparation must also be done in the area of structuring the script for the session. For any conversational scenes, ensure that the accompanying character's lines are also included, so the actor knows the all important context and can judge the tone of the delivery.

Also, reading cinematic lines first in the session followed by mission lines and then the more repetitive, and often louder, in-game lines will help the flow of a session. Ensuring that the script reflects the order that you want the session to run in is a must. Leave efforts and screams until the end as these are the most intense and demanding and will finish the session off nicely -- and starting a session with these lines will invariably blow out the actor's voice.
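As a rough illustration of that session ordering, a script export could be sorted by line category before it is printed. This is only a sketch: the category names and the `ScriptLine` fields are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass

# Hypothetical categories, ordered from least to most vocally demanding.
SESSION_ORDER = ["cinematic", "mission", "in_game", "effort"]


@dataclass
class ScriptLine:
    filename: str
    category: str
    text: str


def order_for_session(lines):
    """Sort lines by session category; order within a category is preserved."""
    rank = {name: position for position, name in enumerate(SESSION_ORDER)}
    # sorted() is stable, so lines keep their scripted order inside each category.
    return sorted(lines, key=lambda line: rank[line.category])


script = [
    ScriptLine("cop_scream_01", "effort", "(long scream)"),
    ScriptLine("cop_hit_01", "in_game", "Watch it!"),
    ScriptLine("cop_cine_01", "cinematic", "We need to talk."),
    ScriptLine("cop_msn_01", "mission", "Head to the docks."),
]
session_script = order_for_session(script)
```

Efforts and screams land at the end of the list, so the printed script already reflects the running order of the session.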

Again, being able to format and export session scripts from a single database will save huge amounts of time in production preparation. Other features of a healthy, single dialogue database include being able to change the order in which lines are presented to the actor, having a data column to preserve take notes for each line while the session unfolds, and being able to update any lines that the actor improvises directly within the database.

Once a session has been satisfactorily recorded, the correct files, or takes, will then need to be edited. There are several schools of thought on whether to edit every single take that was recorded, or to just edit the files that were marked during the session. Things to bear in mind here are cost -- are you paying for editing on a per line edited basis? -- and time management and organization.

If you get every take edited, then once you have received the files you will likely have to make a whole other pass on every file, choose the one you liked best (as you probably already did at the session) and then more than likely rename the file so that it doesn't have something along the lines of "alt_3" in the filename. My advice is to mark the takes in the session, send the editor the take list along with the entire session recording, and only pay for the lines you want edited; the files will then all be named as they should be and ready to drop into the game when you get them back.

Should you require alts, it is very quick to go back to the original recording and listen through the other takes for any specific lines, to replace those that are not working as planned.

Sending the editors a digital version of the script (exported from the central dialogue database), with line text, filenames and session take notes is a very efficient method, as the editor can simply copy/paste the filenames from the digital sheet into the sound editing software and name the file as well as reading the exact take notes from the session alongside each line. This is far quicker and less error prone than expecting the dialogue editor to type out the filenames from a paper take sheet, especially as many filenaming conventions contain a mix of upper and lower case as well as underscores, numbers and special characters.

A digital editor sheet also allows the editor to make their own editing notes about a particular file, stating if a file was corrupt during recording and an alternate had to be used or a combined edited take used in place of the one marked at the actual recording session.
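A digital editor sheet of this kind can be as simple as a CSV export. The sketch below assumes each line is held as a dictionary with `filename`, `text` and `take_notes` keys; those names, and the column headers, are illustrative rather than any tool's actual format.

```python
import csv
import io


def export_editor_sheet(lines):
    """Return CSV text with one row per line and an empty column for editor notes."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["filename", "line_text", "take_notes", "editor_notes"])
    for line in lines:
        # The editor fills in the last column; it is exported blank.
        writer.writerow([line["filename"], line["text"], line["take_notes"], ""])
    return buffer.getvalue()


sheet = export_editor_sheet([
    {
        "filename": "Miami_Cop_01_HitByCar_03",
        "text": "Hey, watch it!",
        "take_notes": "use take 2",
    },
])
```

Because the filename travels in its own cell, the editor can copy and paste it straight into the sound editing software rather than retyping it.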

Whoever is overseeing the dialogue must also be very clear to the editors what is acceptable in an edited file, how much silence is acceptable before and after a file, whether to remove pops or breaths from files, etc. If this is not communicated clearly upfront, then there can be a lot of reworking required. Again, organization is key to getting this work done quickly and efficiently. And that's key to getting content turned around and quickly working in game.

Once the edited and named files are received by the developer, it is time to get them integrated into the game. Usually at this point in development, the triggers for these lines or events to play in the game have already been set up and implemented with some form of placeholder content. If this has been done, then it is just a simple case of replacing the old placeholder files with the newly recorded ones, and rebuilding the dialogue. Having the central dialogue database/tool know about file-path per line will enable this data to be built into the game via a build-step.

Once the dialogue is triggering in the game, it is probably going to need some form of tweaking to make it feel natural. Often the frequency of the dialogue event will need to be tuned so that perhaps it doesn't trigger each and every time the in-game event happens. Game data associated with each character event and line can now be tweaked, and the ability to add new custom game data properties to each line, character or group of lines is another area that can be supported by the dialogue database.
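One common way to tune playback frequency is to give each event a play chance. The sketch below is a minimal illustration of that idea, not a description of any particular engine; `play_chance` is a hypothetical per-event property.

```python
import random


def should_play(play_chance, rng):
    """Return True when the line should trigger; play_chance is between 0.0 and 1.0."""
    return rng.random() < play_chance


# Seeded generator so the example is repeatable.
rng = random.Random(1234)

# Simulate 10,000 occurrences of an in-game event tuned to speak a quarter of the time.
plays = sum(should_play(0.25, rng) for _ in range(10_000))
```

With a chance of 0.25, the line is heard on roughly a quarter of the occasions the event fires, which is often enough to stop a reaction from feeling mechanical.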

Iteration is often one of the more misunderstood and awkward parts of dialogue production in video games, due to the inevitable feature creep and story scoping that goes on throughout video game development. This ongoing process usually means that significant portions of the gameplay (affecting in-game categories) and story (affecting mission and story dialogue) will have changed by the time the original dialogue recordings are implemented.

These inevitable tectonic shifts in gameplay will always leave the story and in-game dialogue in need of repair in some way, be it merely cosmetic or even structural. Once the dialogue is in and settled, any required fixes can usually be figured out by a combination of the design team, audio director, and producer team. These changes are then integrated into the script by the writers (returning to the content creation stage) and followed through all the same production stages back to implementation.

This re-work can often be problematic in that getting a particular actor back to do short pick-up sessions may not fit with their schedule, and such sessions may not have been included in the original contract (or budget). Always plan for pick-up sessions with every actor in a video game. At best lines will simply be cut; at worst, whole new plot-lines and missions will need to be recorded or completely re-cast.

Testing that the right dialogue lines are playing on the right events and the right characters is a crucial part of the QA process. It is at this point that an easily accessible dialogue database is extremely useful to hand over to the QA department. Using this, they can test that each line heard in the game is playing as it should be and, if subtitles are present, that each and every line of dialogue in the game has an exactly matching subtitle.
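Part of that subtitle check can be automated when both the script and the subtitles are available as data. The following is a sketch under the assumption that each can be exported as a mapping from filename to text; the function name and the report wording are hypothetical.

```python
def find_subtitle_mismatches(script_lines, subtitles):
    """Compare two {filename: text} mappings and list every problem found."""
    problems = []
    for filename, text in script_lines.items():
        if filename not in subtitles:
            problems.append((filename, "missing subtitle"))
        elif subtitles[filename] != text:
            problems.append((filename, "text differs"))
    return problems


script_lines = {
    "cop_hit_01": "Hey, watch it!",
    "cop_hit_02": "Are you blind?",
    "cop_msn_01": "Head to the docks.",
}
subtitles = {
    "cop_hit_01": "Hey, watch it!",
    "cop_hit_02": "Are you blind!",
}
report = find_subtitle_mismatches(script_lines, subtitles)
```

A check like this only confirms that the text matches; QA still needs to hear each line in the game to confirm it plays on the right event and character.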

The localization process is often one of the very last things to occur in dialogue production and as such also needs careful planning in order that its implementation does not come as a surprise during post-production. It has to wait this long because all the changes and iteration need to have taken place by the time the localization teams begin translation of hundreds of thousands of lines.

The localization team will usually be in touch during production to verify the content pipeline, and to get line and word counts per character for translation cost estimates. Again, access to your dialogue database is crucial at this stage. Whether directly or through some kind of export, the localization team will need to be working with the absolute latest master version of the game script that is currently implemented in the game.

They will also require all the English files and file-paths. If full file localization is being done, they will also need any timing restrictions for particular files so that, for example, the spoken length of a German file matches the spoken length of the English file as closely as possible where timing is critical.
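A timing restriction like this can be checked mechanically once the file lengths are known. The sketch below assumes durations in seconds and a 10% tolerance; the tolerance is an illustrative figure, not an industry rule.

```python
def fits_timing(english_seconds, localized_seconds, tolerance=0.10):
    """True when the localized length is within the given fraction of the English length."""
    allowed_difference = english_seconds * tolerance
    return abs(localized_seconds - english_seconds) <= allowed_difference


# A 2.0 second English line with two candidate German recordings.
close_enough = fits_timing(2.0, 2.1)
too_long = fits_timing(2.0, 2.5)
```

Lines that fail the check can be flagged back to the localization studio before they ever reach the build.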

Mastering of the dialogue files is one of the final processes for game dialogue production and is essential in bringing all the dialogue files to a consistent overall output level. Mastering is also especially important if some of the dialogue files were recorded at different studios or with different microphones and pre-amp set-ups, often requiring some EQ treatment to unify the sound of all the different files.

Much of the dialogue that is recorded for video games occurs in acoustically dead ADR booths with a very close mic, sometimes resulting in a very booming low-end "radio" voice sound. This is not as realistic to our ears as the boom pole, on-location mic sound achieved in movie production dialogue, so more often than not the low-end will need to be finessed on the dialogue files in order to give it less of a radio character, and more of a movie character.

Having a dedicated dialogue mastering facility or on staff mastering engineer take care of this at the final stage of production is very wise. Once the mastered files are returned, it is simply a matter of doing a final replace of all the un-mastered files and a full re-build of the content, and you are ready for the final mix.

Getting dialogue to be clearly intelligible at all pertinent times in most video game mixes is essential. Listening to the game at a reference mix level of 79 dB (the home entertainment standard), the levels to aim for are approximately -12 dBFS peaks with an average RMS of -20 to -18 dBFS.
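For reference, peak and RMS levels in dBFS can be measured directly from the sample data. This sketch assumes floating-point samples in the range -1.0 to 1.0 and uses the plain RMS definition, with no offset added for sine-wave calibration; some meters apply such an offset and will read about 3 dB higher.

```python
import math


def peak_dbfs(samples):
    """Peak level in dBFS for samples normalized to the range -1.0 to 1.0."""
    peak = max(abs(sample) for sample in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")


def rms_dbfs(samples):
    """RMS level in dBFS, computed over the whole file."""
    mean_square = sum(sample * sample for sample in samples) / len(samples)
    return 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")


# One second of a 1 kHz test tone at 48 kHz with an amplitude of 0.25.
tone = [0.25 * math.sin(2 * math.pi * 1000 * n / 48000) for n in range(48000)]
tone_peak = peak_dbfs(tone)
tone_rms = rms_dbfs(tone)
```

A steady tone is only a sanity check for the maths. Real dialogue is far more dynamic, so its average RMS will sit further below its peaks than the tone's does.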

In a mix it does come down to common sense -- though above all else, if you cannot hear the dialogue for the music or SFX, ensure that the music and effects are ducked down during that line of dialogue using interactive mixing techniques (or offline techniques, if working on cutscenes). Because the dialogue is now mastered, you can be confident that all the lines will be of roughly equal level, enabling you to assign and tweak a master dialogue fader for overall voice level.

As can be seen, with so many different phases to the production of dialogue, it is a huge challenge to maintain the script in a master format throughout production, especially between the printed paper script for the actors and the in-game implementation with all its additional parameter requirements per line or character.

A single dialogue tool that can be used to design, structure, and create characters, dialogue events, lines and sequences, and that can export and import usable sheets for the different clients at each stage of production, can clearly help maintain tight control over what is typically a job that can quickly get out of control.

Keeping all the data in one central place, with both in-game data and extraneous data such as editors' notes and line direction built-in, is an exciting proposition for audio directors and game designers, in that changes can be quickly made to the content, without needing to modify multiple versions of the script or data. In this case, if a line is removed from the script, it is removed from the game's pipeline and vice-versa. It is this idea of synergy between in-game dialogue implementation and off-line scripts and clients that is the key to retaining a flexible dialogue production pipeline.

At Radical Entertainment we have been working with such a single dialogue database for around three years now. Called UDO (Universal Dialogue Organizer), it has been used to ship three titles, with several further titles in development. Originally written and designed by audio coder Robert Sparks, its premise is simple and follows from the design requirements of the different stages of production above: to maintain a single master database and location for all dialogue information and data from the beginning of production to the end.

It's augmented by the ability to export and import custom data in various different formats for the various differing clients along the way (writers, casting agents, actors, dialogue editors, localization specialists etc). The tool is essentially a dialogue production one-stop-shop as it has input and output at every crucial step in dialogue production.

One of the major selling points of this tool for someone involved in dialogue production is that it is pipeline agnostic, meaning that the database itself has no pipeline building role; however, the data (XML) can be prepared by any pipeline tool and built into the appropriate data for the game engine, whether proprietary or third party.
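To give a feel for what pipeline-agnostic XML data might look like, here is a minimal sketch using Python's standard library. The element and attribute names are invented for illustration and are not UDO's actual schema.

```python
import xml.etree.ElementTree as ET


def build_dialogue_xml(characters):
    """Serialize {character: {event: [(filename, text), ...]}} to an XML string."""
    root = ET.Element("dialogue")
    for character_name, events in characters.items():
        character_el = ET.SubElement(root, "character", name=character_name)
        for event_name, lines in events.items():
            event_el = ET.SubElement(character_el, "event", name=event_name)
            for filename, text in lines:
                line_el = ET.SubElement(event_el, "line", file=filename)
                line_el.text = text
    return ET.tostring(root, encoding="unicode")


xml_text = build_dialogue_xml({
    "Miami_Cop_01": {
        "hit_by_car": [("Miami_Cop_01_HitByCar_01", "Hey, watch it!")],
    },
})
```

Any build step that can read XML can then turn this into whatever the game engine needs, which is the point of keeping the database out of the pipeline itself.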

Starting with pre-production, character templates can be created and given any number of dialogue events. Each of these events comprises a number of lines -- essentially variations of the line. So, for example, a pedestrian being hit by a car has ten lines in its "hit by car" event.

Once a character archetype has been suitably designed (usually defined by its AI behaviors), it can be duplicated from this archetype template in order to quickly populate the entire game with the various characters and character variants, such as Miami_Cop_01, 02, 03, and 04, etc.
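The archetype idea can be sketched in a few lines. The classes below are hypothetical stand-ins for illustration only and say nothing about how UDO stores its data internally.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Event:
    name: str
    lines: list = field(default_factory=list)


@dataclass
class Character:
    name: str
    events: list = field(default_factory=list)


def duplicate_archetype(archetype, base_name, count):
    """Create numbered character variants, each with its own copy of the events."""
    characters = []
    for index in range(1, count + 1):
        # Deep copy so that editing one variant's lines never touches another's.
        clone = copy.deepcopy(archetype)
        clone.name = f"{base_name}_{index:02d}"
        characters.append(clone)
    return characters


# An archetype with one event holding ten empty line slots for the writer to fill.
cop_archetype = Character("Cop_Archetype", [Event("hit_by_car", [""] * 10)])
cops = duplicate_archetype(cop_archetype, "Miami_Cop", 4)
```

Each variant starts with the same events and number of line slots, ready for the writer to fill with character-specific variations.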

Mission and conversation lines are slightly different, using what we refer to in UDO as "sequences", which are basically groups of ordered events. These event lines can be sequenced to play back in any order, and custom silence or overlap can be added between each line, with a real-time preview so that the timing can be tweaked by ear. This timing data can then be re-appropriated in the build step by whatever game engine is being used.
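The timing of such a sequence reduces to simple arithmetic. In this sketch a positive gap is silence after a line and a negative gap is an overlap with the next one; that sign convention and the function name are assumptions made for the example.

```python
def schedule_sequence(durations, gaps):
    """Return the start time in seconds of each line in the sequence.

    gaps[i] is the silence after line i; a negative value overlaps the next line.
    """
    starts = []
    cursor = 0.0
    for index, duration in enumerate(durations):
        starts.append(cursor)
        gap = gaps[index] if index < len(gaps) else 0.0
        cursor += duration + gap
    return starts


# Three lines: half a second of silence after the first, a quarter second overlap after the second.
starts = schedule_sequence([2.0, 1.5, 3.0], [0.5, -0.25])
```

Here the second line starts at 2.5 seconds and the third at 3.75 seconds, a quarter of a second before the second line has finished.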

All lines and sequences are searchable and previewable in UDO, so any tuning and QA work can be done in the tool directly without building to the game. Unwanted lines can simply be found and deleted this way based on a word search, for example.

Once all the game characters, and mission and cinematic lines have been created, writers' scripts can be exported to Excel format, for an in-house or out-of-house writer to fill in. Once the writing work has been done, it can be quickly imported back into the UDO database, checked for errors and then prepared for the recording session.

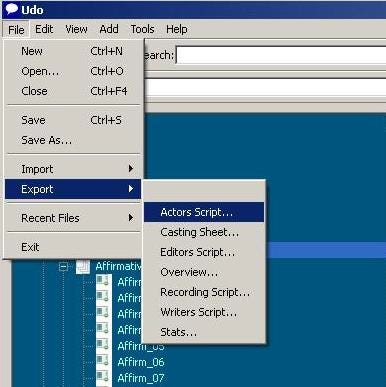

Export is also available for a casting sheet (you can browse for and assign character art for any character and also add descriptions as "character properties" which will show up on the casting sheet) and a recording sheet for the actor, showing relevant information for the actor such as event name, line text and direction for the line.

While the session is underway, changes to the script can be tracked instantly within the UDO database, ensuring that every line is completely up-to-date at the end of every session without having to use different versions of other people's scripts. UDO is always the master and is used to generate other custom Excel exports for the various dialogue clients.

For example, during the recording session, there is a column in UDO to make take notes next to each line, showing the VO editor which take to edit or give timing info. An editor's script can then be exported, displaying file-name, take notes and line info, and also making available an editor's notes column, into which an editor can place notes about the editing work, such as "used take 2 instead of 3 as it was distorted".

Beyond the actual script data, a whole spectrum of game data exists in UDO that can be associated with characters, events, lines or sequences. Parameters such as which ducking mix to install when the line plays are critical in quickly applying pre-existing game data to new dialogue content that comes online midway through production. Any other kind of data can be associated with a dialogue file in this way too, such as face fx files or lip sync data.

UDO's import and export options for the various stages and clients of dialogue production.

Because the database is standalone, and not built into the audio engine, it also allows a variety of users to have quick access to the dialogue database, such as designers or localization staff. A single .udo file is checked out in the version control software and all changes are tracked via that.

A variety of useful ancillary features also exist within UDO for seeing the entire game dialogue as a whole from a high level. These include showing the current total line count and word count for all or only selected lines, exporting character overviews which show the breakdown of lines and words per character, and the ability to completely customize the Excel exports into any format, font and colour, with locked cells etc.
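A character overview of this kind is straightforward to compute from the script data. The sketch below assumes lines are available as (character, text) pairs and counts words by splitting on whitespace, which is a simplification of how a translation house might count them.

```python
from collections import defaultdict


def character_overview(lines):
    """Return {character: (line_count, word_count)} from (character, text) pairs."""
    totals = defaultdict(lambda: [0, 0])
    for character, text in lines:
        totals[character][0] += 1
        totals[character][1] += len(text.split())
    return {name: tuple(counts) for name, counts in totals.items()}


overview = character_overview([
    ("Miami_Cop_01", "Hey, watch it!"),
    ("Miami_Cop_01", "Stop right there!"),
    ("Pedestrian_01", "Ow!"),
])
```

Counts like these are what the localization team asks for when estimating translation costs, so it helps to be able to produce them at any point in production.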

Having the entire dialogue process from creation to implementation in one central place has certainly proved imperative for the digital management of dialogue production and has enabled us to concentrate on the quality of the dialogue and performance itself, rather than becoming bogged down in tracking changes and fixing technical issues. Even if only for the fact that one no longer needs to scribble illegible take notes onto a paper script and have it faxed over to a dialogue editor, it has been worth the investment.