Sometimes we reach greatness by standing on the shoulders of giants -- and anyone who has ever made a game with the Unreal Engine knows that Tim Sweeney is just such a behemoth. In this interview he holds forth on the present and future of 3D engines for high-powered games.

A reprint from the March 2013 issue of Gamasutra's sister publication Game Developer magazine, this article is a brand new interview with Epic Games founder Tim Sweeney.

Sometimes we reach greatness by standing on the shoulders of giants -- and anyone who has ever made a game with the Unreal Engine knows that Tim Sweeney is just such a behemoth. He wrote a quarter-million lines of code in the original Unreal Engine, and though he's founder and CTO of Epic Games, he still actively codes every day.

It's a curious exercise to think about where the industry would be today if not for the Tim Sweeneys and John Carmacks of the world. Luckily, we work in an industry where most of our legends are living, working, and still pushing us forward. Many of them are shut away from the world, either working diligently on their next project or shielded from the press by concerned public relations staff.

As luck would have it, I caught Sweeney in Taiwan after giving a talk, unencumbered by handlers or hangers-on, and was able to have a candid discussion with him about the future of game consoles, code, and, as it happens, John Carmack.

What does it take to be five years ahead of the game industry, so you can have tools ready when developers need them? What problems do we still have to solve? Who will be the guardians of triple-A games, as much of the world moves to web and mobile? Sweeney doesn't have all the answers -- but who better to ask?

Let's start off with a big sandbox question. What do you see as the next big thing the industry needs to tackle graphics-wise and computationally in games?

Tim Sweeney: Oh, wow. The industry's advancing on a bunch of fronts simultaneously. One is just advancing the state of the art of lighting technology. We've really added to that with sparse voxel octree global illumination stuff -- basically, technology for real-time indirect lighting and glossy reflections.

That's really cool stuff, but it's also very expensive and only suited for the highest-end GPUs available, so having a family of solutions that scales all the way down to iPhone with static lighting is a big priority for Epic -- the ability to build a game that scales by a dramatic factor from low-end to high-end.

We're really concerned -- I think it's our number-one priority, really -- with productivity throughout the whole game development pipeline, because we're looking at companies like Activision spending $100 million developing each new version of Call of Duty, and that's insane!

We can't afford that sort of budget, so we have to create games with fewer resources. Any way we can tweak the artwork pipeline and the game scripting pipeline to be able to build core games more quickly with less overhead is better. We put a lot of effort into visual scripting technology to greatly improve the workflow.

It does look a lot more intuitive for a less tech-savvy person.

TS: With Unreal Engine 4, we really want to be able to build an entire small game on the scale of Angry Birds without any programming whatsoever, just mapping user input to actions using a visual toolkit. This technology will be really valuable. We're also expanding the visual toolkit for everything: for building materials, for building animations, for managing content when we have a huge amount of game assets. We're just greatly simplifying the interface so that it's basically as easy to use as Unity.

On one hand, the Unreal Engine has by far the largest and most complete feature set of any engine, but with Unreal Engine 3 that came with a big, complicated user interface. With Unreal Engine 4, the effort is to expose everything at the base level in a very simple, easy-to-use, and discoverable way, and to build complexity on top of that so the user can learn as they go without being terrified by a huge, complicated user interface.

That's a problem all applications have to deal with nowadays. If you look at an iPad app that does 90 percent of what the world needs really easily, versus a Windows version of the app for something like Photoshop -- I spent an hour and couldn't even figure out how to draw a picture in Photoshop; it's that bad. (laughs) There is a lot to do there and a lot to learn from.

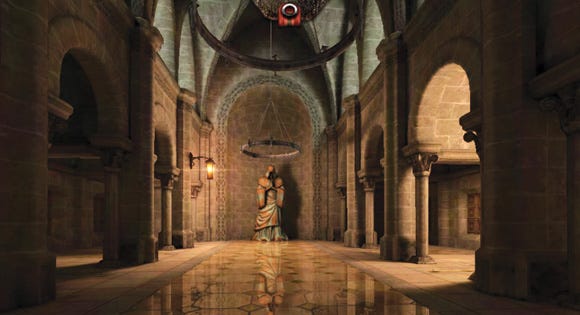

Epic Citadel

Going back to voxels, I've always been kind of fascinated by them because they're less expensive with higher fidelity potential, but you can't texture them and you can't really animate them well. Do you ever foresee a future in which that might be possible? It would be a total industry shift away from triangles, but...

TS: It's clear now that voxels play a big role in the future.

Certainly for lighting, right?

TS: Well, that's just one way we've been finding to use them effectively. [John] Carmack did a big write-up about voxels and the virtues of sparser representations of the world. It seemed crazy to me at the time, but now it's becoming clearer that he had a lot of far-ranging insight there.

The thing about voxels is they are a very simple, highly structured way of storing data that's easy to traverse. With any other form of data, like a character stored as a skinned skeletal mesh, any time you want to traverse it you have to do a gigantic amount of processing work to transform it into the right space. You need to figure out what falls where and rasterize it or whatever, but voxels are just efficiently traversable. I feel like there's a big gap.
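
To make the "efficiently traversable" point concrete, here is a minimal Python sketch of walking a ray through a uniform voxel grid with the well-known Amanatides-Woo 3D DDA. It is not Epic's code: the unit-sized cells, the set-of-occupied-cells storage, the assumption that the ray starts inside the grid, and the names `first_hit` and `grid_size` are all illustrative. Each step is one comparison and one addition, with no transformation of the data into another space.

```python
import math
from typing import Optional, Set, Tuple

Cell = Tuple[int, int, int]


def first_hit(origin, direction, occupied: Set[Cell], grid_size: int,
              max_steps: int = 4096) -> Optional[Cell]:
    """Step a ray through a grid_size^3 grid of unit voxels (3D DDA).

    Returns the first occupied cell the ray enters, or None if the ray leaves
    the grid first. Assumes the origin lies inside the grid.
    """
    cell = [math.floor(c) for c in origin]
    step, t_max, t_delta = [], [], []
    for axis in range(3):
        d = direction[axis]
        if d > 0:
            step.append(1)
            t_max.append((cell[axis] + 1 - origin[axis]) / d)  # t at next + boundary
            t_delta.append(1 / d)                              # t to cross one cell
        elif d < 0:
            step.append(-1)
            t_max.append((cell[axis] - origin[axis]) / d)      # t at next - boundary
            t_delta.append(-1 / d)
        else:
            step.append(0)
            t_max.append(math.inf)  # never crosses a boundary on this axis
            t_delta.append(math.inf)

    for _ in range(max_steps):
        if not all(0 <= c < grid_size for c in cell):
            return None
        if tuple(cell) in occupied:
            return tuple(cell)
        # Advance along whichever axis reaches its next cell boundary first.
        axis = t_max.index(min(t_max))
        cell[axis] += step[axis]
        t_max[axis] += t_delta[axis]
    return None
```

For example, `first_hit((0.5, 0.5, 0.5), (1, 1, 0), {(3, 3, 0)}, 8)` walks diagonally cell by cell and returns `(3, 3, 0)`.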

The data representation you want to use for rendering your scene differs greatly from the representation you want to use for manipulating your scene: basically moving objects around and choosing how they interact. It's not clear which representation wins. In the sparse voxel octree approach, one neat thing is we can update it dynamically; as objects move around, we can just incrementally change those parts of the voxel octree that are relevant. You can take the algorithm to its extreme case, so that instead of the voxel octree being fixed in its orientation to world space, you align it to the screen and arrange it projectively, so you're seeing a 2D view of this voxel octree and the other dimension is your z dimension.
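
The incremental-update idea can be sketched with a sparse octree in which only the nodes on paths to filled voxels are allocated. This is a hypothetical minimal version: the class names, the per-node `count` field, and the `move_object` helper are assumptions, and the lighting data a real sparse voxel octree GI system stores in its nodes is omitted. Moving an object removes and re-inserts only its own voxels, and each such edit touches at most `depth + 1` nodes rather than rebuilding the tree.

```python
from typing import Dict, Set, Tuple

Voxel = Tuple[int, int, int]


class OctreeNode:
    def __init__(self) -> None:
        self.children: Dict[int, "OctreeNode"] = {}  # only non-empty octants exist
        self.count = 0                                # filled voxels beneath this node


class SparseVoxelOctree:
    """Sparse octree over a (2**depth)^3 grid; coordinates must be in [0, 2**depth)."""

    def __init__(self, depth: int) -> None:
        self.depth = depth
        self.root = OctreeNode()

    def _octant(self, x: int, y: int, z: int, level: int) -> int:
        # Level 0 uses the most significant coordinate bit, the last level the least.
        shift = self.depth - 1 - level
        return ((x >> shift) & 1) | (((y >> shift) & 1) << 1) | (((z >> shift) & 1) << 2)

    def contains(self, x: int, y: int, z: int) -> bool:
        node = self.root
        for level in range(self.depth):
            node = node.children.get(self._octant(x, y, z, level))
            if node is None:
                return False
        return True

    def insert(self, x: int, y: int, z: int) -> int:
        """Fill a voxel; returns the number of nodes touched (0 if already filled)."""
        if self.contains(x, y, z):
            return 0
        node = self.root
        node.count += 1
        for level in range(self.depth):
            node = node.children.setdefault(self._octant(x, y, z, level), OctreeNode())
            node.count += 1
        return self.depth + 1

    def remove(self, x: int, y: int, z: int) -> None:
        """Clear a voxel, pruning any branch that becomes empty."""
        if not self.contains(x, y, z):
            return
        node = self.root
        node.count -= 1
        for level in range(self.depth):
            key = self._octant(x, y, z, level)
            child = node.children[key]
            child.count -= 1
            if child.count == 0:
                del node.children[key]  # nothing else lives below: drop the whole branch
                return
            node = child

    def node_count(self) -> int:
        stack, n = [self.root], 0
        while stack:
            node = stack.pop()
            n += 1
            stack.extend(node.children.values())
        return n


def move_object(tree: SparseVoxelOctree, voxels: Set[Voxel], offset: Voxel) -> Set[Voxel]:
    """Translate an object's voxels, editing only the paths that change.

    Assumes the object owns its voxels exclusively (no sharing with other objects).
    """
    dx, dy, dz = offset
    moved = {(x + dx, y + dy, z + dz) for (x, y, z) in voxels}
    for v in voxels - moved:
        tree.remove(*v)
    for v in moved - voxels:
        tree.insert(*v)
    return moved


tree = SparseVoxelOctree(depth=4)      # 16 x 16 x 16 grid
obj = {(1, 2, 3), (2, 2, 3)}            # hypothetical two-voxel object
for v in obj:
    tree.insert(*v)
obj = move_object(tree, obj, (8, 0, 0))
```

After the move the tree holds the same seven nodes it held before, just along different paths; nothing outside the object's old and new paths was visited.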

You basically have this projective voxel octree, stick everything in the entire scene into it, every frame, and then that unifies all of these screen space techniques like screen space ambient occlusion with the large-scale world effects that we're using with the sparse voxel octrees. Imagine rasterizing your entire scene directly into a representation like that -- using that for real-time lighting and shadowing and then rendering that result out to the frame buffer. It's hard to say whether that has merit; that's an algorithm where you need 20 or 30 teraflops as opposed to one or two.
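
A screen-aligned ("projective") grid of this kind is often called a froxel grid. As a rough sketch, assuming a camera at the origin looking down +z, a symmetric perspective frustum, and linear depth slicing (all assumptions, and the function names are hypothetical), each view-space point maps to a screen column, a screen row, and a depth slice; re-binning every point of the scene each frame fills the grid.

```python
import math
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]
Froxel = Tuple[int, int, int]


def froxel_index(p: Point, fov_y_deg: float, aspect: float, near: float, far: float,
                 cols: int, rows: int, slices: int) -> Optional[Froxel]:
    """Map a view-space point to (column, row, depth slice), or None if outside.

    Assumes the camera sits at the origin looking down +z, with row 0 at the
    bottom of the screen and depth split into equal-width slices.
    """
    x, y, z = p
    if not (near <= z < far):
        return None
    tan_half = math.tan(math.radians(fov_y_deg) / 2)
    ndc_x = x / (z * tan_half * aspect)  # perspective divide: farther points shrink
    ndc_y = y / (z * tan_half)
    if not (-1.0 <= ndc_x < 1.0 and -1.0 <= ndc_y < 1.0):
        return None
    col = int((ndc_x * 0.5 + 0.5) * cols)
    row = int((ndc_y * 0.5 + 0.5) * rows)
    depth_slice = int((z - near) / (far - near) * slices)
    return col, row, depth_slice


def voxelize_frame(points: List[Point], **camera) -> Dict[Froxel, List[Point]]:
    """Re-bin every point of the scene into the screen-aligned grid (once per frame)."""
    grid: Dict[Froxel, List[Point]] = defaultdict(list)
    for p in points:
        idx = froxel_index(p, **camera)
        if idx is not None:
            grid[idx].append(p)
    return grid


camera = dict(fov_y_deg=90.0, aspect=1.0, near=1.0, far=101.0, cols=16, rows=16, slices=10)
grid = voxelize_frame([(0.3, 0.0, 2.0), (6.0, 0.0, 40.0), (5.0, 0.0, 2.0)], **camera)
```

Because the grid is projective, `(0.3, 0, 2)` and `(6, 0, 40)` lie on the same line of sight and land in the same screen column but in different depth slices, while `(5, 0, 2)` falls outside the frustum. Implementations often slice depth non-linearly so nearby cells stay small; linear slicing keeps the sketch short.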

It seems like we've gone so far in the polygon direction that it would take a significant spend and a lot of research to try to push in a different direction.

TS: Yeah. Polygons are so nice for representing your scene: the idea that you can go with this smooth, seamless mesh that has nice properties like being planar... I have a hard time seeing the world moving away from that. And if you think about something like a skeletal animated character, trying to represent data like that in a voxel representation would be hopelessly inefficient.

Say you have two fingers and want to animate them independently, but they're close enough that they probably share some voxels in common; you want a polygon representation so these objects can be independent and move independently with no relationship between them. So I think polygons ultimately will always be our working scene representation, but our rendering scene representation is where you're seeing a lot of this innovation.
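
A toy illustration of the two-finger problem, with made-up point data standing in for finger surfaces: at a voxel size coarser than the gap between the fingers, both fingers fall into the same voxels, so neither can move without disturbing the other's data. As separate polygon meshes, bending one finger only rewrites that finger's own vertex list. The helper names are hypothetical.

```python
import math
from typing import List, Set, Tuple

Point = Tuple[float, float, float]


def voxelize(points: List[Point], voxel_size: float) -> Set[Tuple[int, int, int]]:
    """Return the set of voxel cells that contain at least one sample point."""
    return {tuple(math.floor(c / voxel_size) for c in p) for p in points}


def rotate_z(points: List[Point], angle: float, pivot: Point) -> List[Point]:
    """Rotate points about the z axis through a pivot, returning new points."""
    c, s = math.cos(angle), math.sin(angle)
    px, py, _ = pivot
    return [(px + c * (x - px) - s * (y - py), py + s * (x - px) + c * (y - py), z)
            for x, y, z in points]


# Hypothetical sample points along two parallel fingers, 0.5 units apart.
finger_a = [(0.0, i * 0.25, 0.0) for i in range(9)]
finger_b = [(0.5, i * 0.25, 0.0) for i in range(9)]

# Voxels larger than the gap: the fingers share cells and cannot be separated.
coarse_shared = voxelize(finger_a, 1.0) & voxelize(finger_b, 1.0)
# Voxels smaller than the gap: no sharing, at the cost of more cells per finger.
fine_shared = voxelize(finger_a, 0.25) & voxelize(finger_b, 0.25)

# As meshes, bending finger A rewrites only its own vertex list.
bent_a = rotate_z(finger_a, math.radians(30), pivot=finger_a[0])
```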

You can really look back and say, a-ha! The industry started inventing these techniques five or six years ago with screen space techniques like ambient occlusion, and now we're starting to realize that, instead of just doing that in screen space, you can also do it in a voxel representation of the world. They have in common this very regular structure that's easy to traverse.

I think we're going to see enormous innovation in these areas over the next 10 years because, with DirectX 11, you now have all this power of general-purpose vector computing hardware, and you can use a few teraflops of performance in traversing an arbitrary data structure. In the previous generation, we had to rethink everything in terms of pixel shaders and vertex shaders, and that rules out most of these techniques.

So the next few years are going to be very interesting. I suspect that a lot of developers will look at this from different perspectives and come up with entirely new techniques that we're not anticipating now.

What do you think about Carmack's solution to textures with id Tech 5, where every texture has to be specifically drawn?

TS: It's a neat idea that's kind of the extreme Carmack way of doing things, the brute-force, completely general solution to the problem. Some artist is going to want to customize any particular part of an object, and that makes it really easy to go in and paint over and customize any part of a scene to an arbitrary degree. That is certainly the general solution, and it's a very brute-force algorithm that gives you regular performance regardless of scene complexity, and gives you regular performance for streaming, which is really important.

I personally tend to be on the other end of the spectrum, which is that, rather than going at everything brute force, we want procedural authoring techniques that let content developers build a small amount of content and then amplify it by instancing, reusing, scaling, rotating, and customizing objects with minimal amounts of work. In Gears of War, you see that the same mesh was used maybe 20 times in different places. I think those techniques tend to be the best solution to the overall game development productivity problem because we want to go and create games with minimal effort. Although they're not as flexible, they do give you greater amplification of both data and development effort, and, to me, that is always going to be a really desirable property.
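
The amplification argument is easy to put into numbers. In this sketch, the `Mesh` and `Instance` types, the yaw-only rotation, and the 1,000-vertex mesh are hypothetical: a mesh is stored once, and each placement stores only a position, a rotation, and a scale, so 20 placements cost one mesh plus 100 floats instead of 20 full copies.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Mesh:
    vertices: List[Vec3]


@dataclass
class Instance:
    mesh: Mesh          # shared reference to one stored mesh, never copied
    position: Vec3
    yaw: float          # rotation about the vertical (y) axis, in radians
    scale: float

    def world_vertices(self) -> List[Vec3]:
        """Apply scale, then yaw, then translation to the shared mesh."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        px, py, pz = self.position
        out = []
        for x, y, z in self.mesh.vertices:
            x, y, z = x * self.scale, y * self.scale, z * self.scale
            out.append((px + c * x + s * z, py + y, pz - s * x + c * z))
        return out


def floats_if_unique(mesh: Mesh, placements: int) -> int:
    return placements * len(mesh.vertices) * 3


def floats_if_instanced(mesh: Mesh, placements: int) -> int:
    # One copy of the mesh, plus position (3) + yaw (1) + scale (1) per placement.
    return len(mesh.vertices) * 3 + placements * 5


pillar = Mesh([(0.0, float(i), 0.0) for i in range(1000)])  # hypothetical 1,000-vertex mesh
row = [Instance(pillar, (4.0 * i, 0.0, 0.0), math.radians(15 * i), 1.0 + 0.1 * i)
       for i in range(20)]
```

Here `floats_if_unique(pillar, 20)` is 60,000 while `floats_if_instanced(pillar, 20)` is 3,100; the trade-off, as Sweeney notes, is that every instance looks like the same mesh unless something else customizes it.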

You can envision the two ideas being combined. The thing that's awesome about Carmack's megatexture approach is that it gives you the freedom to customize everything. The drawback to it is it gives you the cost of everything being customized, even if it's not, because you have to store everything uniquely. What I would like to do is basically represent the world in some sort of hierarchical, wavelet-inspired way so that data layers that are zero or haven't been changed relative to their base aren't stored at all, and therefore don't incur any cost.
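
A minimal sketch of the "don't store what hasn't changed" idea, using a tiled texture and a per-placement override layer. The tile layout, the class names, and the ten-door numbers (taken from the door façade example that follows) are illustrative assumptions, not a description of how any shipping engine stores data. Each placement falls back to the shared base unless a tile has been painted, so storage grows with the customized tiles rather than with the number of placements.

```python
from typing import Dict, List, Tuple

Tile = Tuple[int, int]


class BaseAsset:
    """A shared asset (here, a texture split into tiles) that is stored once."""

    def __init__(self, tiles: Dict[Tile, List[float]]) -> None:
        self.tiles = tiles


class Placement:
    """One use of a base asset plus a sparse override layer."""

    def __init__(self, base: BaseAsset) -> None:
        self.base = base
        self.overrides: Dict[Tile, List[float]] = {}  # only customized tiles live here

    def paint(self, tile: Tile, texels: List[float]) -> None:
        if texels == self.base.tiles[tile]:
            self.overrides.pop(tile, None)  # matches the base again: store nothing
        else:
            self.overrides[tile] = list(texels)

    def texels(self, tile: Tile) -> List[float]:
        return self.overrides.get(tile, self.base.tiles[tile])


door = BaseAsset({(x, y): [0.5] * 16 for x in range(8) for y in range(8)})  # 64 tiles
placements = [Placement(door) for _ in range(10)]
for p in placements[:3]:
    p.paint((2, 3), [1.0] * 16)  # customize one tile on three of the ten doors

layered_tiles = len(door.tiles) + sum(len(p.overrides) for p in placements)
unique_tiles = len(door.tiles) * len(placements)  # every copy stored in full
```

With these numbers the layered scheme stores 67 tiles, against 640 if every door were stored uniquely.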

The idea is that you want to build a cool door façade and use it in 10 different places, and in three of those places you go in and do a custom thing on top of it. You're storing the data there, but you're only incurring the cost for the areas where it's actually being customized. Similarly, the idea with wavelets is you can paint at multiple resolutions and you can do perturbed geometry at multiple resolutions, so you might have a beautiful car mesh that's used in a bunch of places; then, in some places, you have it wrecked because you destroyed the high-level vertices or the lower-level detail versions of it, but the rest of the content is still there.
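
The "wavelet-inspired" part can be illustrated with a plain 1D Haar transform, swapped in here as the simplest wavelet; the interview does not say which transform Epic had in mind. Encoding only the difference between a customized copy and its base, and keeping just the nonzero coefficients, means a small local paint stroke costs a handful of coefficients and an untouched copy costs nothing. The coefficients are ordered coarse to fine, so edits at different resolutions land in different levels. The function names are hypothetical.

```python
from typing import Dict, List


def haar_forward(signal: List[float]) -> List[float]:
    """Unnormalized 1D Haar transform; len(signal) must be a power of two.

    Output order is coarse to fine: overall average, then detail
    coefficients for each successively finer level.
    """
    out = list(signal)
    n = len(out)
    while n > 1:
        half = n // 2
        averages = [(out[2 * i] + out[2 * i + 1]) / 2 for i in range(half)]
        details = [(out[2 * i] - out[2 * i + 1]) / 2 for i in range(half)]
        out[:n] = averages + details
        n = half
    return out


def haar_inverse(coeffs: List[float]) -> List[float]:
    """Inverse of haar_forward (exact up to floating-point rounding)."""
    out = list(coeffs)
    n = 1
    while n < len(out):
        merged: List[float] = []
        for a, d in zip(out[:n], out[n:2 * n]):
            merged.extend([a + d, a - d])
        out[:2 * n] = merged
        n *= 2
    return out


def encode_override(base: List[float], custom: List[float], eps: float = 1e-9) -> Dict[int, float]:
    """Store only the nonzero wavelet coefficients of (custom - base)."""
    coeffs = haar_forward([c - b for b, c in zip(base, custom)])
    return {i: v for i, v in enumerate(coeffs) if abs(v) > eps}


def apply_override(base: List[float], override: Dict[int, float]) -> List[float]:
    coeffs = [0.0] * len(base)
    for i, v in override.items():
        coeffs[i] = v
    return [b + d for b, d in zip(base, haar_inverse(coeffs))]


base = [float(i % 4) for i in range(16)]     # hypothetical row of texels
custom = list(base)
custom[4] += 2.0                              # a small local "paint stroke"
custom[5] += 2.0
override = encode_override(base, custom)      # 4 coefficients instead of 16 texels
```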

So, with a little bit of data, you've made a really interesting procedural modification to an object that already exists. I think nuanced techniques like that will win in the long run because they give you the full customizability of the Carmack approach without the full cost of using it everywhere.

Fortnite

I feel like there aren't really a lot of companies that are taking such a long-term view of things -- thinking five, 10, or 20 years ahead technology-wise, especially in games and also in terms of business models. Epic is thinking about that with Unreal Engine 4, but also with Infinity Blade being the company's most profitable game relative to its scale. How have you been able to take this view, where a lot of others aren't thinking past what they're going to put out next year?

TS: Well, being both an engine developer and a game developer forces us to think further ahead. Our big success with the engine came in the third generation, and that was because everybody else had just been finishing their previous-generation games, while we'd been building Unreal Engine 3 three years prior to Microsoft or Sony actually choosing their hardware for the generation. So we had this enormous investment that we'd already built up, and as soon as the industry moved over from that we were ready for it and everybody else was years behind. That gave us a huge advantage.

We've been doing that informally all along -- really trying to think ahead -- but that experience made it an explicit and clear part of our strategy. We need to stay ahead of the rest of the industry so we can be there with tools and technology when people need them. People don't realize they need them until they need them, so we must realize they'll need them three years before they need them, so that we can actually build them. That's been the challenge.

We have great relationships with the hardware companies -- Intel, Nvidia, and Apple. With the pure hardware manufacturers like Intel and Nvidia, we can talk about our roadmap with them and they can talk about their roadmap with us three, four, or five years out, and we can really line everything up. It's what's necessary. We have to start thinking of technology developers like Epic, Crytek, and Unity a lot like the hardware industry thinks of itself.

When Intel ships a CPU design like the new Sandy Bridge or whatever, it first envisioned that architecture seven years ago. They had to, because that's their development cycle and they have to go through this long series of processes to develop that from scratch to ship. We need to have a lot more engine development work in the pipeline. UE4 was in development simultaneously with UE3 for three years or more, plus a longer research cycle than that in advance. It's just necessary for survival and continuing to lead the industry forward.

So it was a conscious choice to be ahead of the game with Unreal Engine 3, but earlier, when you realized people needed your technology, was that a tipping point and a change in Epic's mindset? Were you initially envisioning it as this service, essentially, that was going to be sold to people, or was it like, "No, we need to build this game, and this is what we need to build it; so we're going to go that direction."

TS: We came into 3D game development really seat-of-the-pants. When id Software created Doom, I looked at that and said, "Oh my god; they've invented reality. I'm giving up as a programmer. I'll never be able to do that." But, over the next few years, as they started to build Quake, I started to think, "Hmm, maybe I can figure out this texture-mapping stuff."

With the first generation of Unreal Engine, we went in not really intending to build an engine so much as build a game, and the engine was a byproduct of that effort. Then we were a couple of years into development when a couple of developers called us up and said they wanted to license our engine, and we were like, "Engine? What engine? Well, I guess we have an engine."

The whole engine business at Epic was a completely customer-driven idea. As it's evolved, it's become a much more serious effort. More than 40 people are contributing code to Unreal Engine 4. That's a huge effort. It's a worldwide team that works for customers, providing support and developing features in Japan and Korea and China and Europe.

We're creating a really significant global business, working closely with all of the hardware companies to determine the roadmap as much as we can. We then line those roadmaps up with our customers, working out conflicting requirements between different markets and desires. It's a very serious, real business now, completely different than it was a few generations ago. I wrote a quarter-million lines of code on Unreal Engine 1 -- about 80 percent of the code myself. What could I do now, being one person out of 40?

That makes me curious -- how much day-to-day coding do you actually get to do?

TS: I spend at least a few hours a day, but right now I'm not critical path on anything like I was on Unreal Engine 1. My schedule is too unpredictable to contribute to that, but I really try to stay on top of it and talk with all the key guys who are architecting the major systems.

It seems like in some companies -- this is especially a Japanese problem -- people get pushed up and out of doing stuff and into having meetings about doing stuff instead. It's good you've avoided that.

TS: Yeah, we've really put a lot of effort into making sure our key folks at Epic are able to do what they are best at. There are some world-class programmers at Epic who are never going to be leads because they are far more valuable at inventing new ideas than coordinating the efforts of the people who do that. There's a very different set of talents required for leadership versus more individual contribution. It's very important that you recognize the distinction between the two and realize what each person is really best at.

Of course, it's also important to compensate accordingly if you really need someone in that non-leadership position.

TS: Some of Epic's most valuable people aren't in leadership roles.

Borderlands 2

These days, it feels like there are not very many people trying to push graphic fidelity forward. There are a lot of people who are more concerned with business models and things than they are with graphical fidelity and stuff. Do you feel some kind of pressure to push graphics in the next phase of game evolution? There's you, there's Crytek, DICE, possibly id... Who else is going to fight for graphics over convenience?

TS: Well sure, if you look at EA's DICE studio with Battlefield and Activision with Call of Duty, they're certainly making major investments in graphical quality. I think that's a general goal of the major Western developers -- at least developers of major shooter franchises -- to really push the graphical line.

It's an interesting distinction; when you talk to Asian developers, the overall focus is more on maximizing the customer experience than on maximizing the graphics. A lot of the companies out here are decades ahead of us in that area; every day they look at the stats of what users are doing, whether they're getting stuck, what things they're buying, what things they're not enjoying. They gather massive amounts of data and use it to tweak the games constantly and make them better on a daily basis. I think both of those methods have merit, and the ideal would be to do both of them.

I think that's going to be the interesting thing that happens when you see Western companies trying to move their big game franchises into a free-to-play model worldwide and coming into contact with the Asian companies who are moving their free-to-play games to the West; you get this big clash of production values versus customer experience optimization. That's going to push everybody to improve significantly. That's going to be quite an arms race because it means we need to learn different ways of making our games.

We can't come up with this grand vision for Gears of War, spend three years building it, and then see if customers like it. I'm exaggerating; we actually put a lot of effort into playtesting and getting customer feedback up front, but it's nothing like the scale of what happens in a game maintained by Tencent, for example.

I feel like, over the last five years, many companies have dropped the graphical fidelity and stopped trying to push graphics and have left it to the realm of blockbuster guys. Riot can make League of Legends look good enough, then have [such] a fantastic user experience that it doesn't matter. So I wonder if you consider yourselves guardians of graphics technology for the future, keeping graphics moving forward because you're trying to push the console makers, to some extent, through the chipsets they may have?

TS: Well, Epic's engine programmers and our artists really take it as a matter of pride that we want to have the best-looking stuff available, bar none, on every platform. If we're building a high-end PC game or a next-generation console game, we want to have the best graphics quality possible with however many teraflops are available. If we're building an iOS game, we want that to be the prettiest iOS game.

With every generation, the number of things you need to do right to succeed with your game increases. It can't just be a beautiful game; it also has to be a super fun game. It has to have great multiplayer. It has to have great sound and great controls. Now we're adding all of the user experience maximization on top of that. It's just getting more and more challenging to build a game, and we need to respond to that by growing in team size and really staying on top of all that the industry is trying to do. In the future, we can't just give up pushing graphics. That's not and never has been an option.

If Epic were the last company -- if Unreal Engine 4 or 5 or whatever were the last high-end graphics push in games -- would you continue pushing forward graphically if there were no competition?

TS: Sure. We always want to outdo ourselves regardless of where the competition is. The main goal is not to increase graphical quality by just throwing more money at the problem, but to do it intelligently by building better tools and technology that make it possible to do that efficiently. We've been very much focused on not competing by brute force all along.
