In the first part of a new analysis, high-end game audio veteran Rob Bridgett (Scarface, 50 Cent: Blood In The Sand) examines Skywalker Sound's mixing on the remastered Ghost In The Shell movie, then extrapolates to ask - is real-time mixing of sound effects, music and dialog in games an important part of the future of AAA game audio?

Over the last five or so years, interactive mixing has developed considerably. From basic dialogue ducking systems to full blown interactive mixer snapshots and side-chain style auto-ducking systems, there is currently a wide variety of mixing technologies, techniques and applications within the game development environment.

The majority of these systems are in their infancy; however, some are already building on several successful iterations and improving on usability and quality. I have believed for some time now that mixing is the single remaining area in video game audio that will allow game sound to articulate itself as fully as the sound design in motion-pictures.

As you'll see later in the article, I recently had the chance to sit in on a motion-picture final mix at Skywalker Ranch with Randy Thom (FX Mixer) and Tom Myers (Music and Foley Mixer) on a remix project for the original 'Ghost in the Shell' animated feature for a new theatrical release. I was able to observe first-hand the work-flow and established processes of film mixing, and I attempted to translate some of them over into the video game mixing process.

The comparison with film, and the possibilities it opens up for game audio, are especially relevant now that we are able to play back samples at the same resolution as film and to have hundreds of sounds playing simultaneously.

The current shortfall in terms of mixing technology and techniques has led to games not being able to develop and mature in areas of artistic application and expression. While examples in film audio give games a starting point at which to aim, it certainly is only a starting point, as many film mixing techniques can only translate so far once mapped onto the interactive real-time world of games.

Interactive mixing offers up a completely different set of challenges from those of mixing movies, allowing for the tuning of many more parameters per sound, or group of sounds, than just volume and panning.

Not only this, but due to the unpredictable interactive nature of the medium, it is entirely possible to have many different mixer events (moments) all occurring at the same time, fighting for priority and affecting the sound in unpredictable and emergent ways.

There are a whole variety of techniques required to get around these challenges, but in the end, and looking beyond all the technical differences in the two media, it all comes back to being able to achieve the same artistic finesse that is achieved in a motion picture sound mix.

To begin, I have chosen to highlight some of the techniques and concepts used in linear film mixing so we can quickly see what is useful to game sound mixing and how each area translates over to the interactive realm. But first, I should clarify a few simple mixer concepts that often get confused.

Fader - A software or hardware slider that is used to control the volume of a sound.

Channel - A single channel, representing the volume and parameters of a single sound, usually manifested by a fader, sometimes called a 'channel strip' when including other functionality above the fader such as auxiliary sends or EQ trim pots. (Not to be confused with a 'speaker channel', a term used to describe the source of a sound from a particular speaker: Left, Right, Centre, Left Surround etc.)

Bus / Group - This is the concept of a parent channel which has global control over the values of other child channels as a collective group. This is also usually represented by a fader, in software, mirrored in hardware, or both.

Mixer Snapshots - A 'snapshot' of all the volume and parameter positions and settings of an entire set of channels and buses, like a photograph of the mixing board capturing all its settings at any particular moment. Blending from one snapshot to another results in automated changes in these parameters over time.

Film Standards: Grouping

Perhaps one of the most basic concepts in mixing, grouping is the ability to assign individual sounds to larger controller groups. It's essential in being able to efficiently and quickly mix a movie or a piece of music.

In music, a clear example is the drum kit: the hi-hats, the kick-drum and so on are all mic'd separately. All of these individual channels are then pre-mixed and grouped into a single parent bus called 'drums'. When the 'drums' bus is made quieter or louder, all of the child channels belonging to it are raised or attenuated by the same amount.

In film this can be as simple as the channels for each track being routed through a master fader, or belonging to generic parent groups called 'dialogue', 'foley', or 'music'. In terms of video games, having a hierarchical structure with parent and child buses is absolutely essential, and the depths at which sounds can belong to buses often goes far deeper than in film mixes.

For example, a master bus would contain 'music', 'sfx' and 'dialogue' buses. Within the 'sfx' bus there would be 'weapons', 'foley', 'explosions', 'physics sounds' etc. Within the 'weapons' bus there would be 'player weapons' and 'non player weapons'; within 'player weapons' would be 'handgun', 'pistol', 'machine gun'; within 'machine gun' would be 'AK47', 'Uzi', etc. Within 'AK47' would be the channels 'shell casings', 'gun foley', 'dry fire', 'shot', 'tail' and so on.

These bus levels need to go much deeper because at any given moment in a mix you could need to control all of the 'sfx' parameters as a group, or all of the weapons parameters, or just the AK47, or just a single element of the AK47 such as the shell casings.
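The parent / child structure described above can be sketched in a few lines of Python. This is only an illustration: the `Bus` class and its `effective_gain` method are hypothetical names, not the API of Wwise, FMOD or any other engine, and gains are assumed to be simple linear multipliers where 1.0 means unity.

```python
class Bus:
    """A node in a parent/child bus tree (hypothetical, for illustration only)."""

    def __init__(self, name, gain=1.0, parent=None):
        self.name = name
        self.gain = gain          # linear multiplier, 1.0 = unity (an assumption)
        self.parent = parent
        self.children = {}
        if parent is not None:
            parent.children[name] = self

    def effective_gain(self):
        """Multiply this bus's gain by the gain of every ancestor up to the master."""
        gain = self.gain
        node = self.parent
        while node is not None:
            gain *= node.gain
            node = node.parent
        return gain


# Build part of the hierarchy from the example in the text.
master = Bus("master")
sfx = Bus("sfx", parent=master)
weapons = Bus("weapons", parent=sfx)
player_weapons = Bus("player weapons", parent=weapons)
machine_gun = Bus("machine gun", parent=player_weapons)
ak47 = Bus("AK47", parent=machine_gun)
shell_casings = Bus("shell casings", parent=ak47)
shot = Bus("shot", parent=ak47)

sfx.gain = 0.5            # pull down every sound effect as a group
shell_casings.gain = 0.5  # and additionally pull down one element of the AK47

print(shot.effective_gain())           # affected by the 'sfx' change only
print(shell_casings.effective_gain())  # affected by both changes
```

Because each bus only stores its own value, a change at any depth of the tree reaches every channel beneath it without touching the channels individually, which is the kind of control the deeper hierarchy is meant to provide.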

In film this is easier to do because you have frame by frame control over the automation of linear 'tracks' on a time-line, so at any moment you can decide the volume, EQ or pitch of any number of individual sounds on simultaneous tracks. In games, however, we are talking about many individually triggered events that can occur at any frame in the game.

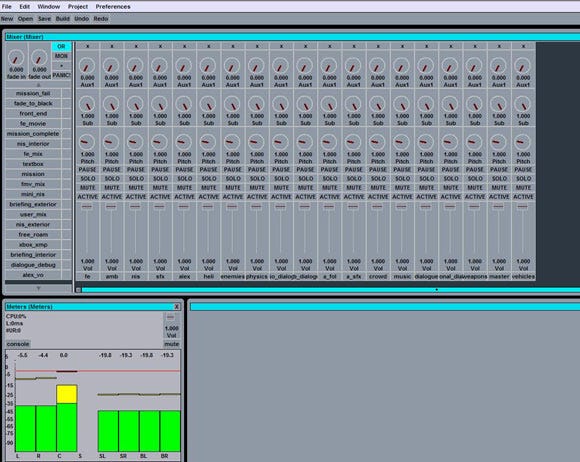

For a quick hands-on investigation into video game busing, third-party engines such as Audiokinetic's Wwise feature a very rounded and simple to use example of this 'parent / child' bus structure and can be quickly used to set up some complex routing allowing a great deal of control over the mix of large scale or very detailed elements. FMOD also has the concept of Channel Groups which act in much the same way.

Bus grouping in Wwise

Film Standards: Auxiliary Channels

These are extra channels, usually representing effects or different output paths such as headphone monitors, to which other designated channels can be routed. 'Aux channels' in film mixing usually get used to access expensive hardware reverbs or any number of digital or analogue effects units.

One important difference to note here between film and game mixing is that film mixing is done 'offline' in a studio, meaning the film is mixed, and then the mix is mastered or 'printed', meaning all the automation and effects are bounced down to a single final master version of the soundtrack to accompany the picture.

In a game, the mixing happens at run-time in a software engine and is happening every time the player actually plays the game. Because video game mixing can only ever rely on the software effects and DSP that ship with the game, software-based aux channels may be used to send the sound from a particular channel to a software reverb, also running in memory in real-time.

An aux send value in video game mixing software is very useful in being able to tune the amount of signal that is sent to a reverb, for 3D sounds for example, and tuning these values definitely falls under the category of 'mixing'.

Film Standards: Automation

Automation is an essentially linear media concept, but it can be mapped over to interactive realms in various forms. Put simply, automation is the recording of changes in the values of parameters of a particular channel over time, and as such can be used to record and play back volume or panning changes on a given channel strip. Going from one snapshot to another over time could be considered a low resolution form of automation.

If during a cutscene, for example, you wanted to change the level of the in-game ambience that is playing in the background at a particular moment, you could install a different mixer snapshot on a given frame of the movie which changes the value of the ambient in-game sound volume.

Mixer snapshots themselves also form a cornerstone of interactive mixing technology, allowing a preset of channel and bus levels to be installed and un-installed to coincide with generic or specific in-game events.

These mixer snapshots can be used to perform a whole range of mixing functions, from simple ducking, to complete change of sonic perspectives by manipulating pitch and distance fall-off values. They also form a fundamental part of the interactive mixing technology in today's third-party and proprietary tool-sets, and will be explored in more detail later.
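As a rough sketch of how blending from one snapshot to another produces automated parameter changes over time, the function below linearly interpolates every value between two snapshots. The name `blend_snapshots`, the dictionary representation and the linear curve are all assumptions made for illustration; a real tool may use different curves and data structures. Both snapshots are assumed to contain the same parameter names.

```python
def blend_snapshots(start, end, t):
    """Linearly interpolate each parameter from `start` to `end`.

    `t` is the blend position: 0.0 gives `start`, 1.0 gives `end`.
    Values outside that range are clamped.
    """
    t = min(max(t, 0.0), 1.0)
    return {name: start[name] + (end[name] - start[name]) * t for name in start}


# Hypothetical snapshots holding linear bus volumes.
gameplay = {"ambience": 1.0, "music": 0.5, "dialogue": 1.0}
cutscene = {"ambience": 0.25, "music": 1.0, "dialogue": 1.0}

# Halfway through the transition into the cutscene.
halfway = blend_snapshots(gameplay, cutscene, 0.5)
print(halfway)
```

Calling this once per frame with an increasing `t` gives the low resolution form of automation mentioned above: the ambience falls and the music rises over the duration of the blend.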

Film Standards: Standardization

Reference listening levels of 85dB are well established in motion picture mixing. This is a very important distinction from the music industry, which has no standards, and as a result music levels are completely varied from record to record.

The standard listening reference level in movies means, at least theoretically, that the movie is mixed at the same volume that it will be exhibited in the theatre, so the mixers can replicate that environment and make the best decisions about the sound levels based on their ears in that situation. It is because of these standards that we do not get motion picture soundtracks of wildly differing output levels.

Current video game output levels are much closer to the varied and sporadically different output levels of the music industry than those of film, so much so that a recommendation for a common reference listening level is clearly needed. My own thoughts are that this level should be in line with other 'home entertainment' media, such as TV shows or DVD remixes of movies and there already exists a standard reference listening level of 79dB for these media.

It makes sense that games, being a home entertainment medium, should adopt this output level in order to be on a par with DVD movies (which generally adhere to the 79dB level, just as theatrical mixes adhere to 85dB).

This also makes sense because DVDs and Blu-ray discs are more often than not played back on the very same consoles on which we play our games. The goal is that the consumer should not be constantly having to change the volume of their TV set or surround sound system to make up for the differences in levels from game to movie, movie to game and even game to game.

Film Standards: Internal Consistency

The notion of consistency in levels from the beginning of a film to the end may go without saying. However, in addition to the overall output level of the in-game sound, there is a great deal of internal consistency that game developers need to pay more attention to.

More often than not, in-game cutscenes will have different output levels from those of the in-game sounds, perhaps because they were outsourced and came in at different levels, at the last minute. Not only this, but they may also have completely different surround sound configuration or routing, choosing to send in-game dialogue to left and right speakers, while in the cutscenes, dialogue comes from the centre speaker only.

In film, this would be like reel one having different dialogue levels to reel two, and reel three having dialogue panned left and right, rather than center. Having an internal consistency to the mix of a video game, through all the different modes of storytelling and gameplay, is one of many areas that a full in-game mix pass on the content at the end of production can greatly improve.

It takes time, metering, a calibrated reference level listening environment, a good ear and very careful attention to detail to do this effectively.

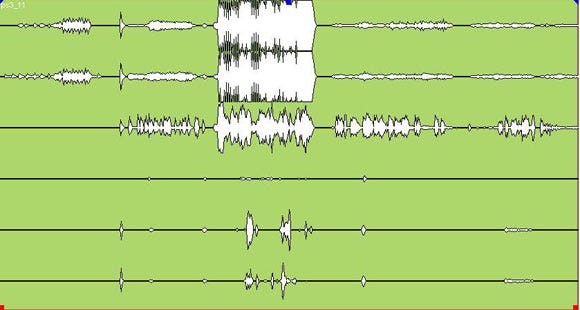

Above: An example of internal inconsistency in a video game: This 5.1 surround sound waveform from a recent next-gen title shows the Left, Right, Center, LFE, Left Surround and Right Surround channels from top to bottom over time from left to right -- the first section is a cinematic cutscene, the middle (louder) section an in-game battle, followed by a second cinematic cutscene.

Having examined some of the basic techniques and concepts of film mixing and how they can be mapped over to video game mixing, let's now examine some of the features that are currently specific to mixing video games.

Fall-off

On top of the basic mixing features that linear motion-picture dubbing mixers use, there are vast arrays of completely different techniques that are exposed by video game mixing engines. Some parameters that movie sound mixers don't get access to are the fall-off curves for sounds over 3D distance. These fall-off values, often also called 'max min distance', form as much a part of mixing as volume does.

In film, mixers are always trying to 'fake' the distance a sound is from the camera by using volume automation; with the fall-off values in a game, once these are tuned the volume raises and lowers itself automatically based on the position of the listener relative to the sound emitting object in the 3D space.

Other parameters that are automatically set up for 3D sounds in games are the amount of the sound that is sent to an environmental reverb depending on distance from the listener, again something that film mixers have to carefully fake via automation to aux reverb channels.
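A minimal sketch of a 'max min distance' fall-off follows. It assumes a straight linear curve between the two distances, whereas engines typically let you choose or draw other curve shapes. The function names are hypothetical, and the idea that the reverb send rises as the dry level falls is an assumption made only to illustrate a distance-dependent send.

```python
def falloff_gain(distance, min_distance, max_distance):
    """Linear fall-off: full volume inside min_distance, silent at or beyond max_distance.

    Assumes max_distance is greater than min_distance.
    """
    if distance <= min_distance:
        return 1.0
    if distance >= max_distance:
        return 0.0
    return 1.0 - (distance - min_distance) / (max_distance - min_distance)


def reverb_send(distance, min_distance, max_distance, max_send=1.0):
    """Send more signal to the environmental reverb as the source moves away (assumed behavior)."""
    return max_send * (1.0 - falloff_gain(distance, min_distance, max_distance))


# A sound with a 2 m minimum and 10 m maximum distance, heard from 6 m away.
print(falloff_gain(6.0, 2.0, 10.0))
print(reverb_send(6.0, 2.0, 10.0))
```

Once the two distances are tuned, nothing further needs to be automated: the volume and send follow the listener's position every frame, which is the part a film mixer has to fake by hand.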

At this point it is probably wise to break out two distinct branches of mixing technology and techniques that have emerged, 'passive' and 'active'. Garry Taylor, Audio Manager at Sony Cambridge, usefully delineated these two categories at a lecture he gave at the Develop conference in 2008.

Passive Mixing Techniques

Passive mixing covers those values which, once set up, attenuate parameters, volumes or filters of the content 'automatically'; I think of this as 'auto-mixing'. These techniques can either duck given channels by a particular fixed amount each time an event occurs, or can 'read' the volume amounts that are being played through a particular channel and attenuate the other channels by an equivalent amount (similar to side-chain compression used in radio broadcasts).

3D volume fall-off curves of positional sounds and occlusion filtering settings of 3D sounds all factor into passive mixing too. Passive mixing can produce more subtle volume attenuation, depending on how drastic the attenuation values are set and is, at its core, the setting up of rules and parameters that allow the system to react and work within those rules. These systems often get implemented and work well in first person shooter games, which have a single, unchanging point-of-view for the entire game.
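The two passive ducking approaches described above, a fixed amount per event and a side-chain style reaction to the level of another channel, might be sketched like this. Both functions are hypothetical and heavily simplified: levels are assumed to be linear values between 0.0 and 1.0, and there is no attack or release smoothing, which a real system would need to avoid audible jumps.

```python
def fixed_duck(gain, event_active, duck_amount=0.5):
    """Duck by a fixed multiplier for as long as the triggering event is active."""
    return gain * duck_amount if event_active else gain


def sidechain_duck(gain, key_level, threshold=0.25, depth=1.0):
    """Duck in proportion to how far the 'key' channel's level exceeds a threshold.

    `key_level` is the measured level of the channel being listened to,
    for example the dialogue bus. `depth` scales how strongly to react.
    """
    excess = max(0.0, key_level - threshold)
    reduction = min(1.0, excess * depth)
    return gain * (1.0 - reduction)


# Music ducked by a fixed amount while a dialogue event plays.
print(fixed_duck(1.0, event_active=True))

# Music ducked according to how loud the dialogue currently is.
print(sidechain_duck(1.0, key_level=0.75))
print(sidechain_duck(1.0, key_level=0.1))
```

In both cases the sound designer sets the rules (`duck_amount`, `threshold`, `depth`) once, and the system then reacts on its own within those rules.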

Active Mixing Techniques

This describes systems which allow greater control over sound parameters and the ability to completely override a passive system for a specific moment in time. These overrides often take the form of mixer snapshots in which parameters at the channel or bus level are redefined and then returned to normal once the event has finished.

Using these systems, a particular sound, or group of sounds, can be made deliberately louder or quieter at a particular moment, and this allows sound designers the ability to make artistic decisions about sounds outside of a passive system.

This allows for a very different point-of-view to be articulated from that defined by a passive system; changing filtering, pitch, reverberation, DSP values, or moving the listener position and changing any fall-offs outside of a notion of objective reality and more in line with cinematic sound design. These techniques are more likely to appear in games that seek to emulate 'Hollywood' sound design or have special 'sound moments'.

Some developers only need to use passive systems, and this is usually because their games only require a single point-of-view that has no need for any kind of overrides to their model of 'reality'. However, all games are different, and a combination of a passive system with an active system offers deeper creative control and opportunity for aesthetic use of sound in video games: the loudest sound isn't necessarily always the most important.

This is not to say that a passive system cannot be artistically tweaked or weighted, but that there exist greater possibilities for sound perspective manipulation and design by introducing elements of an active system.

Video game mixing then, can make use of a combination of both of these systems in real-time: systems of carefully defined rules and parameters (passive), as well as deliberate overrides of the mix for 'sound moments' based on special game events (active).

Taking Snapshots Deeper

Snapshots, while simple to understand on one level, often require several different kinds of pre-defined behaviors once implemented in order to achieve the desired effect in the mix. At Radical we have found it useful to have the following three kinds of behaviors associated with snapshots:

Default Snapshots (only one installed at a time, used to set all the default levels of the various groups)

Multiplier or 'ducking' Snapshots (Several of these can be installed at any one time, when added to a default snapshot these create a ducking effect)

Override Snapshots (Event Snapshots) (only one at a time, these completely override any default or ducking snapshots that may be installed for the duration of an event)

Individual channels and buses themselves eventually need further defined behaviors when using multiplier (or 'ducking') snapshots, because by their very nature, when multiple ducking snapshots occur at the same time (say, because a dialogue event and an explosion coincide), more attenuation than desired is created as they multiply together.

When this occurs, controller values on each channel or bus need to be set to define the maximum amount by which that channel can be attenuated. By also assigning priority to the snapshots, you allow special cases to behave in the ways that the mixer intends.
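To make the interaction of the three snapshot behaviors concrete, here is a small sketch of how one bus's gain could be resolved. It is not Radical's implementation: the function name, the dictionary layout and the `floors` table (the lowest fraction of its default level a bus may be ducked to) are assumptions, snapshot priority is not modeled, and a bus that the override snapshot does not mention is assumed to fall back to its default level.

```python
def resolve_bus_gain(bus, default, duckers=(), override=None, floors=None):
    """Resolve the gain of one bus from default, ducking and override snapshots."""
    # An installed override snapshot replaces default and ducking snapshots entirely.
    if override is not None:
        return override.get(bus, default[bus])

    base = default[bus]
    gain = base
    # Ducking snapshots multiply together, so their attenuation stacks.
    for snapshot in duckers:
        gain *= snapshot.get(bus, 1.0)

    # Clamp so the bus is never ducked below its allowed fraction of the default.
    floor = (floors or {}).get(bus, 0.0)
    return max(gain, base * floor)


default = {"music": 1.0, "sfx": 1.0, "dialogue": 1.0}
dialogue_duck = {"music": 0.5, "sfx": 0.5}
explosion_duck = {"music": 0.5}

# Two ducking snapshots at once: more attenuation than either intended.
stacked = resolve_bus_gain("music", default, [dialogue_duck, explosion_duck])

# The same situation with a limit on how far 'music' may be attenuated.
limited = resolve_bus_gain(
    "music", default, [dialogue_duck, explosion_duck], floors={"music": 0.4}
)

# An override snapshot for a special event ignores the ducking entirely.
event = resolve_bus_gain(
    "music", default, [dialogue_duck, explosion_duck], override={"music": 0.0}
)
print(stacked, limited, event)
```

The `stacked` case shows the problem described above, with two 0.5 multipliers compounding, and the `limited` case shows the per-bus maximum attenuation catching it.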

More Than Just Volume

Snapshots can be used to alter parameters beyond regularly associated notions of mixing such as amplitude and panning. By changing values like pitch, LFE send, 3-D fall-off values, speaker assignment or listener position, greater creative opportunities can be opened up.

Potentially any parameter associated with a sound could be changed by using a mixer snapshot. Because snapshots are a form of automation, any of the game parameters per sound or sound group can be manipulated. There is wide scope to allow a mixer snapshot system much more control than is currently available in most audio tools; how far this will or should go is really up to the needs of the developers.

In June 2008 I had the chance to sit in on a motion-picture final mix at Skywalker Ranch with Randy Thom (FX Mixer) and Tom Myers (Music and Foley Mixer) on a remix project for the original 'Ghost in the Shell' animated feature for a new theatrical release. I was able to observe first-hand the work-flow and established processes of film mixing and I attempted to try and translate some of them over into the video game mixing process.

The majority of technical ideas I have described above in the 'Film Standards' sections translate over to game mixing as they have been described. Yet some of the more interesting elements of mixing, the artistic and aesthetic challenges, ideas and working practices, also map over onto games in interesting ways, depending of course on the game type or genre. Here are the three cornerstones of movie mixing aesthetics that Randy and I discussed and how I see them translating over to video games.

1) Rule of 100%

Randy talked about the rule of 100%, whereby everyone who works on the soundtrack of the film assumes it is 100% of their job to provide the story content for the film. So the composer will go for all the spots they can, as will the dialogue, and the same goes for the sound editors.

When it comes to the mix, this often means that there is little room for any one particular element to shine with each element hitting each moment with the same amount of sound. This means severe mixing decisions have to be made, and often results in elements of the mix, such as the music, being turned right down.

In more successful movies, collaboration is present, or composers decide that it is fine to just drop certain cues etc. When Randy is mixing, he wears the mixer's hat, and is at the service of the story and the film; in this mode he often makes decisions to get rid of sounds that he has worked very hard on (what sound designer Dane Davis also refers to [via Ingmar Bergman quoting Faulkner] as learning to 'Kill your darlings').

Sometimes mix ideas about particular scenes are talked about early with the director, at the script stage and Randy tends to work this way with Robert Zemeckis. However, not enough directors consider sound in pre-production and often end up with the 100% situation and a lot more decisions, and compromises, to make at a final mix, resulting in lots of chaotic sound moments and conflicts to figure out.

This certainly is very familiar in games. Music can, for the most part, be constant in video games, perhaps to an even greater degree than in film, particularly during elongated action sequences. Given that missions and prolonged action battles can last for a very long time (30-60 minutes of game-play), being able to create, or work with the mission designers to figure out, dynamics maps or action maps of where the pace or excitement needs to peak and trough is a very fruitful and useful exercise.

2) Changing Focus & Planning for Action

Randy talked about mixing as being a series of choices about what to hear at any particular moment; it is the graceful blending from one mix moment to the next that constitutes the actual mix.

These decisions come from the story and what is important for the audience at any particular moment. He mentioned that cinema with deep focus photography often made things easier to 'focus' on with sound, as in the recent Pixar movie Ratatouille.

In action movies, particularly those with long, drawn-out action scenes, it becomes difficult to go from music to effects constantly, especially if in the script there is no let-up of action to allow the sound to take a break.

We talked about the extended chase scene in The Bourne Ultimatum as being a good example of handling this well by having an extended sequence with no music and dropping out various sounds at various times. This comes from the scene being well written for sound and by extension having a clear path for the mix.

Randy also cites Spielberg as being good for examples of how to use sound and a mix well. Often the arrival of the T-Rex in Jurassic Park is mentioned to him as an effect that a director wants to emulate, yet they rarely realize there is no music in this scene.

Directors, however, often go to music first to try and achieve the emotional effect. The opening moments of Saving Private Ryan are similarly cited as an effect that directors want to achieve in sound; again, there is no music in this opening sequence. Knowing when not to use music seems to be a big and often brave decision to take at the writing stage of development; however, deciding to drop cues can also work at a final mix.

Again, less is more, and dynamics maps for levels and missions are an essential way to begin to create moments that can exist without music for extended periods, rather than a single wall of noise that occupies the entire spectrum of perceivable sound and that ultimately fatigues the player.

3) Point-of-View

This is a topic that Randy Thom is passionate about, and for him, epitomizes the role of sound in being able to tell the story of the movie. It is also a concept that translates over to games with very little modification. Effectively everything that the audience, or player, hears is heard through the ears, or 'point-of-view', of a character in the film or game.

This means that sounds are going to be heard 'subjectively' according to that character. Sounds that are more important to that character are going to be more important in the mix.

Fictional characters, like real human beings, never hear things 'objectively' - that is to say that all sounds are never of equal importance all of the time. Focus is always changing, just as you notice the ambience of a room when you first encounter it, but then different sounds will become prominent and gain your attention, such as someone speaking or a telephone ringing.

The ambience never disappears, but it is pushed to the back of our perception, any change in ambience may bring it to the foreground again for a moment. This is true in both our perceived version of reality and also in the version of reality portrayed through sound for a character in a movie.

This subjectivity is a core goal for a movie or game mix, and this is why point-of-view sound design and direction is more valuable than attempting to create an objective sound reality based solely on the laws of physics.

This is an area I feel can be more deeply investigated for video games, specifically in regard to mixing. Subjective sound effects design is of course used in video games all the time: larger than life weapon sounds and cinematic punch sounds are all 'point-of-view' effects.

Designers and producers want the player to 'feel' that what they are doing in the game is incredible and larger than life, whether role playing as another character in a third-person action adventure setting, or power playing as your own creation in a first-person shooter. Exaggeration and the twisting of reality for dramatic effect is present in the sound design of every single video game, and the whole point-of-view challenge culminates with the game mix.

Great examples are often quoted from films that at first have ambience and establishing audio information in a scene, these sounds are then mixed down low, or out completely, in order to concentrate on very intimate sounds or on a single sound source that could be miles away from the character in the movie.

These moments are conceived by the director at the script stage, and are more often than not orchestrated almost purely through the mix. It is the emulation of these kinds of moments in games that has yet to be artistically demonstrated with the same finesse and polish as occurs in motion picture sound.

The reason is probably partly that the technology is only just emerging, but mainly that these moments are not well enough designed beforehand with sound in mind as an agent of tension, emotion and character.
