
The audio director on open world game Prototype 2 shares a crucial revelation about how to create a consistent soundscape for his game across all different sections -- and explains in depth how he achieved that great mix.


Mixing any genre of game is a deeply logistical, technical, and artistic undertaking, and figuring out an overall approach that is right for your specific game is a challenging endeavor. I have been responsible for mixing on five titles here at Radical: Scarface: The World Is Yours, Prototype, 50 Cent: Blood on the Sand, Crash: Mind over Mutant and Prototype 2, and while we used similar technology on each title, each had unique requirements of the mix to support the game.

Sometimes this meant emphasizing the "point of view" of a character, sometimes destruction, and sometimes narrative and storytelling. As our tools and thinking become more seasoned (both here at Radical internally, and in the wider development community within both third party and proprietary tools), it is becoming apparent that, as with any craft, an overall approach to the mix develops the more you practice it.

With Prototype 2, we were essentially mixing an open-world title based around a character who can wield insane amounts of devastation on an environment crammed with people and objects at the push of a button.

The presentation also focused a lot more on delivering narrative than the first Prototype game, so from a mix perspective it was pulling in two different directions; one of great freedom (in terms of locomotion, play style, destruction), and one of delivering linear narrative in a way that remained intelligible to the player.

While this was a final artistic element that needed to be pushed and pulled during the final mix itself, there were other fundamental mix related production issues that needed to fall into place so we could have the time and space to focus on delivering that final mix.

This brings me to one of the main challenges for a mix, and something that can be said to summarize the issues with mixing any kind of project: the notion of a consistent sound treatment from the start of the game to the end, across all the differing presentation and playback methods used in development today. Though it may seem obvious to say consistency is at the heart of mixing any content -- be it music, games or cinema -- it is something that is very easy to lose sight of during pre-production and production. Still, it ended up being the guiding principle behind much of our content throughout the entirety of our development, and this culminated in the delivery at the final mix.

Knowing that all content will eventually end up being reviewed and tuned in the mix stage is something that forces you to be as ready as you can be for that final critical process of decision making and commitment. It comes down to this: You want to leave as many of the smaller issues out of the equation at the very end, and focus on the big picture. This big-picture-viewpoint certainly trickles down to tweaking smaller detailed components, and generating sub-tasks, but the focus of a final mix really shouldn't be fixing bugs.

This mindset fundamentally changes the way you think about all of that content during its lifespan from pre-production through production, and usually, as you follow each thread it becomes about steering each component of the game's soundtrack through the lens of "consistency" towards that final mix. Overseeing all these components with the notion that they must all co-exist harmoniously is also a critical part of the overall mix approach.

Consistency is certainly a central problem across all aspects of game audio development, whether it is voiceover, sound effects, score, or licensed music, but it comes to a head when you reach the final mix and these previously separated components suddenly arrive together for the first time, often in an uncomfortable jumble.

You may realize that music, FX, and VO all choose the same moment to go big, that cutscenes are mixed with a different philosophy to that of the gameplay, or that the use of different studios to record the actors for different sections of the game is now becoming a jarring quality issue because of their context in the game. The less work you have to do to nudge these components into line with the vision of the overall mix, the better for those vital final hours or weeks on a mix project.

For the first time, in writing this postmortem, I have realized that there are two distinct approaches for mixing that need to come together and be coordinated well. There are several categories of assets that are going to end up "coming together" at the final mix. These usually form four major pre-mix groups:

Dialogue

Music

In-Game Sound Effects

Cutscenes

Here is the realization bit: Every piece of content in a video game soundtrack has two contexts, the horizontal and the vertical.

Horizontal context is what I think of as the separate pre-mix elements listed above that need to be consistent with themselves prior to reaching a final mix. Voiceover is a good example, where all speech is normalized to -23 RMS (a consistent overall "loudness"). This, however, only gets you so far, and does not mean the game's dialogue is "mixed".
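As a rough illustration of that kind of horizontal pass, the sketch below measures the RMS level of a clip and works out the gain needed to bring it to a target. It assumes "-23 RMS" means -23 dBFS RMS on floating-point samples where full scale is 1.0; the function names are hypothetical and not part of any tool described here.

```python
import math


def rms_dbfs(samples):
    """Return the RMS level of float samples (full scale = 1.0) in dBFS."""
    if not samples:
        raise ValueError("cannot measure an empty clip")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence has no finite level
    return 10.0 * math.log10(mean_square)


def gain_to_target_db(samples, target_db=-23.0):
    """Gain in dB that would move the clip's RMS level to target_db.

    The -23.0 default is the figure quoted in the text; treating it as
    dBFS is an assumption.
    """
    level = rms_dbfs(samples)
    if level == float("-inf"):
        raise ValueError("cannot normalize a silent clip")
    return target_db - level


def apply_gain(samples, gain_db):
    """Return a new list of samples scaled by gain_db."""
    factor = 10.0 ** (gain_db / 20.0)
    return [s * factor for s in samples]
```

A clip treated this way is only level-matched against its siblings; it says nothing about how the line sits against music or effects, which is the vertical question.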

Vertical context is the context of a particular moment, mission, or particular cutscene which may have different requirements that override the horizontal context. It is the mix decision that considers the context of the dialogue against that of the music and the sound effects that may be occurring at the same time. There can be many horizontal mixes, depending on how many different threads you wish to deem important, but thankfully, there is only one vertical mix, in which every sound is considered in the context(s) in which it plays.

There is an optimal time to approach the mix of each of these at different points in the production cycle.

Horizontal consistency is the goal of pre-production, production and pre-mixing (or "mastering").

Vertical consistency is the goal of the final mix, where missions and individual events should be tweaked and heard differently depending on what else is going on in those specific scenarios.

I will discuss each of these in terms of their lifespan through production, and trajectory towards the final mix, and then summarize by talking a little about how the final mix process itself was managed.

A large part of the consistency puzzle for the mix was on the voice content side, and this was solved by choosing a single dialogue director / studio for all our major story voice assets. Rob King at Green Street Studios did an incredible job at championing a consistent direction, approach and energy level from the actors, not to mention the invaluable technical consistency of using the same mics and pre's wherever we recorded.

This "pre-pre-mix" work was the ideal foundation upon which to get the dialogue sounding absolutely consistent and dynamic from in-game to cutscene (Rob handled both story and in-game dialogue).

All the non-radio-processed dialogue was dropped straight into the game from the recording sessions -- no EQ, no normalization, just as clean as we heard it in the sessions. Rather than having all the dialogue maximized and compressed before it went into the game, we decided to simply mix around the dynamic and RMS levels of the VO recordings using the in-game mixing engine, without the need of obliterating the WAV assets with digital limiters.

This also ensured that the dynamic range was consistent in the recordings and it is something that I feel comes through clearly in the game. This approach catered well to the horizontal consistency across the dialogue within the whole game.

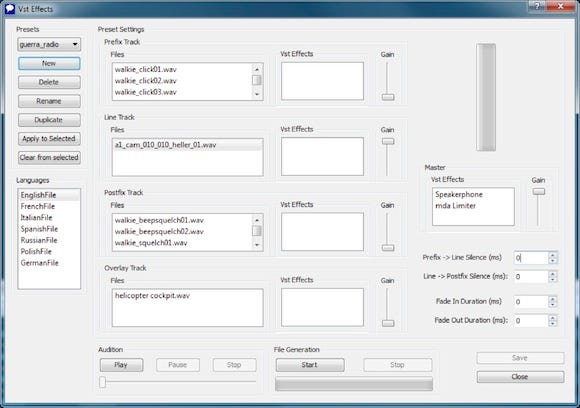

Because of this, whenever we recorded a pickup session, the new content sounded as though it had been a part of the exact same original session. Radio processed dialogue was handled in-house via the dialogue build pipeline (fig 1), which incorporated VST chain processing as part of the dialogue build.

This also helped ensure consistent VST presets were used across in-game and cutscene dialogue, as I could easily use the exact same speakerphone etc. presets while building the cutscene sessions as the ones I'd used in the dialogue tools. It also ensured that all localized assets had exactly the same processing as the English version, so there was no pressure on our localization team to re-create the sound of our plug-in processing chains on their localized delivery.

Fig 1. The VST Radio Processing Pipeline in our dialogue tools allowed consistency between not only cutscenes and in-game dialogue (by using the same presets), but also consistency between all the localized assets and the English.

During the final mix, a lot of the dynamic mixing in the game is focused on getting the mid-range sound effects, and to a lesser extent music, out of the way of pertinent mission-relevant dialogue. In terms of vertical consistency, we employed a quickly implementable three-tier dialogue ducking structure.

For this, we had three different categories of dialogue ducking for different kinds of lines in different contexts. Firstly, a "regular" dialogue duck, simply called "mission_vo", which reduced fx and music sufficiently to have the dialogue clearly audible in most gameplay circumstances.

Secondly, a more subtle ducking effect called "subtle_vo", which very gently ducked out sounds by a few dB and was barely noticeable. This was usually applied in moments when music and intensity were generally very low and we didn't want an obvious ducking effect applied.

Finally, we had an "xtreme_vo" (yeah, we spell it like that!) snapshot, which catered for all the moments when action was so thick and dense, music and effects were filling the spectrum and the regular VO duck just wasn't pulling enough out for lines to be audible. Between applying these three dialogue-centric ducking mixers, we could mix quickly for a variety of different dialogue scenarios and contexts without touching the overall levels of the assets themselves.
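The three tiers can be pictured as named sets of per-bus gain offsets. The sketch below is a minimal illustration only: the snapshot names come from the text, but the bus names and every dB value are hypothetical placeholders, and this is not how AudioBuilder stores or applies snapshots.

```python
# Hypothetical per-bus gain offsets in dB for each ducking tier.
# Only the snapshot names are taken from the article.
DUCK_SNAPSHOTS = {
    "subtle_vo": {"sfx": -2.0, "music": -2.0},
    "mission_vo": {"sfx": -6.0, "music": -4.0},
    "xtreme_vo": {"sfx": -12.0, "music": -9.0},
}


def apply_snapshot(bus_levels_db, snapshot_name):
    """Return new bus levels with the named snapshot's offsets added.

    Buses the snapshot does not mention (for example the dialogue bus)
    are left untouched, and the input mapping is not modified.
    """
    offsets = DUCK_SNAPSHOTS[snapshot_name]
    return {
        bus: level + offsets.get(bus, 0.0)
        for bus, level in bus_levels_db.items()
    }
```

Because the offsets are applied to buses at runtime, the underlying assets keep their original levels, which is the property the three-tier structure relies on.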

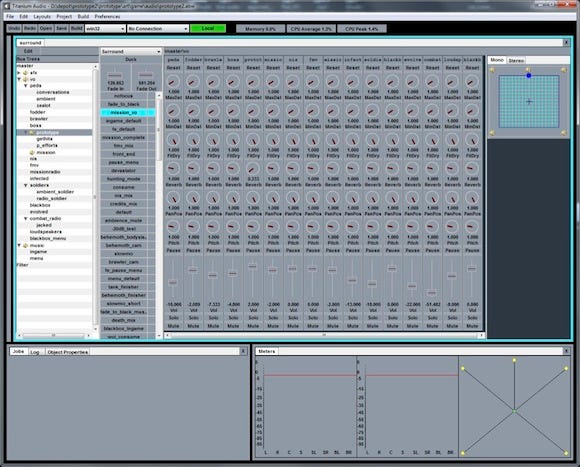

Fig 2. AudioBuilder: An example of the state-based dialogue ducking mixer snapshot used to carve out space in the soundtrack for dialogue. Also provides a good general look at our mixing features. Bus-tree hierarchy on the left, snapshots highlighted in blue to the left, fader settings in the center, meters below and panning overrides to the far right.

Scott Morgan explains the music mixing process:

The music mixing process consisted of first organizing and arranging the original composition's Nuendo sessions into groups to be exported as audio stems. Stems generally consisted of percussion, strings, horns, synths, string effects and misc effects. Because the score was originally composed with MIDI and samples or synths, each group was rendered to a 24-bit, 48kHz stereo audio file that was imported back into the session for mixing purposes.

Each section of music that corresponded to an event in the game was individually exported, stem by stem. Rendering the MIDI/sample source out into sample content allowed for additional fine tuning with regard to EQ and volume automation per group and also allowed for fine tuning of the loop points to smooth out any clicks or pops that would have been present in the premixes. Sessions were prepped late in the day before mixing the next morning in the mix studio. A typical morning would see the mix of 2-4 missions worth of music, depending on their complexity.

One mission's worth of music was mixed at a time. A mixing pass consisted of first adjusting the individual layers to predetermined levels and applying standard EQ settings on each stem (e.g. a low shelf on the percussion to bring down everything below 80Hz by about 4dB). Once this balancing pass was done, each section got specific attention in terms of adjusting the relationship between the stems. This was done to bring out certain aspects of the music and allow it to breathe a bit more dynamically.
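To give a feel for what a low shelf of that kind does, here is a sketch using the widely published "Audio EQ Cookbook" biquad formulas. The 80 Hz corner, -4 dB gain and 48 kHz sample rate come from the text; the shelf slope of 1.0 is an assumption, and the actual EQ plug-in used in the Nuendo sessions is not stated.

```python
import cmath
import math


def low_shelf_coefficients(freq_hz=80.0, gain_db=-4.0, sample_rate=48000.0,
                           slope=1.0):
    """Biquad low-shelf coefficients (b0, b1, b2, a0, a1, a2).

    Follows the Audio EQ Cookbook low-shelf formulas. slope=1.0 is an
    assumed setting, not something taken from the article.
    """
    a = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * freq_hz / sample_rate
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / 2.0 * math.sqrt(
        (a + 1.0 / a) * (1.0 / slope - 1.0) + 2.0)
    k = 2.0 * math.sqrt(a) * alpha

    b0 = a * ((a + 1.0) - (a - 1.0) * cos_w0 + k)
    b1 = 2.0 * a * ((a - 1.0) - (a + 1.0) * cos_w0)
    b2 = a * ((a + 1.0) - (a - 1.0) * cos_w0 - k)
    a0 = (a + 1.0) + (a - 1.0) * cos_w0 + k
    a1 = -2.0 * ((a - 1.0) + (a + 1.0) * cos_w0)
    a2 = (a + 1.0) + (a - 1.0) * cos_w0 - k
    return b0, b1, b2, a0, a1, a2


def response_db(coefficients, freq_hz, sample_rate=48000.0):
    """Magnitude response of the biquad at freq_hz, in dB."""
    b0, b1, b2, a0, a1, a2 = coefficients
    z1 = cmath.exp(-1j * 2.0 * math.pi * freq_hz / sample_rate)  # z^-1
    h = (b0 + b1 * z1 + b2 * z1 * z1) / (a0 + a1 * z1 + a2 * z1 * z1)
    return 20.0 * math.log10(abs(h))
```

Evaluating `response_db` at a few frequencies shows the full cut only well below the corner, with the response returning to unity gain towards the top of the spectrum.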

At the end of each mixing session, each section was rendered out and these mixes were then used to replace the existing premixes in the game. The music was then checked in the context of the mission itself and crudely balanced against the sound effects and dialogue. This mission was now considered pre-mixed and ready for a final contextual game mixing pass.

The in-game sound effects were all handled in-house by either Scott Morgan, myself, or Technical Sound Designer Roman Tomazin. The nature of our mixing and live tuning tools means that we are able to work with sound effects from creation to implementation very quickly by getting them into the game (live sound replacement on the PC engine build of the game), and being able to tune the levels while the game is running.

This has enormous implications and advantages for the final mix, as most of the process of designing a sound effect in the game involves pre-mixing it directly into the context of either a mission or a section of the open-world (as any sound is added it must be assigned to a bus in the mixer hierarchy). You would never submit anything that was too loud or didn't bed into the context in which it was designed. This means that 95 percent of the mixing and balancing work (both horizontal and vertical) is already done by the time you get to the final mix.

Having that real-time contextual information and control at the time you are creating the sound effect assets is absolutely invaluable for maintaining quality during production, giving ownership over the entire process to the sound designer, and also helping the consistency of the entire sound effects track of the title.

Bridging the gap between the sound design of the in-game world and that of the cutscenes is often a challenge in the realm of consistency. We chose to handle the cutscenes in-house and their production took shape on the same mix stage that we would eventually use to mix the final game. Handling the creation and mix of the cutscenes alongside the mix of the actual game itself had distinct benefits.

Using almost exclusively in-game sound effects as the sound design components for the movies themselves, from backgrounds to weapons, HUD sounds and spot-effects, solidified these two presentation devices as consistent, even though visually, and technically, they existed in very different realms.

The cutscenes were pre-mixed during production and received a final mix just prior to the final mix of the actual game. Overall levels of cutscenes were treated as a consistency priority so that once the level was set for the cutscenes in the game (a single fader in our runtime hierarchy), it need not change from movie to movie, allowing us to use only one kind of mixer snapshot for any Bink-based movie that plays in the game.

Whenever a cutscene plays, we install a single mixer snapshot which pauses in-game sound and dialogue as well as ducking the levels down to zero, with the exception of hud sounds and the bus for the FMV sound itself. There was almost no vertical mixing to be done on the FMV cutscenes once they had been dropped into context, as their dynamic range matched the bookends of the missions pretty well, usually by starting loud, subtly becoming quieter for dialogue, and then building towards a crescendo again at the end of each scene. This worked well with the musical devices (endings) employed in-game to segue into the movies.

NIS scenes, or cutscenes that used the in-game engine, had a slightly different approach on the runtime vertical mix side of things. As the game sound was still running while the scenes played, within different contexts in different parts of missions, we often needed to adjust the levels of background ambience, or music running in the game depending on how much dialogue was present in the scene. For each one of these sequences we had an individual snapshot, which allowed us to have specific mixes for each specific scene and context.

So, how did this all come together for the vertical final mix? We are incredibly fortunate to have an in-house PM3 certified mix stage in which to do our mix work on the game. This is incredibly valuable not only during the final mix phase of post-production, but also, as previously alluded to, in the creation of the cutscene assets and the pre-mixing of the music. The room is central to our ability to assess the mix as we develop the game and provides touchstones at various key milestones.

Essentially we try to think about the vertical areas of the mix at major milestones, rather than leaving it all to the end. Because of this, the overall quality of the game's audio track is center stage throughout our entire production process because we can listen to, and demonstrate, the game there at any time to various stakeholders.

The play through / final mixing process was essentially a matter of adjusting levels 'in context'. Even though all dialogue was mastered to be consistent at -23 RMS, the context in which the lines played (i.e. against no music, or against high intensity battle music) dictated the ultimate levels at which those files needed to play back. Similarly with the music and fx, the context drove all the mixing and adjustment decisions of each music cue.

With all our assets, mastering/pre-mixing on the horizontal scale got us 75 percent of the way there, and individual contextual tweaking (vertical) got us the rest of the way. One would not have been possible without the other. The final "mix" phase was scheduled from the outset of the project, and took a total of three weeks.

Our state-based mixer snapshot system really got pushed to its limits on this title. There were several areas where multiple ducking snapshots being installed at the same time created bugs and problems, such as in the front end, which was a ducking snapshot, when a line of mission dialogue was also played. Transitions between different classes of mixers such as 'default' to 'duck' were also somewhat unpredictable and caused 'bumps' in the sound.

A combination of new user-definable behaviour for multiple ducks, side chaining and default snapshots will be a future focus for us, as we try to handle the overall structural mixing (such as movie playback, menus, pausing and various missions) with active snapshots, and the passive mixing with auto-ducking and side chaining.
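To make the multiple-duck problem concrete, the sketch below shows two possible ways overlapping ducking snapshots could be combined per bus. It is purely illustrative: the policy names, bus names and dB values are hypothetical, and it does not describe the current or planned behaviour of our tools.

```python
def combine_ducks(active_snapshots, policy="deepest"):
    """Combine per-bus dB offsets from several active ducking snapshots.

    policy="sum"     stacks every offset, so two ducks attenuate twice.
    policy="deepest" keeps only the largest attenuation seen per bus.
    Both policies are hypothetical examples of a user-definable rule.
    """
    if policy not in ("sum", "deepest"):
        raise ValueError("unknown policy: " + policy)

    combined = {}
    for offsets in active_snapshots:
        for bus, offset_db in offsets.items():
            if bus not in combined:
                combined[bus] = offset_db
            elif policy == "sum":
                combined[bus] += offset_db
            else:
                combined[bus] = min(combined[bus], offset_db)
    return combined
```

With a front-end duck and a dialogue duck active together, the "sum" policy pulls the shared buses down twice, which is one way an unintended drop in level could arise, while "deepest" caps the attenuation at the stronger of the two.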

As it is, the entire mix for the game was done using state-based snapshots, so everything from dialogue to different modes (such as hunting), was handled in this way. The complexity of handling an entire game via this method also became evident as there were almost too many snapshots listed to be able to navigate effectively (an unforeseen UI issue in the tools).

Having said this, the system we have developed is intuitive, robust and allows deep control, having snapshots not only handle gain levels, but panning (even the ability to override the panning of 3D-game objects), environmental reverb send amount, high and low pass filters, and fall-off distance multipliers, all dynamically on a per bus basis. This gave us a huge amount of overall control of the sound in the game, so that rather than being an unwieldy out-of-control emergent destruction-fest, the big moments in game play could be managed quite efficiently and allowed a clear focus on 'the big picture consistency' for the entirety of the final mix.

Panning was very important in respect to consistency, and something we have seen many AAA titles treat irreverently (although probably not deliberately). Dialogue is consistently center only, with the exception of positional pedestrian or soldier dialogue. Music remains Left/Right only, ambience quad, and hud is center. No matter what presentation device is used, the same panning map is applied.

This is also reflected in the way we approached audio compression, ensuring that the overall fidelity of the audio in cutscenes matched that of the in-game dialogue, etc. as closely as we could achieve. Our goal of consistency also carried across between the two major platforms. The Xbox 360 and PlayStation 3 mixes, when run side by side, are identical, and even though the PS3 sounds a little different because of our use of mp3 compression over XMA, mix levels and panning are absolutely consistent across the two platforms. This was a major achievement in terms of meeting our primary goal.

Following recommended loudness guidelines, and measuring via the NuGen VIS-LM Meter, we initially aimed for -23 ITU for all areas of game play. However, we soon discovered that, while it made sense for cut scenes and some quiet missions, this felt too quiet and inhibiting for our title overall, particularly for the intense action sections of the game.

The long term metering expressed in the ITU spec was developed for broadcast program content, and suits 'predictable' program content usually around one hour or half an hour in duration, and in this respect a single long-term number can't be applied as easily to video games that have indeterminate lengths and unpredictable content.

The strategy we adopted wasn't a conscious one, but more an observation based on what sounded good. We noticed different sections of the game naturally pooling into different loudness ranges by simply mixing to what sounded right. We noted a 'range' of long term loudness measurements which can be anywhere between -13 and -23 LU, based on the nature of the action.

Story cutscenes: -23

In-game cut scenes: -19

Background ambience: -23

'Talky' missions, or 'stealth' missions: between -23 and -19 depending on context

Action missions: -19

Insane action missions: -13

These numbers are what we are calling, for now, our "Long Term Dynamic-Range", a grouping of loudness measurements that apply to certain types of game play or presentation elements, in our case -13 to -23. In essence I think what is required here is a method of measuring all these different aspects of the game within a short time window, around 30 mins to make a single overall loudness measurement useful. This is not always an easy approach when the game isn't structured in that way.
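The ranges listed above can be held as a small lookup table and used to check how far a measured section sits from its expected range. This is only a sketch: the numbers are those given in the list, while the dictionary keys and the function name are hypothetical.

```python
# Observed long-term loudness per section type, as (low, high) pairs.
# Values are taken from the list above; the key names are illustrative.
LOUDNESS_RANGES = {
    "story_cutscene": (-23.0, -23.0),
    "in_game_cutscene": (-19.0, -19.0),
    "background_ambience": (-23.0, -23.0),
    "talky_or_stealth_mission": (-23.0, -19.0),
    "action_mission": (-19.0, -19.0),
    "insane_action_mission": (-13.0, -13.0),
}


def loudness_deviation(section_type, measured):
    """Return how far a measurement falls outside its section's range.

    0.0 means inside the range, a positive value means louder than the
    top of the range, and a negative value means quieter than the bottom.
    """
    low, high = LOUDNESS_RANGES[section_type]
    if measured < low:
        return measured - low
    if measured > high:
        return measured - high
    return 0.0
```

A check like this would only ever be a sanity test alongside listening, since the ranges were arrived at by ear in the first place.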

Even when a game is perfectly balanced, it can be ruined when the player has a poor audio setup. There isn't much we can do as content creators to accommodate every different home audio system, other than turn up at their homes and offer to calibrate it for them, but we can offer a couple of listener modes that address the common problems associated with the differences between "high end" home-theater playback systems and more humble speaker systems.

This problem is apparent here at work too, as many of our meeting spaces and offices display wildly varying degrees of speaker abuse (comparing speakers to scolded children, stuffed in the corner facing the wall or behind a monitor, would not be too far an analogy to describe the way these objects are treated). So, this is the first time we have introduced a setting in the sound options that caters for either the home theater setup or the TV speakers.

These are essentially two different mixes of the game, the home theater version being the one with the most dynamic range and the listening levels in the ranges described above, while the TV speaker setting adds some subtle compression to the overall output, allowing players to hear quieter sounds in a more noisy home environment, and also pushes up some of the overall levels of dialogue.
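As a simplified picture of what the TV speaker setting does to levels, the sketch below implements a static compressor curve with make-up gain. The threshold, ratio and make-up values are hypothetical, chosen only to show the shape of the curve; they are not the settings used in the game, and a real compressor would also need attack and release behaviour.

```python
def tv_mode_level_db(input_db, threshold_db=-20.0, ratio=2.0, makeup_db=3.0):
    """Map an input level in dB to an output level in dB.

    Levels above threshold_db are reduced by `ratio`; make-up gain then
    lifts everything, which raises quiet material relative to loud
    material. All default values are illustrative assumptions.
    """
    if ratio < 1.0:
        raise ValueError("ratio must be at least 1.0")
    if input_db > threshold_db:
        compressed = threshold_db + (input_db - threshold_db) / ratio
    else:
        compressed = input_db
    return compressed + makeup_db
```

With these example settings a quiet sound at -30 dB comes out 3 dB louder while a loud one at -10 dB comes out 2 dB quieter, so the gap between them shrinks from 20 dB to 15 dB.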

In the past we have attempted to do both with one single mix setting, by mixing in our high end mix theater, and then by "tweaking" that same mix while listening through TV speakers; however, this compromised the home theater mix we had spent so much time on.

By allowing two completely different mixes to co-exist and be chosen by the user, we seem to have got around the issue of supporting the two most common listening environments available among our audience. In retrospect I also feel we could have labelled the "home theater" mix as the one to use for "headphone users", as that would be the obvious choice, but being the default setting it should be reasonable to expect most headphone players will end up hearing the higher fidelity version.

Activision's internal AV lab, headed up by Victor Durling, was critical as our "third ear" in helping us to contextualize our game's levels in the wider commercial landscape. Their dedicated audio test lab utilizes Dolby metering via the LM100 and consists of two custom-built surround rooms. We were aiming for levels around that of Rockstar's Red Dead Redemption or GTA IV; however, for an action-horror title, our game felt like it needed to be pushed a little louder to match competitive games in our genre.

Hence we eventually landed somewhere in the region of -19, but as mentioned before, this single number for long-term measurement made little sense to the actual practical mixing of the game from section to section. I strongly believe it is critical to have a third-party listen to the mix, and even though the communication between us and the AV lab was only via email, we got a pretty good picture of what they were hearing and could adjust accordingly.

By following a goal of consistency throughout the project on several major asset threads such as music and voice, we were able to have a great deal of control over how these sounded throughout the game, no matter what the context or the technical delivery method. This was accomplished by thinking about the mix horizontally and vertically from early production.

I think this approach throughout production came about by being conscious from the beginning of the project that we were going to be spending time on a final mix, and we knew we'd be under pressure in those final few weeks, so didn't want to have to address too much of the horizontal minutiae that belonged to pre-mixing in those valuable final days on the project. Scheduling and planning always begins from a potential ship date, and from there we work backwards through our final mix dates, through to our sound alpha dates, and then backwards further to the booking of production resources like voice-over studios.

I believe consistency is an overall approach that can be brought to any mix project, no matter what the genre or the playback context. However, figuring out the best approach is often a complex cultural and contextual negotiation in and of itself.

Given all of our metering and various loudness level numbers, one may think the mix is a dry, cold, technical exercise. However, the question we asked ourselves every day on the mix stage was simply, "Does it sound good?", and we made any necessary adjustments using a combination of common sense and metering. One of the defining measurements of success, in the end, became that if you closed your eyes, you would find it difficult to discern if you were hearing a pre-rendered cutscene, a game engine cutscene, or an in-game moment. Listening back post-release, after having finished work on the game, I believe we achieved this goal exceptionally well with a very small audio team.

Rob Bridgett: Audio Director / Mixer / Cutscene Sound Design

Scott Morgan: Audio Director / Composer / Sound Design

Peter Mielcarski: Audio Programmer

Roman Tomazin: Technical Sound Designer

Rob King: Voice Director
