Postmortem: AI action planning on Assassin's Creed Odyssey and Immortals Fenyx Rising

When Ubisoft's sprawling open-world games became increasingly dynamic, the company had to develop new AI that could keep up. Ubisoft Quebec AI programmer Simon Girard explains in depth.

November 18, 2021

After the release of Assassin’s Creed Syndicate in 2015, as we turned our gaze towards the future, we thought about the direction we wanted AI development to take for our next game. While the AI framework on Assassin’s Creed had served us very well, our games had changed dramatically. Our worlds were a lot more dynamic than they used to be: we now had vehicles and a Meta AI system that simulated AI behavior for many agents over large distances. We were working on a new quest system, and the fight system was being revamped to be less choreographed and more hitbox-based. Unfortunately, the underlying technology driving the AI had not necessarily kept pace, and it was starting to show some limitations.

The core of our AI system, at the time, was based around a handful of large, monolithic state machines. These state machines had become increasingly complex and bloated over the years, due to the sheer number of features being added and the occasional addition of minutes-to-midnight hackish code snippets. Implementing new behaviors was starting to become painful because of frequent side effects on other states, or just the clumsiness inherent to large state machines. We realized, especially towards the end of Assassin’s Creed Syndicate, that our designers and programmers were spending more and more time handling edge cases and working around our system’s complexities and quirks, rather than iterating on game features.

We wanted to improve our underlying systems by moving from a reactive AI to a deliberative system. A deliberative system could spend some CPU cycles to deliberate on what the best course of action should be before making a decision, something that our current reactive AI was not suited to do. Deliberation could take care of some of the complexity that we had to handle by hand, without the explicit intervention of a designer to tell it what to do.

As we looked at alternatives to state machines for handling the complexity of our AI, we found an elegant solution in planning algorithms. By nature, they allow some automated problem solving, which suited our ambitions very well. The designers would write the rules of the AI and it would figure out, on its own, how best to play by those rules. This would require an adjustment, as designers would no longer have the total control they were used to, but we felt that this control wasn’t needed if a system could handle some of the complexity of AI behaviors. We ended up choosing a well-known method called Goal-Oriented Action Planning (GOAP) for our planning algorithm. Without delving too much into the details, GOAP uses a pool of Actions, each defined by Preconditions, Effects and a Cost. The planning algorithm essentially does a graph traversal of the action space to find the least costly sequence of actions that reaches a particular Goal. In other words, planning is akin to pathfinding, only you’re doing it logically rather than in 2D or 3D space.

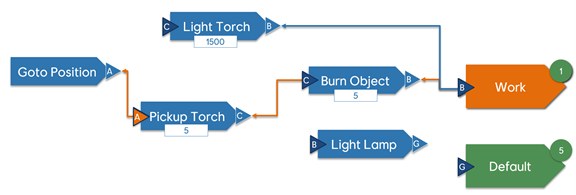

Figure 1 - Simplified representation of GOAP Planning
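To make the pathfinding analogy concrete, here is a minimal sketch of a GOAP-style planner. It is not Ubisoft's implementation: it assumes the world state is a set of boolean facts, that effects only add facts, and that a uniform-cost search (A* with a zero heuristic) is an acceptable stand-in for the real traversal. The action names are hypothetical.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset  # facts that must be true before the action runs
    effects: frozenset        # facts made true by the action (add-only, a simplification)
    cost: float


def plan(start, goal, actions):
    """Return (action names, total cost) of the cheapest plan, or None.

    Uniform-cost search over world states, i.e. A* with a zero heuristic,
    so the first goal state popped is the least costly one.
    """
    start, goal = frozenset(start), frozenset(goal)
    tie = itertools.count()  # tie-breaker so heapq never compares states
    frontier = [(0.0, next(tie), start, [])]
    best = {start: 0.0}
    while frontier:
        cost, _, state, path = heapq.heappop(frontier)
        if goal <= state:
            return [a.name for a in path], cost
        if cost > best[state]:
            continue  # a cheaper route to this state was already found
        for action in actions:
            if action.preconditions <= state:
                nxt = state | action.effects
                new_cost = cost + action.cost
                if new_cost < best.get(nxt, float("inf")):
                    best[nxt] = new_cost
                    heapq.heappush(frontier, (new_cost, next(tie), nxt, path + [action]))
    return None


# Hypothetical action pool for a guard whose goal is "target_down".
ACTIONS = [
    Action("pick_up_shovel", frozenset({"shovel_nearby"}), frozenset({"armed"}), 2),
    Action("draw_sword", frozenset({"has_sword"}), frozenset({"armed"}), 1),
    Action("approach", frozenset(), frozenset({"in_melee_range"}), 3),
    Action("melee_attack", frozenset({"armed", "in_melee_range"}), frozenset({"target_down"}), 1),
]
```

With a sword available, `plan({"has_sword", "shovel_nearby"}, {"target_down"}, ACTIONS)` picks the cheaper `draw_sword` route; without one, the same goal is reached through `pick_up_shovel`. Nobody wrote a "use shovel if no sword" rule: the fallback falls out of the costs.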

In addition to production-driven needs, we also had gameplay-focused ambitions that justified the move to a planner. We wanted, among other things, to improve our NPCs’ connectedness to their environment. We wanted them to be able to use objects around them as weapons, burn or otherwise destroy some gameplay ingredients and have more interesting behaviors that actively used the environment. GOAP seemed to offer us an elegant way to implement those kinds of dynamic, multi-step behaviors.

A few years later, we would use the foundation we had laid with the planner on Assassin’s Creed Odyssey to build the AI for Immortals Fenyx Rising, improving the system further to suit Immortals Fenyx Rising’s particular context and gameplay.

This postmortem offers insight into the technical, gameplay and production considerations of implementing and maintaining a planning algorithm over the course of Assassin’s Creed Odyssey and Immortals Fenyx Rising.

What worked well

1) Modularity!

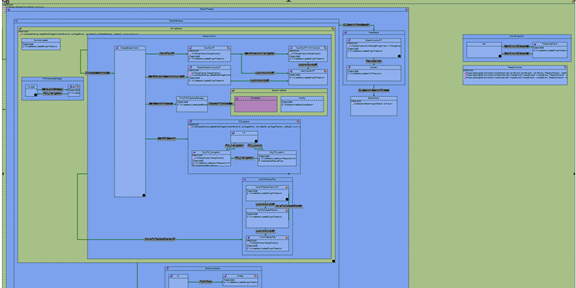

Figure 2 - Partial view of state machine spaghetti

From a production perspective, wading through state machine transition spaghetti as part of everyday life was becoming fairly cumbersome. We had a handful of large state machines, encompassing the broad game states the AI could get into: Fight, Search and Investigation. It was becoming very difficult to tell which enemy archetype could enter which state, and archetype-specific code was getting spread all over the place.

Since we didn’t start the character behaviors on Assassin’s Creed Odyssey from scratch, owing to a long brand legacy, we started the modularization push by simply converting substates of existing state machines to planner actions. For the most part, this was rather straightforward. For instance, the state machine for the Search behavior contained a few states controlling the various interactions with haystacks and hiding spots. It was a given that making planner actions out of those would be a breeze. The different conditions to enter those substates became GOAP Action Preconditions, and we could easily come up with the GOAP Action Effects that would allow linking the different actions together.
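As a rough illustration of that conversion, the sketch below turns two hypothetical Search substates into actions: the old transition guard becomes the precondition, and the substate's outcome becomes the effect that lets the next action chain on. The action and fact names are invented for the example, and `apply_chain` is a hypothetical helper that simply checks whether a given order of actions links up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset  # was: the transition guard into the substate
    effects: frozenset        # was: what the substate accomplished on exit


# Hypothetical Search substates rewritten as planner actions.
MOVE_TO_HAYSTACK = Action("move_to_haystack",
                          frozenset({"haystack_nearby"}),
                          frozenset({"at_haystack"}))
PROBE_HAYSTACK = Action("probe_haystack",
                        frozenset({"at_haystack"}),
                        frozenset({"haystack_checked"}))


def apply_chain(state, chain):
    """Apply actions in order; return the resulting state, or None if a precondition fails."""
    state = frozenset(state)
    for action in chain:
        if not action.preconditions <= state:
            return None  # this action cannot follow the previous ones
        state |= action.effects  # rebinds to a new frozenset with the effects added
    return state
```

The effect `at_haystack` is what links the two actions: probing only becomes available once moving has happened, without an explicit transition between them.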

As time went by, we slowly converted almost the entirety of the old state machines to planner actions. Archetypes that had specific behaviors didn’t crosstalk with archetypes that didn’t, and we could easily prototype new behaviors without having to deal with messy state machines. Both data and code were, as a result, much cleaner.

By the end of Assassin’s Creed Odyssey’s production, the benefits of GOAP’s modularity were well understood by programmers on the team. We saw night-and-day differences in the ease of debugging the AI. While modularity itself didn’t necessarily show directly in the game, it did have a significant impact on our ability to debug and ship the game.

On Immortals Fenyx Rising, the gains from modularity were even more readily apparent. Unlike Assassin’s Creed Odyssey, Immortals Fenyx Rising had the luxury of starting the AI behaviors from scratch. We didn’t have legacy behaviors to support, everything was fresh and new. In addition to benefiting from some improvements to the planner’s expressiveness, designers were able to build the behaviors, the planner data and the enemy archetypes to be as modular as possible. On a project where frequent iteration was one of the prime directives, it was paramount that our designers be able to swap behaviors in and out and juggle them as they wanted, without programmer intervention and, more importantly, without fear of breaking some other archetype entirely, by mistake.
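One way to picture this kind of data-driven modularity is an archetype that lists behavior modules, each contributing a set of actions to the pool. The module names, archetypes and `action_pool` helper below are all hypothetical; the point is only that swapping a module in or out of one archetype cannot change another archetype's pool.

```python
# Hypothetical behavior modules: each maps to the planner actions it contributes.
BEHAVIOR_MODULES = {
    "melee_basic": ["approach", "melee_strike"],
    "ranged": ["draw_bow", "shoot_arrow"],
    "environment": ["uproot_tree", "throw_boulder"],
}

# Hypothetical archetypes, assembled purely from modules in data.
ARCHETYPES = {
    "soldier": ["melee_basic"],
    "archer": ["melee_basic", "ranged"],
    "cyclops": ["melee_basic", "environment"],
}


def action_pool(archetype, archetypes=ARCHETYPES, modules=BEHAVIOR_MODULES):
    """Build the de-duplicated action pool for one archetype, in module order."""
    pool = []
    for module in archetypes[archetype]:
        for action in modules[module]:
            if action not in pool:
                pool.append(action)
    return pool
```

A designer giving the cyclops a ranged option only edits the cyclops entry, e.g. `dict(ARCHETYPES, cyclops=["melee_basic", "environment", "ranged"])`; the soldier's pool is untouched.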

In short, the modular nature of GOAP provided a straightforward, elegant way to represent the AI data. It helped reduce spaghetti code (or data) and enforced a separation of concerns. If the only thing we had achieved was to modularize the existing AI and left everything else the same, it would have been worth our while.

2) Environment interactions

The implementation of the planner was motivated in part by a wish to have our NPCs interact with the world more. This meant making use of torches, looking over walls to search for the player and using everyday objects, such as shovels or brooms, as weapons. We also used the concept in Immortals Fenyx Rising, where a cyclops could rip a tree from the ground and use it as a club or throw it at the player, or pick up large boulders.

The planner offered a very natural means to implement such features. The pool of actions that is sent to the planner can be assembled from multiple sources, and an object in the environment can be one of those sources. We dubbed those interactable objects Smart Objects, as they advertised which actions were available from them.

Before planning, when we assemble the pool of actions currently available to an NPC, we query our smart object manager, with which all loaded smart objects are registered. After a few calculations, like navmesh reachability and distance, we do a pruning pass so as not to explode the search space the planning algorithm has to deal with. On Assassin’s Creed Odyssey, we were actually overly aggressive with the pruning: only one smart object was chosen for each action. The main driver of an action’s cost was distance, so we figured we could help the planner by pre-picking the closest object. No use spending CPU cycles trying out possibilities when you can make that call early.
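The gathering and pruning pass could be sketched as below. This assumes a 2D world, uses a plain `reachable` flag as a stand-in for a real navmesh reachability query, and invents the `SmartObject` and `gather_actions` names; it is an illustration, not the engine's smart object manager. `max_per_action=1` mirrors the Odyssey-style "closest object only" pruning.

```python
import math
from dataclasses import dataclass


@dataclass
class SmartObject:
    name: str
    position: tuple          # (x, y) in metres, a hypothetical 2D simplification
    actions: tuple           # action types this object advertises
    reachable: bool = True   # stand-in for a navmesh reachability test


def gather_actions(npc_pos, registered_objects, max_per_action=1):
    """Collect (action_type, object_name) candidates, keeping the closest N per action type."""
    candidates = {}
    for obj in registered_objects:
        if not obj.reachable:
            continue  # unreachable objects never reach the planner
        for action_type in obj.actions:
            candidates.setdefault(action_type, []).append(obj)
    pool = []
    for action_type, objs in candidates.items():
        objs.sort(key=lambda o: math.dist(npc_pos, o.position))  # pruning by distance
        pool.extend((action_type, o.name) for o in objs[:max_per_action])
    return pool
```

Raising `max_per_action` trades a larger search space for better choices, which is the trade-off the next paragraphs revisit.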

For Immortals Fenyx Rising, we rolled back this optimization, as it could at times be too aggressive and had noticeable effects in the game when the conditions were right. For instance, an NPC that wanted to pick up an object and then use it at another location could behave a bit sketchily, going to the closest object regardless of direction. This could cause some weird behaviors where NPCs would double back. The environment is complex enough that it’s not immediately noticeable, but if you know what to look for, it is a bit jarring.

On Immortals Fenyx Rising, we built our data in a way that made better use of the planning features, and we allowed the planner to consider up to 3 actions of the same type that related to different objects in the world. This means that an NPC that is considering throwing a boulder will consider 3 different boulders, and other parts of the plan can influence which boulder is selected. It also makes for a more ‘correct’ decision when watched from a bird’s-eye view.
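The doubling-back problem can be reproduced with a toy "fetch an object, then use it at a destination" plan. In this sketch, straight-line distance stands in for path cost and `choose_object` is a hypothetical helper: pruning first keeps the N closest objects, then the whole plan's cost (NPC to object, plus object to destination) picks among the survivors.

```python
import math


def choose_object(npc_pos, dest, object_positions, max_candidates):
    """Pick an object for a 'fetch, then use at dest' plan."""
    # Pruning pass: keep only the N objects closest to the NPC.
    candidates = sorted(object_positions.items(),
                        key=lambda item: math.dist(npc_pos, item[1]))[:max_candidates]

    # Planning pass: the plan's cost also includes carrying the object to dest.
    def plan_cost(item):
        _, pos = item
        return math.dist(npc_pos, pos) + math.dist(pos, dest)

    name, _ = min(candidates, key=plan_cost)
    return name
```

With the NPC at `(0, 0)` heading to `(10, 0)`, and boulders at `(-2, 0)`, `(4, 1)` and `(0, 8)`, keeping one candidate picks the boulder behind the NPC (plan cost 14), while keeping three lets the rest of the plan select the one ahead (about 10.2).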

The concept of smart objects also dovetails nicely with modularity. Design for such gameplay ingredients usually develops starting from the ingredient, not the NPC. We’ll look at different props around the world and say “it would be cool if some NPCs could do X with Y”. Rarely do we go “Well this Minotaur absolutely needs to break columns”. That would be a way of doing things that didn’t really favor reuse.

3) Debugging Tools

From the get-go, we put a particular focus on debugging tools. Planning is a general-purpose algorithm, and, in our case, it is dealing with generic data. Actions are assembled by designers, in data, using a handful of reusable Conditions, Effects and Costs that can all be parameterized. Designers do not have access to a debugger. Even to a programmer, the planning loop itself is very generic and the planner’s search space is large. Considering this, stepping through the relatively small number of lines of code of a planning algorithm is not an efficient way to debug. It can be done, but it is a monumental waste of time.

This is an issue that we identified early on. To debug efficiently, we needed to keep track of everything that the planner considered. Consequently, we logged all planning steps. Everything the planner tried made it into the log, along with other stats such as action costs and a running list of open conditions. We could track most of what the planner was doing behind the scenes without resorting to time-consuming breakpoints. As an additional benefit, since the information was logged, there was no chance of accidentally stepping one step too far and missing what you were looking for.
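A planning log of this kind might look like the sketch below: one record per step, holding the action tried, the accumulated cost and the goal facts still open. The `PlanStep` and `PlannerLog` types and the text format are hypothetical, not the actual planner monitor; a planner would call `record` each time it tries an action.

```python
from dataclasses import dataclass, field


@dataclass
class PlanStep:
    step: int
    action: str
    cost_so_far: float
    open_conditions: tuple  # goal facts still unsatisfied after this step


@dataclass
class PlannerLog:
    steps: list = field(default_factory=list)

    def record(self, action, cost_so_far, state, goal):
        open_conditions = tuple(sorted(set(goal) - set(state)))
        self.steps.append(PlanStep(len(self.steps), action, cost_so_far, open_conditions))

    def tried(self, action):
        """Every step where the planner considered `action`, for after-the-fact review."""
        return [s for s in self.steps if s.action == action]

    def dump(self):
        """Plain-text view of the whole search, one line per step."""
        return "\n".join(
            f"#{s.step:03d} {s.action:<16} cost={s.cost_so_far:>5.1f} open={list(s.open_conditions)}"
            for s in self.steps)
```

Because every step is kept, "did the planner ever consider X, and at what cost?" becomes a lookup (`log.tried("approach")`) instead of a breakpoint session.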

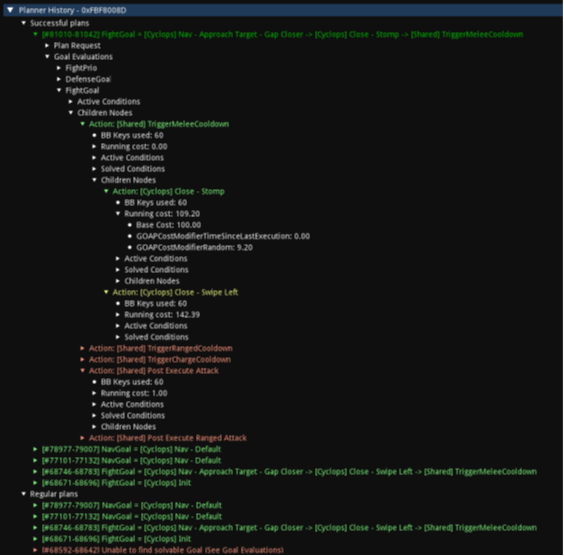

Figure 3 - Planner monitor showing the different evaluated branches

The value of this tool became increasingly apparent as we approached the shipping stages of Assassin’s Creed Odyssey. One key learning from Assassin’s Creed Odyssey was that we spent more time trying to figure out why something didn’t happen, rather than why something did. Using the planner monitor made finding the answer far more convenient than stepping through the code would have.

Building on this success, for Immortals Fenyx Rising, we added more information about why some actions would not be considered. This meant we could know in even finer detail why some plans failed at a particular time. We also drastically improved the display. Assassin’s Creed Odyssey used an HTML dump as a viewing tool, which had a distinctly guerilla flavor. On Immortals Fenyx Rising, the log was browsable and integrated into our in-game debugging tool, making viewing a breeze.
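Recording why an action was not considered can be as simple as noting the first precondition that failed. The sketch assumes boolean facts and short-circuit evaluation; `Condition`, `check_action` and the condition names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    name: str
    fact: str
    expected: bool = True  # False means "fact must NOT be in the state"

    def holds(self, state):
        return (self.fact in state) == self.expected


def check_action(action_name, conditions, state, rejections):
    """Return True if all conditions hold; otherwise log the first failing one."""
    for cond in conditions:
        if not cond.holds(state):
            rejections.append((action_name, cond.name))  # the "why not" answer
            return False
    return True


# Hypothetical preconditions for a boulder throw.
THROW_BOULDER = [
    Condition("has_boulder", "holding_boulder"),
    Condition("target_visible", "player_visible"),
    Condition("not_stunned", "stunned", expected=False),
]
```

After a failed plan, the `rejections` list answers questions like "why didn't the cyclops throw?" directly, e.g. `("throw_boulder", "has_boulder")`.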

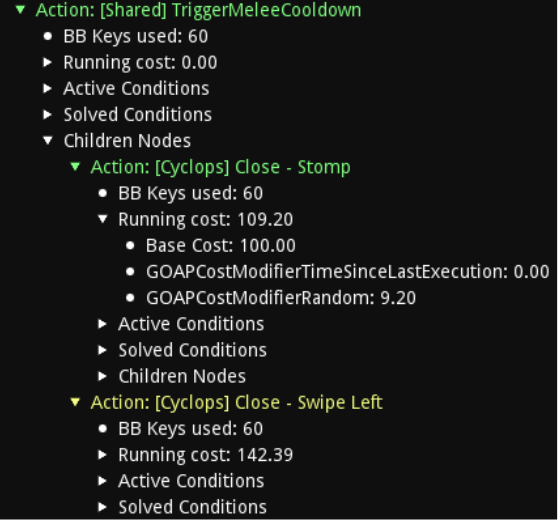

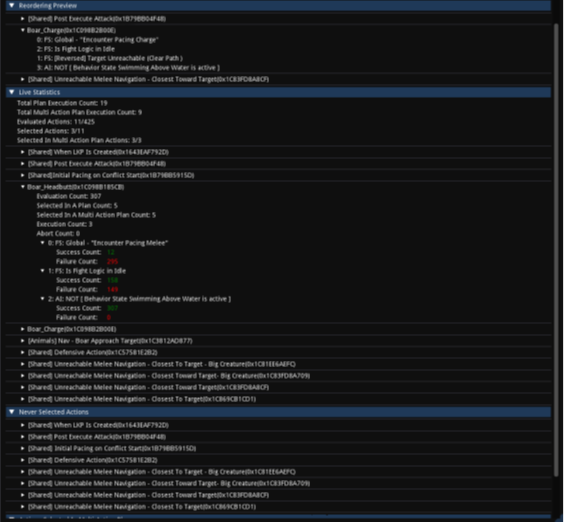

Figure 4 - Detailed view of logged information

Another tool that was invaluable was GOAP Statistics. It logs evaluation and usage statistics for all the actions in the game. It was created late in Assassin’s Creed Odyssey’s production, in order to track whether we had data that was unused or never triggered.

It also saw use as a performance tool. Unsurprisingly, with such a large search space and such a large number of conditions being evaluated, we were spending a significant amount of CPU time inside condition evaluation code. Since our actions were built in data by designers and iterated on frequently, it would have been impractical (and opposed to our entire philosophy for using a planner) to ask designers to take performance into account when building the actions. We felt a more practical approach was to automate this optimization process, and so we did.

GOAP Statistics is, among other things, a special execution mode that tracks all action condition evaluations during planning. It will log how many times they’ve succeeded or failed. Then, it can automatically re-order the conditions in the most CPU-friendly order, for all actions. And it’s not theoretical, either. We use empirical game data, harvested from our testers playing the game in order to do this. While it is possible that a particular area of the game might have been less tested, the coverage typically hasn’t been an issue.
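The article does not say which ordering rule GOAP Statistics used, so the sketch below swaps in a standard one for AND-ed, short-circuiting checks: sort by evaluation cost divided by failure rate, ascending, which minimizes expected cost when conditions are independent. The per-condition cost figure is an assumption (only success and failure counts are mentioned above), and `ConditionStats` and `reorder` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ConditionStats:
    name: str
    evaluations: int = 0
    failures: int = 0
    avg_cost_us: float = 1.0  # assumed: measured evaluation time in microseconds

    @property
    def failure_rate(self):
        return self.failures / self.evaluations if self.evaluations else 0.0


def reorder(conditions):
    """Order conditions so cheap, frequently failing checks run first.

    With short-circuit AND, sorting by cost / failure_rate (ascending) minimizes
    the expected evaluation cost if conditions are independent. Conditions that
    never failed in the harvested data go last.
    """
    def key(c):
        rate = c.failure_rate
        return c.avg_cost_us / rate if rate > 0 else float("inf")
    return sorted(conditions, key=key)
```

For example, a cheap visibility check that fails 80% of the time moves ahead of an expensive path query that fails only half the time, and a condition that never failed during playtests sinks to the end.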

Once again, Immortals Fenyx Rising saw improvements to GOAP Statistics, with a live view mode that allowed us to see statistics on action usage and action coverage as we were testing enemy behaviors.

Figure 5 - GOAP Statistics from Immortals

4) ‘Automated’ problem solving

One problem that had plagued us for a long time, though it is not necessarily obvious during gameplay, is determining whether NPCs are able to reach the player, and how they should behave when they can’t. On Assassin’s Creed, the player character has navigation abilities that vastly outstrip the NPCs’. Likewise, on Immortals Fenyx Rising, our main character has a set of wings and can jump and double jump pretty much anywhere. From a technical point of view, there would be massive challenges in making NPCs that can navigate just like the player character can. Even if we solved the technical hurdles, from a design standpoint, we also want to create enemy archetypes that have limitations or weaknesses, in order to make them more interesting to interact with. Just because we have the technology to make creatures fly doesn’t mean they should all be able to fly. A flying cyclops would be a positively frightening proposition, best left unexplored.

Traditionally, we resorted to hardcoded metrics. We know, from trial and error, that a human-sized NPC might be able to reach the player character if they’re within 1.3m of the edge of the navmesh. That was a gross approximation of the actual reach, but for the most part, the NPCs on Assassin’s Creed are all similarly sized. For cases where the player was standing outside of that reach, most NPCs have some sort of ranged attack. And for the most part, this works fine, though the NPCs sometimes seem to really like their bow and arrow, or give up the chase a tad too easily in favour of ranged attacks. This is mostly because the precision of the approximation, and the complexity of the navmesh, vary from place to place.

For Immortals Fenyx Rising, that was no longer cutting it. While this method worked well enough for human-sized NPCs, it fell apart when dealing with larger creatures. Fighting large mythological creatures was at the core of the game’s experience and early builds showed that the types of attacks featured in Immortals were a lot flashier than the traditional AC direction. In addition, some of our creatures had incredibly large wingspans, which exacerbated the problem. We tried scaling the tolerance as a quick and easy fix, but since that tolerance applied to all attacks, we never got good results across the board.

For the most part, attacking is getting into position and swinging. However, with attacks of wildly different types and amplitude, it was less and less clear what ‘getting into position’ meant. Is it getting as close as possible to the player? Or could the NPC use another position that is potentially quicker to get to, but still within reach? Which of those 2 options is the better one for the current game situation?

That’s where the planner came into play. Since it weighs multiple options, we could provide the planner with multiple movement actions and multiple attacks, and it could then sequence a movement action with an attack and make sure that everything would fit together. With proper cost adjustment, it could also decide whether it was better to navigate longer to get a good position or to get into position quicker. By looking a couple of steps into the future, the planner allows us to make much sounder decisions and makes building the data easier. All we had to know was the reach of the attack (and a few other options, like charging and whether the attack required straight-line clearance), which is simple information that the designer can provide.
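A stripped-down version of that two-step decision evaluates every (movement, attack) pair together and keeps the cheapest valid one. This is a sketch under simplifying assumptions: straight-line distance stands in for navmesh path length, each attack carries only a designer-supplied `reach` and a base `cost`, and charging and line-of-sight clearance are ignored. `Attack` and `best_move_attack` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Attack:
    name: str
    reach: float   # metres, supplied by the designer
    cost: float    # base cost of performing the attack


def best_move_attack(npc_pos, player_pos, move_spots, attacks, move_cost_per_m=1.0):
    """Return (total_cost, spot, attack_name) for the cheapest valid pair, or None.

    A pair is valid only if the attack reaches the player from the spot the
    movement ends at, so movement and attack are chosen together instead of
    "get as close as possible, then swing".
    """
    best = None
    for spot in move_spots:
        to_player = math.dist(spot, player_pos)
        move_cost = math.dist(npc_pos, spot) * move_cost_per_m
        for attack in attacks:
            if to_player > attack.reach:
                continue  # this attack cannot connect from this spot
            total = move_cost + attack.cost
            if best is None or total < best[0]:
                best = (total, spot, attack.name)
    return best
```

With an NPC at `(0, 0)`, the player at `(10, 0)` and candidate spots at `(9, 0)` and `(5, 0)`, a 6m-reach slam from the nearer spot (cost 8) beats walking all the way in for a 2m sweep (cost 10); make the slam more expensive and the same code prefers the sweep.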

Figure 6 – Example of deliberative decisions taken by the planner. Option A and Option B are both considered, but weighted differently depending on the attack metrics.

We then let the planner decide, rather than asking a designer to come up with arbitrary rules that need to fit in a large number of potentially extremely complex situations. It’s a more elegant way to handle the problem and the planner is in large part responsible for giving us this opportunity.

5) Performance

One of the well-known drawbacks to using algorithms like GOAP is the CPU cost inherent to running it. It was something we were very aware of from the start and a lot of our architectural decisions early on were made with performance in mind, sometimes to the detriment of the planner’s power or expressiveness. While we rolled back some of the more aggressive optimizations for Immortals Fenyx Rising, owing to more experience with the system and first-hand knowledge of our actual performance bottlenecks, it was imperative when first implementing the system that we did not kill our CPU performance. The main driver behind the planner’s performance is its search space, so we took steps to limit it where we could.

Our old state machines were meant to go the way of the dodo, but that didn’t mean we couldn’t learn valuable lessons from them. Even though we were moving to the GOAP paradigm, our AI design still revolved largely around 3 states: Investigation, Search and Fight. As a general principle, actions available in Fight didn’t make much sense in the other 2 states and vice versa. So, instead of sending the planner tens of actions that could not actually be used in a given state, we divided the actions into buckets. Only the bucket associated with the NPC’s current state would be sent for actual planning.
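The bucketing step amounts to a filter applied before planning. The three states come from the text; the action names, `ACTION_BUCKETS` and `actions_for_planning` are hypothetical.

```python
from enum import Enum


class AIState(Enum):
    INVESTIGATION = "investigation"
    SEARCH = "search"
    FIGHT = "fight"


# Hypothetical action names, bucketed by the high-level state they belong to.
ACTION_BUCKETS = {
    AIState.INVESTIGATION: ["walk_to_noise", "look_around"],
    AIState.SEARCH: ["probe_haystack", "check_hiding_spot", "light_torch"],
    AIState.FIGHT: ["approach", "melee_strike", "shoot_arrow", "throw_boulder"],
}


def actions_for_planning(state, available_actions):
    """Send only the current state's bucket (intersected with the NPC's pool) to the planner."""
    bucket = set(ACTION_BUCKETS[state])
    return [a for a in available_actions if a in bucket]
```

Since search cost grows quickly with the number of actions, cutting an NPC's pool down to the few actions relevant to its current state is a cheap, large saving, at the price of never finding plans that would cross state boundaries.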

While it’s easy to see how it limits the planner’s strength, it was a choice that paid off, as we kept our number of actions, and thus CPU performance, under control. Being aggressive on these kinds of pre-computations was key to us being able to put GOAP in a AAA game where so much is already happening.

What didn’t work well

1) Changing the engine as the plane is flying

One thing that had a huge impact on the development of the planner is that we never had the luxury of starting from scratch. The transition from state machines to the planner was expected to take some time, as we had a small AI team during the conception stages. However, other departments were going full steam ahead with various content creation or prototypes, some of which required working NPCs. We could simply not afford to break the AI entirely for any length of time, be it a day or a week. In addition, the overall NPC design for the game was going ahead full tilt and we needed to support new archetypes and new gameplay ingredients, while changing the inner workings of our AI pipeline. Consequently, some of the architectural decisions that we made early on were very much influenced by the particular needs of our production.

As the project picked up steam, we had to put all our energy into keeping up with design demands and debugging the new architecture. Some of the choices that were made early on to ensure our NPCs would always be playable ended up sticking around until the end of the project. This created some code bloat and headaches, especially around system frontiers.

One such example (out of many) is how our planner deals with Meta AI. For those not in the know, Meta AI is a system that was introduced in Assassin’s Creed Origins to simulate AI behavior over great distances at low CPU cost. We never really intended for the planner to drive the Meta AI simulation, as a planner is nothing if not expensive, which runs counter to what the Meta AI is meant to provide. However, we had several instances of infighting between systems. Meta AI would try to push a behavior it had decided on for our NPC, but the NPC was at a high enough LOD and was engaged with the player, which meant the planner was also running and trying to get the NPC to do something else. So Meta AI kept interrupting the plans and vice versa.

Another, slightly more bothersome issue was that, in order to speed up conversion of legacy behaviors and ensure a certain level of functionality, we had decided to create GOAP Actions that represented relatively complex behavior. In short, and to harken back to what went right: we violated the modularity principle. This wasn’t much of an issue at first, but looking back, we ended up creating an AI that wasn’t taking advantage of the modularity and associativity of actions as much as it could. Some of this also showed up in our blackboard, a sort of scratch memory used by the planner. There are some unusually specific entries in the blackboard for Assassin’s Creed Odyssey. Some of the core GOAP code also refers to some highly gameplay-specific concepts. Any game that used the GOAP implementation from Assassin’s Creed Odyssey would find blackboard entries for a priority token system for torches, regardless of whether that game had torches or NPCs, or had even discovered what fire was.

At the end of the day, those aren’t huge problems, and they hardly have an impact on player experience. However, they are a symptom of how our GOAP implementation was birthed and, as we experienced with Immortals Fenyx Rising, being able to build an AI to use the planner, from the ground up, is a lot more straightforward. Throughout Immortals, we occasionally had to proceed with refactors in order to clean some of the leftover code from the early days of Assassin’s Creed Odyssey.

2) Underestimating the importance of design-support features

One thing that caught us off-guard was the scope of features required to support design intentions. We started from the misguided assumption that an NPC should choose the most optimal behavior for the current situation. Our first test case was of an NPC guard with pyromaniac tendencies. The NPC would have several options to burn objects around them and depending on distances, would choose what made the most sense.

Figure 7 - Pyromaniac caught in the act

However, in actual game cases, things aren’t always so clear cut. An AI that always chooses the most optimal option can be great if you’re building a bot that is meant to emulate player behavior, to play, say, chess. However, when building a game where the AI is, for all intents and purposes, a narrative tool, there are other considerations to take into account. In short, the “game” the AI is playing is not the same as what the players are playing. Things like pacing and variety are everyday concerns for AI designers on our games.

Taking the example of variety of behavior, an AI that always chooses the same sword swing might be doing some very smart computations behind the scenes, but to the player, it’s either bugged or stupid, two qualities that are seldom sought after in game AI. At least some variety in behavior is expected for an NPC to attain a sufficiently lifelike quality.

One of our tenets was that a planner should make an informed decision when weighing its options. What we failed to realize was that variety and pacing were also part of this informed decision, even though they are not a consideration at all for a human player. As AI programmers, we had underestimated how important this consideration would be.

Consequently, we found ourselves lacking tools to implement any kind of variety or pacing in our AI behavior. We had an inkling that we could adjust the cost of actions dynamically to cycle through the different possibilities. After all, our planner was already handling dynamic cost computation; variety was just one more variable in the equation. But pressed for time and with the game development well underway, what was the easy way to spice things up? Adding a random factor, of course!

Now, I can hear the distinct sound of furrowing brows. Random, really? And like a lot of applications of random, it didn’t really work, but it worked well enough that we shipped Assassin’s Creed Odyssey with a random component to our action costs as more or less the only variety tool the planner had access to. This was possible due to a quirk in our planner implementation that made it so the costs of actions, despite their dynamic components, were only evaluated once at the start of planning. This meant that the cost, and thus the random component of costs, was stable for the entire planning loop.

Fast-forward a few years and we corrected that quirk at the beginning of Immortals Fenyx Rising. Costs were now recalculated at each planning step, in order to account for planned changes to entity positions and other modifications to the environment. And suddenly, random essentially broke planning, on a theoretical level. If we were recalculating action costs during planning steps, an action used by 2 different planning branches could have a different cost in each. This didn’t necessarily have a noticeable impact on in-game behaviors, but it did mean that the planning algorithm would be inherently unreliable. In other words, the planner couldn’t guarantee that a = a at all stages of planning. You can see how, for a programmer, that is a highly worrying proposition.
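The difference between the two situations fits in a few lines. In this hypothetical sketch, `cache_per_plan=True` mimics the Odyssey quirk (the random term is rolled once and reused for the whole search), while `cache_per_plan=False` re-rolls it on every evaluation, so the same action can be priced differently in two branches of the same search.

```python
import random


def make_cost_fn(base_costs, jitter, rng, cache_per_plan):
    """Return cost(action) for one planning pass, with a random term of up to `jitter`.

    cache_per_plan=True: the random term is drawn once per action and reused,
    so costs are stable for the whole search.
    cache_per_plan=False: every call re-rolls, so a != a across branches.
    """
    cache = {}

    def cost(action):
        if cache_per_plan and action in cache:
            return cache[action]
        value = base_costs[action] + rng.uniform(0.0, jitter)
        cache[action] = value
        return value

    return cost
```

Uniform-cost and A* style searches assume an edge has one cost; with re-rolled costs, the "cheapest" plan depends on which branch happened to draw the lower number.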

We did end up developing tools for variety and pacing during Immortals, owing to our experience on Assassin’s Creed Odyssey. We (almost) removed random entirely, in favor of a system that modifies the cost of each action depending on the last time it was executed. This provided a much more reliable variety tool than random and gave us much, much better results. Since we were building the AI data from the ground up, pacing was built into our plans for Immortals Fenyx Rising: almost all plans that are executed set pacing information as the last action of the plan.
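A recency-based cost could look like the sketch below. The linear decay, the `penalty` and `cooldown` values and the `variety_cost` name are all assumptions; the text only says the cost depends on when the action last ran. Unlike a random term, the result is deterministic for a given `now`, so every planning branch sees the same cost.

```python
def variety_cost(base_cost, now, last_executed, penalty=5.0, cooldown=10.0):
    """Add a penalty that decays linearly to zero `cooldown` seconds after the action last ran.

    `now` and `last_executed` are game times in seconds; last_executed=None
    means the action has not run yet.
    """
    if last_executed is None:
        return base_cost
    elapsed = now - last_executed
    if elapsed >= cooldown:
        return base_cost
    return base_cost + penalty * (1.0 - elapsed / cooldown)
```

If an NPC swung overhead 5 seconds ago, `variety_cost(1.0, 100.0, 95.0)` prices that swing at 3.5 while an unused side swing stays at 1.0, so the planner naturally alternates without any dice roll.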

Why is this under “What went wrong” if we fixed the problems in Immortals Fenyx Rising? Because we tackled the issue of pacing and variety far too late. It should have been on our radar from the start. It’s all nice to think in theoretical terms when it comes to AI, but there are considerations down on the ground that are highly important to the overall success of the AI.

3) One pipeline to rule them all

Assassin’s Creed Odyssey had enhanced brand features and several gameplay innovations on the docket when we started working on the planner: epic battles, naval gameplay and mercenaries that would hunt you across the world.

In a foolhardy attempt at convergence, we tried to fit all the game’s AI under a single umbrella, technologically speaking. We were wary, from past experiences, of having different gameplay pillars being built in silos and so we wanted everything under the same roof, using the planner.

While this sounds like a good idea, the timing just wasn’t there. For instance, naval gameplay needed features from the planner that just weren’t ready, or asked questions that we didn’t have the answer to yet. This all stemmed from the two initiatives starting in parallel.

In short, we ended up putting the cart before the horse. For instance, ships were an interesting planning case, because they had very different movement from regular NPCs. Unlike humans, ships cannot turn on a dime and therefore, we felt that in addition to planning attacks, we also had to plan for movement. Another consideration was that movement doesn’t stop for ships. For human NPCs, we were very much following a pattern of “an action is a movement and an animation”, which didn’t hold true for ships. We ended up running 2 planners on ships: one for movement patterns and the other for attacks. They would be planning independently and communicate via the shared blackboard when necessary. This is a very interesting problem to tackle. Unfortunately, the need to have ship AI came fairly early, as we were still coming to grips with how the planner worked with human NPCs. It was an extremely difficult task, considering the special needs of the naval use case while still building human NPCs, which were vastly more familiar to us.
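The shape of that two-layer setup can be sketched with two deliberately trivial decision functions standing in for the movement and attack planners; they are not GOAP planners, and every name, rule and the range value are hypothetical. What the sketch shows is the coupling: neither layer calls the other, and the only link is a fact the movement layer publishes on the shared blackboard.

```python
CANNON_RANGE_M = 300.0  # hypothetical value


def plan_movement(ship, blackboard):
    """Movement layer: choose a maneuver and publish which broadside faces the target."""
    # Hypothetical rule: positive bearing means the target is to starboard,
    # and circling clockwise keeps it on that side.
    if ship["target_bearing_deg"] >= 0:
        blackboard["facing_side"] = "starboard"
        return "circle_clockwise"
    blackboard["facing_side"] = "port"
    return "circle_counterclockwise"


def plan_attack(ship, blackboard):
    """Attack layer: reads the shared blackboard and never calls the movement layer."""
    side = blackboard.get("facing_side")
    if side is None or ship["target_distance_m"] > CANNON_RANGE_M:
        return "hold_fire"
    return f"fire_{side}_cannons"
```

Until the movement layer has written `facing_side`, the attack layer holds fire; after that, it fires whichever broadside the current maneuver exposes, while the ship keeps moving.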

In the end, we probably should have bitten the bullet and built the naval AI in its own silo, rather than jump through hoops to try to make the tech fit. It would have allowed us much quicker iteration on the naval AI itself, as we wouldn’t have needed to spend as much time laying the architectural foundation and could have used systems that existed from the start. Trying to fit everything under one umbrella, which seemed like a good idea at the time and a way to not recreate mistakes of the past, ended up creating a few unnecessary headaches.

Simon Girard is an AI Programmer, Ubisoft Quebec
