Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs.

Content over Immersion: Using Kinect instead of a VR headset and how it worked better for us

In this post, I will describe a virtual reality teaching simulator that I am working on and why we chose to put a hold on our plans to develop for a VR headset, like the Oculus Rift, and opted to use Microsoft's Kinect instead. Although this example is specifically for a teaching simulation, there are many more applications that could be derived from the techniques and philosophy described here.

My current project is to create a virtual reality simulation that can be used by college students studying to become teachers. The main goal of the simulation is to create an environment where soon-to-be teachers can practice in front of virtual students. The need for this application grew out of the lack of regular opportunities to practice in front of live students as part of their college education.

The sense of immersion that comes from wearing a VR headset is undeniable. The eyes and mind can quickly be fooled into believing they are someplace else. However, this trickery can send the rest of the body's senses into a state of rebellion. Part of this battle between mind and body has been well documented, and the term "VR sickness" has become part of almost every article about the subject. But there is more to the problem than a dizzy headache.

When the mind and body separate, they are no longer working together. This separation may not only make someone nauseated; it might also cripple their ability to genuinely absorb content. For us, this is totally unacceptable. For us, content is, and will forever reign as, king.

The purpose of our simulation is to practice teaching in front of students. In the virtual environment, the students, and how they are behaving, are the content! If we do it right, the students should provide visible clues that require a response from the user. Therefore, the user should be watching the students throughout the simulation. I have read several articles in which people describe a common issue with VR headsets and VR content: getting people to look where you want them to, when you want them to. Getting people to look at timed content is difficult because they might have their head turned and completely miss what you needed them to see.

The Virtual Class

The importance of content made us question how important immersion is. Do our users need to be able to look at the ceiling, the floor, and the wall behind them to feel engaged in our simulation? With a VR headset, it is cool to look around in all directions, but that tends to wear off pretty quickly, especially if there is no relevant content in all areas. For our purposes, it is far more important for our users to focus on the content.

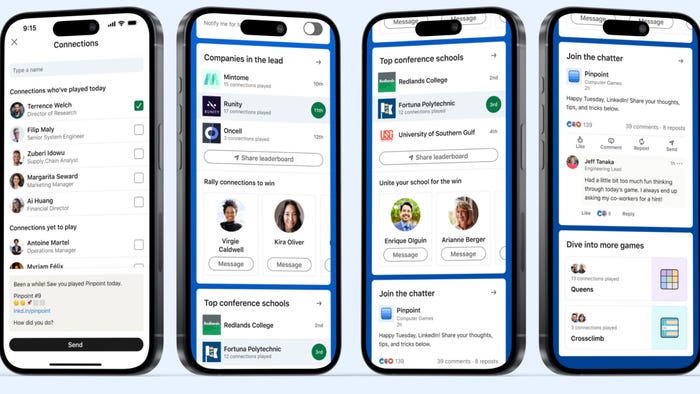

With less focus on immersion and more focus on content, our next challenge was to provide the users with methods of control to interact with the content. The controls needed to be as natural as possible. We want the users of our simulation to act as they would in a real-life classroom. It is not realistic for teachers to believe they can control a classroom with the flick of a joystick. We also wanted the controls to have a low barrier to entry. Past video game experience should not be a prerequisite for using our simulation.

For all these reasons, we decided to give the Kinect for Windows a try. The Kinect, in combination with the Microsoft Speech API, gave us the ability to provide our users with a realistic and natural way of interacting with content, without difficult-to-learn control schemes and without the risk of nauseating motion sickness.

To use our simulation, users stand comfortably in front of a large monitor with a Kinect mounted above it. They are able to move, free from wires and headsets, forward and back and side to side, in order to change their position in, and their perspective of, the virtual classroom. As a user moves closer to the monitor in physical space, they move closer to the virtual students. As they move side to side in the physical space, it is as if they are walking side to side in the virtual classroom. The monitor plays the role of a dynamic window into the virtual environment. While not as immersive as a VR headset, this keeps our users focused on the content while still providing them with a sense of presence in the virtual environment and a natural way to control what they are looking at.

Walkable Virtual Space

Using the Kinect, in combination with an algorithm to proportionally match the virtual walkable space to the user's physical space, we can allow the user to naturally move around the virtual environment. We can also limit where they can go to keep them focused on the content.
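As a rough illustration of that proportional matching, here is a minimal Python sketch. It is not the code from our simulation: the `Rect` type, the `physical_to_virtual` function, and the room dimensions are all hypothetical, and it assumes the tracked position has already been reduced to a floor coordinate in meters (x for side to side, z for distance from the sensor), with the virtual area oriented the same way.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned floor area in meters: x is side to side, z is depth."""
    min_x: float
    max_x: float
    min_z: float
    max_z: float


def clamp(value: float, low: float, high: float) -> float:
    """Keep value inside the range [low, high]."""
    return max(low, min(high, value))


def physical_to_virtual(x: float, z: float, physical: Rect, virtual: Rect) -> tuple[float, float]:
    """Proportionally map a tracked floor position into the virtual walkable area."""
    # Normalize the position to 0..1 inside the physical area. Clamping keeps
    # a user who steps outside that area pinned to the virtual boundary.
    u = clamp((x - physical.min_x) / (physical.max_x - physical.min_x), 0.0, 1.0)
    v = clamp((z - physical.min_z) / (physical.max_z - physical.min_z), 0.0, 1.0)
    # Scale the normalized position up to the virtual walkable rectangle.
    return (
        virtual.min_x + u * (virtual.max_x - virtual.min_x),
        virtual.min_z + v * (virtual.max_z - virtual.min_z),
    )


# Hypothetical sizes: a 2 m x 2 m physical area mapped onto an 8 m x 6 m strip
# at the front of the virtual classroom.
physical_area = Rect(min_x=-1.0, max_x=1.0, min_z=1.0, max_z=3.0)
virtual_area = Rect(min_x=-4.0, max_x=4.0, min_z=0.0, max_z=6.0)

center = physical_to_virtual(0.0, 2.0, physical_area, virtual_area)
outside = physical_to_virtual(2.0, 0.5, physical_area, virtual_area)
```

The clamping step is what limits where the user can go: no matter how far they wander in the real room, the virtual position never leaves the walkable rectangle.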

Using the Kinect to capture natural movement through physical space also has several purposeful applications in our simulation. It can change how many students the teacher can see at any given moment. It can also affect how many students have an unobstructed view of the teacher. In addition to handling line-of-sight issues, we can also measure how close the user is to each of the virtual students. We can use these physical factors to measure how engaged individual students, and the class as a whole, are in the teachings of the user. Students in the back row, with an obscured view of the teacher, are likely to be less engaged than those who can make eye contact. Teachers who continually stand on the left side of the room will likely do so to the detriment of the students located on the right side of the classroom, and so on. These examples of physical movement, used to alter line of sight and proximity, can be used in the practice and measurement of quality teaching.
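To make the proximity and line-of-sight idea concrete, here is a simplified Python sketch. It is an assumption-heavy illustration, not our implementation: it works on a 2D floor plan, treats every student as a circle of a made-up `body_radius`, and counts a student's view as blocked when another student sits on the straight line between them and the teacher. All names and seat positions are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, z) floor position in meters


def distance(a: Point, b: Point) -> float:
    """Straight-line floor distance between two points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the line segment a-b."""
    dx, dz = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dz * dz
    if length_sq == 0.0:
        return distance(p, a)
    # Project p onto the segment and clamp the projection to its end points.
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dz) / length_sq
    t = max(0.0, min(1.0, t))
    return distance(p, (a[0] + t * dx, a[1] + t * dz))


def has_clear_view(seat: Point, teacher: Point, others: List[Point],
                   body_radius: float = 0.25) -> bool:
    """True if no other student stands within body_radius of the sight line."""
    return all(point_segment_distance(o, seat, teacher) > body_radius for o in others)


def classroom_report(teacher: Point, students: Dict[str, Point],
                     body_radius: float = 0.25) -> Dict[str, dict]:
    """Distance to the teacher and line-of-sight status for every student."""
    report = {}
    for name, seat in students.items():
        others = [pos for other, pos in students.items() if other != name]
        report[name] = {
            "distance": distance(seat, teacher),
            "clear_view": has_clear_view(seat, teacher, others, body_radius),
        }
    return report


# Hypothetical seating: Ben sits directly behind Ana.
seats = {"Ana": (0.0, 2.0), "Ben": (0.0, 4.0), "Cy": (3.0, 4.0)}
front_center = classroom_report((0.0, 0.0), seats)
stepped_right = classroom_report((2.0, 0.0), seats)
```

With the teacher at front and center, Ben's view is blocked by Ana. Once the teacher steps two meters to the right, Ben has a clear view again, which is exactly the kind of change a per-student engagement score could respond to.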

Lines of Sight

Obviously, there is much more to teaching than just moving around the classroom. We need our users to be able to interact with the students, not just walk around them. We also need to provide our users with the most natural method to control this interaction, which is to use their voice. Luckily, we could do that with Kinect as well! Using Unity as a bridge between the Kinect and Microsoft's Speech API, we are able to give our users the ability to use their voice as a method of control and interaction.

Talk to an Individual Student

Using speech has many obvious purposeful applications in our simulation. It is, after all, how a teacher would interact with students in real life. Calling on a student by name is far more effective, and far more realistic, than selecting them with a joystick or via a menu. Also, something like the amount of time a teacher spends talking to individual students versus speaking to the class as a whole can be used to measure successful teaching.
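The talk-time measurement could be sketched along these lines in Python. This is only an illustration and makes no calls to the Speech API: it assumes the recognizer has already produced a list of (recognized text, duration in seconds) pairs, and the function names, student names, and phrases are all hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def addressed_student(utterance: str, student_names: List[str]) -> Optional[str]:
    """Return the first student whose name appears as a word in the utterance."""
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    for name in student_names:
        if name.lower() in words:
            return name
    return None


def talk_time_summary(utterances: List[Tuple[str, float]],
                      student_names: List[str]) -> Dict[str, float]:
    """Total seconds spent addressing each student versus the whole class."""
    totals: Dict[str, float] = defaultdict(float)
    for text, seconds in utterances:
        # Speech that names no student is counted as addressed to everyone.
        target = addressed_student(text, student_names) or "whole class"
        totals[target] += seconds
    return dict(totals)


# Hypothetical recognizer output: (recognized text, duration in seconds).
session = [
    ("Good morning everyone", 2.0),
    ("Maria, what do you think?", 3.0),
    ("Maria can you explain that", 1.5),
    ("James please sit down", 2.5),
]
summary = talk_time_summary(session, ["Maria", "James"])
```

Matching on whole words rather than substrings avoids counting a name that merely appears inside a longer word. A real classroom would also need to handle nicknames and students who share a name.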

Every technology choice we have made is focused on content. For us, creating a successful simulation depends on being able to observe and interact with content in a meaningful and natural way.

For more information about this project you can follow my blog at www.gamefullyemployed.com
