Bungie's head of user research takes another look at his decade-plus-old article, which has become both influential and infamous for its suggestion that games can be better when developers take the psychology of players into account.

A lone scientist labors late into the night in his lab, assembling his creation piece by piece, and then releases it to rampage across an unsuspecting world! Muwhahahaha!

No, not Frankenstein. Behavioral Game Design!

When I wrote that article a decade ago, I was a psychology graduate student and amateur game designer who had never worked in the games industry. Since then, the article has run amok, living an almost completely independent existence in the wilds of the internet.

It's been translated into multiple languages and assigned as homework. It's been cited by academics, pilloried by the Huffington Post, and even lampooned by my childhood favorite, Cracked magazine.

[Footnote: This actually makes me the second of Bungie's employees to be called out by Cracked. Their treatment of our security chief was much more complimentary.]

As anniversaries tend to do, the article's 10-year anniversary has spurred a lot of reflection on my part. The industry has changed almost beyond recognition since 2001, and I'd like to take the opportunity to ruminate publicly about where this topic has gone in the past decade.

The biggest change is that it's hard to find a game today that doesn't take its reward structure seriously. At the time of the article, it was a radical idea to say that games contained rewards and that the way those rewards were allotted could affect how people played. Now it's simply a given.

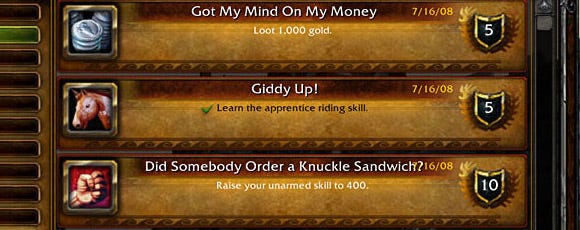

The clearest example of the acceptance of reinforcements in game design is the widespread use of achievements. Achievements are a really interesting case for study because there often isn't any tangible reward past the achievement itself. Some games, such as World of Warcraft, have used achievements to direct players towards alternate modes of play they might find more fun, such as exploration or PvP. In my eyes, helping players find more fun in the games they're already playing is one of the best uses of reinforcements.

The rise of social games on Facebook and elsewhere is another great example of how reinforcements have become a central topic in the games industry. Indeed, the early pioneers of this genre were basically reinforcement schedules with graphics. Their simplicity made it impossible for anyone analyzing them to misunderstand what made them so popular.

They had only three ingredients: well-structured rewards, strong viral communication channels, and high accessibility. Their runaway success has meant that no one will ever discount the power of those factors again. As competition among social games has grown, they've become much more sophisticated, but their contingencies still lie closer to the surface than in most genres.

The successful use of contingencies in games has also led to a reexamination of how they can be applied outside of games, in areas ranging from fitness to safer driving. "Gamification" really has nothing to do with games and everything to do with contingencies. It's a little baffling that it took a fluffy entertainment field like games to make people take reward structures seriously in weightier areas, but it's nice that they are finally doing so.

Beyond behavioral psychology, the whole topic of psychology in games has gone mainstream. There are entire blogs devoted to studying how psychology and games interact, and some studios even keep full-time psychologists on staff.

While the science underlying these techniques is sound and the techniques do work, they are not the Philosopher's Stone of game design. Classic behavioral psychology is a nice, simple model of certain basic mental processes, but it falls down when trying to explain the totality of human behavior. There's a reason why modern psychology consists of more than just behaviorism!

These techniques have been overemphasized on both sides. On the "enthusiast" side, a number of game developers have assumed that fun didn't matter, that they just needed a reward structure. This has generally proven untrue, since they are competing against other games that are both fun and have a good reward structure. Good contingencies help a game, just as good graphics or good music do, but they are not sufficient on their own.

On the critical side, there have been plenty of claims that reinforcement schedules are too powerful, that they compromise the will of the player. Again, reinforcement schedules are useful and effective, but don't represent the total sum of human psychology or the game experience.

Consider the use of loyalty cards at a coffee shop. A loyalty card is a contingency, exactly like the game contingencies covered in the original article. Indeed, it should be more powerful than game contingencies because it provides tangible real-world benefits. And yet I don't think anyone would argue that "buy 10 lattes, get 1 free" is manipulative or too powerful for the average person to resist. (The chemical properties of caffeine notwithstanding.)
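
In behavioral terms, "buy 10, get 1 free" is a fixed-ratio schedule: the reward arrives after a set number of responses. As a minimal sketch, assuming a ten-stamp card (the `LoyaltyCard` class and its fields are hypothetical, for illustration only), the whole contingency fits in a few lines:

```python
from dataclasses import dataclass


@dataclass
class LoyaltyCard:
    """Hypothetical 'buy 10, get 1 free' card, modeled as a fixed-ratio (FR-10) schedule."""
    ratio: int = 10   # purchases required per reward (assumed value)
    stamps: int = 0   # purchases recorded since the last reward

    def purchase(self) -> bool:
        """Record one purchase; return True if this purchase earns a free latte."""
        self.stamps += 1
        if self.stamps == self.ratio:
            self.stamps = 0  # reset the card once the reward is earned
            return True
        return False


card = LoyaltyCard()
# Purchase numbers (1..30) on which a free latte was earned.
rewards = [i for i in range(1, 31) if card.purchase()]
print(rewards)  # [10, 20, 30]
```

The schedule is completely predictable: the customer always knows exactly how far away the next reward is.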

Nearly a century after Watson defined behaviorism, it's still misunderstood. Many people seem to assume that there are no contingencies in a game unless they're explicitly added. This is simply wrong. If you're playing a game, there's something in there that's rewarding for you.

It doesn't matter if the reward is intrinsic (self-expression) or extrinsic (an achievement). If the player doesn't find something rewarding in the game, they don't play it. Any pattern in how that reward is provided is a contingency of the sort described by behavioral psychology. They exist whether or not the designers are aware of them.

The original Behavioral Game Design article was fairly clear about this:

"Every computer game is implicitly asking its players to react in certain ways. Psychology can offer a framework and a vocabulary for understanding what we are already telling our players."

Unfortunately, many people still believe that contingencies are some sort of artificial additive: MSG for video games. This just isn't true. Contingencies are fundamental to games, so much so that I'm not sure it's possible for something to qualify as a "game" without at least one contingency.

Furthermore, when critics of this approach describe games as Skinner Boxes, they completely miss the point of the Skinner Box. The goal of the early behaviorists was not to create an artificial environment where some new form of mind control could happen; it was to create a simplified model of the real world.

Furthermore, when critics of this approach describe games as Skinner Boxes, they completely miss the point of the Skinner Box. The goal of the early behaviorists was not to create an artificial environment where some new form of mind control could happen; it was to create a simplified model of the real world.

It was an attempt to understand learning at a fundamental level by creating the simplest possible operant learning task: "Press lever, get food." Like all scientific experiments, it was an attempt to isolate a phenomenon, to remove distractions and alternative explanations that could confuse the issue being studied.

A Skinner Box is completely unnecessary to create operant conditioning. It is an experimental tool for studying conditioning, nothing more. There wouldn't be any point to "putting players in a Skinner Box"!

The ethics of applying behaviorism to game design are still very much a subject of debate. Behavioral Game Design has been called "creepy", "freaking disturbing", and accused of promoting addiction.

For me, the starting place for this discussion has to be the fact that contingencies always exist and reinforcement learning is always going on. Game designers can be completely ignorant of the psychology involved while still creating mechanics that draw on these principles. People had been making games with random loot drops for years before anyone pointed out that they were creating variable ratio schedules. Contingencies are the essence of games, and those contingencies shape player behavior.
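
To make the term concrete: unlike the fixed count on a coffee-shop loyalty card, a random loot drop pays off after an unpredictable number of responses. The sketch below is a minimal illustration, assuming each kill independently drops a rare item with a fixed probability (the function `loot_drops` and its parameters are hypothetical). Strictly speaking, a constant per-response probability makes this a random-ratio schedule, a common variant of the variable-ratio schedule.

```python
import random


def loot_drops(kills: int, drop_chance: float, seed: int = 0) -> list[int]:
    """Return the kill numbers (1-based) on which a rare item dropped.

    Each kill independently drops the item with probability `drop_chance`,
    so the number of kills between drops varies around a mean of
    1 / drop_chance (a random-ratio form of a variable-ratio schedule).
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    return [k for k in range(1, kills + 1) if rng.random() < drop_chance]


drops = loot_drops(kills=1000, drop_chance=0.05)
# Kills needed for each drop: the gap since the previous drop (or since the start).
gaps = [b - a for a, b in zip([0] + drops, drops)]
print(len(drops), min(gaps), max(gaps))
```

The expected number of drops here is 1000 × 0.05 = 50, but the gaps between them vary widely: the player never knows whether the next kill will pay off, only that it might. No designer has to know this vocabulary for a loot table to behave this way.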

The designer is responsible for the reward system they create and its consequences. In my opinion, this means that ethical game design requires the designer to consider the sort of contingencies they are creating.

Note that this would be even more true if the critics were correct in thinking that these reward structures are a subversive influence. The more powerful these contingencies are, the more seriously we game makers should take our responsibility to design them well.

In my personal view, contingencies in games are ethical if the designer believes the player will have more fun by fulfilling the contingency than they would otherwise. You have to believe in the fundamental entertainment value of the experience before you can ethically reward players for engaging in that experience.

Contingencies are a larger structure, a molecule made up of atomic actions and rewards. Both the actions and the rewards must be genuinely fun for a game contingency to be ethical. Designers often toss achievements semi-randomly into their games, rewarding players for playing in ways that are fundamentally unfun and sabotaging otherwise fine game design. This was actually the entire point of the original article: designers who understand their contingencies will produce games that are more fun.

Finally, I think the most hopeful thing about the current state of this topic is the way that it is recapitulating the early history of psychology. Before behaviorism, psychology was an extremely subjective field, driven primarily by opinion and introspection. The radical behaviorists represented an overreaction to that, refusing to acknowledge any aspect of the mind that couldn't be measured objectively by an outside observer.

The radical position was obviously wrong, but its focus on verifiable data and its profound commitment to Occam's Razor were effective and useful, and both have become permanent parts of modern psychology. Radical behaviorism was overly simplistic, but it laid necessary groundwork for later, more complex approaches such as cognitive psychology.

I believe that something similar is happening in games. Right now, there is an overemphasis on this topic. We talk a lot these days about rewards and investment mechanics, achievements and gamification. In a few years, the industry will move on and the topic will be taken for granted, but we will have permanently shifted towards a more empirical approach to game design, and our players will benefit from that.