Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs.

Introductory bullshit detection for non-technical managers


You are a non-technical manager for a team of programmers or other highly skilled domain experts. You sometimes have some difficulties “speaking the same language” as your team and sometimes that means things don’t go well.

Here is an introductory checklist of questions to help you get the information you need to do your job well. These are things you should expect your team to be able to answer in a way that you can understand, so that you can have the confidence you need to say yes or no to the right things.

You need to communicate that you expect all answers to be in plain English (or whatever language you’re speaking). Stop if there is anything at all you do not understand. Not “I have an idea of how it works…” but actual understanding. If you don’t understand, you’re speaking at the wrong level in the conversation and you’re going to get snowed. Making sure you’re communicating well is your responsibility as manager.

Let’s assume you have a team of programmers. What should you be asking?

Understand the problem

☐ Ask: What problem are you actually trying to solve? No metaphors (“It’s like a car…”). Expect the people on your team to describe the actual, concrete problem they want to solve for the benefit of a specific person or group. Describe it from that person or group’s point of view. Is it an iteration problem? Is it a usability problem? Is it a problem of meeting expectations? These things are all things you can quantify and verify. Unless you have a satisfactory answer to this question, you do not move on with any project, no matter how small it seems.

☐ Ask: What is one concrete example of a problem this will solve? A trap a lot of technical people can fall into is wanting to create something simply because it’s interesting, not because it’s actually useful in any way. If you don’t have a satisfactory answer to even one problem that could be practically solved, then it’s at best considered research. Perhaps you can make room for research and that’s fine. But you need to know that’s what it is. And not put it into production.

☐ Ask: Who specifically will represent the users of this system? You should expect your team to tell you who they are actually creating something for: who will directly benefit from the work, and, at the very least, which real-life individual they will consult on questions and who can verify that it meets their expectations. If your team is working in a vacuum, it’s a sure sign they’re working on the wrong thing.

☐ Ask: What are the platform constraints? What you don’t want to happen: You discover only when you try to ship on a particular platform that it doesn’t work and was never intended to work. Or, that you spent twice as long creating a solution that would work on platforms that will never be used or tested. Regardless of what anyone may try to tell you, you have a finite set of platform constraints. “Everything” is nonsense. Enumerate what is expected to work well. Is it for PC desktop? What are the minimum specs for all the hardware? Is it for the browser? Which browsers? What versions? What hardware will those be running on? Is it custom hardware? What CPUs, GPUs, OSs, other software, performance characteristics of peripherals, networking, etc. Get answers that are as specific and thorough as possible. Create the list because you have to make sure it all gets tested.

☐ Ask: What are the memory constraints? Memory access is the most common real bottleneck in any software system. Understanding the deeper issues is well beyond this simple introduction, but you should at least expect to have an answer to how much memory will be needed. Memory on real hardware is not infinite. What are the limits? Is it a fixed amount or does it depend on what’s happening in the system? What are the real limits of the system, given the various platform limits outlined above? If you can’t get satisfactory answers to these questions, send it back to the drawing board.

☐ Ask: What are the performance constraints? Every system has performance requirements. They’re either explicit or will surprise you when you’re not ready. What are the exact performance requirements for this problem? Would it be acceptable if it took a week to calculate or 20 minutes to open the application? Does it need to happen in 30 seconds or 5 milliseconds or 100 microseconds? There is an acceptable upper bound and you need to have absolute clarity on what that is. If your team can’t answer this now, then whatever they do design will inevitably fail to meet the unarticulated real world constraints at some point.
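To make the performance question concrete, here is a minimal sketch of what an explicit upper bound can look like once your team has agreed on one. The 5 millisecond figure, the function names, and the stand-in operation are all assumptions for illustration, not something from a real project. The point is only that the limit is written down and checked against a measurement.

```python
import time

# Hypothetical budget: the slowest acceptable time for one operation, in seconds.
BUDGET_SECONDS = 0.005  # 5 milliseconds, an example figure only


def measure_seconds(operation, repeats=100):
    """Return the slowest observed run of `operation` over `repeats` runs."""
    worst = 0.0
    for _ in range(repeats):
        start = time.perf_counter()
        operation()
        elapsed = time.perf_counter() - start
        worst = max(worst, elapsed)
    return worst


def within_budget(measured_seconds, budget_seconds=BUDGET_SECONDS):
    """True if the measured time does not exceed the agreed upper bound."""
    return measured_seconds <= budget_seconds


def example_operation():
    # Stand-in for the real work being measured (hypothetical).
    sum(range(1000))


if __name__ == "__main__":
    worst = measure_seconds(example_operation)
    status = "OK" if within_budget(worst) else "OVER BUDGET"
    print(f"worst case: {worst * 1000:.3f} ms ({status})")
```

You don’t need to read the code to use the result. You only need to know that a number like this exists, who agreed to it, and whether the measurement is on the right side of it on each platform from your list.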

There are also some phrases you should be aware of that are red flags and generally very good signals of bullshittery. When someone on your team says…

“I’m making a generic version of…”

It means: I don’t understand the constraints of the actual problem, so I’m going to design an even bigger problem that we have no way of verifying the efficacy of.

“I’m creating a framework to…”

It means: I’m not interested in solving the actual problem, so I’m going to create something else so that the person that actually will solve the problem has to also fix the problems in my stuff on top of that.

“It’s platform independent.”

It means: I literally have not spent two seconds thinking about what platforms this will obviously not work for.

“I’m adding this to make sure it’s future proof.”

It means: I believe in fairies.

“I really need to refactor this bit…”

When you hear “refactoring” it’s a bit more of a yellow flag. Technical debt is a real thing that needs to be addressed and that you should be aware of. I recommend reading Paying Down Your Technical Debt to get a basic understanding of the concept.

However, it can also be just an excuse to change things to some conception of “better” for no real benefit. Whatever better means to that programmer today, you can be confident that they’ll think it’s shit in a couple of years as they gain more experience and skill. That’s totally fine, but you can’t afford to get caught up in a loop of always retrofitting everything to “better” every time the definition of better changes. Unless you’re Google, in which case you keep doing that until some other team solves the actual problem and your project gets deprecated.

Value

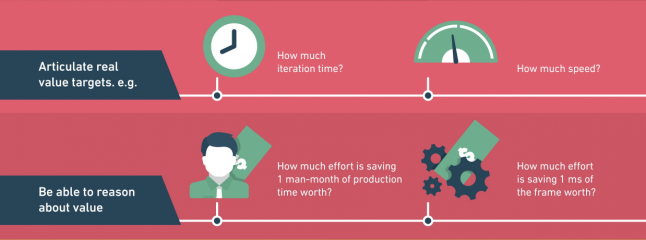

As a non-technical manager, once you understand the problem, you have to articulate the answer to the question of value.

☐ Answer: How much is this worth solving? Is solving this problem or creating this system, based on your understanding of the problem, worth two weeks of development? Two years? Twenty years? At what point does developing it cost more than the value you could possibly gain? This is your actual budget. You’re not asking how long it will take, you’re saying how much is worth spending. If you cross that line, you stop development.

Beyond the problem at hand, you should also ask about what additional value could be created that may not be obvious to you:

☐ Ask: What specific things will we be able to do that we can’t do now?

☐ Ask: What specific things will be cheaper than they are now?

☐ Ask: What specific things will be higher quality for the end user than they are now?

Cost

As a non-technical manager, you still need to be able to reason about the actual costs of development. Which includes opportunity costs. You are relentlessly asking yourself and your team: Is this the most valuable thing we can be doing?

☐ Ask: What prior art solves a similar problem? Get to the heart of the build or buy question right away. If no one has ever solved this specific problem before, ask what parts have been solved. Is an 80% solution good enough for your needs? Is this work really where your team can add unique value?

☐ Ask: What evidence can you show that this will solve the problem? A lot of development is just guessing. You need to see some evidence those guesses are founded in some reality. You should expect your team to provide a simulation or prototype or fake (but statistically relevant) data which can demonstrate the solution is at least plausible before you commit.

☐ Ask: What connects to this? What systems will depend on this? What systems will this depend on? Enumerate all the dependencies. Other systems, libraries, users, protocols, everything. Even if you don’t understand what each thing does specifically, you can understand the complexity of the network of connections. Things with a more complex network of dependencies will be more costly to develop and maintain.

☐ Ask: What’s Plan B if this doesn’t work? A sure danger sign that someone is way too attached to a specific solution (and at risk of ignoring the actual problem) is an inability to articulate any alternatives. Ask: What are you going to do when we’re up against a deadline, this solution isn’t working as expected, everything is broken, and we still have to ship? Yeah… Get an answer to that, then do that first. Then you can talk about how you can make it better.

☐ Ask: What are you not doing instead? Real life is all triage and trade-offs. You need to understand what’s not happening so you can communicate that to interested parties. And so you can make sure the right trade-offs are being made in this case.
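The dependency question above (“What connects to this?”) can be turned into a simple count, even if you don’t know what each piece does. The sketch below assumes a hand-written map of which systems depend on which. Every system name in it is hypothetical, and the count covers direct connections only.

```python
# Hypothetical dependency map: each system lists what it directly depends on.
DEPENDS_ON = {
    "save_system": ["file_io", "serialization"],
    "serialization": ["file_io"],
    "leaderboard": ["network", "serialization"],
    "file_io": [],
    "network": [],
}


def dependents_of(target, depends_on):
    """Systems that list `target` as a direct dependency."""
    return sorted(name for name, deps in depends_on.items() if target in deps)


def connection_count(target, depends_on):
    """Direct connections: what `target` uses plus what uses `target`."""
    uses = len(depends_on.get(target, []))
    used_by = len(dependents_of(target, depends_on))
    return uses + used_by


if __name__ == "__main__":
    for name in DEPENDS_ON:
        print(name, connection_count(name, DEPENDS_ON))
```

A system with a high count is one where a change is more likely to ripple outward, which is a reasonable prompt to ask harder questions about its cost.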

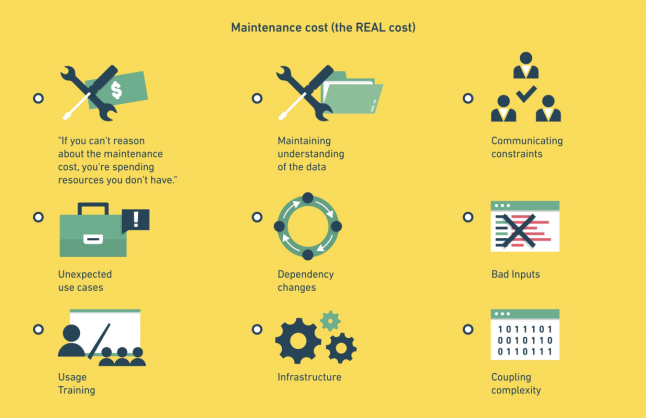

Maintenance cost: The overwhelming cost of developing any solution is almost always maintenance. If everything goes well, the initial development cost is noise compared to that.

☐ Ask: How long will the system survive? No system lasts forever. When will this system be scheduled for replacement? To put a finer point on it: During what period will you be making no changes to the system except for critical bug fixes?

☐ Ask: What are the prerequisites for using the solution? What must continue to be true? What do users need to know? What does the data need to look like? If you’re not convinced your team knows the requirements then they will be ill-prepared to handle it when those requirements inevitably change.

Confidence

As a non-technical manager, you also need to have a process for developing confidence in the progress of a system without digging into the internal details.

☐ Ask: How can someone else verify it works as expected? While the author of the system may have a pretty good idea of whether or not the system works as expected, there will be blind spots. You need some kind of QA process which can validate that things work and can find those unanticipated areas which weren’t obvious to the developer.

☐ Ask: Can you demonstrate a failure? Your team will be showing you progress over time, perhaps giving demos of workflows or playthroughs of how things are supposed to work. You can generally assume that works. What you really want to see is what happens when you go off script. Most of the complexity is in handling those off-script behaviors. If that’s not being handled well, it’s a real problem that you need to address immediately and it’s definitely not “nearly done,” whatever they might think.

The 80/50 Rule

If you’re not 80% done by the time you’ve used 50% of your resources, you are behind. 80% done means you can ship it right now. You may not like it. It may have some rough edges. But it actually works and handles off-script input in an acceptable way. Use this rule rigorously to verify your team is where they expect to be. You don’t need to know the details of the system to know that if it’s not actually usable at this point, it’s almost certain that you won’t ship it on time. When something doesn’t pass this test, it’s time to evaluate what needs to change. Does this project need to stop? Do other projects need to move? “I can make up the time” is not a realistic response.
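The rule is simple enough to write down as a check. This is a minimal sketch, assuming you track “done” and “resources used” as fractions between 0 and 1. The function name is made up for illustration, and treating a project that has not yet reached the 50% checkpoint as “not behind” is my assumption, since the rule only speaks about the checkpoint itself.

```python
def is_behind(fraction_done, fraction_resources_used):
    """Apply the 80/50 rule.

    Once at least 50% of the resources are used, the project is behind
    unless it is at least 80% done (meaning shippable right now).
    Before the 50% checkpoint the rule makes no judgment, so this
    sketch returns False (an assumption, not part of the rule).
    """
    if fraction_resources_used < 0.5:
        return False
    return fraction_done < 0.8


if __name__ == "__main__":
    # Half the budget spent, 60% done: behind.
    print(is_behind(0.6, 0.5))
    # Half the budget spent, shippable today: on track.
    print(is_behind(0.8, 0.5))
```

The hard part is not the arithmetic. It is being honest about the first number: 80% means it ships today, not that 80% of the tasks are ticked off.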

What you’re not asking…

By contrast, there are two specific questions that you are not asking. You should be confident that your experts can solve the problem you’ve agreed to, given the constraints you’ve discussed (including costs). You should be confident it’s worth solving. But you’re wasting everyone’s time if you’re trying to break down tasks you don’t understand.

⊘ Don’t ask: What are the specific tasks?

⊘ Don’t ask: How long will this task take?

None of the above requires you to understand what your team is doing as well as they do or how they are doing it, technically. But having a rigorous process for understanding why they are doing it, the value, the costs, and evaluating your own confidence level will help you ensure that your team is, in fact, working on the right things.
